Peer Reviewed

Toxic politics and TikTok engagement in the 2024 U.S. election

What kinds of political content thrive on TikTok during an election year? Our analysis of 51,680 political videos from the 2024 U.S. presidential cycle reveals that toxic and partisan content consistently attracts more user engagement—despite ongoing moderation efforts. Posts about immigration and election fraud, in particular, draw high levels of toxicity and attention. While Republican-leaning videos tend to reach more viewers, Democratic-leaning ones generate more active interactions like comments and shares. As TikTok becomes an important news source for many young voters, these patterns raise questions about how algorithmic curation might amplify divisive narratives and reshape political discourse.

Research Questions

- How does the political content of TikTok videos affect engagement levels? More specifically, how do the number of videos posted, viewed, and interacted with vary across the leanings of political content?

- How does user interaction with political TikTok videos differ across various content characteristics, such as the presence of toxic content and other features?

- How common is toxic content across different political topics, and how does toxic content in these topics relate to user engagement?

- What patterns emerge in user engagement with toxic content during major political events?

Essay Summary

- This study offers one of the first empirical examinations of how partisanship, political toxicity, and topical focus—such as immigration, racism, and election fraud—shaped user engagement with TikTok videos during the 2024 U.S. presidential election.

- The analysis was drawn from 51,680 political videos on TikTok, using models adjusted for feed ranking, user behavior (author, music, posting time), and platform engagement metrics.

- The majority of videos analyzed (77%) were explicitly partisan and were associated with approximately twice the engagement of nonpartisan content. Republican-leaning videos received more views, while Democratic-leaning ones showed more interactions—measured by total likes, comments, and shares.

- Toxic videos were associated with 2.3% more interactions. Partisan content also tended to show higher engagement, with Democratic-leaning toxic videos linked to even higher interactions.

- Racism, antisemitism, and election fraud were among the most toxic topics, with toxicity defined as rude or disrespectful language. Toxic videos on elections (+1.3%) and immigration (+3.5%) received higher engagement.

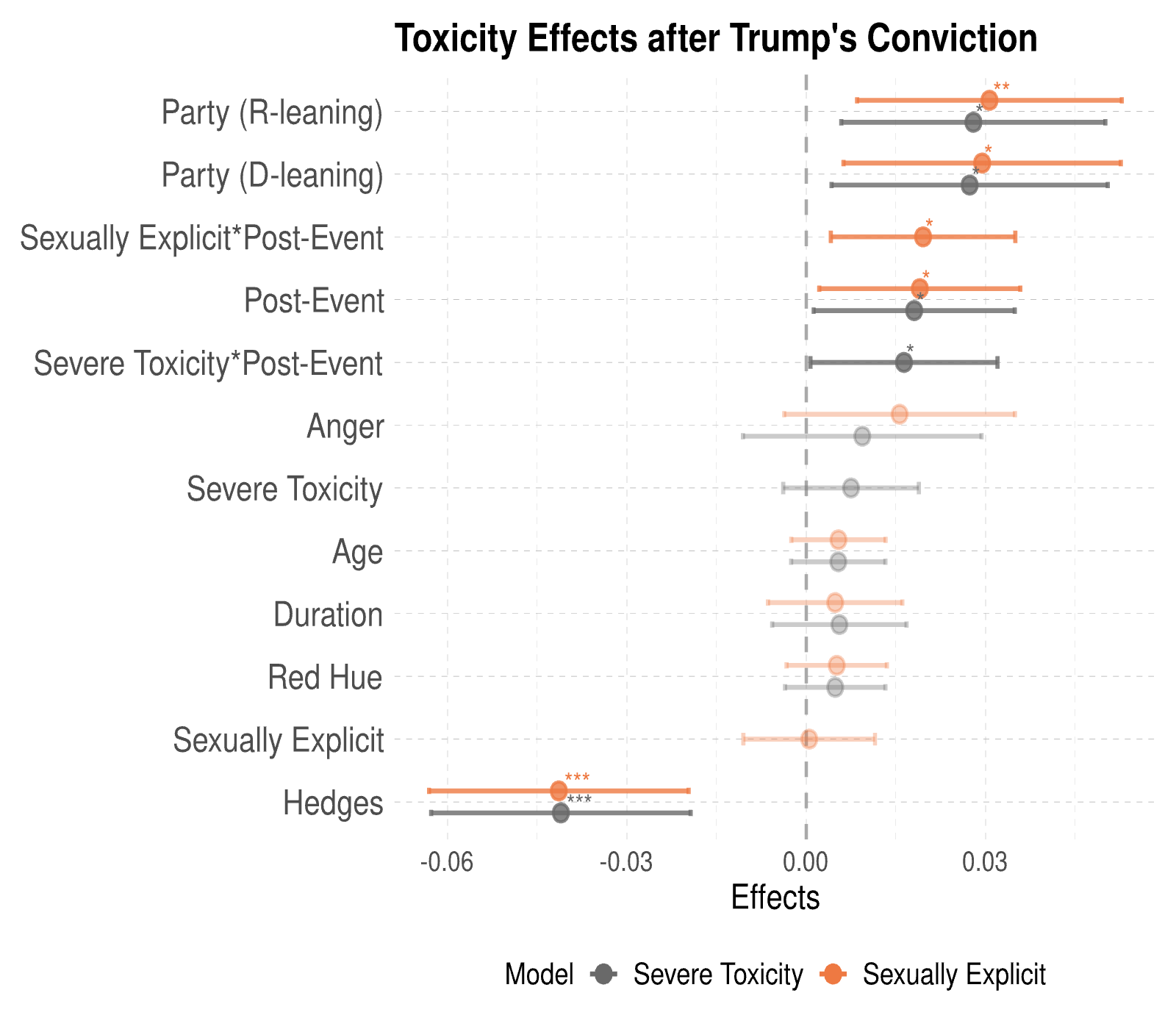

- Toxicity and engagement levels changed after major political events. Following Trump’s conviction, videos with severe toxicity and sexual attacks saw an approximate 2% surge in interactions.

- Captions alone were weak predictors of partisanship and toxicity, but transcripts of the audio from the videos improved agreement with manual labels by 68.2% and revealed higher toxicity in 56.2% more cases. These results highlight the limitations of surface-level text features and the need for multimodal analysis in political content detection.

Implications

This study examines how political toxicity (such as insults, threats, and harassment) manifests and gains engagement on TikTok, a recommendation-driven platform especially popular among users under 30 (Leppert & Matsa, 2024; TikTok, 2025e). While toxicity is often treated separately from misinformation and disinformation, recent literature shows that these forms of harmful content frequently overlap, particularly in polarized or conflict settings (Mosleh et al., 2024; Wardle, 2024). False or misleading narratives often co-occur with toxic or identity-targeted language (Ferrara et al., 2020; Marwick & Lewis, 2017). Many topics in our analysis, for example, racism, immigration, and election fraud, are frequently linked to misinformation campaigns, particularly during elections or crises (Donovan & Boyd, 2021; Guess et al., 2018; Neidhardt & Butcher, 2022). While misinformation and disinformation can intensify hostility, rising toxicity may signal deeper engagement with conspiratorial or extremist narratives (Bennett & Livingston, 2018; Meleagrou-Hitchens & Kaderbhai, 2017; Wardle & Derakhshan, 2017). Our findings reveal that engagement with political toxicity increases during politically sensitive events, raising concerns about how algorithmic systems may amplify such content and echoing similar concerns raised in recent work on platform visibility dynamics (Biswas, Lin, et al., 2025). This study contributes to ongoing discussions on platform governance and informs strategies for responsibly addressing harmful political content.

We focused on nuanced political content—ranging from broadly political discourse to explicitly partisan posts—and how user interaction varies based on both toxicity and partisan alignment of the content. TikTok’s algorithmically curated feed presents a unique context in which content visibility is shaped not just by popularity but by engagement-driven ranking systems. Thus, what users encounter is filtered through algorithmic choices, not solely their preferences or followings. We also focused on user engagement with this political content, not merely passive exposure (views) but also active interactions such as likes, comments, and shares—forms of interaction that help us understand what types of political content resonate with users. Our analysis shows that toxic content draws higher engagement when tied to high-profile issues like labor rights, socio-cultural controversies, or major political events. Moreover, politically aligned content with toxic framing tends to receive more interaction than non-partisan toxic content, indicating patterns of differential responsiveness likely influenced by algorithmic sorting.

This raises important questions about the platform’s role in amplifying divisive content: moderation practices and content delivery algorithms are inseparable, and accountability for one cannot be meaningfully separated from the other. Platform accountability—the responsibility to explain, justify, and refine how their systems govern content exposure—must address not only enforcement (what gets removed) but also amplification (what gets promoted), particularly when these systems mediate access to political expression.

While TikTok has stated its commitment to balancing freedom of expression and community safety, its moderation policies and enforcement processes remain opaque (TikTok, 2024; TikTok, 2025c; TikTok, 2025d). Although the platform uses a combination of human review and machine learning for moderation, there is limited public documentation on how these systems function, particularly in the political domain. The observed association between toxicity and partisan content engagement suggests that moderation processes may not be uniformly sensitive to context, though we caution against overgeneralizing these effects. Rather than implying moderation failure, these patterns point to the complexity of content governance in politically charged environments.

Moreover, TikTok’s current data-sharing infrastructure, particularly its Research API (TikTok, 2025a), presents significant barriers to external auditing. The platform restricts access to historical content and disallows targeted user queries, limiting researchers’ ability to trace moderation decisions over time or across demographics and making it difficult to assess whether moderation practices are equitable or effective. Addressing this would require not only greater data access but also disaggregated transparency reporting, including enforcement actions by topic, timeframe, and moderation rationale. Procedural clarity and the opportunity to appeal can help ensure that moderation does not feel arbitrary or politicized.

We found that signals from full video transcripts were more predictive of toxicity and political alignment than simply using captions or other content descriptors (e.g., hashtags). This is particularly relevant for political TikToks, which often rely on verbal arguments delivered in visually minimal formats. While TikTok has stated that it employs multimodal moderation techniques—including audio and visual processing (TikTok, 2025b)—public documentation is limited. Given the rhetorical characteristics of political videos, we argue that moderation strategies should be tailored to the dominant communicative modality. For political content, transcript-based analysis may yield more reliable signals than visual classifiers or keyword filters.

These accountability mechanisms should be embedded not just in daily moderation operations but also in the platform’s crisis response protocols. Our study shows that engagement with toxic content spikes around political flashpoints, such as Trump’s conviction, mirroring well-documented patterns in crisis-driven disinformation surges (Pierri et al., 2023; Starbird, 2017). These moments of heightened uncertainty and collective attention are precisely when platforms are most vulnerable to coordinated influence efforts and viral toxicity. We therefore echo calls for event-triggered moderation protocols (Goldstein et al., 2023), enabling platforms to promptly curb the spread of harmful political content during sensitive periods such as elections, protests, or geopolitical conflict. Platforms have implemented such measures in the past (for example, TikTok’s removal of Capitol riot-related hashtags, or Facebook’s pause on political ads during election periods; Paul, 2020; Perez, 2021), but these interventions are often ad hoc. These mechanisms should be designed to prevent overreach while enabling human oversight and third-party auditability (Koshiyama et al., 2024).

If platforms like TikTok continue to supply toxic and partisan content—particularly around charged sociopolitical issues—there is a risk of normalizing incivility and entrenching polarization. This is especially concerning given the platform’s influence over users under 30 (Karimi & Fox, 2023), many of whom rely on it as a source of political news (McClain, 2023; Siegel-Stechler et al., 2025). These patterns also create challenges for content creators who produce nuanced commentary, as they may struggle to compete with more provocative content optimized for engagement. Greater platform transparency (Balasubramaniam et al., 2022; Diab, 2024; Felzmann et al., 2020) could empower educators, researchers, and civil organizations to use legal yet norm-challenging content as tools for media literacy and develop critical awareness of how algorithmic systems shape political understanding.

Findings

Finding 1: Partisan videos garner higher engagement.

Figure 1A shows the dominance of partisan over non-partisan videos on TikTok in the overall share of posts, views, and interactions. A Mann-Whitney U test (a non-parametric statistical test of whether two groups differ in their distribution, used as an alternative to the t-test when data do not meet the assumptions of normality) showed that partisan videos receive significantly more views (U = 144089417.0, r = 0.08, 95% CI = 0.07, 0.09, p < .001) and interactions (U = 140706446.5, r = 0.10, 95% CI = 0.08, 0.11, p < .001) than non-partisan ones.
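A minimal sketch of how a Mann-Whitney U statistic and an accompanying effect size can be computed is shown here. It uses only the Python standard library; the function names are hypothetical, the sample values are made up, and the rank-biserial correlation is an assumed choice, since the text does not state which effect size r denotes.

```python
def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Return (U for group_a, rank-biserial correlation).

    The rank-biserial correlation is an assumed effect size for
    illustration; it ranges from -1 to 1.
    """
    n_a, n_b = len(group_a), len(group_b)
    ranks = average_ranks(list(group_a) + list(group_b))
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    r = 2 * u_a / (n_a * n_b) - 1
    return u_a, r


# Toy view counts (illustrative only, not from the dataset).
partisan_views = [120, 450, 300, 800]
nonpartisan_views = [100, 200, 150]
u_stat, effect = mann_whitney_u(partisan_views, nonpartisan_views)
```

A p-value would additionally require the null distribution of U (exact or via a normal approximation), which this sketch leaves out.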

Figure 1B presents total posts, median views, and interactions per post across political leanings. Partisan videos receive nearly 2.2 times more median views and interactions compared to non-partisan videos. Republican-leaning videos receive slightly more views than Democratic-leaning content on average (MedRepub = 4,428, MedDem = 4,359); however, Democratic-leaning videos have higher interactions per post (MedRepub = 664, MedDem = 739). This suggests that while Republican content reaches a wider audience, Democratic content may foster more interaction and discussion.

Finding 2: Toxicity is linked to higher interaction; partisan toxicity has a stronger association than non-partisan toxicity.

To examine whether toxic language drives user engagement, we used linear mixed-effects regression models that account for platform-driven exposure biases. This type of regression model accounts for both fixed effects (variables of interest like toxicity or topic) and random effects (unobserved variations across clusters such as users or videos), which allows for more accurate estimates when data is grouped or hierarchical. Adjusting for these sources of bias helps reduce confounding from factors that influence content visibility and engagement. Specifically, we included random effects for post author, featured music, and posting time to control for algorithmic amplification and habitual engagement patterns (e.g., highly followed users or trending sounds).
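A model of this kind can be sketched as follows. The notation is illustrative and several details are assumptions rather than the specification used in this study (see Appendix F for that): the outcome is assumed to be log-transformed interactions, x_i stands for the remaining fixed-effect covariates, and a(i), m(i), and t(i) index the author, music, and posting day of video i.

```latex
\begin{aligned}
\log(1 + y_i) &= \beta_0 + \beta_1\,\mathrm{toxic}_i + \boldsymbol{\beta}^{\top}\mathbf{x}_i
                 + u_{a(i)} + v_{m(i)} + w_{t(i)} + \varepsilon_i, \\
u_a &\sim \mathcal{N}(0, \sigma_u^2), \quad
v_m \sim \mathcal{N}(0, \sigma_v^2), \quad
w_t \sim \mathcal{N}(0, \sigma_w^2), \quad
\varepsilon_i \sim \mathcal{N}(0, \sigma^2), \\
\%\,\Delta y &= 100\,\bigl(e^{b} - 1\bigr) \approx 100\,b \quad \text{for small } b.
\end{aligned}
```

Under the assumed log outcome, the last line is what lets a small coefficient be read as an approximate percentage change in interactions.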

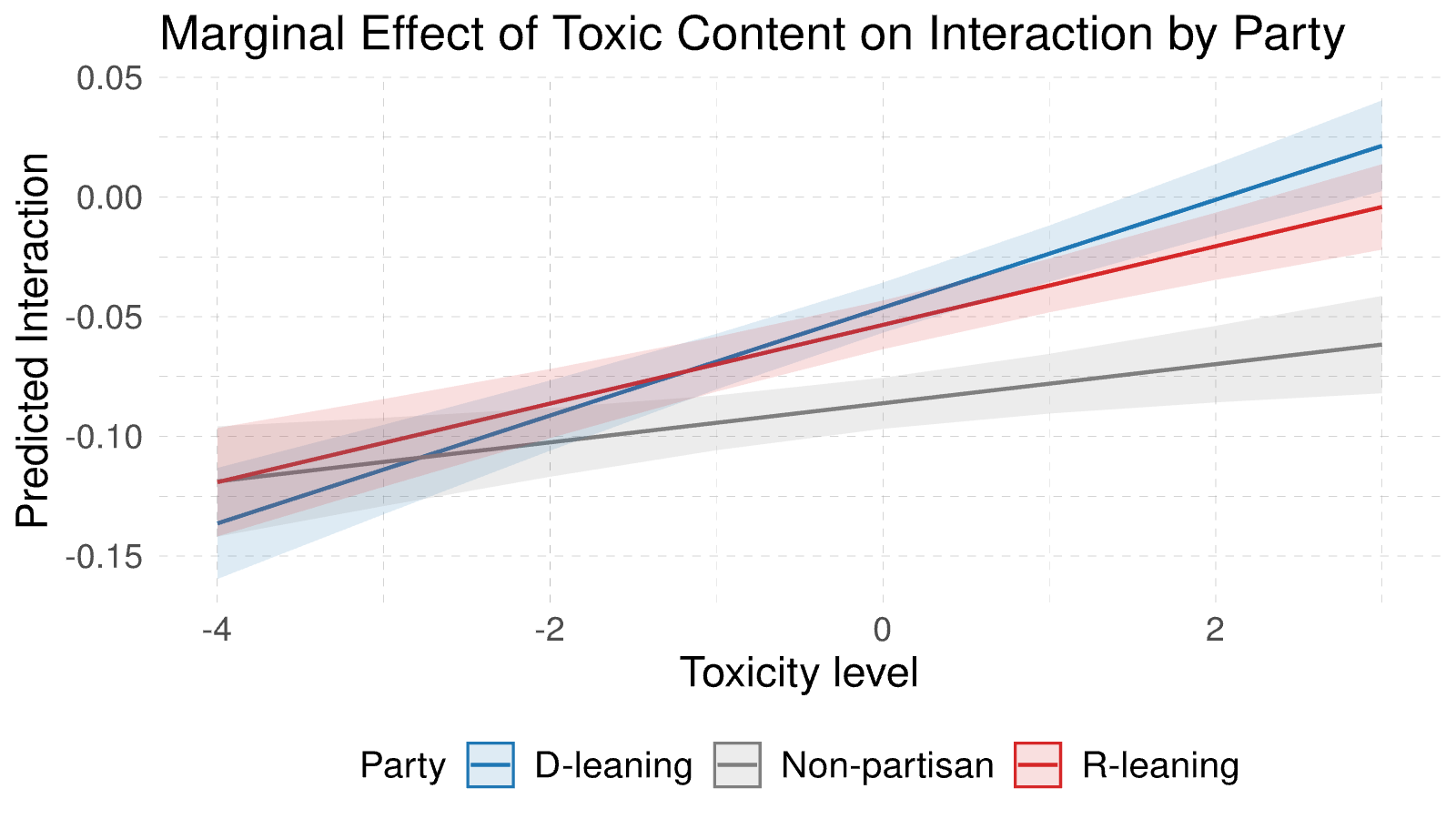

We found that videos containing toxic language received 2.3% (b = 0.023) more interaction than non-toxic ones (95% CI = 0.017, 0.028, p < .001). The regression estimate, or effect size (b), is a quantitative measure of the strength or magnitude of a relationship between two variables: here, on average, a toxic video receives 2.3% more interactions than a similar non-toxic video, after accounting for topic, political leaning, user-level effects, and views. The effect is stronger when toxicity appears in partisan content: toxic partisan videos received significantly more interaction than nonpartisan ones (b = -0.014, 95% CI = -0.022, -0.007, p < .001), with Democratic-leaning toxic posts slightly outperforming Republican-leaning ones (b = -0.006, 95% CI = -0.013, 0.000, p < .10).

Beyond toxicity, we found that affective and demographic features also influence engagement. Videos with stronger red hues (measured as the average saturation of red pixels across video frames, a visual cue associated with emotional intensity or urgency) received 0.7% more interaction (b = 0.007, 95% CI = 0.004, 0.010, p < .001), and longer videos gained 1.0% more interaction (b = 0.010, 95% CI = 0.006, 0.014, p < .001). Videos featuring older speakers were also associated with slightly higher engagement (b = 0.005, 95% CI = 0.003, 0.008, p < .001).

In contrast, hedging language (linguistic expressions that convey uncertainty or soften claims, such as “it seems,” “perhaps,” or “I believe”) was associated with lower interaction (b = -0.016, 95% CI = -0.025, -0.008, p < .001). Compared to Democratic-leaning videos, nonpartisan and Republican-leaning content received 4.0% (95% CI = -0.049, -0.031, p < .001) and 0.7% (95% CI = -0.014, 0.000, p < .05) less interaction, respectively. These findings suggest that rhetorical certainty, visual salience, speaker characteristics, and political framing all shape user engagement on TikTok.

Finding 3: Associations between toxicity and engagement vary by topic and partisanship.

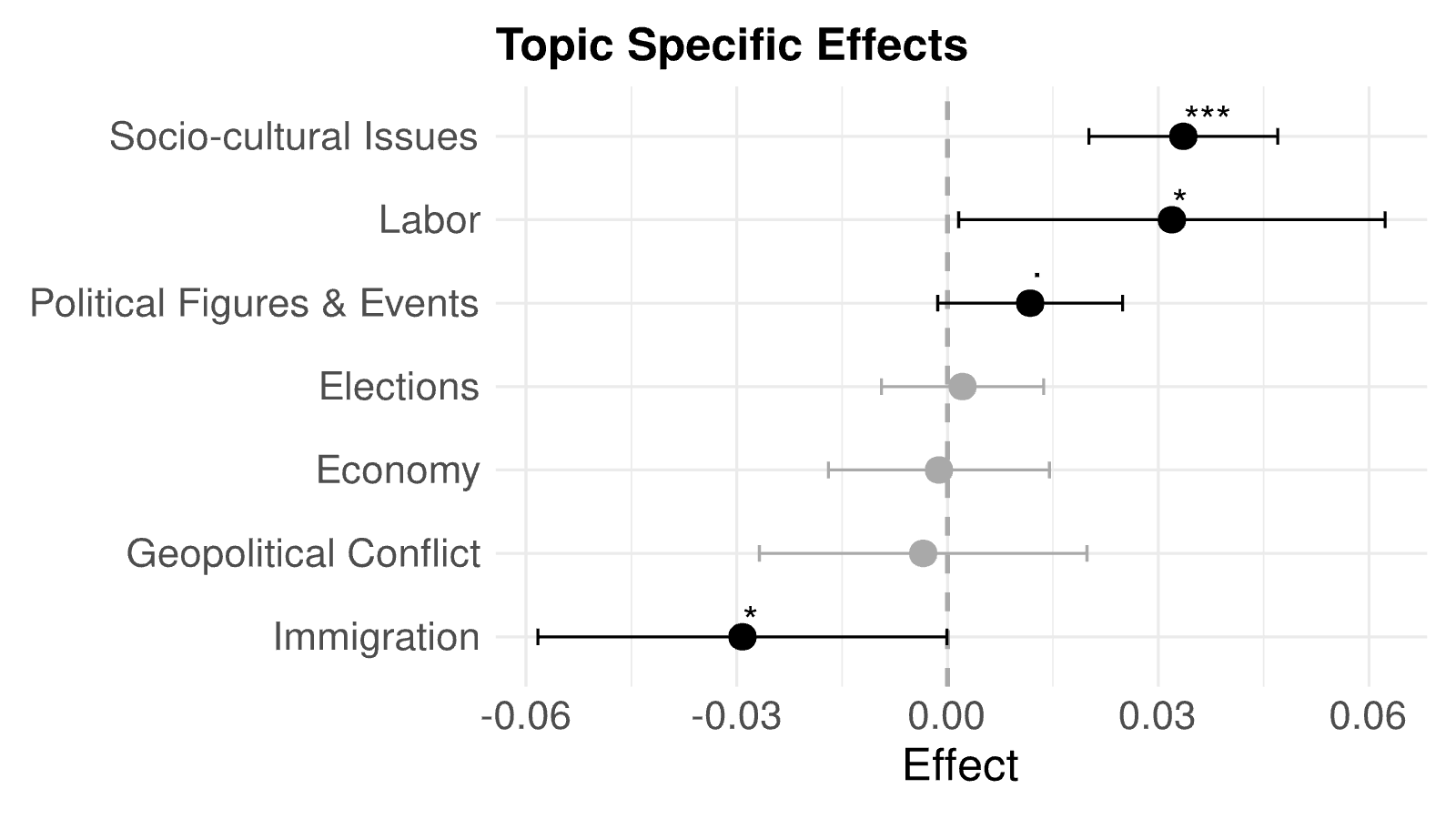

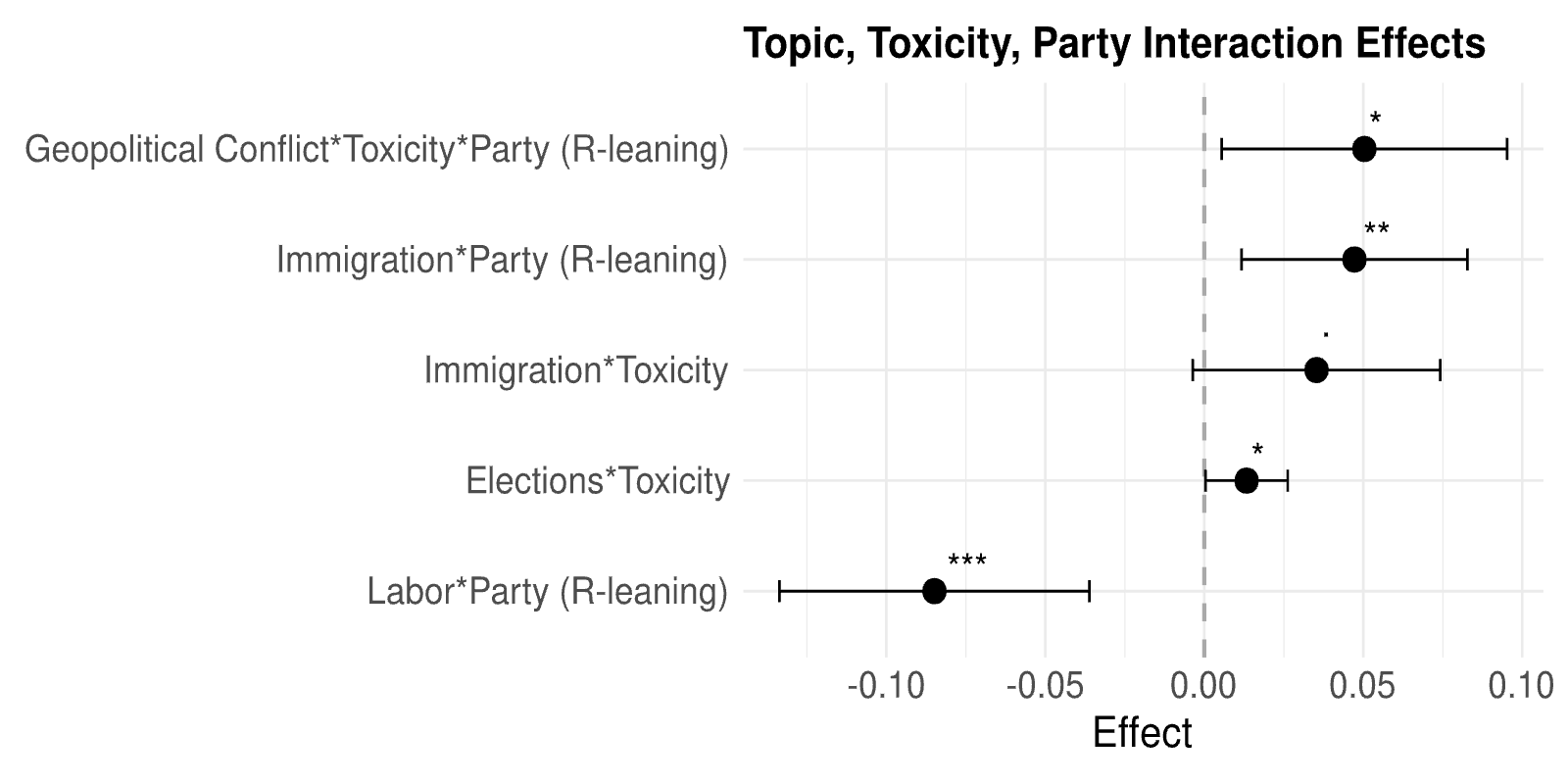

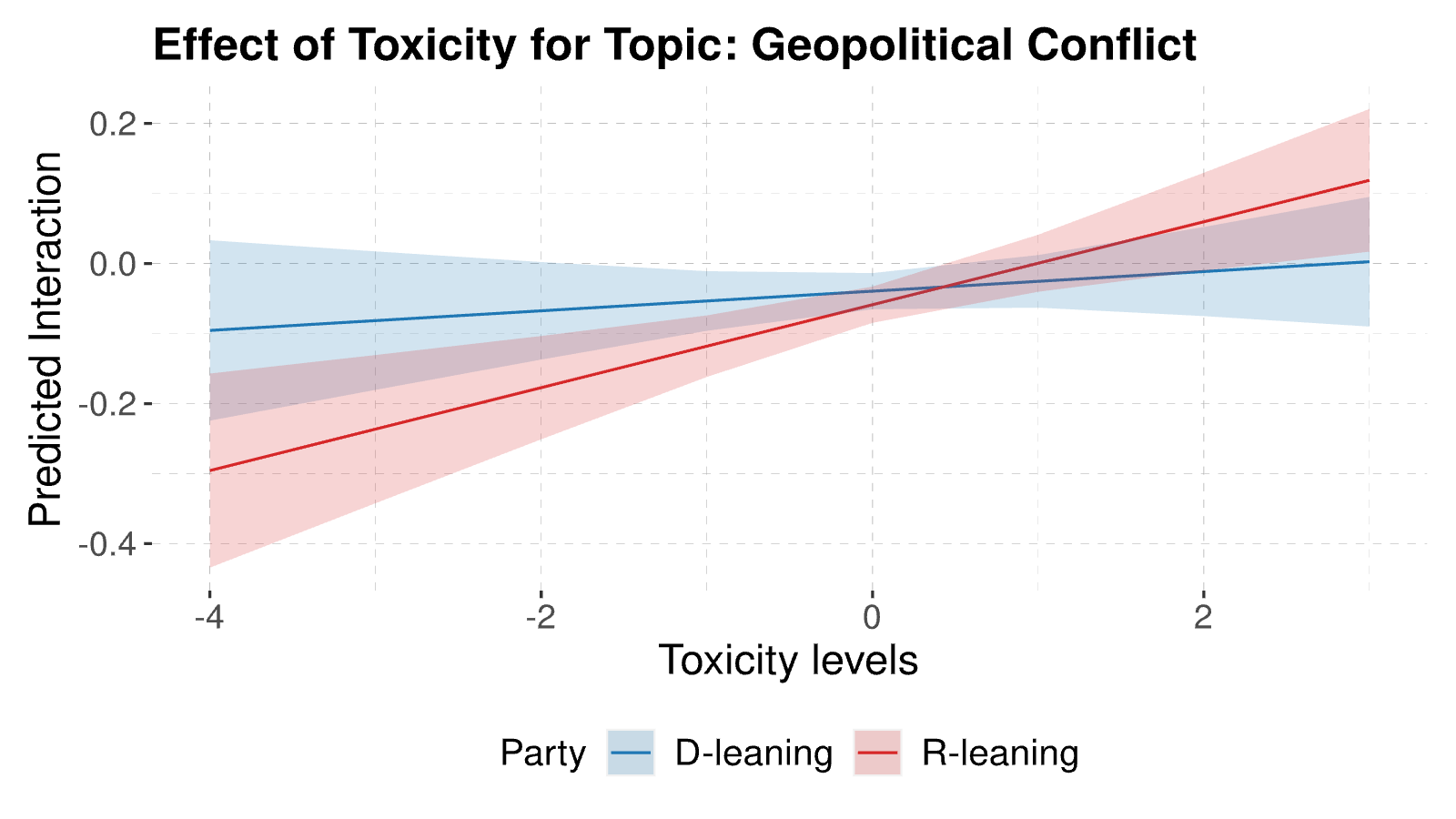

To understand how toxicity and engagement interact across political themes, we categorized each video into one or more of 22 manually validated political topics (see Appendix C), including salient issues like immigration, racism, antisemitism, and election fraud. We found that toxicity levels were highest in topics such as racism, antisemitism, Nazi references, election fraud, and Trump’s assassination attempt (see Figure D1, Appendix D). Toxic content was associated with higher user interaction in videos about elections (b = 0.013, 95% CI = 0.000, 0.026, p < .05) and immigration (b = 0.035, 95% CI = -0.004, 0.074, p < .10) (Figure 4). In Republican-leaning videos, toxic geopolitical content (e.g., discussions of international conflict) showed a stronger engagement effect than nonpartisan equivalents (b = 0.050, 95% CI = 0.005, 0.095, p < .05) (see Figure 5).

We also examined how topics themselves, not just their toxicity, shaped engagement. Topics related to social and cultural issues (b = 0.034, 95% CI = 0.020, 0.047, p < .001), political figures and events (b = 0.012, 95% CI = -0.001, 0.025, p < .10), and labor (b = 0.032, 95% CI = 0.002, 0.062, p < .05) were all associated with higher interaction (Figure 3). By contrast, immigration was associated with lower interaction overall (b = -0.029, 95% CI = -0.058, 0.000, p < .05), except for Republican-leaning immigration posts, which saw higher engagement (b = 0.047, 95% CI = 0.012, 0.083, p < .01). Republican-leaning videos on labor issues also saw significantly lower engagement (b = -0.085, 95% CI = -0.134, -0.036, p < .001) (see Figure 4).

Finding 4: Toxic content dynamics shift following political events.

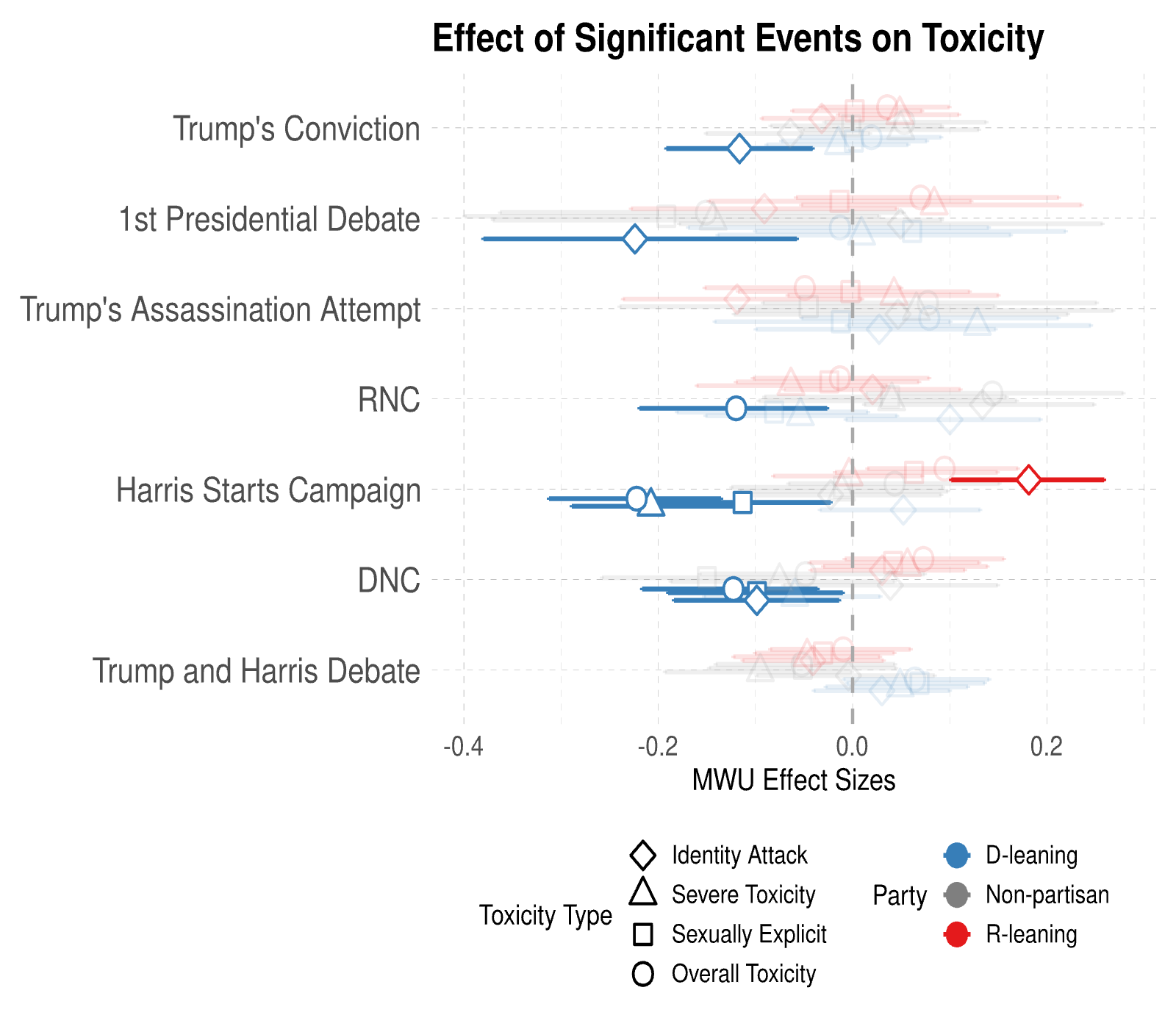

Toxic content notably changed after major political events, including Trump’s conviction, the first presidential debate between Biden and Trump, the Republican National Convention (RNC), the Harris campaign announcement, and the Democratic National Convention (DNC), as shown in Figure 6. We found that overall toxicity (U = 68401.0, r = -0.22, 95% CI = -0.31, -0.14, p < .001), sexual toxicity (U = 62777.0, r = -0.11, 95% CI = -0.21, -0.02, p < .05), and severe toxicity (U = 66374.0, r = -0.21, 95% CI = -0.29, -0.12, p < .001) decreased in Democratic-leaning videos, while identity attacks (U = 69685.0, r = 0.18, 95% CI = 0.10, 0.26, p < .001) increased in Republican-leaning videos after the Harris campaign announcement. For political videos with sexual attacks and severe toxic language, user interactions increased by 2.0% (95% CI = 0.004, 0.035, p < .05) and 1.6% (95% CI = 0.001, 0.032, p < .05), respectively, following Trump’s conviction, as shown in Figure 7.

Finding 5: Shallow textual features alone fail to detect partisanship and toxicity.

To evaluate how well different inputs capture political content signals, we compared the performance of caption-based inputs (i.e., short text metadata) with transcript-based inputs (full spoken content extracted from videos). Specifically, we assessed whether models using full transcripts were better at identifying toxicity and political alignment than those using captions alone.

For partisan alignment detection (partisan leaning was identified using the Mistral-7B-Instruct-v0.3 model, as described in Appendix B), using captions, we achieved a Cohen’s Kappa of 0.44 with human-coded labels, while using transcripts improved agreement to 0.74, indicating substantially better performance. For toxicity detection (Perspective API was used to detect toxicity; see Appendix H for details on manual validation), using transcripts, we identified higher toxicity levels in 56.2% more cases compared to caption-based models (Cliff’s δ = 0.12, 95% CI = 0.04, 0.21). Cliff’s δ is a non-parametric effect size measure that quantifies the degree of difference between two groups; it ranges from -1 to 1, where 0 indicates no difference and values closer to -1 or 1 indicate stronger group differences. This improvement was especially pronounced for toxicity subtypes like sexual toxicity, identity attacks, and severe toxicity, increasing by 60.4% (Cliff’s δ = 0.21, 95% CI = 0.12, 0.29), 62.9% (Cliff’s δ = 0.26, 95% CI = 0.17, 0.34), and 72.6% (Cliff’s δ = 0.45, 95% CI = 0.37, 0.52), respectively (see Figure E1, Appendix E).
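The two agreement and effect-size measures used in this comparison can be sketched in a few lines of standard-library Python. The function names and the toy labels and scores are hypothetical and serve only to illustrate the definitions. The last line works through the relative gain implied by moving from a Kappa of 0.44 to 0.74.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two label sequences.

    Undefined (division by zero) if expected agreement equals 1.
    """
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    return (observed - expected) / (1 - expected)


def cliffs_delta(xs, ys):
    """Share of (x, y) pairs with x > y minus share with x < y."""
    greater = sum(x > y for x in xs for y in ys)
    less = sum(x < y for x in xs for y in ys)
    return (greater - less) / (len(xs) * len(ys))


# Toy labels: R = Republican-leaning, D = Democratic-leaning, N = non-partisan.
human = ["R", "R", "D", "D", "N", "N"]
model = ["R", "R", "D", "N", "N", "N"]
kappa = cohens_kappa(human, model)

# Toy toxicity scores for transcript-based vs. caption-based inputs.
delta = cliffs_delta([0.2, 0.6, 0.9], [0.1, 0.5, 0.4])

# Relative improvement in Kappa from captions (0.44) to transcripts (0.74).
relative_gain_pct = round((0.74 - 0.44) / 0.44 * 100, 1)
```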

These findings suggest that models relying only on shallow metadata, such as captions, risk systematically missing toxic or partisan cues that are more clearly expressed in spoken language. Our results highlight the importance of incorporating multimodal content signals—especially full transcripts—for accurate political content analysis on platforms like TikTok.

Methods

Data collection and feature extraction

We used the TikTok Research API to collect video posts in three waves. Our process began with identifying politically active users (or seed users) who posted at least three videos between January and March 2024 using U.S. election-related hashtags (e.g., #election2024, #biden2024, #trump2024, #maga; see full list in Appendix A). We then applied a snowball sampling strategy, retrieving up to 1,000 liked videos per user, and iteratively expanding the sample of seed users by including authors of these liked videos. Our data collection period ranged from January 01, 2024, until the 2024 U.S. presidential election on November 7, 2024 (see Appendix A for details). This yielded a dataset of 51,680 downloadable political video posts created by 15,344 unique users.
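The snowball expansion described above can be sketched as a breadth-first traversal. This is an illustration under stated assumptions, not the collection code: `fetch_liked_videos` is a hypothetical callable standing in for whatever API wrapper retrieves a user's liked videos, and treating each collection wave as one expansion step is also an assumption.

```python
def snowball_sample(seed_users, fetch_liked_videos, max_liked=1000, max_waves=3):
    """Expand a seed set wave by wave through authors of liked videos.

    fetch_liked_videos(user, limit) is a hypothetical callable that returns
    an iterable of (video_id, author) pairs for videos the user liked.
    Returns (videos, users): a dict of video_id -> author and the set of
    all users reached.
    """
    seen_users = set(seed_users)
    videos = {}
    frontier = list(seed_users)
    for _ in range(max_waves):
        next_frontier = []
        for user in frontier:
            for video_id, author in fetch_liked_videos(user, max_liked):
                videos.setdefault(video_id, author)
                if author not in seen_users:
                    # Newly discovered authors become seeds for the next wave.
                    seen_users.add(author)
                    next_frontier.append(author)
        if not next_frontier:
            break
        frontier = next_frontier
    return videos, seen_users


# Toy like graph used in place of real API calls.
toy_likes = {
    "a": [("v1", "b"), ("v2", "c")],
    "b": [("v3", "d")],
    "c": [("v1", "b")],
    "d": [],
}
videos, users = snowball_sample(["a"], lambda user, limit: toy_likes.get(user, [])[:limit])
```

Tracking `seen_users` keeps the traversal from revisiting accounts, which matters because like graphs are highly cyclic.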

We extracted video transcripts using Whisper (Radford et al., 2023), enabling the analysis of full spoken content. We classified the partisan alignment of videos based on the transcripts, using the Mistral-7B-Instruct-v0.3 model (Mistral AI, 2024). This yielded 20,385 Republican (R) leaning, 19,114 Democratic (D) leaning, and 12,047 non-partisan posts, which we further validated by manual annotation of 150 samples (see Appendix B).

To examine how language and visuals influence engagement, we extracted a combination of linguistic, visual, and structural features from each video (Appendix A). Visual features included speaker demographics—age, gender—identified using DeepFace (Serengil & Özpınar, 2024) and verified through manual annotation (see Appendix G). We also extracted facial expressions (e.g., joy, anger), average red hue as a measure of color saturation, and technical video properties such as duration and frames per second (FPS). Linguistic features derived from transcripts included markers of generalization, causation, hedging (e.g., “I think,” “maybe”), subjectivity, and emotion words, based on lexicons and prior NLP work (Appendix A). Toxicity was assessed using Perspective API (Jigsaw, 2025), which detects general incivility as well as specific forms such as identity attacks, sexually explicit language, and severe toxicity. We adopted Perspective’s definition of toxicity as “a rude, disrespectful, or unreasonable comment that is likely to make you leave a discussion,” and validated this output through manual annotation, achieving Cohen’s Kappa = 0.79 (see Appendix H). Finally, we used a hybrid method combining keyword filtering and semantic clustering to identify politically salient video topics such as immigration, racism, and labor rights. Topic labels were finalized through manual review (see Appendix C). By integrating these diverse modalities—spoken text, visual signals, and structural attributes—our approach provides a robust framework for analyzing how TikTok videos shape political discourse and drive user engagement.
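As an illustration of a lexicon-based linguistic feature such as hedging, the sketch below counts hedge phrases per word of transcript. The five-phrase list is a tiny stand-in assembled from the examples quoted in this article, not the lexicon actually used (see Appendix A), and the per-word normalization is an assumption.

```python
import re

# Illustrative mini-lexicon; the real lexicon is described in Appendix A.
HEDGES = ("i think", "maybe", "perhaps", "it seems", "i believe")

# Match any hedge phrase on word boundaries, ignoring case.
_HEDGE_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(h) for h in HEDGES) + r")\b",
    re.IGNORECASE,
)


def hedge_rate(transcript):
    """Number of hedge phrases divided by the number of whitespace tokens."""
    words = transcript.split()
    if not words:
        return 0.0
    return len(_HEDGE_PATTERN.findall(transcript)) / len(words)


rate = hedge_rate("I think taxes are maybe too high")
```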

Significant events

We focused our analysis on several significant events during the U.S. presidential election cycle, as these moments had a substantial impact on political discourse and public opinion. These events include Trump’s conviction on 34 felony counts, the first presidential debate between Biden and Trump, the assassination attempt on Trump, the date Kamala Harris officially launched her presidential campaign after Joe Biden’s withdrawal from the race, and the debate between Kamala Harris and Donald Trump following her entry into the race. Additionally, we examined the Democratic National Convention (DNC) and the Republican National Convention (RNC), which spanned multiple days and served as critical moments for each party to energize their base and present their platforms.

Statistical analyses

We conducted Mann-Whitney U (MWU) tests with Benjamini false discovery rate (FDR) correction to compare differences in views and interactions (total number of likes, comments, and shares on a video) for partisan and non-partisan videos (RQ1). FDR correction is a statistical method used to adjust p-values when performing multiple comparisons: it controls the false discovery rate, that is, the expected proportion of incorrect rejections of the null hypothesis, helping reduce the likelihood of false positives. For research questions 2–4 (see Appendix F for model specifications and full regression results), we used linear mixed-effects models with random effects for the user, post timing (i.e., day posted), and the music in the video. These random effects account for the hierarchical data structure and unobserved heterogeneity, such as variations in user behavior, content trends, or time-specific factors influencing engagement.
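For readers unfamiliar with FDR correction, the following sketch shows one common variant, the Benjamini-Hochberg step-up adjustment. Choosing this variant is an assumption, since the text names only "Benjamini"; the function name and the example p-values are hypothetical.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so each adjusted value is the
    # minimum of p * m / rank over all ranks at or above the current one.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


raw_p = [0.01, 0.04, 0.03, 0.005]
adj_p = benjamini_hochberg(raw_p)
significant = [p <= 0.05 for p in adj_p]
```

A test is then declared significant when its adjusted p-value falls at or below the chosen FDR level.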

We assessed the impact of toxicity and how it varied by partisan leaning while accounting for other significant predictors of interactions (RQ2). To understand the effect of political topics, the model was extended by introducing interaction effects between toxicity, party affiliation, and topic groups (RQ3). For RQ4, we evaluated the impact of significant events by comparing changes in overall toxicity, as well as its subtypes, in the week before versus the week after each event (i.e., all videos in a window of seven days pre- vs. post-event) using MWU tests with FDR corrections. Furthermore, we evaluated how toxicity is associated with interactions after three of the most significant political events: Trump’s conviction, Trump’s assassination attempt, and Harris’ campaign announcement.
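The seven-day pre- versus post-event comparison can be sketched as a simple window split. The function name and the toy scores are hypothetical, and excluding the event day itself from both windows is an assumption, since the text does not say how that day was handled.

```python
from datetime import date, timedelta


def split_event_window(posts, event_day, days=7):
    """Split (post_date, score) pairs into pre- and post-event score lists.

    The event day itself is excluded from both windows (an assumption).
    """
    start = event_day - timedelta(days=days)
    end = event_day + timedelta(days=days)
    pre = [score for day, score in posts if start <= day < event_day]
    post = [score for day, score in posts if event_day < day <= end]
    return pre, post


# Toy toxicity scores around May 30, 2024, the date of Trump's conviction.
toy_posts = [
    (date(2024, 5, 22), 0.9),  # outside the pre-event window
    (date(2024, 5, 23), 0.1),
    (date(2024, 5, 29), 0.2),
    (date(2024, 5, 30), 0.3),  # event day, excluded
    (date(2024, 6, 6), 0.4),
    (date(2024, 6, 7), 0.5),   # outside the post-event window
]
pre_scores, post_scores = split_event_window(toy_posts, date(2024, 5, 30))
```

The two resulting lists are what a two-sample test such as the MWU test would then compare.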

Bibliography

Balasubramaniam, N., Kauppinen, M., Hiekkanen, K., & Kujala, S. (2022). Transparency and explainability of AI systems: Ethical guidelines in practice. In V. Gervasi & A. Vogelsang (Eds.), 28th International Working Conference, REFSQ 2022, Birmingham, UK, March 21–24, 2022, Proceedings (pp. 3–18). Springer. https://doi.org/10.1007/978-3-030-98464-9_1

Bennett, W. L., & Livingston, S. (2018). The disinformation order: Disruptive communication and the decline of democratic institutions. European Journal of Communication, 33(2), 122–139. https://doi.org/10.1177/0267323118760317

Biswas, A., Lin, Y.-R., Tai, Y. C., & Desmarais, B. A. (2025). Political elites in the attention economy: Visibility over civility and credibility? Proceedings of the International AAAI Conference on Web and Social Media, 19(1), 241–258. https://doi.org/10.1609/icwsm.v19i1.35814

Biswas, A., Javadian Sabet, A., & Lin, Y.-R. (2025). TikTok political engagement dataset (Version V1) [Data set]. Harvard Dataverse. https://doi.org/10.7910/DVN/CHYOPR

Boyd, R. L., Ashokkumar, A., Seraj, S., & Pennebaker, J. W. (2022). The development and psychometric properties of LIWC-22 [Technical report]. University of Texas at Austin. https://www.liwc.app

Buolamwini, J., & Gebru, T. (2018). Gender shades: Intersectional accuracy disparities in commercial gender classification. In S. A. Friedler & C. Wilson (Eds.), Proceedings of the 1st Conference on Fairness, Accountability and Transparency (Vol. 81, pp. 77–91). Proceedings of Machine Learning Research (PMLR). https://proceedings.mlr.press/v81/buolamwini18a.html

Siegel-Stechler, K., Hilton, K., & Medina, A. (2025, May 12). Youth rely on digital platforms, need media literacy to access political information. CIRCLE, Tisch College, Tufts University. https://circle.tufts.edu/latest-research/youth-rely-digital-platforms-need-media-literacy-access-political-information

Chung, H.W., Hou, L., Longpre, S., Zoph, B., Tay, Y., Fedus, W., Li, Y., Wang, X., Dehghani, M., Brahma, S., & Webson, A. (2024). Scaling instruction-finetuned language models. Journal of Machine Learning Research, 25(70), 1–53. https://www.jmlr.org/papers/v25/23-0870.html

Diab, R. (2024, July 11). The case for mandating finer-grained control over social media algorithms. Tech Policy Press. https://www.techpolicy.press/the-case-for-mandating-finergrained-control-over-social-media-algorithms/

Donovan, J., & Boyd, D. (2021). Stop the presses? Moving from strategic silence to strategic amplification in a networked media ecosystem. American Behavioral Scientist, 65(2), 333–350. https://doi.org/10.1177/0002764219878229

Felzmann, H., Fosch-Villaronga, E., Lutz, C., & Tamò-Larrieux, A. (2020). Towards transparency by design for artificial intelligence. Science and Engineering Ethics, 26(6), 3333–3361. https://doi.org/10.1007/s11948-020-00276-4

Ferrara, E., Cresci, S., & Luceri, L. (2020). Misinformation, manipulation, and abuse on social media in the era of COVID-19. Journal of Computational Social Science, 3, 271–277. https://doi.org/10.1007/s42001-020-00094-5

Goldstein, I., Edelson, L., Nguyen, M. K., Goga, O., McCoy, D., & Lauinger, T. (2023). Understanding the (in)effectiveness of content moderation: A case study of Facebook in the context of the U.S. Capitol riot. arXiv. https://doi.org/10.48550/arXiv.2301.02737

Grootendorst, M. (2022). BERTopic: Neural topic modeling with a class-based TF-IDF procedure. arXiv. https://doi.org/10.48550/arXiv.2203.05794

Gilda, S., Giovanini, L., Silva, M., & Oliveira, D. (2022). Predicting different types of subtle toxicity in unhealthy online conversations. Procedia Computer Science, 198, 360–366. https://doi.org/10.1016/j.procs.2021.12.254

Guess, A., Nyhan, B., Lyons, B., & Reifler, J. (2018). Avoiding the echo chamber about echo chambers: Why selective exposure to like-minded political news is less prevalent than you think. Knight Foundation. https://kf-site-production.s3.amazonaws.com/media_elements/files/000/000/133/original/Topos_KF_White-Paper_Nyhan_V1.pdf

Islam, J., Xiao, L., & Mercer, R. E. (2020, May). A lexicon-based approach for detecting hedges in informal text. In N. Calzolari, F. Béchet, P. Blache, K. Choukri, C. Cieri, T. Declerck, S. Goggi, H. Isahara, B. Maegaard, J. Mariani, H. Mazo, A. Moreno, J. Odijk, & S. Piperidis (Eds.), Proceedings of the Twelfth Language Resources and Evaluation Conference (pp. 3109–3113). European Language Resources Association. https://aclanthology.org/2020.lrec-1.380/

Karimi, K., & Fox, R. (2023). Scrolling, simping, and mobilizing: TikTok’s influence over Generation Z’s political behavior. The Journal of Social Media in Society, 12(1), 181–208. https://www.thejsms.org/index.php/JSMS/article/view/1251

Koshiyama, A., Kazim, E., Treleaven, P., Rai, P., Szpruch, L., Pavey, G., Ahamat, G., Leutner, F., Goebel, R., Knight, A., & Adams, J. (2024). Towards algorithm auditing: Managing legal, ethical and technological risks of AI, ML and associated algorithms. Royal Society Open Science, 11(5), 230859. https://doi.org/10.1098/rsos.230859

Leppert, R., & Matsa, K. E. (2024). More Americans–especially young adults–are regularly getting news on TikTok. Pew Research Center. https://www.pewresearch.org/short-reads/2024/09/17/more-americans-regularly-get-news-on-tiktok-especially-young-adults/

Llama-3.2-3B-Instruct [Computer software]. (2024). Meta Llama. https://huggingface.co/meta-llama/Llama-3.2-3B-Instruct

Marwick, A., & Lewis, R. (2017). Media manipulation and disinformation online. Data & Society Research Institute. https://datasociety.net/library/media-manipulation-and-disinfo-online/

McClain, C. (2023, August 20). About half of TikTok users under 30 say they use it to keep up with politics, news. Pew Research Center. https://www.pewresearch.org/short-reads/2024/08/20/about-half-of-tiktok-users-under-30-say-they-use-it-to-keep-up-with-politics-news/

Meleagrou-Hitchens, A., & Kaderbhai, N. (2017). Research perspectives on online radicalisation: A literature review, 2006–2016. International Centre for the Study of Radicalisation, King’s College London. https://icsr.info/2017/05/03/icsr-vox-pol-paper-research-perspectives-online-radicalisation-literature-review-2006-2016-2/

Mistral-7B-Instruct-v0.3 [Computer software]. (2024). Mistral AI. https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.3

Mosleh, M., Cole, R., & Rand, D. G. (2024). Misinformation and harmful language are interconnected, rather than distinct, challenges. PNAS Nexus, 3(3), pgae111. https://doi.org/10.1093/pnasnexus/pgae111

Neidhardt, A. H., & Butcher, P. (2022). Disinformation on migration: How lies, half-truths, and mischaracterizations spread. Migration Policy Institute. https://www.migrationpolicy.org/article/how-disinformation-fake-news-migration-spreads

Perspective API [Computer software]. (2025). Jigsaw. https://www.perspectiveapi.com/

Pierri, F., Luceri, L., Chen, E., & Ferrara, E. (2023). How does Twitter account moderation work? Dynamics of account creation and suspension on Twitter during major geopolitical events. EPJ Data Science, 12(1), Article 43. https://doi.org/10.1140/epjds/s13688-023-00420-7

Radford, A., Kim, J. W., Xu, T., Brockman, G., McLeavey, C., & Sutskever, I. (2023). Robust speech recognition via large-scale weak supervision. In A. Krause, E. Brunskill, K. Cho, B. Engelhardt, S. Sabato, & J. Scarlett (Eds.), ICML ’23: Proceedings of the International Conference on Machine Learning (pp. 28492–28518). Association for Computing Machinery. https://dl.acm.org/doi/10.5555/3618408.3619590

Resende, G. H., Nery, L. F., Benevenuto, F., Zannettou, S., & Figueiredo, F. (2024). A comprehensive view of the biases of toxicity and sentiment analysis methods towards utterances with African American English expressions. arXiv. https://doi.org/10.48550/arXiv.2401.12720

Serengil, S., & Özpınar, A. (2024). A benchmark of facial recognition pipelines and co-usability performances of modules. Journal of Information Technologies, 17(2), 95–107. https://doi.org/10.17671/gazibtd.1399077

Somasundaran, S., Ruppenhofer, J., & Wiebe, J. (2007). Detecting arguing and sentiment in meetings. In H. Bunt, S. Keizer, & T. Paek (Eds.), Proceedings of the 8th SIGdial Workshop on Discourse and Dialogue (pp. 26–34). https://doi.org/10.18653/v1/2007.sigdial-1.5

Starbird, K. (2017). Examining the alternative media ecosystem through the production of alternative narratives of mass shooting events on Twitter. Proceedings of the International AAAI Conference on Web and Social Media, 11(1), 230–239. https://doi.org/10.1609/icwsm.v11i1.14878

Perez, S. (2021, January 7). TikTok bans videos of Trump inciting mob, blocks #stormthecapital and other hashtags. TechCrunch. https://techcrunch.com/2021/01/07/tiktok-bans-videos-of-trump-inciting-mob-blocks-stormthecapital-and-other-hashtags/

TikTok. (2024). Community principles. https://www.tiktok.com/community-guidelines/en/community-principles

TikTok. (2025a). Research API. TikTok for Developers. https://developers.tiktok.com/products/research-api/

TikTok. (2025b). Our approach to content moderation. TikTok Transparency Center. https://www.tiktok.com/transparency/en/content-moderation/

TikTok. (2025c). Content moderation. https://www.tiktok.com/euonlinesafety/en/content-moderation/

TikTok. (2025d). Teen safety. https://www.tiktok.com/euonlinesafety/en/teen-safety/

TikTok. (2025e). How TikTok recommends content. https://support.tiktok.com/en/using-tiktok/exploring-videos/how-tiktok-recommends-content

Paul, K. (2020, October 7). Facebook announces plan to stop political ads after 3 November. The Guardian. https://www.theguardian.com/technology/2020/oct/07/facebook-stop-political-ads-policy-3-november

Wardle, C., & Derakhshan, H. (2017). Information disorder: Toward an interdisciplinary framework for research and policymaking. Council of Europe. https://edoc.coe.int/en/media/7495-information-disorder-toward-an-interdisciplinary-framework-for-research-and-policy-making.html

Wardle, C. (2024). A conceptual analysis of the overlaps and differences between hate speech, misinformation and disinformation. United Nations Peacekeeping. https://peacekeeping.un.org/en/conceptual-analysis-of-overlaps-and-differences-between-hate-speech-misinformation-and

Wilson, T., Hoffmann, P., Somasundaran, S., Kessler, J., Wiebe, J., Choi, Y., Cardie, C., Riloff, E., & Patwardhan, S. (2005). OpinionFinder: A system for subjectivity analysis. In D. Byron, A. Venkataraman, & D. Zhang (Eds.), Proceedings of HLT/EMNLP interactive demonstrations (pp. 34–35). Association for Computational Linguistics. https://aclanthology.org/H05-2018/

Funding

This project did not receive direct support from any agency or foundation.

Competing Interests

The authors declare no competing interests.

Ethics

This study does not involve human subjects, and IRB review was not applicable. We used DeepFace, a state-of-the-art approach, to infer demographic features (e.g., gender and ethnicity, as defined by DeepFace) and then manually validated its output (Appendix G). Recognizing the potential for bias against minority groups (Buolamwini & Gebru, 2018), we recommend further research and the implementation of transparent mitigation strategies to ensure fairness and accountability in algorithmic detection.

While automated toxicity detection tools like Perspective API offer scalable methods for analyzing harmful language, they are not without limitations. Prior research has shown that such models can overestimate toxicity in certain contexts, particularly when analyzing emotionally expressive content, sarcasm, or speech containing African American Vernacular English (Gilda et al., 2022; Resende et al., 2024). To mitigate this, we used continuous toxicity scores and validated Perspective API outputs against human-annotated labels (see Appendix H), finding strong alignment. However, we acknowledge that residual biases may persist. These biases could skew topic-level or group-level toxicity estimates (for example, by inflating toxicity scores for content related to race, protest, or polarizing events) and thereby influence interpretations of which topics or partisan groups appear most toxic. While our statistical models control for topic and party interactions, the results should still be interpreted with some caution. These limitations underscore the need for future work on bias-aware, context-sensitive toxicity models.

As with any observational study relying on platform APIs, our dataset is shaped by visibility constraints and discovery mechanisms inherent to TikTok’s design. While we cannot claim platform-wide representativeness, our sampling strategy, grounded in election-related hashtags, user engagement behaviors (likes), and multi-step snowball expansion, captures the ecosystem of political content actively circulating during the 2024 U.S. election. This includes videos from both Democratic- and Republican-aligned creators that users engaged with, as well as a wide range of topics and rhetorical styles. Our findings should therefore be interpreted as reflecting patterns within politically salient and user-engaged content, rather than all political TikTok posts or broader user sentiment. By focusing on videos that users actively engaged with, rather than relying solely on posted content, our study offers insights into how partisanship and toxicity were surfaced and amplified within TikTok’s recommendation-driven environment. Future work may expand upon this by comparing patterns across less engaging or emergent content spaces.

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

We collected data using the TikTok Research API, following its terms of service. In accordance with TikTok’s data-sharing policies, we are unable to share raw video content, transcripts, or user metadata. However, to support reproducibility, we have published the TikTok post IDs corresponding to the analyzed content on Harvard Dataverse: https://doi.org/10.7910/DVN/CHYOPR. All code required for analysis is available on our GitHub repository: https://github.com/picsolab/Toxic-Politics-and-TikTok-Engagement-in-the-2024-U.S.-Election.

Acknowledgments

We thank NSF, AFOSR, ONR, the Pitt Cyber Institute, and the Pitt CRCD for providing partial support and resources that enabled this research. Any opinions, findings, conclusions, or recommendations expressed here do not necessarily reflect the views of the funding agencies. We would also like to thank Julie Lawler, Sodi Kroehler, and the wider team of PICSO Lab for their early input on this research. We are also grateful to the reviewers for their constructive feedback, which significantly strengthened the final manuscript.