Peer Reviewed

Who knowingly shares false political information online?

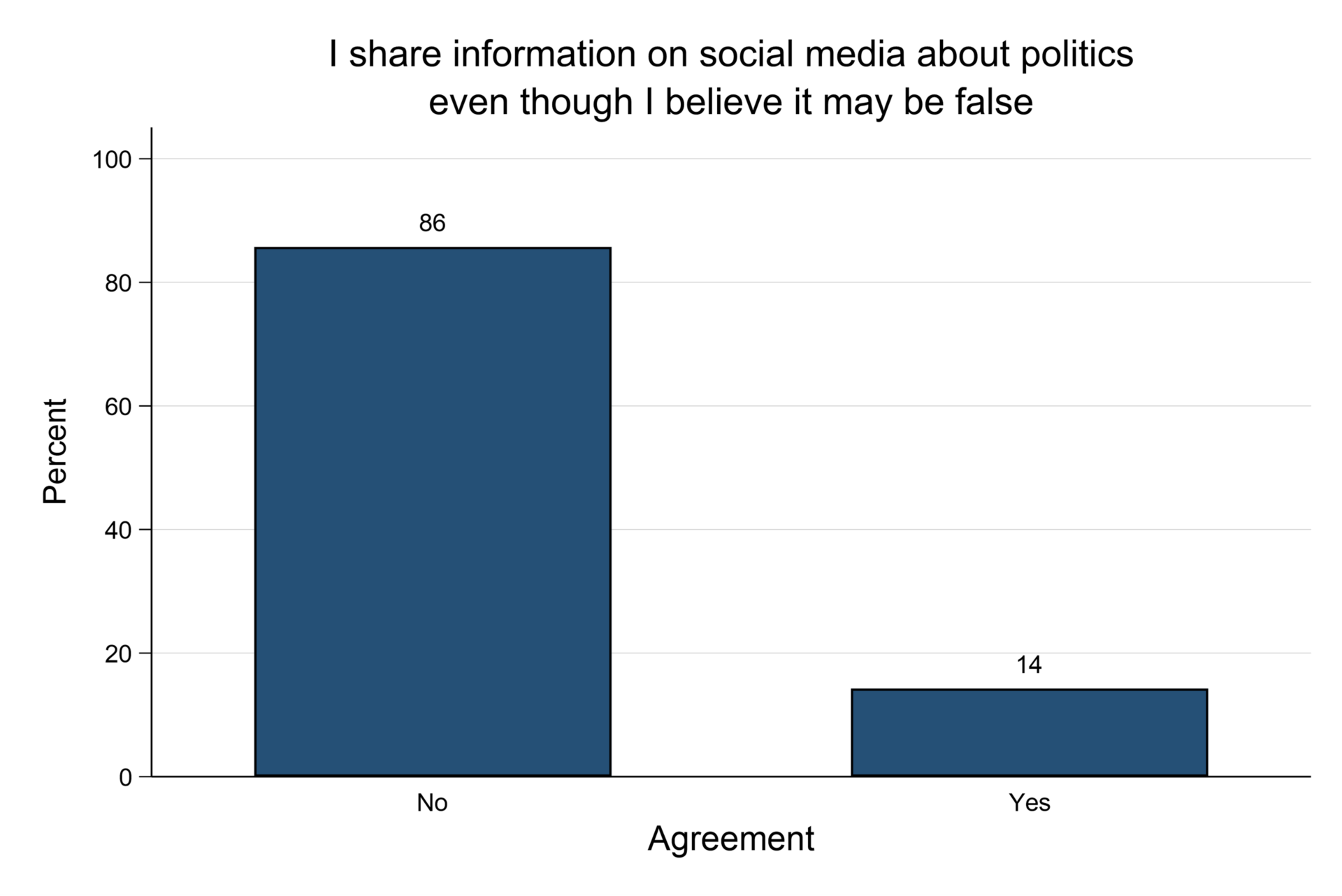

Some people share misinformation accidentally, but others do so knowingly. To fully understand the spread of misinformation online, it is important to analyze those who purposely share it. Using a 2022 U.S. survey, we found that 14 percent of respondents reported knowingly sharing misinformation, and that these respondents were more likely to also report support for political violence, a desire to run for office, and warm feelings toward extremists. These respondents were also more likely to have elevated levels of a psychological need for chaos, dark tetrad traits, and paranoia. Our findings illuminate one vector through which misinformation is spread.

Research Questions

- What percentage of Americans admit to knowingly sharing political information on social media they believe may be false?

- How politically engaged, and in what ways, are people who report knowingly sharing false political information online?

- Are people who report knowingly sharing false political information online more likely to report extremist views and support for extremist groups?

- What are the psychological, political, and social characteristics of those who report knowingly sharing false political information online?

Essay Summary

- While most people are exposed to small amounts of misinformation online (in comparison to their overall news diet), previous studies have shown that only a small number of people are responsible for sharing most of it. Gaining a better understanding of the motivations of social media users who share information they believe to be false could lead to interventions aimed at limiting the spread of online misinformation.

- Using a national survey from the United States (n = 2,001; May–June 2022), we asked respondents if they share political information on social media that they believe is false; 14% indicated that they do.

- Respondents who reported purposefully sharing false political information online were more likely to harbor (i) a desire to run for political office, (ii) support for political violence, and (iii) positive feelings toward QAnon, Proud Boys, White Nationalists, and Vladimir Putin. Furthermore, these respondents displayed elevated levels of anti-social characteristics, including a psychological need for chaos, “dark” personality traits (narcissism, psychopathy, Machiavellianism, and sadism), paranoia, dogmatism, and argumentativeness.

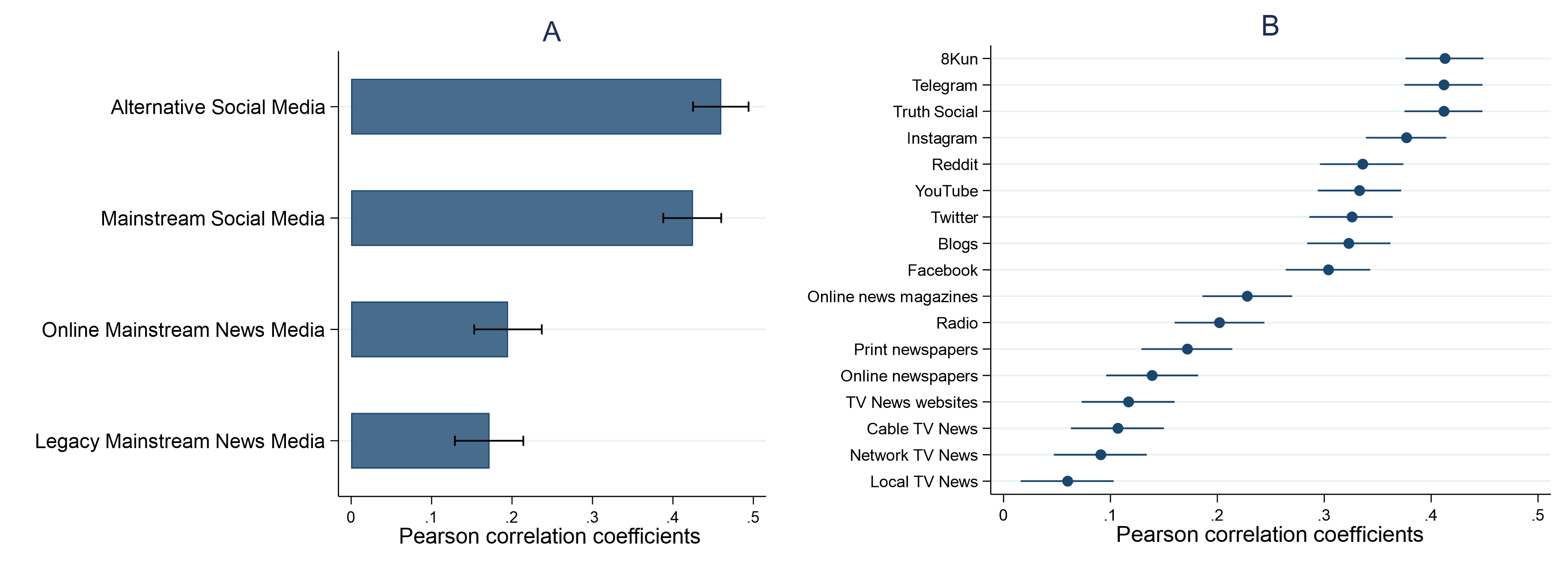

- People who reported sharing political information they believe is false on social media were more likely to use social media platforms known for promoting extremist views and conspiracy theories (e.g., 8Kun, Telegram, Truth Social).

Implications

A growing body of research shows that online misinformation is both easily accessible (Allcott & Gentzkow, 2017; Del Vicario et al., 2016) and can spread quickly through online social networks (Vosoughi et al., 2018). Though misinformation—information that is false or misleading according to the best currently established knowledge (Ecker et al., 2021; Vraga & Bode, 2020)—is often spread unintentionally, disinformation, a subcategory of misinformation, is spread with the deliberate intent to deceive (Starbird, 2019). Critically, the pervasiveness of online mis- and disinformation has made attempts by online news and social media companies to prevent, curtail, or remove it from various platforms difficult (Courchesne et al., 2021; Ha et al., 2022; Sanderson et al., 2021; Vincent et al., 2022). While the causal impact of online misinformation is often difficult to determine (Enders et al., 2022; Uscinski et al., 2022), numerous studies have shown that exposure is (at least) correlated with false beliefs (Bryanov & Vziatysheva, 2021), beliefs in conspiracy theories (Xiao et al., 2021), and nonnormative behaviors, including vaccine refusal (Romer & Jamieson, 2021).

Numerous studies have investigated the spread of political mis- and disinformation as a “top-down” phenomenon (Garrett, 2017; Lasser et al., 2022; Mosleh & Rand, 2022) emanating from domestic political actors (Berlinski et al., 2021), untrustworthy websites (Guess et al., 2020), and hostile foreign governments (Bail et al., 2019), and flowing through social media and other networks (Johnson et al., 2022). Indeed, studies have found that most online political content, as well as most online misinformation, is produced by a relatively small number of accounts (Grinberg et al., 2019; Hughes, 2019). Other research has focused on how the public interacts with and evaluates misinformation to identify the individual differences related not only to falling for misinformation but also to unintentionally spreading it (Littrell et al., 2021a; Pennycook & Rand, 2021).

However, rather than being unknowingly duped into sharing misinformation, many people (who are not political elites, paid activists, or foreign political actors) knowingly share false information in a deliberate attempt to deceive or mislead others, often in the service of a specific goal (Buchanan & Benson, 2019; Littrell et al., 2021b; MacKenzie & Bhatt, 2020; Metzger et al., 2021). For instance, people who create and spread fake news content and highly partisan disinformation online are often motivated by the desire that such posts will “go viral,” attracting attention that will hopefully provide a reliable stream of advertising revenue (Guess & Lyons, 2020; Pennycook & Rand, 2020; Tucker et al., 2018). Others may do so to discredit political or ideological outgroups, advance their own ideological agenda or that of their partisan ingroup, or simply because they enjoy instigating discord and chaos online (Garrett et al., 2019; Marwick & Lewis, 2017; Petersen et al., 2023).

Though the art of deception is likely as old as communication itself, in the past, a person’s ability to meaningfully communicate with (and perhaps deceive) large groups was arguably limited. In contrast, social media now gives every person the power to rapidly broadcast (false) information to potentially global, mass audiences (DePaulo et al., 1996; Guess & Lyons, 2020). This implicates social media as a critical vector in the spread of misinformation. Whatever the motivations for sharing false information online, identifying the psychological, political, and ideological factors common to those who do so intentionally can yield a better understanding of the human sources of misinformation and provide crucial insights for developing interventions that decrease its spread.

In a national survey of the United States, we asked participants to rate their agreement (“strongly agree” to “strongly disagree”) with the statement, “I share information on social media about politics even though I believe it may be false.” In total, 14% of respondents agreed or strongly agreed with this statement; these findings coincide with those of other studies on similar topics (Buchanan & Kempley, 2021; Halevy et al., 2014; Serota & Levine, 2015). Normatively, it is encouraging that only a small minority of our respondents indicated that they share false information about politics on social media. However, the fact that 14% of the U.S. adult population claims to purposely spread political misinformation online is nonetheless troubling. Rather than being exclusively a top-down phenomenon, the purposeful sharing of false information by members of the public appears to be an important vector of misinformation that deserves more attention from researchers and practitioners.

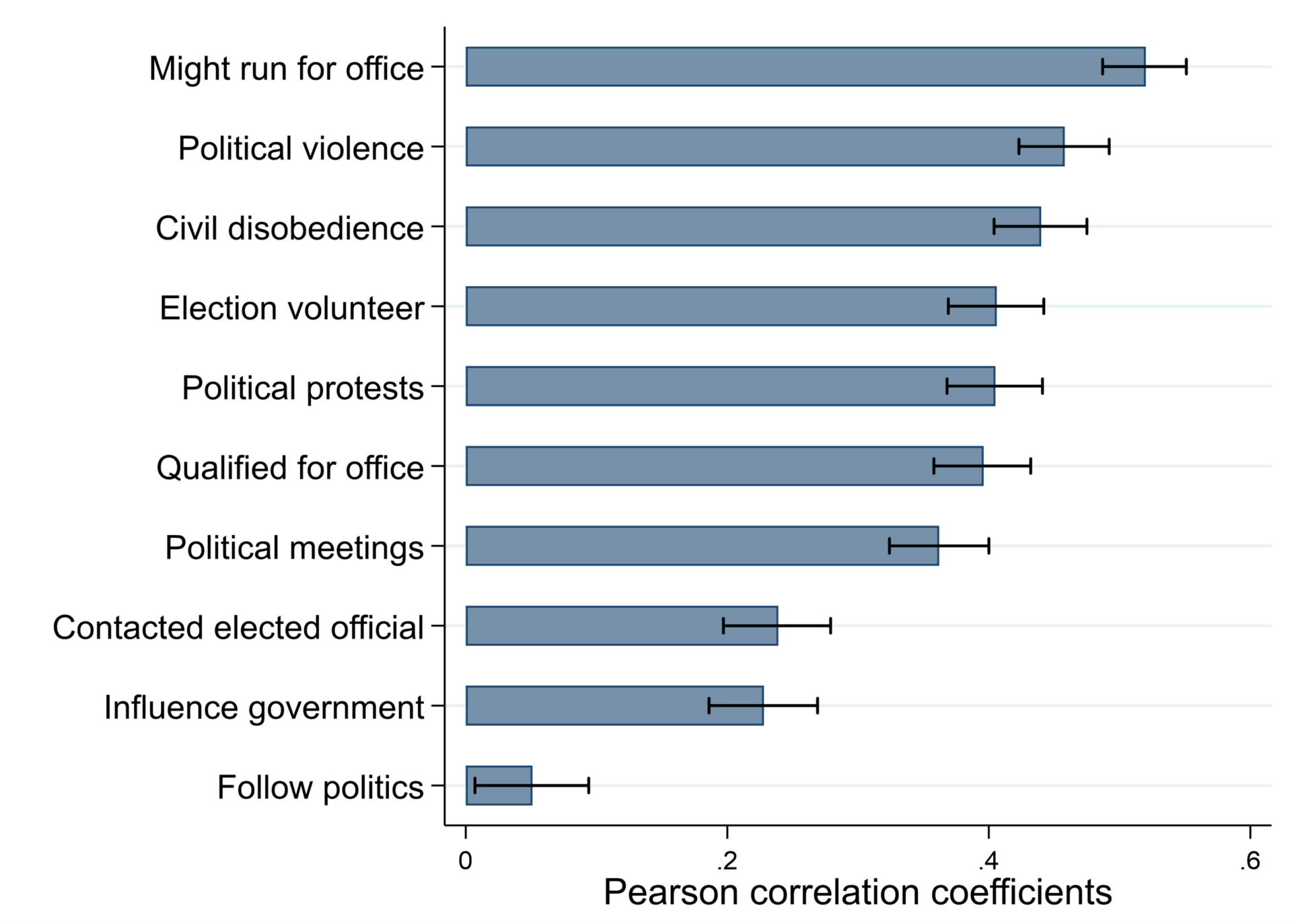

Of further concern, our findings show that people who claimed to knowingly share false information on social media about politics were more politically active in meaningful ways. First and perhaps foremost, such respondents were not only more likely to state a desire to run for political office but were also more likely to feel qualified for office, compared to people who do not claim to knowingly share false information. This finding is troubling from a normative perspective since such people might not be honest with constituents if elected (consider, for example, Representative George Santos of New York), and this could further erode our information environment (e.g., Celse & Chang, 2019). However, this finding may also offer crucial insights to better understand the tendency and motivations of at least some politicians to share misinformation or outright lie to the public (Arendt, 1972; Sunstein, 2021). Beyond aspirations for political office, spreading political misinformation online is positively associated with support for political violence, civil disobedience, and protests. Moreover, though spreading misinformation is also associated with participating in political campaigns, it is only weakly related to attending political meetings, contacting elected representatives, or staying informed about politics. Taken together, these findings paint a somewhat nuanced picture: People who were more likely to self-report having intentionally shared false political information on social media were more likely to be politically active and efficacious in certain aggressive ways, while simultaneously being less likely to participate in more benign or arguably positive ways.

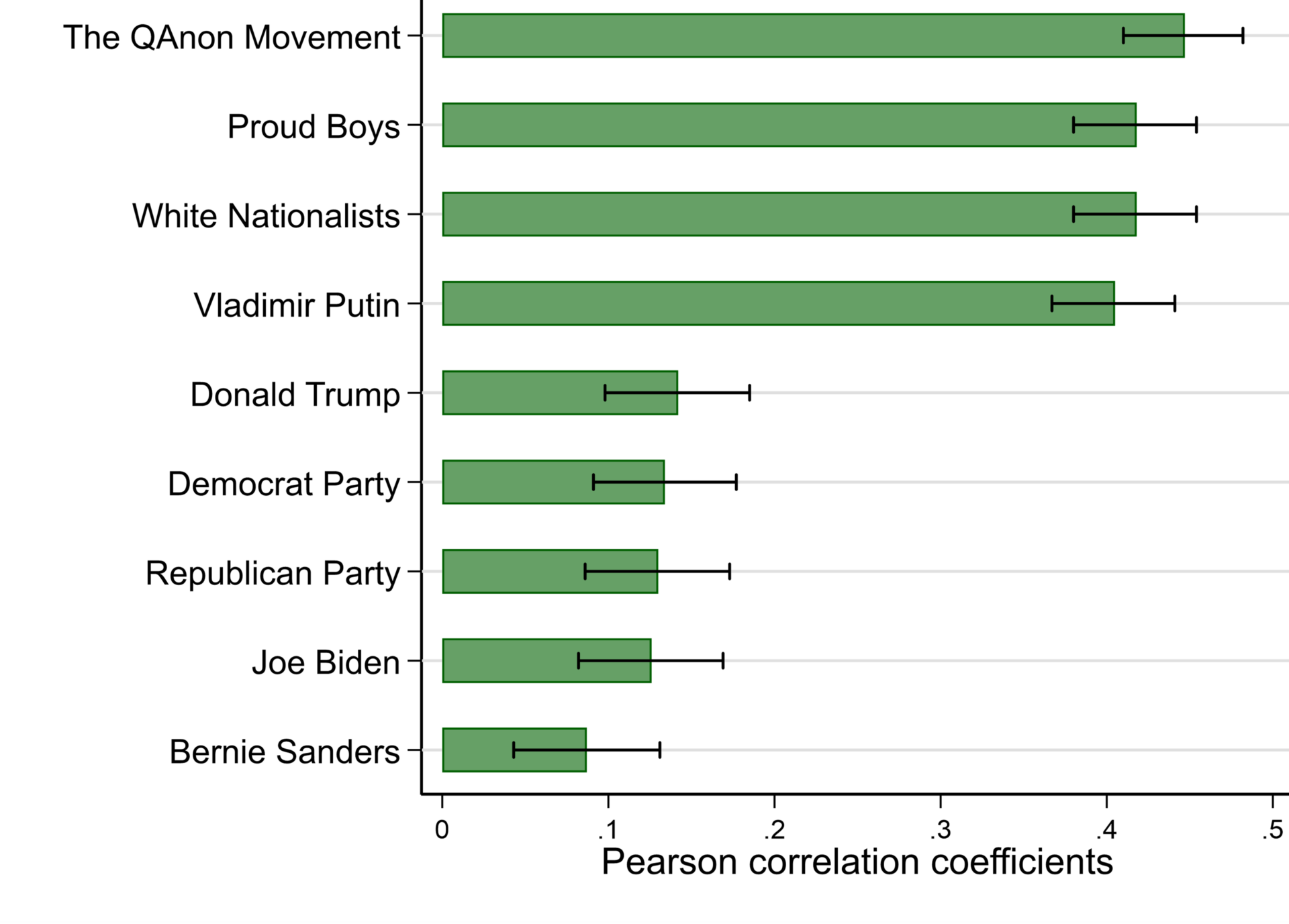

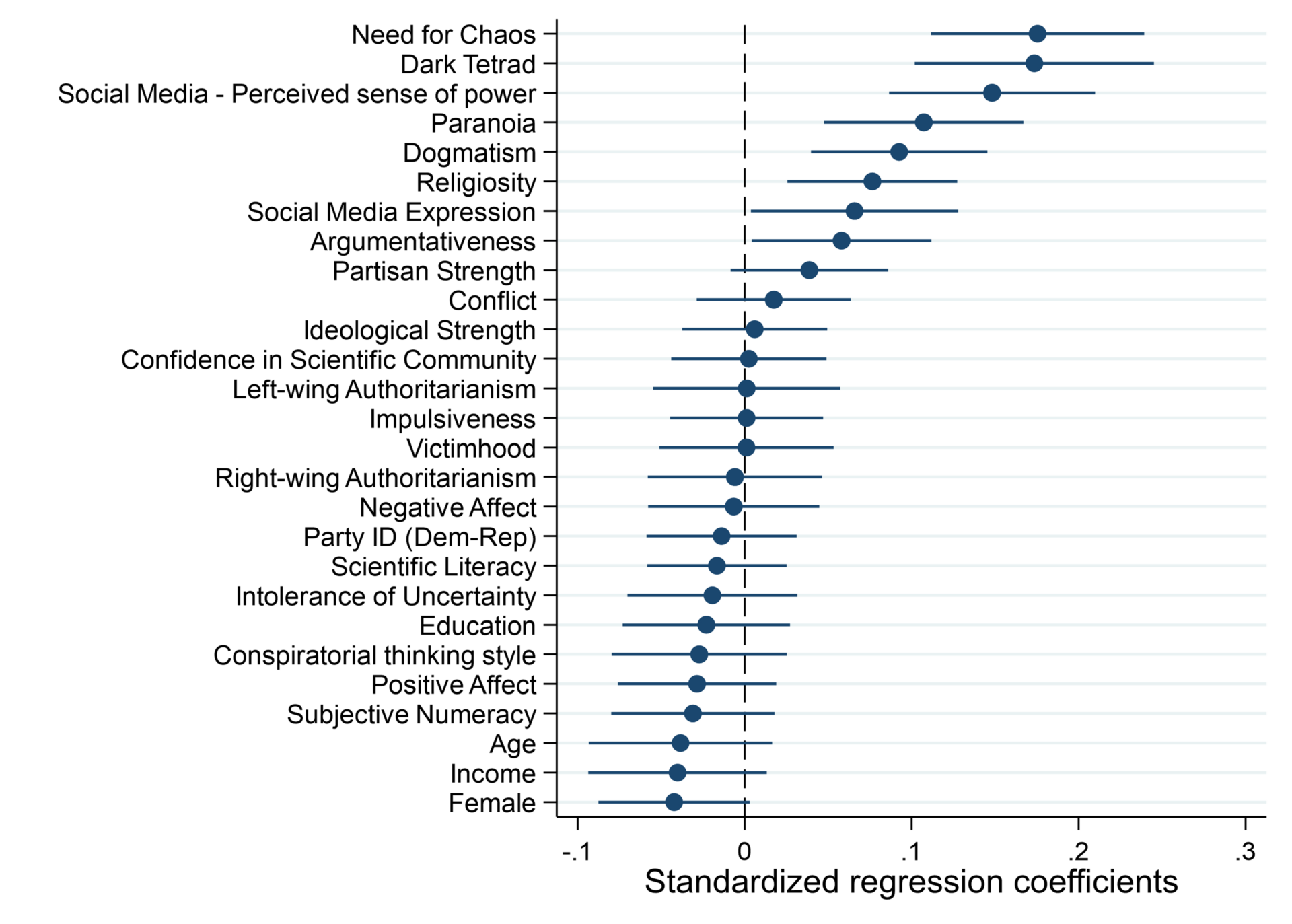

Our findings also revealed that respondents who reported sharing false political information on social media were more likely to express support for extremist groups such as QAnon, Proud Boys, and White Nationalists. These observations coincide with previous studies linking extremist groups to the spread of misinformation, disinformation, and conspiracy theories (Moran et al., 2021; Nguyen & Gokhale, 2022; Stern, 2019). One possible explanation for this association is that supporters of extremist groups recognize their outsider status in comparison to mainstream political groups, leveraging false information to manage public impressions, attract new members, and further their group’s cause. Alternatively, it could be that the beliefs promoted by extremist groups are so disconnected from our shared political reality that these groups may need to rely on falsehoods to manipulate their own followers and prevent attrition of group membership. While the exact nature of these associations remains unclear, future research should further interrogate the connection between sharing false information and support for extremism and extremist groups.

In line with previous studies (Lawson & Kakkar, 2021), our findings show that people who reported sharing false political information on social media were more likely to report higher levels of antisocial psychological characteristics. Specifically, they reported higher levels of a “need for chaos,” “dark tetrad” personality traits (a combined measure of narcissism, Machiavellianism, psychopathy, and sadism), paranoia, dogmatism, and argumentativeness when compared to respondents who did not report knowingly sharing false information on social media. Much like the Joker in the movie The Dark Knight, people who intentionally spread false information online may, at least on some level, simply want to “watch the world burn” (Arceneaux et al., 2021). Indeed, previous studies suggest that much of the toxicity of social media is not due to a “mismatch” between human psychology and online platforms (i.e., that online platforms bring out the worst in otherwise nice people); instead, such toxicity results from a relatively smaller fraction of people with status-seeking antisocial tendencies, who act overtly antisocial online, and are drawn to interactions in which they express elevated levels of aggressiveness toward others with toxic language (Bor & Petersen, 2022; Kim et al., 2021). Such observations are echoed in our own results, which showed that people who knowingly share false information online were also more likely to indicate that posting on social media gives them a greater feeling of power and control and allows them to express themselves more freely.

While research on the associations between religiosity and lying/dishonesty has shown mixed results (e.g., Desmond & Kraus, 2012; Grant et al., 2019), we found that religiosity positively predicts knowingly sharing false information online. Additionally, despite numerous studies of online activity suggesting that people on the political right are more likely to share misinformation (e.g., DeVerna et al., 2022; Garrett & Bond, 2021), our findings show no significant association between self-reported sharing of false information online and political identity or the strength of one’s partisan or ideological views.

Our findings offer a broad psychological and ideological blueprint of individuals who reported intentionally spreading false information online, implicating specific personality and attitudinal characteristics as potential motivators of such behavior. Overall, these individuals are more antagonistic and argumentative, have a higher need for chaos, and tend to be more dogmatic and religious. Additionally, they are more politically engaged and active, often in counterproductive and destructive ways, and show higher support for extremist groups. They are also more likely to get their news from fringe social media sources and feel a heightened sense of power and self-expression from their online interactions. Taken together, these findings suggest that interventions which focus on eliminating the perceived social incentives gained from intentionally spreading misinformation online (e.g., heightened feelings of satisfaction, power, and enjoyment associated with discrediting ideological outgroups, instigating chaos, and “trolling”) may be effective at attenuating this type of online behavior.

Though some research has shown promising results using general interventions such as “accuracy nudges” (Pennycook et al., 2021) and educational video games to inoculate people against misinformation (Roozenbeek et al., 2022), more direct measures may also need to be implemented by social media companies. For example, companies might consider restructuring the online environment to remove overt social incentives that may inadvertently reward pernicious behavior (e.g., reconsidering how “likes” and sharing are implemented) and instead create online ecosystems that reward more positive social media interactions. For practitioners, at the very least, our finding that some people claim to share online misinformation for reasons other than simply being duped suggests that future interventions aimed at limiting the spread of misinformation should attempt to address users who both unknowingly and knowingly share misinformation, as these two groups of users may require different interventions. More specifically, if people do not care about accuracy, then accuracy nudges will do little to prevent them from sharing misinformation. Taken together, our findings further implicate personality and attitudinal characteristics as potentially significant motivators for the spread of misinformation. As such, we join others who have called for greater integration of personality research into the study of online misinformation and the ways in which it spreads (e.g., Lawson & Kakkar, 2021; van der Linden et al., 2021).

Findings

Finding 1: Most people do not report intentionally spreading false political information online.

We asked participants to rate their agreement (“strongly agree” to “strongly disagree”) with the statement, “I share information on social media about politics even though I believe it may be false.” At best, agreement with this statement reflects a carefree disregard for the truth, a key characteristic of certain types of “bullshitting” (Frankfurt, 2009; Littrell et al., 2021a). However, at worst, strong agreement with this statement is admitting to intentional deception (i.e., lying). Though most participants disagreed with this statement, a non-trivial percentage of respondents (14%) indicated that they do intentionally share false political information on social media (Figure 1). These findings are consistent with empirical studies of similar constructs, such as lying (Buchanan & Kempley, 2021; Halevy et al., 2014; Serota & Levine, 2015) and “bullshitting” (Littrell et al., 2021a), which have shown that a small but consistent percentage of people admit to intentionally misleading others.

Notably, it is possible that the prevalence of knowingly sharing political misinformation online is somewhat underreported in our data, given that some of the spreaders of it in our sample could have denied it when responding to that item (which, ironically, would be another instance of them spreading misinformation). Indeed, some research has found that survey respondents may sometimes hide their true beliefs or express agreement or support for a specific idea they actually oppose either as a joke or to signal their group identity (e.g., Lopez & Hillygus, 2018; Schaffner & Luks, 2018; Smallpage et al., 2022). Further, self-reported measures of behavior are sometimes only weakly correlated with actual behavior (Dang et al., 2020). However, there are good reasons to have confidence in this self-reported measure. First, self-report surveys have high reliability for measuring complex psychological constructs (e.g., beliefs, attitudes, preferences) and are sometimes better at predicting real-world outcomes than behavioral measures of those same constructs (Kaiser & Oswald, 2022). Second, the percentage of respondents in our sample who admitted to spreading false political information online aligns with findings from previous research. For example, Serota and Levine (2015) found that 14.1% of their sample admitted to telling at least one “big lie” per day, while Littrell and colleagues (2021a) found 17.3% of their sample admitted to engaging in “persuasive bullshitting” on a regular basis. These numbers are similar to the 14% of our sample who self-reported knowingly sharing false information. Third, previous studies have found that self-report measures of lying and bullshitting positively correlate with behavioral measures of those same constructs (Halevy et al., 2014; Littrell et al., 2021a; Zettler et al., 2015). Given that our dependent variable captures a conceptually similar construct to those other measures, we are confident that our self-report data reflect real-world behavior, at least to a reasonable degree.

Crucially, we also found that the correlational patterns we reported across multiple variables are highly consistent and make sense with respect to what prior theory would predict of people who share information they believe to be false. Indeed, “need for chaos” has recently been shown to be a strong motivator of sharing hostile, misleading political rumors online (Petersen et al., 2023) and, as Figure 4 illustrates, “need for chaos” was also the strongest positive predictor in our study of sharing false political information online (β = .18, p < .001). Moreover, as an added test of the reliability and validity of our dependent variable, we examined correlations between responses to our measure of sharing false political information online and single-scale items that reflect similar behavioral tendencies. For instance, our dependent variable is significantly and positively correlated (r = .53, p < .001) with the statement, “Just for kicks, I’ve said mean things to people on social media,” from the Sadism subscale of our “Dark Tetrad” measure. Additionally, our dependent variable also correlated well with two conceptually similar items from the Machiavellianism subscale, “I tend to manipulate others to get my way” (r = .43, p < .001) and “I have used deceit or lied to get my way” (r = .35, p < .001). Although the sizes of these effects do not suggest that these constructs are isomorphic, it is helpful to note that our dependent variable item specifically measures sharing false information about politics on social media, which arises from a diversity of motivations, and not lying about anything and everything across all domains.

Finding 2: Reporting sharing false political information is associated with politically motivated behaviors and attitudes.

Self-reported sharing of false political information on social media was significantly and positively correlated with having contacted an elected official within the previous year (r = .24, p < .001) and with the belief that, “People like me can influence government” (r = .23, p < .001). Additionally, people who self-report sharing false political information online also reported more frequent attendance at political meetings (r = .36, p < .001) and volunteering during elections (r = .41, p < .001) compared to participants who do not report sharing false political information online.

Although these findings may give the impression that respondents reporting spreading online political misinformation are somewhat civically virtuous, these respondents also report engaging in aggressive and disruptive political behaviors. Specifically, reporting spreading false information was significantly associated with greater reported involvement in political protests (r = .40, p < .001), acts of civil disobedience (r = .44, p < .001), and political violence (r = .46, p < .001). Spreading false information online was also significantly and positively related to believing that one is qualified for public office (r = .40, p < .001) and the desire to possibly run for office one day (r = .52, p < .001), but was only weakly related to staying informed about government and current affairs (“follows politics”; r = .05, p = .024).

Finding 3: Reporting sharing false political information online is associated with support for extremist groups.

Using a sliding scale from 0 to 100, participants rated their feelings about various public figures and groups (Figure 3). While the self-reported tendency to knowingly share false political information online was weakly, but positively, associated with support for more mainstream public figures such as Donald Trump (r = .14, p < .001), Joe Biden (r = .13, p < .001), and Bernie Sanders (r = .09, p < .001), it was more strongly associated with support for Vladimir Putin (r = .40, p < .001). Likewise, self-reported sharing of false political information online was weakly but positively associated with support for the Democratic Party (r = .13, p < .001) and the Republican Party (r = .13, p < .001), but was most strongly associated with support for extremist groups such as the QAnon movement (r = .45, p < .001), Proud Boys (r = .42, p < .001), and White Nationalists (r = .42, p < .001).

Finding 4: Reporting sharing false political information online is associated with dark psychological traits.

We constructed a multiple linear regression model to better understand the extent to which various psychological, political, and demographic characteristics might underlie the proclivity to knowingly share political misinformation on social media. Holding all other variables constant, a greater “need for chaos” (β = .18, p < .001) as well as higher levels of antagonistic, “dark tetrad” personality traits (a single factor measure of narcissism, Machiavellianism, psychopathy, and sadism; β = .18, p < .001) were the strongest positive predictors of self-reported sharing of political misinformation online. Self-reported sharing of false information was also predicted by higher levels of paranoia (β = .11, p < .001), dogmatism (β = .09, p = .001), and argumentativeness (β = .06, p = .035). People who feel that posting on social media gives them greater feelings of power and control (β = .14, p < .001) and allows them to more freely express opinions and attitudes they are reluctant to express in person (β = .06, p = .038) are also more likely to report knowingly sharing false political information online. Importantly, though sharing political misinformation online is positively predicted by religiosity (β = .07, p = .003), it is not significantly associated with political identity or the strength of one’s partisan or ideological views.

Finding 5: People who report intentionally sharing false political information online are more likely to get their news from social media sites, particularly from outlets that are known for perpetuating fringe views.

On a scale from “everyday” to “never,” participants reported how often they get “information about current events, public issues, or politics” from various media sources, including offline legacy media sources (e.g., network television, cable news, local television, print newspapers, radio) and online media sources (e.g., online newspapers, blogs, YouTube, and various social media platforms). A principal components analysis of the online media sources revealed three distinct categories: 1) online mainstream news media, made up of TV news websites, online news magazines, online newspapers; 2) mainstream social media sites, such as YouTube, Facebook, Twitter, Instagram; and 3) alternative social media sites, which comprised blogs, Reddit, Truth Social, Telegram, and 8Kun (factor loadings are listed in Table A8 of the appendix). After reverse-coding the scale for analysis, we examined bivariate correlations to determine whether the proclivity to share false political information online is meaningfully associated with the types of media sources participants get their information from.

As shown in Figure 5A, reporting sharing false political information online is strongly associated with more frequent use of alternative (r = .46, p < .001) and mainstream (r = .42, p < .001) social media sites and weakly-to-moderately correlated with getting information from online (r = .20, p < .001) or offline/legacy (r = .17, p < .001) mainstream news sources. On an individual level (Figure 5B), reporting sharing false political information online was most strongly associated with getting information on current events, public issues, and politics from Truth Social (r = .41, p < .001), Telegram (r = .41, p < .001), and 8Kun (r = .41, p < .001), of which the latter two are popular among fringe groups known for promoting extremist views and conspiracy theories (Urman & Katz, 2022; Zeng & Schäfer, 2021).

Methods

We surveyed 2,001 American adults (900 male, 1,101 female; mean age = 48.54, SD = 18.51; Bachelor’s degree or higher = 43.58%) from May 26 through June 30, 2022, using Qualtrics (qualtrics.com). For this survey, Qualtrics partnered with Cint and Dynata to recruit a demographically representative sample (self-reported sex, age, race, education, and income) based on U.S. Census records. Cint and Dynata maintain panels of subjects that are only used for research, and both comply fully with European Society for Opinion and Marketing Research (ESOMAR) standards for protecting research participants’ privacy and information security. Additionally, and in keeping with Qualtrics data quality standards, responses from participants who failed six attention check items or completed the survey in less than one-half of the estimated median completion time of 18.6 minutes (calculated from a soft-launch test of the questionnaire, n = 50) were excluded from the data set. In exchange for their participation, respondents received incentives redeemable from the sample provider. These data were collected as part of a larger survey.
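The speed-based part of this exclusion rule can be sketched in a few lines. This is only an illustration, not the screening code used by the authors or by Qualtrics: the field names (`minutes`, `failed_attention`) and the example records are hypothetical, and attention-check failure is simplified here to a single boolean flag.

```python
# Minimal sketch of the exclusion rule; field names and records are hypothetical.
ESTIMATED_MEDIAN_MINUTES = 18.6  # median completion time reported in the text
MIN_MINUTES = ESTIMATED_MEDIAN_MINUTES / 2  # one-half of the median (9.3 minutes)


def keep_response(minutes: float, failed_attention: bool) -> bool:
    """Return True if a response survives both screening criteria."""
    if failed_attention:
        return False
    return minutes >= MIN_MINUTES


# Made-up records for illustration only; not the survey data.
responses = [
    {"id": 1, "minutes": 21.0, "failed_attention": False},
    {"id": 2, "minutes": 7.5, "failed_attention": False},  # faster than the cutoff
    {"id": 3, "minutes": 19.2, "failed_attention": True},  # flagged on attention checks
]
kept = [r["id"] for r in responses if keep_response(r["minutes"], r["failed_attention"])]
print(kept)
```

Under these assumptions, only the first record passes both criteria.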

Our dependent variable asked respondents to rate their agreement with the following statement using a 5-point Likert-type scale (Figure 1):

“I share information on social media about politics even though I believe it may be false.”
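As a rough sketch of how a headline share such as the reported 14% can be derived from a 5-point item, the snippet below counts respondents who agree or strongly agree. The numeric coding (1 = strongly disagree through 5 = strongly agree) is an assumption, since the text does not state how responses were coded, and the ratings are invented for illustration.

```python
from collections import Counter

# Assumed coding (not stated in the text): 1 = strongly disagree ... 5 = strongly agree.
AGREE_CODES = {4, 5}


def share_agreeing(ratings: list[int]) -> float:
    """Proportion of respondents who agree or strongly agree with the item."""
    if not ratings:
        raise ValueError("no ratings supplied")
    counts = Counter(ratings)
    return sum(counts[code] for code in AGREE_CODES) / len(ratings)


# Made-up ratings for illustration only; not the survey data.
example = [1, 1, 2, 3, 1, 4, 2, 5, 1, 2]
print(f"{share_agreeing(example):.0%}")
```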

In addition to this question, participants were also asked to rate the strength of certain political beliefs (i.e., whether they feel qualified to run for office, whether they think they might run for office one day, and whether they believe someone like them can influence government) and the frequency that they engaged in specific political behaviors in the previous 12 months (contacting elected officials, volunteering during an election, staying informed about government, and participating in political meetings, protests, civil disobedience, or violence). We calculated bivariate Pearson’s correlation coefficients for each of these variables with the item measuring whether one shares false political information on social media, which we have displayed in Table A4 of the Appendix.
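For readers less familiar with the statistic, a bivariate Pearson correlation between the dependent variable and one of these items can be computed as sketched below. The function is a plain-Python illustration rather than the authors' analysis code, and the two example lists (`share_false`, `protest_freq`) are hypothetical values, not survey data.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equally long sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sums of cross-products and squared deviations from the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical responses: agreement with the sharing item and protest frequency.
share_false = [1, 2, 1, 4, 5, 2, 3, 1]
protest_freq = [1, 1, 2, 3, 5, 2, 2, 1]
print(round(pearson_r(share_false, protest_freq), 2))
```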

Participants also used “feelings thermometers” to rate their attitudes toward a number of public political figures and groups. Each public figure or group was rated on a scale from 0 to 100, with scores of 0 to 50 reflecting negative feelings and scores from above 50 to 100 reflecting positive feelings. Although all correlations between the sharing false political information variable and the public figures and groups were statistically significant, the strongest associations were with more adversarial figures (e.g., Putin) and groups (e.g., The QAnon Movement, Proud Boys, White Nationalists). We have plotted these associations in Figure 3.

To provide a more complete description of individuals who are more likely to report intentionally sharing false political information online, we examined the predictive utility of a number of psychological attributes, political attitudes, and demographic variables in an ordinary least squares (OLS) multiple linear regression model (Figure 4). We provide precise estimates in tabular form for all predictors as well as the overall model in the Appendix.
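The sketch below illustrates one common way to obtain standardized coefficients from an OLS model: z-score every variable, then solve the normal equations. It assumes the β values reported in the Findings are standardized coefficients, which the text does not state explicitly, and it is not the authors' analysis code. The helper names (`zscores`, `solve`, `standardized_betas`) and the example values are hypothetical.

```python
import math


def zscores(values: list[float]) -> list[float]:
    """Standardize a variable to mean 0 and sample standard deviation 1."""
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))
    return [(v - mean) / sd for v in values]


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve the linear system a @ x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("predictors are collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def standardized_betas(predictors: list[list[float]], outcome: list[float]) -> list[float]:
    """OLS coefficients after z-scoring all variables (no intercept is needed)."""
    zx = [zscores(col) for col in predictors]
    zy = zscores(outcome)
    xtx = [[sum(a * b for a, b in zip(ci, cj)) for cj in zx] for ci in zx]
    xty = [sum(a * b for a, b in zip(ci, zy)) for ci in zx]
    return solve(xtx, xty)


# Hypothetical scores for two predictors and the outcome; not the survey data.
need_for_chaos = [1.0, 2.0, 2.5, 4.0, 3.0, 5.0]
paranoia = [2.0, 1.0, 3.0, 3.5, 2.0, 4.0]
share_false = [1.0, 1.0, 2.0, 4.0, 2.0, 5.0]
print([round(b, 2) for b in standardized_betas([need_for_chaos, paranoia], share_false)])
```

With a single predictor, the standardized coefficient equals the Pearson correlation, which is one way to sanity-check an implementation like this.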

Finally, participants were asked to rate 17 media sources according to the frequency (“everyday” to “never”) with which they use each for staying informed on current events, public issues, and politics. A principal components analysis revealed that the media sources represented four categories: legacy mainstream news media (network TV, cable TV, local TV, radio, and print newspapers), online mainstream news media (TV news websites, online news magazines, online newspapers), mainstream social media sites (YouTube, Facebook, Twitter, Instagram), and alternative social media sites (blogs, Reddit, Truth Social, Telegram, 8Kun). We calculated bivariate correlations between reporting sharing false political information online and the four categories of media sources as well as the 17 individual sources. We have plotted these associations in Figure 5 and provide a full list of intercorrelations for all variables as well as factor loadings from the PCA in the Appendix.

Bibliography

Allcott, H., & Gentzkow, M. (2017). Social media and fake news in the 2016 election. Journal of Economic Perspectives, 31(2), 211–236. https://doi.org/10.1257/jep.31.2.211

Arceneaux, K., Gravelle, T. B., Osmundsen, M., Petersen, M. B., Reifler, J., & Scotto, T. J. (2021). Some people just want to watch the world burn: The prevalence, psychology and politics of the ‘need for chaos’. Philosophical Transactions of the Royal Society B: Biological Sciences, 376(1822), 20200147. https://doi.org/10.1098/rstb.2020.0147

Arendt, H. (1972). Crises of the Republic: Lying in politics, civil disobedience, on violence, thoughts on politics and revolution. Houghton Mifflin Harcourt.

Armaly, M. T., & Enders, A. M. (2022). ‘Why me?’ The role of perceived victimhood in American politics. Political Behavior, 44(4), 1583–1609. https://doi.org/10.1007/s11109-020-09662-x

Bail, C., Guay, B., Maloney, E., Combs, A., Hillygus, D. S., Merhout, F., Freelon, D., & Volfovsky, A. (2019). Assessing the Russian Internet Research Agency’s impact on the political attitudes and behaviors of American Twitter users in late 2017. Proceedings of the National Academy of Sciences, 117(1), 243–250. https://doi.org/10.1073/pnas.1906420116

Berlinski, N., Doyle, M., Guess, A. M., Levy, G., Lyons, B., Montgomery, J. M., Nyhan, B., & Reifler, J. (2021). The effects of unsubstantiated claims of voter fraud on confidence in elections. Journal of Experimental Political Science, 10(1), 34–49. https://doi.org/10.1017/XPS.2021.18

Bizumic, B., & Duckitt, J. (2018). Investigating right wing authoritarianism with a very short authoritarianism scale. Journal of Social and Political Psychology, 6(1), 129–150. https://doi.org/10.5964/jspp.v6i1.835

Bor, A., & Petersen, M. B. (2022). The psychology of online political hostility: A comprehensive, cross-national test of the mismatch hypothesis. American Political Science Review, 116(1), 1–18. https://doi.org/10.1017/S0003055421000885

Bryanov, K., & Vziatysheva, V. (2021). Determinants of individuals’ belief in fake news: A scoping review determinants of belief in fake news. PLOS ONE, 16(6), e0253717. https://doi.org/10.1371/journal.pone.0253717

Buchanan, T., & Benson, V. (2019). Spreading disinformation on Facebook: Do trust in message source, risk propensity, or personality affect the organic reach of “fake news”? Social Media + Society, 5(4), 2056305119888654. https://doi.org/10.1177/2056305119888654

Buchanan, T., & Kempley, J. (2021). Individual differences in sharing false political information on social media: Direct and indirect effects of cognitive-perceptual schizotypy and psychopathy. Personality and Individual Differences, 182, 111071. https://doi.org/10.1016/j.paid.2021.111071

Buhr, K., & Dugas, M. J. (2002). The intolerance of uncertainty scale: Psychometric properties of the English version. Behaviour Research and Therapy, 40(8), 931–945. https://doi.org/10.1016/S0005-7967(01)00092-4

Celse, J., & Chang, K. (2019). Politicians lie, so do I. Psychological Research, 83(6), 1311–1325. https://doi.org/10.1007/s00426-017-0954-7

Choi, T. R., & Sung, Y. (2018). Instagram versus Snapchat: Self-expression and privacy concern on social media. Telematics and Informatics, 35(8), 2289–2298. https://doi.org/10.1016/j.tele.2018.09.009

Chun, J. W., & Lee, M. J. (2017). When does individuals’ willingness to speak out increase on social media? Perceived social support and perceived power/control. Computers in Human Behavior, 74, 120–129. https://doi.org/10.1016/j.chb.2017.04.010

Conrad, K. J., Riley, B. B., Conrad, K. M., Chan, Y.-F., & Dennis, M. L. (2010). Validation of the Crime and Violence Scale (CVS) against the Rasch measurement model including differences by gender, race, and age. Evaluation Review, 34(2), 83–115. https://doi.org/10.1177/0193841X10362162

Costello, T. H., Bowes, S. M., Stevens, S. T., Waldman, I. D., Tasimi, A., & Lilienfeld, S. O. (2022). Clarifying the structure and nature of left-wing authoritarianism. Journal of Personality and Social Psychology, 122(1), 135–170. https://doi.org/10.1037/pspp0000341

Courchesne, L., Ilhardt, J., & Shapiro, J. N. (2021). Review of social science research on the impact of countermeasures against influence operations. Harvard Kennedy School (HKS) Misinformation Review, 2(5). https://doi.org/10.37016/mr-2020-79

Crawford, J. R., & Henry, J. D. (2004). The positive and negative affect schedule (PANAS): Construct validity, measurement properties and normative data in a large non-clinical sample. British Journal of Clinical Psychology, 43(3), 245–265. https://doi.org/10.1348/0144665031752934

Dang, J., King, K. M., & Inzlicht, M. (2020). Why are self-report and behavioral measures weakly correlated? Trends in Cognitive Sciences, 24(4), 267–269. https://doi.org/10.1016/j.tics.2020.01.007

Del Vicario, M., Bessi, A., Zollo, F., Petroni, F., Scala, A., Caldarelli, G., Stanley, H. E., & Quattrociocchi, W. (2016). The spreading of misinformation online. Proceedings of the National Academy of Sciences, 113(3), 554–559. https://doi.org/10.1073/pnas.1517441113

DePaulo, B. M., Kashy, D. A., Kirkendol, S. E., Wyer, M. M., & Epstein, J. A. (1996). Lying in everyday life. Journal of Personality and Social Psychology, 70(5), 979–995. https://doi.org/10.1037/0022-3514.70.5.979

Desmond, S. A., & Kraus, R. (2012). Liar, liar: Adolescent religiosity and lying to parents. Interdisciplinary Journal of Research on Religion, 8, 1–26. https://www.religjournal.com/pdf/ijrr08005.pdf

DeVerna, M. R., Guess, A. M., Berinsky, A. J., Tucker, J. A., & Jost, J. T. (2022). Rumors in retweet: Ideological asymmetry in the failure to correct misinformation. Personality and Social Psychology Bulletin, 01461672221114222. https://doi.org/10.1177/01461672221114222

Durand, M.-A., Yen, R. W., O’Malley, J., Elwyn, G., & Mancini, J. (2020). Graph literacy matters: Examining the association between graph literacy, health literacy, and numeracy in a Medicaid eligible population. PLOS ONE, 15(11), e0241844. https://doi.org/10.1371/journal.pone.0241844

Ecker, U. K. H., Sze, B. K. N., & Andreotta, M. (2021). Corrections of political misinformation: No evidence for an effect of partisan worldview in a US convenience sample. Philosophical Transactions of the Royal Society B: Biological Sciences, 376(1822), 20200145. https://doi.org/10.1098/rstb.2020.0145

Edelson, J., Alduncin, A., Krewson, C., Sieja, J. A., & Uscinski, J. E. (2017). The effect of conspiratorial thinking and motivated reasoning on belief in election fraud. Political Research Quarterly, 70(4), 933–946. https://doi.org/10.1177/1065912917721061

Enders, A. M., Uscinski, J., Klofstad, C., & Stoler, J. (2022). On the relationship between conspiracy theory beliefs, misinformation, and vaccine hesitancy. PLOS ONE, 17(10), e0276082. https://doi.org/10.1371/journal.pone.0276082

Frankfurt, H. G. (2009). On bullshit. Princeton University Press.

Garrett, R. K. (2017). The “echo chamber” distraction: Disinformation campaigns are the problem, not audience fragmentation. Journal of Applied Research in Memory and Cognition, 6(4), 370–376. https://doi.org/10.1016/j.jarmac.2017.09.011

Garrett, R. K., & Bond, R. M. (2021). Conservatives’ susceptibility to political misperceptions. Science Advances, 7(23), eabf1234. https://doi.org/10.1126/sciadv.abf1234

Garrett, R. K., Long, J. A., & Jeong, M. S. (2019). From partisan media to misperception: Affective polarization as mediator. Journal of Communication, 69(5), 490–512. https://doi.org/10.1093/joc/jqz028

Grant, J. E., Paglia, H. A., & Chamberlain, S. R. (2019). The phenomenology of lying in young adults and relationships with personality and cognition. Psychiatric Quarterly, 90(2), 361–369. https://doi.org/10.1007/s11126-018-9623-2

Green, C. E. L., Freeman, D., Kuipers, E., Bebbington, P., Fowler, D., Dunn, G., & Garety, P. A. (2008). Measuring ideas of persecution and social reference: The Green et al. Paranoid Thought Scales (GPTS). Psychological Medicine, 38(1), 101–111. https://doi.org/10.1017/S0033291707001638

Grinberg, N., Joseph, K., Friedland, L., Swire-Thompson, B., & Lazer, D. (2019). Fake news on Twitter during the 2016 U.S. presidential election. Science, 363(6425), 374–378. https://doi.org/10.1126/science.aau2706

Guess, A., Nyhan, B., & Reifler, J. (2020). Exposure to untrustworthy websites in the 2016 U.S. election. Nature Human Behaviour, 4(5), 472–480. https://doi.org/10.1038/s41562-020-0833-x

Guess, A. M., & Lyons, B. A. (2020). Misinformation, disinformation, and online propaganda. In N. Persily & J. A. Tucker (Eds.), Social media and democracy: The state of the field and prospects for reform (pp. 10–33). Cambridge University Press. https://doi.org/10.1017/9781108890960

Ha, L., Graham, T., & Gray, J. (2022). Where conspiracy theories flourish: A study of YouTube comments and Bill Gates conspiracy theories. Harvard Kennedy School (HKS) Misinformation Review, 3(5). https://doi.org/10.37016/mr-2020-107

Halevy, R., Shalvi, S., & Verschuere, B. (2014). Being honest about dishonesty: Correlating self-reports and actual lying. Human Communication Research, 40(1), 54–72. https://doi.org/10.1111/hcre.12019

Hughes, A. (2019). A small group of prolific users account for a majority of political tweets sent by U.S. adults. Pew Research Center. https://pewrsr.ch/35YXMrM

Johnson, T. J., Wallace, R., & Lee, T. (2022). How social media serve as a super-spreader of misinformation, disinformation, and conspiracy theories regarding health crises. In J. H. Lipschultz, K. Freberg, & R. Luttrell (Eds.), The Emerald handbook of computer-mediated communication and social media (pp. 67–84). Emerald Publishing Limited. https://doi.org/10.1108/978-1-80071-597-420221005

Jonason, P. K., & Webster, G. D. (2010). The dirty dozen: A concise measure of the dark triad. Psychological Assessment, 22(2), 420–432. https://doi.org/10.1037/a0019265

Kaiser, C., & Oswald, A. J. (2022). The scientific value of numerical measures of human feelings. Proceedings of the National Academy of Sciences, 119(42), e2210412119. https://doi.org/10.1073/pnas.2210412119

Kim, J. W., Guess, A., Nyhan, B., & Reifler, J. (2021). The distorting prism of social media: How self-selection and exposure to incivility fuel online comment toxicity. Journal of Communication, 71(6), 922–946. https://doi.org/10.1093/joc/jqab034

Lasser, J., Aroyehun, S. T., Simchon, A., Carrella, F., Garcia, D., & Lewandowsky, S. (2022). Social media sharing of low-quality news sources by political elites. PNAS Nexus, 1(4). https://doi.org/10.1093/pnasnexus/pgac186

Lawson, M. A., & Kakkar, H. (2021). Of pandemics, politics, and personality: The role of conscientiousness and political ideology in the sharing of fake news. Journal of Experimental Psychology: General, 151(5), 1154–1177. https://doi.org/10.1037/xge0001120

Littrell, S., Risko, E. F., & Fugelsang, J. A. (2021a). The bullshitting frequency scale: Development and psychometric properties. British Journal of Social Psychology, 60(1), e12379. https://doi.org/10.1111/bjso.12379

Littrell, S., Risko, E. F., & Fugelsang, J. A. (2021b). ‘You can’t bullshit a bullshitter’ (or can you?): Bullshitting frequency predicts receptivity to various types of misleading information. British Journal of Social Psychology, 60(4), 1484–1505. https://doi.org/10.1111/bjso.12447

Lopez, J., & Hillygus, D. S. (2018, March 14). Why so serious?: Survey trolls and misinformation. SSRN. http://dx.doi.org/10.2139/ssrn.3131087

MacKenzie, A., & Bhatt, I. (2020). Lies, bullshit and fake news: Some epistemological concerns. Postdigital Science and Education, 2(1), 9–13. https://doi.org/10.1007/s42438-018-0025-4

Marwick, A., & Lewis, R. (2017). Media manipulation and disinformation online. Data & Society Research Institute. https://datasociety.net/library/media-manipulation-and-disinfo-online/

McClosky, H., & Chong, D. (1985). Similarities and differences between left-wing and right-wing radicals. British Journal of Political Science, 15(3), 329–363. https://doi.org/10.1017/S0007123400004221

Metzger, M. J., Flanagin, A. J., Mena, P., Jiang, S., & Wilson, C. (2021). From dark to light: The many shades of sharing misinformation online. Media and Communication, 9(1), 134–143. https://doi.org/10.17645/mac.v9i1.3409

Moran, R. E., Prochaska, S., Schlegel, I., Hughes, E. M., & Prout, O. (2021). Misinformation or activism: Mapping networked moral panic through an analysis of #savethechildren. AoIR Selected Papers of Internet Research, 2021. https://doi.org/10.5210/spir.v2021i0.12212

Mosleh, M., & Rand, D. G. (2022). Measuring exposure to misinformation from political elites on Twitter. Nature Communications, 13, 7144. https://doi.org/10.1038/s41467-022-34769-6

Nguyen, H., & Gokhale, S. S. (2022). Analyzing extremist social media content: A case study of Proud Boys. Social Network Analysis and Mining, 12(1), 115. https://doi.org/10.1007/s13278-022-00940-6

Okamoto, S., Niwa, F., Shimizu, K., & Sugiman, T. (2001). The 2001 survey for public attitudes towards and understanding of science and technology in Japan. National Institute of Science and Technology Policy Ministry of Education, Culture, Sports, Science and Technology. https://nistep.repo.nii.ac.jp/record/4385/files/NISTEP-NR072-SummaryE.pdf

Paulhus, D. L., Buckels, E. E., Trapnell, P. D., & Jones, D. N. (2020). Screening for dark personalities. European Journal of Psychological Assessment, 37(3), 208–222. https://doi.org/10.1027/1015-5759/a000602

Pennycook, G., Epstein, Z., Mosleh, M., Arechar, A. A., Eckles, D., & Rand, D. G. (2021). Shifting attention to accuracy can reduce misinformation online. Nature, 592(7855), 590–595. https://doi.org/10.1038/s41586-021-03344-2

Pennycook, G., & Rand, D. G. (2020). Who falls for fake news? The roles of bullshit receptivity, overclaiming, familiarity, and analytic thinking. Journal of Personality, 88(2), 185–200. https://doi.org/10.1111/jopy.12476

Pennycook, G., & Rand, D. G. (2021). The psychology of fake news. Trends in Cognitive Sciences, 25(5), 388–402. https://doi.org/10.1016/j.tics.2021.02.007

Petersen, M. B., Osmundsen, M., & Arceneaux, K. (2023). The “need for chaos” and motivations to share hostile political rumors. American Political Science Review, 1–20. https://doi.org/10.1017/S0003055422001447

Romer, D., & Jamieson, K. H. (2021). Patterns of media use, strength of belief in Covid-19 conspiracy theories, and the prevention of Covid-19 from March to July 2020 in the United States: Survey study. Journal of Medical Internet Research, 23(4), e25215. https://doi.org/10.2196/25215

Roozenbeek, J., van der Linden, S., Goldberg, B., Rathje, S., & Lewandowsky, S. (2022). Psychological inoculation improves resilience against misinformation on social media. Science Advances, 8(34), eabo6254. https://doi.org/10.1126/sciadv.abo6254

Sanderson, Z., Brown, M. A., Bonneau, R., Nagler, J., & Tucker, J. A. (2021). Twitter flagged Donald Trump’s tweets with election misinformation: They continued to spread both on and off the platform. Harvard Kennedy School (HKS) Misinformation Review, 2(4). https://doi.org/10.37016/mr-2020-77

Schaffner, B. F., & Luks, S. (2018). Misinformation or expressive responding? What an inauguration crowd can tell us about the source of political misinformation in surveys. Public Opinion Quarterly, 82(1), 135–147. https://doi.org/10.1093/poq/nfx042

Serota, K. B., & Levine, T. R. (2015). A few prolific liars: Variation in the prevalence of lying. Journal of Language and Social Psychology, 34(2), 138–157. https://doi.org/10.1177/0261927X14528804

Smallpage, S. M., Enders, A. M., Drochon, H., & Uscinski, J. E. (2022). The impact of social desirability bias on conspiracy belief measurement across cultures. Political Science Research and Methods, 11(3), 555–569. https://doi.org/10.1017/psrm.2022.1

Starbird, K. (2019). Disinformation’s spread: Bots, trolls and all of us. Nature, 571(7766), 449–450. https://doi.org/10.1038/d41586-019-02235-x

Stern, A. M. (2019). Proud Boys and the white ethnostate: How the alt-right is warping the American imagination. Beacon Press.

Sunstein, C. R. (2021). Liars: Falsehoods and free speech in an age of deception. Oxford University Press.

Tucker, J. A., Guess, A., Barberá, P., Vaccari, C., Siegel, A., Sanovich, S., Stukal, D., & Nyhan, B. (2018). Social media, political polarization, and political disinformation: A review of the scientific literature. SSRN. https://dx.doi.org/10.2139/ssrn.3144139

Urman, A., & Katz, S. (2022). What they do in the shadows: Examining the far-right networks on Telegram. Information, Communication & Society, 25(7), 904–923. https://doi.org/10.1080/1369118X.2020.1803946

Uscinski, J., Enders, A., Seelig, M. I., Klofstad, C. A., Funchion, J. R., Everett, C., Wuchty, S., Premaratne, K., & Murthi, M. N. (2021). American politics in two dimensions: Partisan and ideological identities versus anti-establishment orientations. American Journal of Political Science, 65(4), 773–1022. https://doi.org/10.1111/ajps.12616

Uscinski, J., Enders, A. M., Klofstad, C., & Stoler, J. (2022). Cause and effect: On the antecedents and consequences of conspiracy theory beliefs. Current Opinion in Psychology, 47, 101364. https://doi.org/10.1016/j.copsyc.2022.101364

van der Linden, S., Roozenbeek, J., Maertens, R., Basol, M., Kácha, O., Rathje, S., & Traberg, C. S. (2021). How can psychological science help counter the spread of fake news? The Spanish Journal of Psychology, 24, e25. https://doi.org/10.1017/SJP.2021.23

Vincent, E. M., Théro, H., & Shabayek, S. (2022). Measuring the effect of Facebook’s downranking interventions against groups and websites that repeatedly share misinformation. Harvard Kennedy School (HKS) Misinformation Review, 3(3). https://doi.org/10.37016/mr-2020-100

Vosoughi, S., Roy, D., & Aral, S. (2018). The spread of true and false news online. Science, 359(6380), 1146–1151. https://doi.org/10.1126/science.aap9559

Vraga, E. K., & Bode, L. (2020). Defining misinformation and understanding its bounded nature: Using expertise and evidence for describing misinformation. Political Communication, 37(1), 136–144. https://doi.org/10.1080/10584609.2020.1716500

Xiao, X., Borah, P., & Su, Y. (2021). The dangers of blind trust: Examining the interplay among social media news use, misinformation identification, and news trust on conspiracy beliefs. Public Understanding of Science, 30(8), 977–992. https://doi.org/10.1177/0963662521998025

Zeng, J., & Schäfer, M. S. (2021). Conceptualizing “dark platforms.” Covid-19-related conspiracy theories on 8kun and Gab. Digital Journalism, 9(9), 1321–1343. https://doi.org/10.1080/21670811.2021.1938165

Zettler, I., Hilbig, B. E., Moshagen, M., & de Vries, R. E. (2015). Dishonest responding or true virtue? A behavioral test of impression management. Personality and Individual Differences, 81, 107–111. https://doi.org/10.1016/j.paid.2014.10.007

Funding

This research was funded by grants from the National Science Foundation #2123635 and #2123618.

Competing Interests

All authors declare no competing interests.

Ethics

Approval for this study was granted by the University of Miami Human Subject Research Office on May 13, 2022 (Protocol #20220472).

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

All materials needed to replicate this study are available via the Harvard Dataverse: https://doi.org/10.7910/DVN/AWNAKN