Peer Reviewed

What do we study when we study misinformation? A scoping review of experimental research (2016-2022)

We reviewed 555 papers published from 2016–2022 that presented misinformation to participants. We identified several trends in the literature—increasing frequency of misinformation studies over time, a wide variety of topics covered, and a significant focus on COVID-19 misinformation since 2020. We also identified several important shortcomings, including overrepresentation of samples from the United States and Europe and excessive emphasis on short-term consequences of brief, text-based misinformation. Most studies examined belief in misinformation as the primary outcome. While many researchers identified behavioural consequences of misinformation exposure as a pressing concern, we observed a lack of research directly investigating behaviour change.

Research Question

- What populations, materials, topics, methods, and outcomes are common in published misinformation research from 2016–2022?

Essay Summary

- The goal of this review was to identify the scope of methods and measures used in assessing the impact of real-world misinformation.

- We screened 8,469 papers published between 2016 and 2022, finding 555 papers with 759 studies where participants were presented with misinformation.

- The vast majority of studies included samples from the United States or Europe, used brief text-based misinformation (1–2 sentences), measured belief in the misinformation as a primary outcome, and had no delay between misinformation exposure and measurement of the outcome.

- The findings highlight certain elements of misinformation research that are currently underrepresented in the literature. In particular, we note the need for more diverse samples, measurement of behaviour change in response to misinformation, and assessment of the longer-term consequences of misinformation exposure.

- Very few papers directly examined effects of misinformation on behaviour (1%) or behavioural intentions (10%), instead measuring proxy outcomes such as belief or attitudes. Nevertheless, many papers draw conclusions regarding the consequences of misinformation for real-world behaviour.

- We advise caution in inferring behavioural consequences unless behaviours (or behavioural intentions) are explicitly measured.

- We recommend that policymakers reflect on the specific outcomes they hope to influence and consider whether extant evidence indicates that their efforts are likely to be successful.

Implications

In this article, we report a scoping review of misinformation research from 2016-2022. A scoping review is a useful evidence synthesis approach that is particularly appropriate when the purpose of the review is to identify knowledge gaps or investigate research conduct across a body of literature (Munn et al., 2018). Our review investigates the methods used in misinformation research since interest in so-called “fake news” spiked in the wake of the 2016 U.S. presidential election and the Brexit referendum vote. While previous publications have reflected critically on the current focus and future pathways for the field (Camargo & Simon, 2022), here we address a simple question: what do we study when we study misinformation? We are interested in the methods, outcomes, and samples that are commonly used in misinformation research and what that might tell us about our focus and blind spots.

Our review covers studies published from January 2016 to July 2022 and includes any studies where misinformation was presented to participants by researchers. The misinformation had to be related to real-world information (i.e., not simple eyewitness misinformation effects), and the researchers had to measure participants’ response to the misinformation as a primary outcome. As expected, we found an increase in misinformation research over time, from just three studies matching our criteria in 2016 to 312 published in 2021. As the number of studies has grown, so too has the range of topics covered. The three studies published in 2016 all assessed political misinformation, but by 2021, just 35% of studies addressed this issue, while the remainder examined other topics, including climate change, vaccines, nutrition, immigration, and more. COVID-19 became a huge focus for misinformation researchers in late 2020, and our review includes over 200 studies that used COVID-related materials. Below we discuss some implications and recommendations for the field based on our findings.

Call for increased diversity & ecological validity

It has been previously noted that the evidence base for understanding misinformation is skewed by pragmatic decisions affecting the topics that researchers choose to study. For example, Altay and colleagues (2023) argue that misinformation researchers typically focus on social media because it is methodologically convenient, and that this can give rise to the false impression that misinformation is a new phenomenon or one solely confined to the internet. Our findings highlight many other methodological conveniences that affect our understanding of misinformation relating to samples, materials, and experimental design.

Our findings clearly show that certain populations and types of misinformation are well-represented in the literature. In particular, the majority of studies (78%) drew on samples from the United States or Europe. Though the spread of misinformation is widely recognised as a global phenomenon (Lazer et al., 2018), countries outside the United States and Europe are underrepresented in misinformation research. We recommend more diverse samples for future studies, as well as studies that assess interventions across multiple countries at once (e.g., Porter & Wood, 2021). Before taking action, policymakers should take note of whether claims regarding the spread of misinformation or the effectiveness of particular interventions have been tested in their jurisdictions and consider whether effects are likely to generalise to other contexts.

There are growing concerns about large-scale disinformation campaigns and how they may threaten democracies (Nagasako, 2020; Tenove, 2020). For example, research has documented elaborate Russian disinformation campaigns reaching individuals via multiple platforms, delivery methods, and media formats (Erlich & Garner, 2023; Wilson & Starbird, 2020). Our review of the misinformation literature suggests that most studies do not evaluate conditions that are relevant to these disinformation campaigns. Most studies present misinformation in a very brief format, comprising a single presentation of simple text. Moreover, most studies do not include a delay between presentation and measurement of the outcome. This may be due to ethical concerns, which are, of course, of crucial importance when conducting misinformation research (Greene et al., 2022). Nevertheless, this has implications for policymakers, who may draw on research that does not resemble the real-world conditions in which disinformation campaigns are likely to play out. For example, there is evidence to suggest that repeated exposure can increase the potency of misinformation (Fazio et al., 2022; Pennycook et al., 2018), and some studies have found evidence of misinformation effects strengthening over time (Murphy et al., 2021). These variables are typically studied in isolation, and we therefore have an incomplete understanding of how they might interact in large-scale campaigns in the real world. This means that policymakers may make assumptions about which messages are likely to influence citizens based on one or two variables (for example, a news story’s source or the political congeniality of its content) without considering the impact of other potentially interacting variables, such as the delay between information exposure and the target action (e.g., voting in an election) or the number of times an individual is likely to have seen the message.

In sum, we would recommend a greater focus on ecologically valid methods to assess misinformation that is presented in multiple formats, across multiple platforms, on repeated occasions, and over a longer time interval. We also encourage future research that is responsive to public and policymaker concerns with regard to misinformation. For example, a misinformation-related topic that is frequently covered in news media is the looming threat of deepfake technology and the dystopian future it may herald (Broinowski, 2022). However, deepfakes were very rarely examined in the studies we reviewed (nine studies in total).

Our review contributes to a growing debate as to how we should measure the effectiveness of misinformation interventions. Some have argued that measuring discernment (the ability to distinguish true from false information) is key (Guay et al., 2023). For example, in assessing whether an intervention is effective, we should consider the effects of the intervention on belief in fake news (as most studies naturally do) but also consider effects on belief in true news—that is, news items that accurately describe true events. This reflects the idea that while believing and sharing misinformation can lead to obvious dangers, not believing or not sharing true information may also be costly. Interventions that encourage skepticism towards media and news sources might cause substantial harm if they undercut trust in real news, particularly as true news is so much more prevalent than fake news (Acerbi et al., 2022). In our review, less than half of the included studies presented participants with both true and false information. Of those that did present true information, just 15% reported a measure of discernment (7% of all included studies), though there was some indication that this outcome measure has been more commonly reported in recent years. We recommend that future studies consider including both true items and a measure of discernment, particularly when assessing susceptibility to fake news or evaluating an intervention. Furthermore, policymakers should consider the possibility of unintended consequences if interventions aiming to reduce belief in misinformation are employed without due consideration of their effects on trust in news more generally.

Is misinformation likely to change our behaviour?

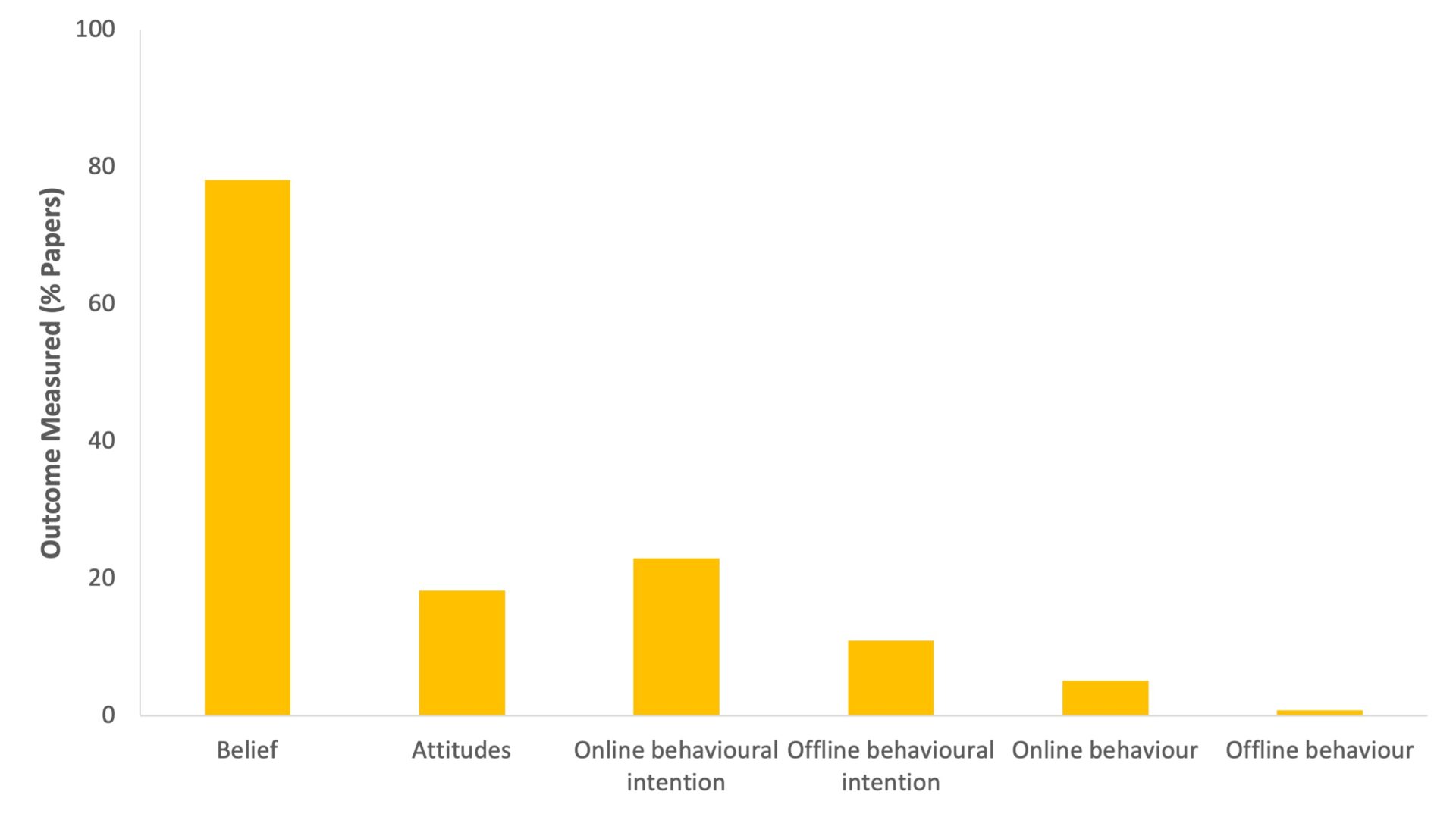

Many of our most pressing social concerns related to misinformation centre on the possibility of false information inciting behaviour change: for example, that political misinformation might have a causal effect on how we decide to vote, or that health misinformation might have a causal effect on refusal of vaccination or treatment. In the current review, we found the most common outcome measure was belief in misinformation (78% of studies), followed by attitudes towards the target of the misinformation (18% of studies). While it is, of course, of interest to examine how misinformation can change beliefs and attitudes, decades of research have shown that information provision is often ineffective at meaningfully changing attitudes (Albarracin & Shavitt, 2018), and even where such an intervention is successful, attitude change is not always sufficient to induce behavioural change (Verplanken & Orbell, 2022).

When assessing whether misinformation can affect behaviour, previous research has reported mixed results. Loomba et al. (2021) found that exposure to COVID vaccine misinformation reduced intentions to get vaccinated, but other studies have reported null or inconsistent effects (Aftab & Murphy, 2022; de Saint Laurent et al., 2022; Greene & Murphy, 2021; Guess et al., 2020). The current review highlights the small number of studies that have examined offline behavioural intentions (10% of papers reviewed) or offline behaviour itself (< 1% of studies) as an outcome of misinformation exposure. Our findings reveal a mismatch between the stated goals and methodology of research, where many papers conceive of misinformation as a substantial problem and may cite behavioural outcomes (such as vaccine refusal) as the driver of this concern, but the studies instead measure belief. We acknowledge that studying real-world effects of misinformation presents some significant challenges, both practical (we cannot follow people into the voting booth or doctor’s office) and ethical (e.g., if experimental presentation of misinformation has the potential to cause real-world harm to participants or society). Moreover, it can be exceptionally difficult to identify causal links between information exposure and complex behaviours such as voting (Aral & Eckles, 2019). Nevertheless, we recommend that where researchers have an interest in behaviour change, they should endeavour to measure that as part of their study. Where a study has only measured beliefs, attitudes, or sharing intentions, we should refrain from drawing conclusions with regard to behaviour.

From a policy perspective, those who are concerned about misinformation, such as governments and social media companies, ought to clearly specify whether these concerns relate to beliefs or behaviour, or both. Behaviour change is not the only negative outcome that may result from exposure to misinformation—confusion and distrust in news sources are also significant outcomes that many policymakers may wish to address. We recommend that policymakers reflect on the specific outcomes they hope to influence and consider whether extant evidence indicates that their efforts are likely to be successful. For example, if the goal is to reduce belief in or sharing of misinformation, there may be ample evidence to support a particular plan of action. On the other hand, if the goal is to affect a real-world behaviour such as vaccine uptake, our review suggests that the jury is still out. Policymakers may, therefore, be best advised to lend their support to new research aiming to explicitly address the question of behaviour change in response to misinformation. Specifically, we suggest that funding should be made available by national and international funding bodies to directly evaluate the impacts of misinformation in the real world.

Findings

Finding 1: Studies assessing the effects of misinformation on behaviour are rare.

As shown in Table 1, the most commonly recorded outcome by far was belief in the misinformation presented (78% of studies), followed by attitudes (18.31%). Online behavioural intentions, like intention to share (18.05%) or intention to like or comment on a social media post (5.01%), were more commonly measured than offline behavioural intentions (10.94%), like planning to get vaccinated. A tiny proportion of studies (1.58%) measured actual behaviour and how it may change as a result of misinformation exposure. Even then, just one study (0.13%) assessed real-world behaviour, namely speed of tapping keys in a lab experiment (Bastick, 2021); all other studies assessed online behaviour such as sharing of news articles or liking social media posts. Thus, no studies in this review assessed the kind of real-world behaviour targeted by misinformation, such as vaccine uptake or voting behaviour.

| Outcomes | n | Percentage (%) |
|---|---|---|
| Belief in Misinformation | 593 | 78.13% |
| Attitudes (e.g., towards topic or person) | 139 | 18.31% |
| Online Behavioural Intention (Sharing) | 137 | 18.05% |
| Offline Behavioural Intention | 83 | 10.94% |
| Familiarity | 41 | 5.40% |
| Online Behavioural Intention (Other) | 38 | 5.01% |
| Emotional Reactions | 18 | 2.37% |
| Perceptions of News Source | 17 | 2.24% |
| False Memory | 12 | 1.58% |
| Recall | 11 | 1.45% |
| Gaze Behaviour (Eye-Tracking) | 8 | 1.05% |
| Level of Interest | 6 | 0.79% |
| Online Behaviour (Other) | 6 | 0.79% |
| Online Behaviour (Sharing) | 5 | 0.66% |
| Offline Behaviour | 1 | 0.13% |
| Other | 101 | 13.31% |

Note: Percentages do not sum to 100% as some studies measured more than one outcome (e.g., if a study measured belief in misinformation and sharing intention, then both were selected).
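The multi-select coding described in the note can be illustrated with a minimal sketch. The function name, outcome labels, and toy data below are hypothetical and are not taken from the review's extraction template; the sketch only shows why per-outcome percentages computed against the number of studies can sum to more than 100%.

```python
from collections import Counter


def outcome_percentages(studies):
    """Return {outcome: percentage of studies that measured it}, to 2 d.p.

    `studies` is a list in which each element lists the outcomes coded
    for one study (a study may have several outcomes).
    """
    counts = Counter()
    for outcomes in studies:
        # set() ensures an outcome is counted at most once per study
        counts.update(set(outcomes))
    total = len(studies)
    return {name: round(100 * n / total, 2) for name, n in counts.items()}


# Hypothetical toy data: four studies, each coded with one or more outcomes.
toy_studies = [
    ["belief"],
    ["belief", "sharing_intention"],
    ["attitudes"],
    ["belief", "attitudes"],
]

percentages = outcome_percentages(toy_studies)
print(percentages)
print(sum(percentages.values()))  # exceeds 100 because outcomes overlap
```

Applying the same arithmetic to the belief row of Table 1, 593 of 759 studies gives 78.13%.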

Finding 2: Studies in this field overwhelmingly use short, text-based misinformation.

The most common format for presenting misinformation was text only (62.71% of included studies), followed by text accompanied by an image (32.41%). Use of other formats was rare: video only (1.84%), text and video (1.32%), images only (< 1%), and audio only (< 1%).

Of the studies that used textual formats (with or without additional accompanying media), the majority (62.72%) presented between one and two sentences of text. An additional 17.50% presented misinformation in a longer paragraph (more than two sentences), 12.92% presented a page or more, and 6.86% did not specify the length of misinformation text presented.

The most frequent framings for the misinformation presented were news stories (44.27%), presentation with no context (33.47%), and Facebook posts (16.47%). Less frequent framings included Twitter posts (7.64%), other social media posts (8.04%), other types of webpages (2.11%), fact checks of news stories (1.58%), and government and public communications (0.26%).

Very few studies presented doctored media to participants; a small number (1.19%) presented deepfake videos and 1.05% presented other forms of doctored media.

Finding 3: Most studies assess outcomes instantly.

Fewer than 7% of studies (n = 52) reported any delay between exposure to the misinformation and measurement of the outcome. Many did not specify exactly how long the delay was (n = 30); where it was specified, most delays were a week or less: 1–2 minutes (n = 4), 5–10 minutes (n = 4), 1 day–1 week (n = 13), 3 weeks (n = 1), and 1–6 months (n = 2).

Finding 4: Most participants were from the USA or Europe.

The majority of participants sampled were from the United States (49.93%), followed by Europe (28.19%) (see Table 2). All other regions each accounted for 6% or less of the total number of participants sampled, such as East Asia (5.53%), Africa (5.27%) and the Middle East (4.74%). Furthermore, 102 studies (13.26%) did not specify from where they sampled participants.

| Region | n | Percentage (%) |
|---|---|---|
| USA | 379 | 49.93% |
| North America (excluding USA) | 10 | 1.32% |
| Central/South America | 18 | 2.37% |
| Europe | 214 | 28.19% |
| Middle East (including Egypt) | 36 | 4.74% |
| Africa (excluding Egypt) | 40 | 5.27% |
| South Asia | 22 | 2.90% |
| East Asia | 42 | 5.53% |
| Southeast Asia | 27 | 3.56% |
| Asia (Other) | 2 | 0.26% |
| Europe/Asia | 7 | 0.92% |
| Oceania | 13 | 1.71% |
| Not specified | 102 | 13.26% |

Note: “Europe/Asia” refers to transcontinental Eurasian countries, such as Türkiye, Azerbaijan, and Russia. Where the region sampled was not explicitly stated but could be inferred based on the materials presented to participants or other references to specific regions, the inferred region was recorded (e.g., a study that presented participants with misinformation relating to U.S. politics was recorded as USA).

Finding 5: COVID-19 became a major focus of misinformation research.

Political misinformation was the most commonly studied topic until 2021, when COVID-related misinformation research became the dominant focus of the field (see Table 3 for a full breakdown of the topics included in the selected studies). Overall, experimental misinformation research is on the rise. Our review included one paper from 2016, 12 papers from 2017, 18 papers from 2018, 48 papers from 2019, 123 papers from 2020, 231 papers from 2021, and 122 papers for the first half of 2022.

| Year | Politics | Health (vaccines) | Health (nutrition) | Health (COVID) | Health (other) | Science (climate change) | Science (other) | Immigration | Other | Total Studies in Year |
|---|---|---|---|---|---|---|---|---|---|---|
| 2016 | 3 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 3 |
| 2017 | 11 (57.89%) | 7 (36.84%) | 0 (0%) | 0 (0%) | 1 (5.26%) | 5 (26.32%) | 0 (0%) | 2 (10.53%) | 3 (15.79%) | 19 |
| 2018 | 8 (38.09%) | 3 (14.29%) | 0 (0%) | 0 (0%) | 5 (23.81%) | 1 (4.76%) | 1 (4.76%) | 1 (4.76%) | 9 (42.86%) | 21 |
| 2019 | 35 (53.03%) | 11 (16.67%) | 1 (1.51%) | 0 (0%) | 13 (19.70%) | 8 (12.12%) | 3 (4.55%) | 2 (3.03%) | 13 (19.70%) | 66 |
| 2020 | 84 (48%) | 23 (13.14%) | 14 (8%) | 29 (16.57%) | 36 (20.57%) | 13 (7.43%) | 14 (8%) | 16 (9.14%) | 63 (36%) | 175 |
| 2021 | 110 (35.26%) | 44 (14.10%) | 19 (6.09%) | 111 (35.58%) | 57 (18.23%) | 25 (8.01%) | 11 (3.53%) | 17 (5.45%) | 70 (22.44%) | 312 |
| 2022 | 58 (35.58%) | 7 (4.29%) | 3 (1.84%) | 84 (51.53%) | 26 (15.95%) | 10 (6.13%) | 2 (1.23%) | 9 (5.52%) | 31 (19.02%) | 163 |
| Total | 309 | 95 | 37 | 224 | 138 | 62 | 31 | 47 | 189 | 759 |

Note: Where studies presented participants with misinformation on more than one topic, all relevant topics were selected. Topics categorised as “Health (other)” include e-cigarettes, cancer, genetically modified foods, and other viruses. Topics categorised as “Other” include crime, historical conspiracies, celebrity misinformation, commercial misinformation, and misinformation that was not specified.

Finding 6: Most studies do not report discernment between true and false information.

In total, 340 studies (45.12%) presented participants with both true and false information. Of these studies, 52 (15.29%) reported a measure of discernment based on participants’ ability to discriminate between true and false information (e.g., the difference in standardised sharing intention scores between true and false items). Across the entire review then, fewer than 7% of studies report discernment between true and false information as an outcome. There was some indication that measurement of discernment is becoming more common over time; no studies included in the review reported a measure of discernment prior to 2019, and 48 out of the 52 studies that did measure discernment were published between 2020 and 2022.
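As a rough illustration of the kind of discernment score mentioned above (the difference in standardised scores between true and false items), the following sketch computes one value per participant. The function name, the rating scale, and the choice to standardise within a participant's own ratings are assumptions made for illustration; the included studies may have standardised differently.

```python
from statistics import mean, pstdev


def discernment(ratings):
    """Mean standardised score for true items minus that for false items.

    `ratings` is a list of (is_true, score) pairs for one participant and
    must contain at least one true and one false item.
    """
    scores = [score for _, score in ratings]
    mu, sigma = mean(scores), pstdev(scores)
    if sigma == 0:
        # Identical ratings for every item: no discrimination at all.
        return 0.0
    z_true = [(s - mu) / sigma for is_true, s in ratings if is_true]
    z_false = [(s - mu) / sigma for is_true, s in ratings if not is_true]
    return mean(z_true) - mean(z_false)


# Hypothetical sharing-intention ratings on a 1-7 scale.
participant = [(True, 6), (True, 5), (False, 2), (False, 3)]
score = discernment(participant)
print(score)  # positive: true items were rated higher than false items
```

A positive score indicates that true items were rated higher than false items, and a score near zero indicates no discrimination. An intervention that lowers ratings for true and false items equally would leave this score unchanged, which is why it complements a belief-only outcome.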

Methods

A search was conducted to identify studies that presented participants with misinformation and measured their responses (e.g., belief in misinformation) after participants were exposed to misinformation. All studies must have been published since January 2016, with an English-language version available in a peer-reviewed journal. The final search for relevant records was carried out on 16 July 2022. Searches were carried out in three databases (Scopus, Web of Science, and PsycINFO) using the search terms “misinformation” OR “fake news” OR “disinformation” OR “fabricated news” OR “false news.” The search strategy, inclusion criteria, and extraction templates were preregistered at https://osf.io/d5hrj/.

Inclusion criteria

There were two primary criteria for inclusion in the current scoping review. A study was eligible for inclusion if it (i) presented participants with misinformation with any potential for real-world consequences and (ii) measured participants’ responses to this misinformation (e.g., belief in the misinformation, intentions to share the misinformation) as a main outcome.

Exclusion criteria

Studies were excluded if they presented participants with misinformation of no real-world consequence (e.g., misinformation about a simulated crime, fabricated stories about fictitious plane crashes, misinformation about fictional persons that were introduced during the course of an experiment). If the misinformation was only relevant within the narrow confines of an experiment, we considered the paper ineligible. Furthermore, studies were excluded if they presented participants with general knowledge statements (e.g., trivia statements) or if they presented participants with misleading claims that were not clearly inaccurate (e.g., a general exaggeration of the benefits of a treatment). Studies were also excluded if the misinformation was only presented in the context of a debunking message, as were studies where the misinformation was presented as a hypothetical statement (e.g., “imagine if we told you that …”, “how many people do you think believe that…”). Studies of eyewitness memory were excluded, as were any studies not published in English. Finally, opinion pieces, commentaries, systematic reviews, or observational studies were excluded.

Originally, only experimental studies were to be included in the review. However, upon screening the studies, it became apparent that distinguishing between experiments, surveys, and intervention-based research was sometimes difficult—for example, cross-sectional studies exploring individual differences in fake news susceptibility might not be classified as true experiments (as they lack control groups and measure outcomes at only one time point), but they were clearly relevant to our aims. To avoid arbitrary decisions, we decided to drop this requirement and instead included all articles that met the inclusion criteria.

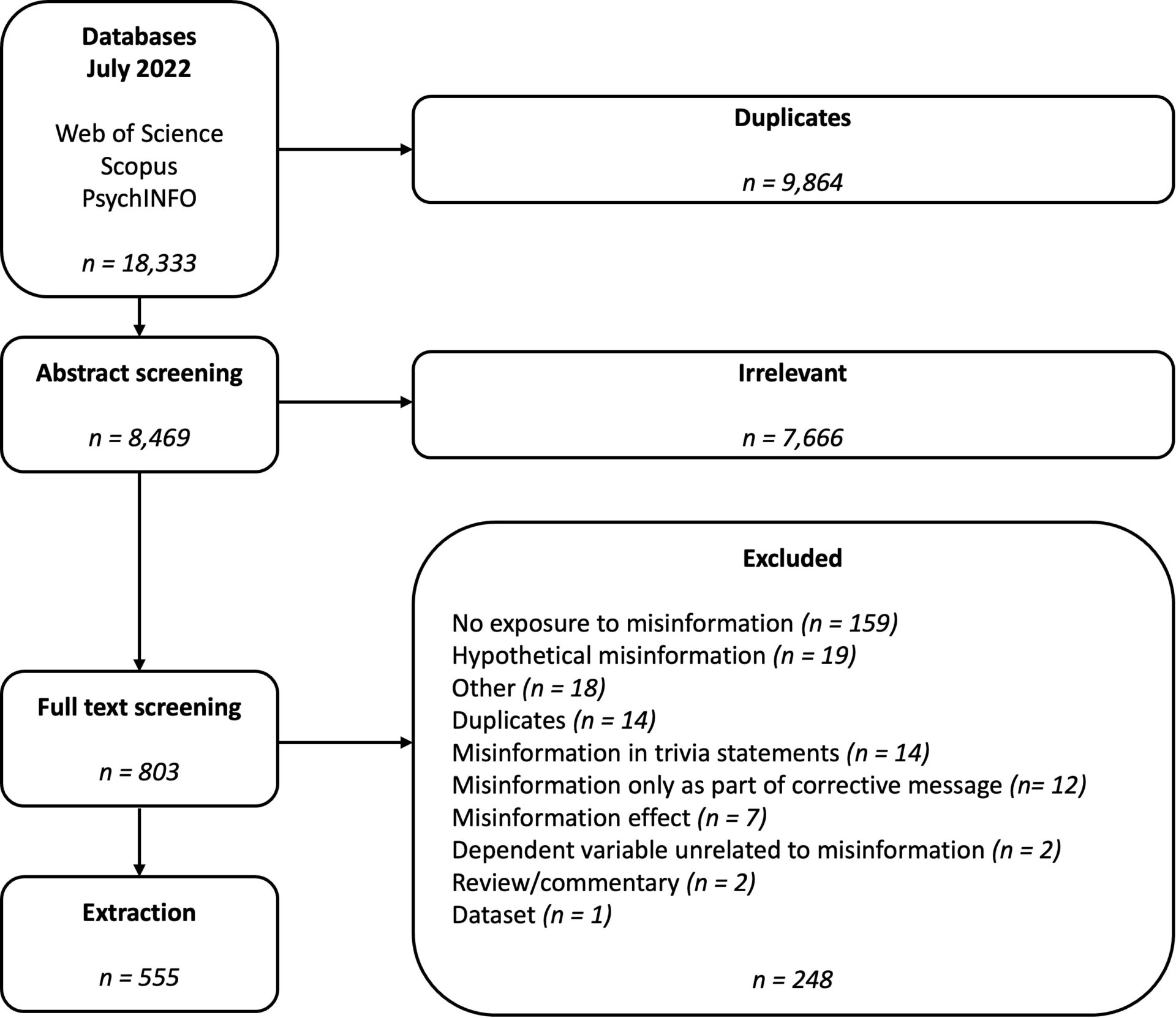

Screening and selection process

The search strategy yielded a total of 18,333 records (see Figure 2 for a summary of the screening process). Curious readers may note that a Google Scholar search for the search terms listed above produces a substantially different number of hits, though the number will vary from search to search. This lack of reproducibility in Google Scholar searches is one of many reasons why Google Scholar is not recommended for use in systematic reviews, and the three databases employed here are preferred (Gusenbauer & Haddaway, 2020; also see Boeker et al., 2013; Bramer et al., 2016). Following the removal of duplicates (n = 9,864), a total of 8,469 records were eligible to be screened. The titles and abstracts of the 8,469 eligible records were screened by six reviewers working in pairs, with a seventh reviewer resolving conflicts where they arose (weighted Cohen’s κ = 0.81). A total of 7,666 records were removed at this stage, as the records did not meet the criteria of the scoping review.
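For readers unfamiliar with the agreement statistic reported above, the following is a minimal sketch of a weighted Cohen's κ for two raters. The source does not state the decision categories or the weighting scheme, so the linear weights, the category labels, and the function name here are all assumptions for illustration only.

```python
def weighted_kappa(rater_a, rater_b, categories):
    """Linearly weighted Cohen's kappa for two raters.

    `categories` lists the ordered decision labels (at least two).
    Both raters must not each use a single identical category throughout,
    otherwise expected disagreement is zero and kappa is undefined.
    """
    k = len(categories)
    index = {label: i for i, label in enumerate(categories)}
    n = len(rater_a)

    # Joint proportion table of the two raters' decisions.
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        observed[index[a]][index[b]] += 1 / n

    row = [sum(observed[i]) for i in range(k)]
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    disagree_obs = 0.0
    disagree_exp = 0.0
    for i in range(k):
        for j in range(k):
            weight = abs(i - j) / (k - 1)  # linear disagreement weight
            disagree_obs += weight * observed[i][j]
            disagree_exp += weight * row[i] * col[j]
    return 1 - disagree_obs / disagree_exp


# Hypothetical screening decisions for four records.
a = ["include", "exclude", "exclude", "include"]
b = ["include", "exclude", "include", "include"]
kappa = weighted_kappa(a, b, ["exclude", "include"])
print(kappa)
```

With only two categories the weights reduce to ordinary (unweighted) Cohen's κ; weighting only matters when there are three or more ordered categories, such as exclude, unsure, and include.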

The full texts of the remaining 803 records were then screened by four reviewers working in pairs, with conflicts resolved by discussion within the pair concerned (weighted Cohen’s κ = 0.68). Among the 803 records, 248 records were excluded (see Figure 2 for reasons for exclusion). Thus, there were 555 papers included for extraction, with a total of 759 studies included therein. An alphabetical list of all included articles is provided in the Appendix, and the full data file listing all included studies and their labels is available at https://osf.io/3apkt/.
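The record counts reported in this section can be checked with simple arithmetic. The variable names below are illustrative; the numbers are those stated in the text.

```python
# Counts reported in the screening and selection process.
records_identified = 18_333
duplicates_removed = 9_864
excluded_title_abstract = 7_666
excluded_full_text = 248

# Each stage's output is the previous stage's input minus its exclusions.
records_screened = records_identified - duplicates_removed
full_texts_assessed = records_screened - excluded_title_abstract
papers_included = full_texts_assessed - excluded_full_text

print(records_screened, full_texts_assessed, papers_included)
```

The three derived values match the 8,469 screened records, 803 full texts, and 555 included papers reported above.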

Topics

- Impact

- Psychology

- Research

Bibliography

Acerbi, A., Altay, S., & Mercier, H. (2022). Fighting misinformation or fighting for information? Harvard Kennedy School (HKS) Misinformation Review, 3(1). https://doi.org/10.37016/mr-2020-87

Aftab, O., & Murphy, G. (2022). A single exposure to cancer misinformation may not significantly affect related behavioural intentions. HRB Open Research, 5(82), 82. https://doi.org/10.12688/hrbopenres.13640.1

Albarracin, D., & Shavitt, S. (2018). Attitudes and attitude change. Annual Review of Psychology, 69, 299–327. https://doi.org/10.1146/annurev-psych-122216-011911

Altay, S., Berriche, M., & Acerbi, A. (2023). Misinformation on misinformation: Conceptual and methodological challenges. Social Media + Society, 9(1), 20563051221150412. https://doi.org/10.1177/20563051221150412

Aral, S., & Eckles, D. (2019). Protecting elections from social media manipulation. Science, 365(6456), 858–861. https://doi.org/10.1126/science.aaw8243

Bastick, Z. (2021). Would you notice if fake news changed your behavior? An experiment on the unconscious effects of disinformation. Computers in Human Behavior, 116, 106633. https://doi.org/10.1016/j.chb.2020.106633

Boeker, M., Vach, W., & Motschall, E. (2013). Google Scholar as replacement for systematic literature searches: Good relative recall and precision are not enough. BMC Medical Research Methodology, 13(1), 131. https://doi.org/10.1186/1471-2288-13-131

Bramer, W. M., Giustini, D., & Kramer, B. M. R. (2016). Comparing the coverage, recall, and precision of searches for 120 systematic reviews in Embase, MEDLINE, and Google Scholar: A prospective study. Systematic Reviews, 5(1), 39. https://doi.org/10.1186/s13643-016-0215-7

Broinowski, A. (2022). Deepfake nightmares, synthetic dreams: A review of dystopian and utopian discourses around deepfakes, and why the collapse of reality may not be imminent—yet. Journal of Asia-Pacific Pop Culture, 7(1), 109–139. https://doi.org/10.5325/jasiapacipopcult.7.1.0109

Camargo, C. Q., & Simon, F. M. (2022). Mis- and disinformation studies are too big to fail: Six suggestions for the field’s future. Harvard Kennedy School (HKS) Misinformation Review, 3(5). https://doi.org/10.37016/mr-2020-106

de Saint Laurent, C., Murphy, G., Hegarty, K., & Greene, C. M. (2022). Measuring the effects of misinformation exposure and beliefs on behavioural intentions: A COVID-19 vaccination study. Cognitive Research: Principles and Implications, 7(1), 87. https://doi.org/10.1186/s41235-022-00437-y

Erlich, A., & Garner, C. (2023). Is pro-Kremlin disinformation effective? Evidence from Ukraine. The International Journal of Press/Politics, 28(1), 5–28. https://doi.org/10.1177/19401612211045221

Fazio, L. K., Pillai, R. M., & Patel, D. (2022). The effects of repetition on belief in naturalistic settings. Journal of Experimental Psychology: General, 151(10), 2604–2613. https://doi.org/10.1037/xge0001211

Greene, C. M., de Saint Laurent, C., Murphy, G., Prike, T., Hegarty, K., & Ecker, U. K. (2022). Best practices for ethical conduct of misinformation research: A scoping review and critical commentary. European Psychologist, 28(3), 139–150. https://doi.org/10.1027/1016-9040/a000491

Greene, C. M., & Murphy, G. (2021). Quantifying the effects of fake news on behavior: Evidence from a study of COVID-19 misinformation. Journal of Experimental Psychology: Applied, 27(4), 773–784. https://doi.org/10.1037/xap0000371

Guay, B., Berinsky, A. J., Pennycook, G., & Rand, D. (2023). How to think about whether misinformation interventions work. Nature Human Behaviour, 7, 1231–1233. https://doi.org/10.1038/s41562-023-01667-w

Guess, A. M., Lockett, D., Lyons, B., Montgomery, J. M., Nyhan, B., & Reifler, J. (2020). “Fake news” may have limited effects beyond increasing beliefs in false claims. Harvard Kennedy School (HKS) Misinformation Review, 1(1). https://doi.org/10.37016/mr-2020-004

Gusenbauer, M., & Haddaway, N. R. (2020). Which academic search systems are suitable for systematic reviews or meta-analyses? Evaluating retrieval qualities of Google Scholar, PubMed, and 26 other resources. Research Synthesis Methods, 11(2), 181–217. https://doi.org/10.1002/jrsm.1378

Lazer, D. M. J., Baum, M. A., Benkler, Y., Berinsky, A. J., Greenhill, K. M., Menczer, F., Metzger, M. J., Nyhan, B., Pennycook, G., Rothschild, D., Schudson, M., Sloman, S. A., Sunstein, C. R., Thorson, E. A., Watts, D. J., & Zittrain, J. L. (2018). The science of fake news. Science, 359(6380), 1094–1096. https://doi.org/10.1126/science.aao2998

Loomba, S., de Figueiredo, A., Piatek, S. J., de Graaf, K., & Larson, H. J. (2021). Measuring the impact of COVID-19 vaccine misinformation on vaccination intent in the UK and USA. Nature Human Behaviour, 5(3), 337–348. https://doi.org/10.1038/s41562-021-01056-1

Munn, Z., Peters, M. D., Stern, C., Tufanaru, C., McArthur, A., & Aromataris, E. (2018). Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC Medical Research Methodology, 18. https://doi.org/10.1186/s12874-018-0611-x

Murphy, G., Lynch, L., Loftus, E., & Egan, R. (2021). Push polls increase false memories for fake news stories. Memory, 29(6), 693–707. https://doi.org/10.1080/09658211.2021.1934033

Nagasako, T. (2020). Global disinformation campaigns and legal challenges. International Cybersecurity Law Review, 1(1–2), 125–136. https://doi.org/10.1365/s43439-020-00010-7

Pennycook, G., Cannon, T. D., & Rand, D. G. (2018). Prior exposure increases perceived accuracy of fake news. Journal of Experimental Psychology: General, 147(12), 1865–1880. https://doi.org/10.1037/xge0000465

Porter, E., & Wood, T. J. (2021). The global effectiveness of fact-checking: Evidence from simultaneous experiments in Argentina, Nigeria, South Africa, and the United Kingdom. Proceedings of the National Academy of Sciences, 118(37), e2104235118. https://doi.org/10.1073/pnas.2104235118

Tenove, C. (2020). Protecting democracy from disinformation: Normative threats and policy responses. The International Journal of Press/Politics, 25(3), 517–537. https://doi.org/10.1177/1940161220918740

Verplanken, B., & Orbell, S. (2022). Attitudes, habits, and behavior change. Annual Review of Psychology, 73, 327–352. https://doi.org/10.1146/annurev-psych-020821-011744

Wilson, T., & Starbird, K. (2020). Cross-platform disinformation campaigns: lessons learned and next steps. Harvard Kennedy School (HKS) Misinformation Review, 1(1). https://doi.org/10.37016/mr-2020-002

Funding

This project was funded by the Health Research Board of Ireland – COV19-2020-030. The funding body had no role in the design, interpretation, or reporting of the research.

Competing Interests

The authors declare no competing interests.

Ethics

This review protocol was exempt from ethics approval.

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

All materials needed to replicate this study are available via the Harvard Dataverse at https://doi.org/10.7910/DVN/X1YH6S and OSF at https://osf.io/3apkt/.