Peer Reviewed

Assessing misinformation recall and accuracy perceptions: Evidence from the COVID-19 pandemic

Misinformation is ubiquitous; however, the extent and heterogeneity in public uptake of it remains a matter of debate. We address these questions by exploring Americans’ ability to recall prominent misinformation during the COVID-19 pandemic and the factors associated with accuracy perceptions of these claims. Comparing reported recall rates of real and “placebo” headlines, we estimate “true” recall of misinformation is lower than self-reporting suggests but still troubling. Supporters of President Trump, particularly strong news consumers, were most likely to believe misinformation, including ideologically dissonant claims. These findings point to the importance of tailoring corrections to address key correlates of misinformation uptake.

Research Questions

- As misinformation spread in the early days of the pandemic, to what extent could Americans faithfully recall prominent misinformation about COVID-19?

- What factors are most strongly associated with accuracy perceptions of misinformation, including misinformation from foreign sources?

- What political characteristics are most strongly associated with susceptibility to misinformation, and how do they interact with news consumption?

Essay Summary

- Using a national survey of U.S. adults [n = 1,045] fielded from May 2–3, 2020, we examined the extent of public recall of prominent misinformation about the COVID-19 pandemic and the factors associated with accuracy perceptions of those claims.

- Across all categories, “true” recall (self-reported recall of actual misinformation headlines minus self-reported recall of “placebo” headlines) averaged 7%. While significantly lower than self-reported recall, this figure is significantly higher than past estimates of true recall of electoral misinformation, suggesting that the threat has grown in recent years and in new contexts.

- Approval of President Trump, even more than partisanship, was a strong predictor of incorrectly believing misinformation to be true.

- Trump supporters were also more susceptible to believing ideologically incongruent misinformation propagated by foreign sources.

- Trump supporters who were high news consumers were the most likely to believe misinformation, suggesting the need for tailored correction interventions and strategies based on the correlates of misinformation uptake.

Implications

As the 2024 election approaches, a familiar admonition sounds: the threat of foreign influence in U.S. elections through the spread of misinformation. Whereas the threat from Russia featured prominently in the last two major elections, concerns about China have increasingly taken center stage. As American attitudes and U.S. policy toward China have become more acrimonious since 2020, China has worked to amplify Russian misinformation while also expanding its own misinformation campaigns on social media abroad (Harold et al., 2021; Repnikova, 2022). Although research on misinformation has thrived, particularly since the 2016 elections, scholars remain divided on the actual consequences of the threat (Guess et al., 2020). Just because misinformation exists does not mean that individuals are either exposed to it or take up that misinformation. And the threat, rather than being general, could be more acute among some demographics than others (Hall Jamieson & Albarracín, 2020; Uscinski et al., 2020).

The debate about the scale of the threat posed by misinformation remains lively in part because of problematic measurement. For example, one Kaiser Family Foundation report found that 78% of the public believes or is unsure about misinformation around the COVID-19 pandemic. However, the conclusions were based on a study that exposed individuals to a range of myths and asked whether individuals believed those to be true or false (Palosky, 2021). Other approaches emphasize the sheer volume of false claims as a proxy for the magnitude of the threat. For example, by November 2020, Facebook alone claimed to have labeled 167 million posts as false and fully removed more than 16 million posts for violating its misinformation policies (Clarke, 2021). These approaches produce sensational stories but may be misleading. Prior research on the 2016 U.S. presidential election suggests that self-reported misinformation recall rates may be significantly inflated by false recall (Allcott & Gentzkow, 2017; Oliver & Wood, 2014). Questions of recall may be increasingly important, given growing concerns about the capacity of new technologies, such as artificial intelligence-enabled language models, for creating credible misinformation at scale (Kreps et al., 2022).

We studied both the extent to which Americans faithfully recall misinformation and the factors associated with wrongly judging misinformation credible in the context of the COVID-19 infodemic. Whether citizens are able to faithfully recall misinformation may be even more important in a public health setting than in an electoral context. During an electoral campaign, false claims can affect assessments of a candidate, and those effects can persist even after the false claim is forgotten (Lodge et al., 1995). However, the extent of public recall of false claims, particularly about COVID-19 treatments, is important for shaping the extent to which those claims will lead people to adopt fake treatments and threaten public health.

To estimate “true” recall of prominent misinformation about the COVID-19 pandemic, we fielded a national survey of U.S. adults from May 2–3, 2020, and asked subjects to evaluate three types of headlines: real headlines from reputable news outlets that faithfully reported what, at the time, was the best current understanding of the COVID-19 pandemic; misinformation headlines that had circulated widely on social media; and placebo headlines, that is, false claims of equal plausibility (or, perhaps more accurately, implausibility) that we invented and that had not circulated widely on social media or other news outlets. We estimate true recall as the percentage self-reporting recall of actual prominent misinformation claims minus the percentage self-reporting recall of corresponding placebo headlines (Allcott & Gentzkow, 2017).

We found that true recall of COVID-19 misinformation was significantly lower than self-reports suggested but significantly higher than previous estimates of true recall of political misinformation during the 2016 election. Across all substantive categories, we estimated that true recall of pandemic misinformation averaged 7%; by contrast, Allcott & Gentzkow (2017) estimated true recall of just over 1% for false claims from the 2016 election. This does not mean that most Americans were not exposed to misinformation about COVID-19 treatments or that misinformation did not indirectly influence beliefs and behaviors (Bridgman et al., 2020; Enders et al., 2020; Lodge et al., 1995; Loomba et al., 2021). However, it does suggest that most of this false information had been forgotten and was no longer readily accessible or salient in most Americans’ minds (Zaller, 1992) at the time of our survey.

While true recall rates of misinformation were significantly lower than self-reported recall, our data points to another, oft-overlooked aspect of the over-saturated media environment about the pandemic: many struggled to correctly identify factual information as true. This is particularly concerning for real headlines about treatments for the virus, which only 41% of respondents, on average, correctly identified as true. This suggests the need for even greater and more consistent public health messaging of key facts to break through a chaotic and conflicting media environment.

Our analysis also showed that political lenses affected not just the uptake of political misinformation, but also public health misinformation. While past research has found evidence of partisan and ideological divides in susceptibility to COVID-19 misinformation (Calvillo et al., 2020; Hall Jamieson & Albarracín, 2020), we found that support for President Trump was a stronger predictor of believing misinformation than partisan affiliations. Trump supporters were significantly more likely to believe all categories of misinformation than non-Trump supporters—even ideologically incongruent misinformation from Chinese sources blaming the United States for the pandemic and praising the efficacy of China’s response to it. Moreover, Trump supporters were not simply more likely to believe all claims about COVID-19; they were no more or less likely to believe that real headlines about the pandemic were true. This finding speaks to the critical importance of President Trump as a cue-giver (Uscinski et al., 2020) and conduit of pandemic misinformation (Evanega et al., 2020), rather than an intractable partisan divide. This has important implications for efforts to combat the spread of false claims and complements research showing that partisan divides in susceptibility to misinformation and conspiratorial beliefs are context-dependent (Enders et al., 2022).

Further, while past research has examined the relationship between news consumption and susceptibility to misinformation (Hall Jamieson & Albarracín, 2020; Enders et al., 2023), we found that this relationship was strongest among Trump supporters. Among strong news consumers, Trump supporters were more than twice as likely to believe pandemic misinformation as were those who did not support Trump. However, among low news consumers, the gap in propensity to believe misinformation was substantively small. A better understanding of precisely who is most likely to believe misinformation and why, coupled with recent research on the conditions under which corrections are (and are not) effective (Bailard et al., 2022; Carey et al., 2022), can inform more robust efforts to guard against misinformation.

These findings validate the attention that scholars have devoted to the study of misinformation corrections in recent years (Kreps & Kriner, 2022; Porter & Wood, 2022). Our findings corroborate the basis of those studies, which is that misinformation abounds and that individuals are exposed to it; however, our findings about true recall rates add important context about the scale of the threat, and our analyses of variation in accuracy perceptions speak to significant heterogeneity in misinformation uptake. Taken together, our findings suggest the importance of more targeted interventions tailored to address the threat among groups most susceptible to misinformation uptake.

Findings

Finding 1: “True” recall of misinformation is significantly lower than self-reported recall.

Each survey respondent was asked to evaluate a series of headlines that were either real, prominent misinformation, or “placebo” (i.e., fake headlines invented by the researchers that had not circulated widely on social media). Topically, the headlines spanned two substantive categories: 1) headlines about the origins of/government response to COVID-19, and 2) headlines about treatments for the virus. For each headline, respondents were asked whether they recalled seeing the claim reported or discussed in recent months; respondents could answer “yes,” “no,” or “unsure.”

The top panel of Figure 1 plots the average percentage of respondents reporting that they remembered hearing about the headline claims across the six categories. Several findings are of note. First, across both topical categories, self-reported recall was highest for real headlines. On average, 58% of respondents recalled seeing the information in real headlines about the virus’s origins or the government response to it, and 50% recalled seeing the information in real headlines about treatments for the virus.

Second, a significant share of respondents reported recalling many false claims prominent in online misinformation. On average, 37% of respondents reported recalling prominent false claims about the virus’s origins/the nature of the government’s response, and 30% reported having seen prominent misinformation headlines about COVID-19 treatments. These self-reported figures are more than double self-reported recall of misinformation in the 2016 election (Allcott & Gentzkow, 2017).

However, our data also suggests that these high self-reported recall figures are grossly inflated. Significant shares of our sample also reported recalling our placebo headlines. We estimate true recall as the percentage self-reporting recall of actual prominent misinformation claims minus the percentage self-reporting recall of the placebo headlines in the same category (Allcott & Gentzkow, 2017). The bottom panel of Figure 1 presents our estimates of true recall of misinformation for each category. We estimate that true recall of prominent false claims about the virus’s origins/government response was approximately 11%. Perhaps more importantly, our estimate of true recall of misinformation about fake treatments for COVID-19 was just over 3%. These figures are significantly lower than self-reported recall, and they suggest that “true recall” of even some of the most prominent fake news claims (such as claims that drinking chlorine kills COVID-19) that provoked a flurry of media coverage and extensive fact-checking/debunking is limited. [Footnote 1: For a parallel analysis of the percentages who reported recalling and believing headlines across categories, see Appendix Figure A1.]

Finding 2: Americans were generally better at identifying misinformation as false—including misinformation promoted by foreign sources—than factual headlines as true.

After answering our recall question, respondents evaluated the accuracy of each headline. Moreover, to examine whether Americans were more or less susceptible to believing misinformation propagated by foreign sources, after evaluating the headlines discussed previously, respondents also evaluated the accuracy of three misinformation claims advanced on social media by Chinese government sources. Figure 2 presents the percentage of respondents who identified each category of headline as “true,” “false,” or who reported being “unsure.” Correct responses are indicated with numbers in a larger font. Respondents were generally better at flagging misinformation headlines as false than spotting real headlines as true. For example, 61% of respondents, on average, correctly identified misinformation headlines about COVID-19 treatments as false (60% similarly flagged our placebo treatment headlines as false), while only 41%, on average, correctly identified real headlines about virus treatments as true. Most respondents also correctly flagged misinformation promoted by Chinese government sources as false (61%), but one in five, on average, said these claims were true. The most important exception is that respondents struggled to identify misinformation claims about the origins of/response to the pandemic as false (only 46% correctly did so, on average).

Finding 3: Approval of Trump was a stronger predictor than partisanship of believing misinformation – including that advanced by foreign sources.

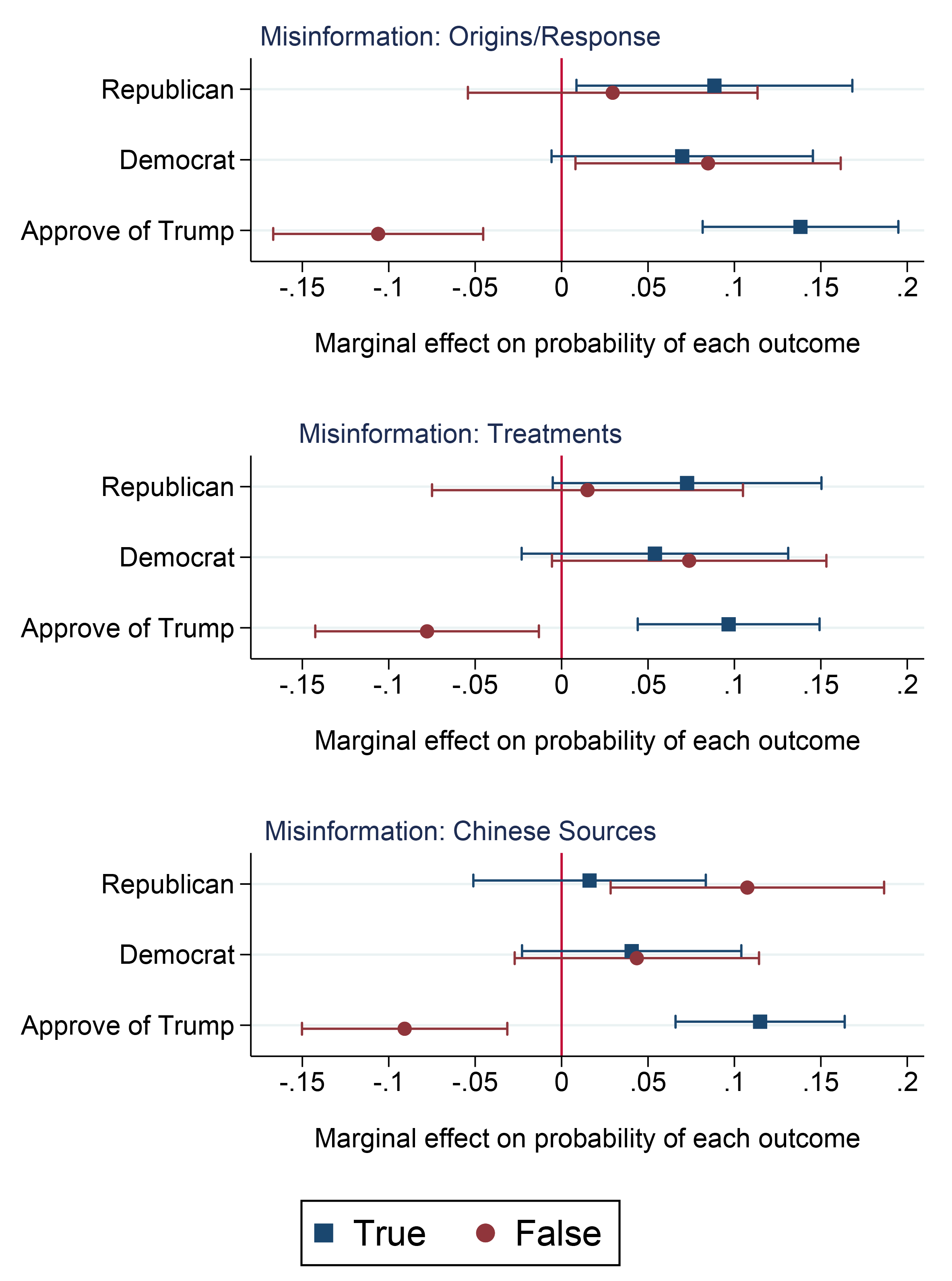

To explore the factors associated with believing false claims, we estimated multinomial logit regressions modeling accuracy perceptions of each category of misinformation headline (origins/response; treatments; Chinese sources) as a function of partisan indicators for Democrats and Republicans; an indicator identifying respondents who approve of President Trump’s job performance; and a series of demographic control variables including educational attainment, gender, race, and age. [Footnote 2: For the associations between these demographic variables and accuracy perceptions, see Appendix Table A5.] While partisanship and approval of Trump were strongly related, a sizeable number of partisans held incongruent assessments of Trump in our survey. Support for Trump was high among co-partisan Republicans in our sample (over 80%) but not universal. Almost 15% of Democrats in our sample approved of Trump’s job performance, as did almost 40% of independents. [Footnote 3: These partisan approval figures are broadly comparable to those observed in contemporaneous Gallup surveys. See Gallup Presidential Job Approval Center, https://news.gallup.com/interactives/507569/presidential-job-approval-center.aspx] This allowed us to examine the relative strength of the relationship between susceptibility to believing misinformation and partisanship vs. affinity toward the former president, who has a long track record of trafficking conspiracy theories (Hellinger, 2019) and whom past research identified as a major disseminator of COVID-19 misinformation in particular (Evanega et al., 2020).

After controlling for opinions toward Trump, we found little evidence of significant partisan divides across categories. [Footnote 4: Alternate analyses presented in the Appendix from models that exclude Trump approval find several statistically significant but substantively modest partisan differences (Appendix Figure A2).] While some studies have found evidence that Republicans are more likely to believe misinformation about the pandemic than are Democrats (Calvillo et al., 2020; Freiling et al., 2023), others have found relatively scant evidence of major partisan divides (Hall Jamieson & Albarracín, 2020). In additional analyses, we also explored whether strong partisans from both sides of the aisle are more susceptible to COVID-19 misinformation (Druckman et al., 2021), but we found little evidence of this dynamic in our data. By contrast, the model finds that support for President Trump was a strong and significant predictor of accuracy perceptions. Trump supporters were 14% more likely to believe misinformation about the virus’s origins/government response and 10% more likely to believe misinformation about treatments than were respondents who did not support Trump, all else equal. [Footnote 5: Trump supporters were also significantly more likely to believe our placebo misinformation headlines (Appendix Figure A5).] Perhaps surprisingly, Trump supporters were also more likely (11%) to believe misinformation disseminated by Chinese sources, even though these false claims blamed the United States for the outbreak of the pandemic and praised China’s response to it as uniquely successful. This suggests that Trump supporters were not only more likely to believe in conspiracy theories or false treatments that were broadly consistent with narratives on conservative media (and sometimes those of the President himself). Rather, they were also more susceptible to believing misinformation broadly, even ideologically incongruent claims that blamed the United States for the pandemic and implicitly criticized the Trump administration’s handling of it by praising China’s response as uniquely successful.

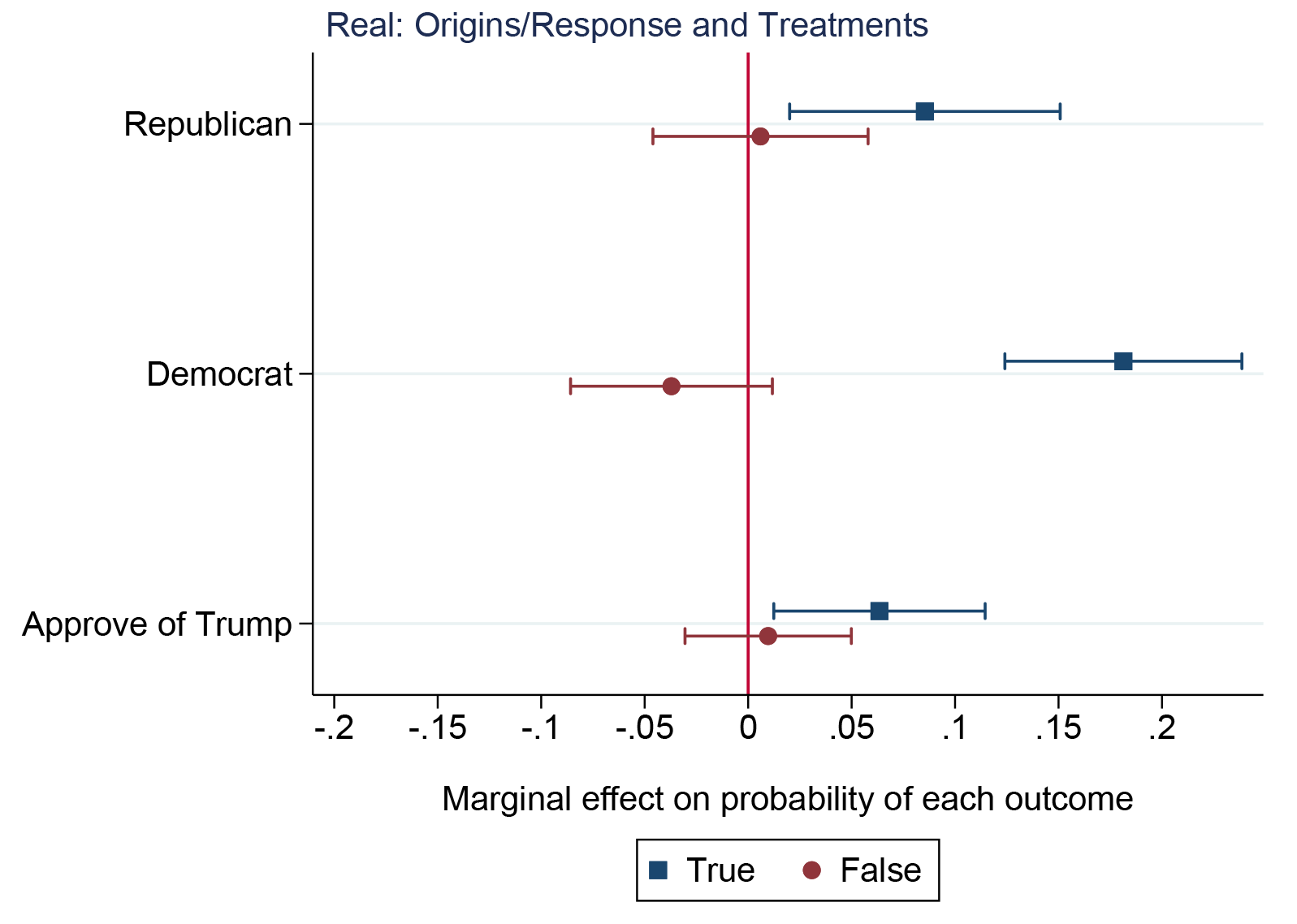

It is possible that Trump supporters were not uniquely likely to believe misinformation claims; rather, they could simply have been more likely to judge all headlines true. To test this alternate possibility, we estimated another multinomial logit model with an identical specification assessing the factors associated with accuracy perceptions of real headlines. As shown in Figure 4, approval of Trump was not a significant predictor of accuracy perceptions of real headlines. Trump supporters were no more likely to believe a real headline was true than false. [Footnote 6: A Wald test cannot reject the null of no significant difference, p < .10, two-tailed test. Separate analyses of real origins/response and treatments headlines similarly yield null results for Trump approval (Appendix Figure A4).] Trump supporters were not more credulous of all headlines; rather, they were specifically more likely to believe misinformation.

Finding 4: Trump supporters who were most attuned to the news were the most susceptible to believing misinformation.

Finally, to explore whether the relationship between believing misinformation and Trump approval varies with news consumption, we estimated another multinomial logit model examining accuracy perceptions of headlines in all three misinformation categories. [Footnote 7: Additional analyses reported in the Appendix show that the interactive relationship is strongest in the Treatments and Chinese Sources categories (Appendix Figure A6).] Specifically, we explored whether the positive relationship between Trump approval and believing misinformation observed previously was concentrated among less-informed respondents, high news consumers, or neither.

As shown in Figure 5, at low levels of news consumption, there was little difference in the propensity to believe misinformation between Trump supporters and those who did not support President Trump. By contrast, among those most attuned to the news, the gap widened considerably. At the highest level of news consumption, a Trump supporter was more than twice as likely, on average, to believe false claims as an otherwise similar respondent who did not support President Trump. Rationally, greater news consumers should have more exposure to false claims, which could make them more likely to believe at least some misinformation. However, this relationship in which greater news consumption is correlated with greater exposure to and uptake of misinformation was most acute among Trump supporters. Our data is generally consistent with a logic outlined by Pennycook and Rand (2019) in which individuals lack careful reasoning and rely on heuristics such as familiarity. To the extent that the source of misinformation in these stages of the pandemic stemmed in part from the White House and ricocheted in Trump-supporting corners of social media, this may explain why highly attentive Trump supporters were the most likely to believe misinformation claims. Moreover, this logic might also explain why high news-consuming Trump supporters were also more likely to believe even ideologically incongruent misinformation from foreign sources if they relied more heavily on familiarity heuristics rather than motivated reasoning (Pennycook & Rand, 2019, 2021). Future research should examine these and alternate possible dynamics more directly.

Methods

To estimate “true” recall of prominent misinformation about the COVID-19 pandemic and to analyze the relationships between partisanship, support for President Trump, news consumption, and accuracy perceptions of misinformation, we administered a national survey of U.S. adults through Qualtrics from May 2–3, 2020 (n = 1,045). Respondents were recruited via the Lucid platform, which employs quota sampling to produce samples matched to the U.S. population on age, gender, ethnicity, and geographic region. Sample demographics and comparisons to U.S. census figures and benchmark surveys are provided in Appendix Table A1.

Respondents were asked to evaluate ten headlines advancing some claim about the pandemic chosen from a larger pool of 22. The Appendix provides complete wording for each headline (Appendix Table A2) and additional information on the randomization of headlines. Many studies of misinformation examine accuracy perceptions of headlines (e.g., Luo et al., 2020); others examine images of social media posts featuring short statements akin to headlines (e.g., Loomba et al., 2021); and some recent studies have gauged the correlates of accuracy perceptions of misinformation in a longer full article format (e.g., Pehlivanoglu et al., 2021). Each approach has tradeoffs. Because exposure to headlines is consistent with the type of scrolling common online and, indeed, has been shown to affect readers’ memory, inferences, and behavioral intentions (Ecker et al., 2014), we focus on reported recall and accuracy perceptions of headlines. However, as a result, we cannot assess the correlates of accuracy perceptions when respondents have access to greater contextual information than just what is captured in a headline (Pehlivanoglu et al., 2021). This is an important area for future research.

Each respondent evaluated a mix of real, prominent misinformation, and placebo headlines across two substantive topics (the origins of/government response to the virus; and treatments for the virus). To identify prominent misinformation and real headlines within each category, we searched news coverage in major U.S. newspapers and prominent fact-checking websites. For additional details, see the Appendix. Placebo headlines were created by the researchers for each topical category. Media searches confirmed that the claims advanced in these placebo headlines did not receive widespread media attention in early 2020. At the conclusion of the survey, all respondents received a debrief alerting them to which headlines were real vs. which ones were misinformation with links to sources of up-to-date information about COVID-19.

The two dependent variables were measured with the following questions, asked after each headline:

- Do you recall seeing this claim about COVID-19 reported or discussed in recent months? (Answer choices: yes, no, or unsure)

- Just your best guess, is this statement true? (Answer choices: yes, no, or unsure)

Following the metric created by Allcott & Gentzkow (2017), we estimated true recall as the difference in self-reported recall rates between prominent misinformation and placebo headlines.
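The difference described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the function names and the toy response lists are hypothetical, and the counts are invented purely to show the arithmetic.

```python
from collections import Counter


def recall_rate(responses):
    """Share of answers equal to 'yes' among 'yes' / 'no' / 'unsure' answers."""
    if not responses:
        raise ValueError("responses must not be empty")
    return Counter(responses)["yes"] / len(responses)


def true_recall(misinfo_responses, placebo_responses):
    """Self-reported recall of misinformation minus recall of placebo headlines."""
    return recall_rate(misinfo_responses) - recall_rate(placebo_responses)


# Invented toy data (NOT survey results): 100 answers per headline type.
misinfo = ["yes"] * 40 + ["no"] * 45 + ["unsure"] * 15
placebo = ["yes"] * 25 + ["no"] * 60 + ["unsure"] * 15

estimate = true_recall(misinfo, placebo)
print(f"Estimated true recall: {estimate:.2f}")
```

Treating "unsure" as not recalling is an assumption of this sketch; the subtraction itself follows the definition given in the text.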

The COVID-19 pandemic also affords an opportunity to examine public accuracy perceptions of misinformation promoted by foreign sources and to investigate whether the factors associated with accuracy misperceptions in such cases differ from those observed with respect to other types of misinformation. For example, Chinese officials openly pushed anti-American misinformation, such as the claim that the U.S. Army brought the coronavirus to Wuhan, on social media. [Footnote 8: For example, see: Edward Wong, Matthew Rosenberg, and Julian Barnes, “Chinese agents helped spread messages that sowed virus panic in U.S., officials say,” The New York Times, April 22, 2020; Julian Barnes, Matthew Rosenberg, and Edward Wong, “As virus spreads, China and Russia see openings for disinformation,” The New York Times, March 28, 2020.] Thus, after answering whether they recalled the headlines discussed previously and whether they perceived each as accurate or not, subjects were also asked to evaluate the accuracy of an additional pair of headlines chosen from a pool of three headlines. For the full wording of each headline and additional details about randomization, see Appendix Table A3.

To assess the relationships between partisanship, support for President Trump, social media use, and accuracy perceptions of prominent misinformation, including misinformation from foreign sources, we estimated a series of multinomial logit models. We measured partisanship using the standard Gallup question. We then asked respondents who initially identified as independents if they “leaned” toward one party or the other. Consistent with research showing that “leaners” have similar opinions and behaviors to other partisans (Petrocik, 2009), we coded leaners as partisans. [Footnote 9: Additional analyses that do not code “leaners” as partisans yield substantively similar results (Appendix Figure A3).] We measured support for President Trump using the standard Gallup presidential approval question; our indicator variable was coded 1 for those who approve of President Trump and 0 otherwise. All models also controlled for respondents’ self-reported income, educational attainment, gender, race/ethnicity, and age.
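The coding rules in this paragraph can be illustrated with a short Python sketch. The function names and the answer labels ("Democrat", "Republican", "Independent", "approve") are hypothetical stand-ins chosen for the example; the survey's actual response labels are not given in the text.

```python
PARTIES = ("Democrat", "Republican")


def code_party(party_id, lean=None):
    """Fold independents who lean toward a party into that party.

    party_id: initial answer (hypothetical labels).
    lean: follow-up answer for independents, or None if no lean was given.
    """
    if party_id in PARTIES:
        return party_id
    if party_id == "Independent" and lean in PARTIES:
        return lean
    return "Independent"


def code_trump_approval(answer):
    """Indicator: 1 for respondents who approve, 0 otherwise."""
    return 1 if answer == "approve" else 0


print(code_party("Independent", lean="Republican"))  # leaner coded as partisan
print(code_trump_approval("disapprove"))
```

Under this scheme, only independents who report no lean remain in the independent category, which matches the treatment of leaners described above.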

Across substantive categories, the models show that approval of Trump is a strong and statistically significant predictor of incorrectly judging misinformation to be true. Moreover, after controlling for opinions toward Trump, the partisan gaps in accuracy perceptions (observed in models without Trump approval; see Appendix Figure A2) are small, and many are no longer statistically significant.

To explore whether Trump supporters are simply more likely to believe all headlines—not just misinformation—we estimated an additional multinomial logit for accuracy perceptions of real headlines. As shown in Figure 4, Trump supporters were not significantly more likely to judge real headlines as true than false.

A final multinomial logit includes the interaction of Trump approval and a measure of news consumption. To measure news consumption, our survey asked respondents how often they used three sources (TV news, newspapers, and Facebook or other social media sites) to stay up-to-date on the news. For each source, respondents answered on a four-point scale ranging from 1 (not at all) to 4 (a great deal). From these, we created an additive index of news consumption. [Footnote 10: Estimating separate regressions examining the interaction of Trump approval and reliance on each media source for news individually yields similar results across media sources (Appendix Figure A7).] Figure 5 shows that the gap between Trump supporters and opponents is concentrated among high-news consumers.
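As a rough illustration of the news consumption measure, the sketch below assumes the additive index is a simple unweighted sum of the three four-point items, which would give a range of 3 to 12. The text does not say whether the index was rescaled, and the key names used here are hypothetical.

```python
# Hypothetical item names for the three news sources described in the text.
SOURCES = ("tv_news", "newspapers", "social_media")


def news_consumption_index(answers):
    """Sum three items, each scored 1 (not at all) to 4 (a great deal)."""
    total = 0
    for source in SOURCES:
        value = answers[source]
        if value not in (1, 2, 3, 4):
            raise ValueError(f"{source} must be an integer from 1 to 4")
        total += value
    return total


# Invented example respondent, not survey data.
respondent = {"tv_news": 4, "newspapers": 2, "social_media": 3}
print(news_consumption_index(respondent))  # 9
```

An unweighted sum treats each source as contributing equally; a different weighting or rescaling would change the index values but not the general idea.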

Because inattentive respondents can inject noise into survey data, weaken correlations, and lead to null results (Berinsky et al., 2021), we also replicated our analyses excluding “speeders” who completed the survey much faster than most respondents (Greszki et al., 2015). In all cases, results are substantively similar (Appendix Figures A8–A10).

Bibliography

Allcott, H., & Gentzkow, M. (2017). Social media and fake news in the 2016 election. Journal of Economic Perspectives, 31(2), 211–236. https://doi.org/10.1257/jep.31.2.211

Bailard, C. S., Porter, E., & Gross, K. (2022). Fact-checking Trump’s election lies can improve confidence in U.S. elections: Experimental evidence. Harvard Kennedy School (HKS) Misinformation Review, 3(6). https://doi.org/10.37016/MR-2020-109

Barnes, J., Rosenberg, M., & Wong, E. (2020, March 28). As virus spreads, China and Russia see openings for disinformation. The New York Times. https://www.nytimes.com/2020/03/28/us/politics/china-russia-coronavirus-disinformation.html

Berinsky, A. J., Margolis, M. F., Sances, M. W., & Warshaw, C. (2021). Using screeners to measure respondent attention on self-administered surveys: Which items and how many? Political Science Research and Methods, 9(2), 430–437. https://doi.org/10.1017/PSRM.2019.53

Bridgman, A., Merkley, E., Loewen, P. J., Owen, T., Ruths, D., Teichmann, L., & Zhilin, O. (2020). The causes and consequences of COVID-19 misperceptions: Understanding the role of news and social media. Harvard Kennedy School (HKS) Misinformation Review, 1(3). https://doi.org/10.37016/MR-2020-028

Calvillo, D. P., Ross, B. J., Garcia, R. J. B., Smelter, T. J., & Rutchick, A. M. (2020). Political ideology predicts perceptions of the threat of COVID-19 (and susceptibility to fake news about it). Social Psychological and Personality Science, 11(8), 1119–1128. https://doi.org/10.1177/1948550620940539

Carey, J. M., Guess, A. M., Loewen, P. J., Merkley, E., Nyhan, B., Phillips, J. B., & Reifler, J. (2022). The ephemeral effects of fact-checks on COVID-19 misperceptions in the United States, Great Britain and Canada. Nature Human Behaviour, 6(2), 236–243. https://doi.org/10.1038/s41562-021-01278-3

Clarke, L. (2021). Covid-19: Who fact checks health and science on Facebook? BMJ, 373. https://doi.org/10.1136/BMJ.N1170

Coppock, A., & McClellan, O. A. (2019). Validating the demographic, political, psychological, and experimental results obtained from a new source of online survey respondents. Research and Politics, 6(1). https://doi.org/10.1177/2053168018822174

Druckman, J. N., Ognyanova, K., Baum, M. A., Lazer, D., Perlis, R. H., Volpe, J. della, Santillana, M., Chwe, H., Quintana, A., & Simonson, M. (2021). The role of race, religion, and partisanship in misperceptions about COVID-19. Group Processes & Intergroup Relations, 24(4), 638–657. https://doi.org/10.1177/1368430220985912

Enders, A. M., Farhart, C., Miller, J., Uscinski, J., Saunders, K., & Drochon, H. (2022). Are Republicans and conservatives more likely to believe conspiracy theories? Political Behavior. https://doi.org/10.1007/S11109-022-09812-3

Enders, A. M., Uscinski, J. E., Klofstad, C., & Stoler, J. (2020). The different forms of COVID-19 misinformation and their consequences. Harvard Kennedy School (HKS) Misinformation Review, 1(8). https://doi.org/10.37016/MR-2020-48

Enders, A. M., Uscinski, J. E., Seelig, M. I., Klofstad, C., Wuchty, S., Funchion, J., Murthi, M., Premaratne, K., & Stoler, J. (2023). The relationship between social media use and beliefs in conspiracy theories and misinformation. Political Behavior, 45(2), 781–804. https://doi.org/10.1007/s11109-021-09734-6

Evanega, S., Lynas, M., Adams, J., & Smolenyak, K. (2020). Coronavirus misinformation: Quantifying sources and themes in the COVID-19 ‘infodemic’. JMIR Preprints. https://www.uncommonthought.com/mtblog/wp-content/uploads/2020/12/Evanega-et-al-Coronavirus-misinformation-submitted_07_23_20-1.pdf

Freiling, I., Krause, N. M., Scheufele, D. A., & Brossard, D. (2023). Believing and sharing misinformation, fact-checks, and accurate information on social media: The role of anxiety during COVID-19. New Media and Society, 25(1), 141–162. https://doi.org/10.1177/14614448211011451

Greszki, R., Meyer, M., & Schoen, H. (2015). Exploring the effects of removing “too fast” responses and respondents from web surveys. Public Opinion Quarterly, 79(2), 471–503. https://doi.org/10.1093/POQ/NFU058

Guess, A. M., Nyhan, B., & Reifler, J. (2020). Exposure to untrustworthy websites in the 2016 US election. Nature Human Behaviour, 4(5), 472–480. https://doi.org/10.1038/s41562-020-0833-x

Hall Jamieson, K., & Albarracín, D. (2020). The relation between media consumption and misinformation at the outset of the SARS-CoV-2 pandemic in the US. Harvard Kennedy School (HKS) Misinformation Review, 1(3). https://doi.org/10.37016/mr-2020-012

Harold, S., Beauchamp-Mustafaga, N., & Hornung, J. (2021). Chinese disinformation efforts on social media. RAND Corporation. https://www.rand.org/pubs/research_reports/RR4373z3.html

Hellinger, D. C. (2019). Conspiracies and conspiracy theories in the age of Trump. Palgrave Macmillan.

Kreps, S., & Kriner, D. L. (2022). The COVID-19 infodemic and the efficacy of interventions intended to reduce misinformation. Public Opinion Quarterly, 86(1), 162–175. https://doi.org/10.1093/poq/nfab075

Kreps, S., McCain, R. M., & Brundage, M. (2022). All the news that’s fit to fabricate: AI-generated text as a tool of media misinformation. Journal of Experimental Political Science, 9(1), 104–117. https://doi.org/10.1017/XPS.2020.37

Lodge, M., Steenbergen, M. R., & Brau, S. (1995). The responsive voter: Campaign information and the dynamics of candidate evaluation. American Political Science Review, 89(2), 309–326. https://doi.org/10.2307/2082427

Loomba, S., de Figueiredo, A., Piatek, S. J., de Graaf, K., & Larson, H. J. (2021). Measuring the impact of COVID-19 vaccine misinformation on vaccination intent in the UK and USA. Nature Human Behaviour, 5(3), 337–348. https://doi.org/10.1038/s41562-021-01056-1

Luo, M., Hancock, J. T., & Markowitz, D. M. (2020). Credibility perceptions and detection accuracy of fake news headlines on social media: Effects of truth-bias and endorsement cues. Communication Research, 49(2), 171–195. https://doi.org/10.1177/0093650220921321

Oliver, J. E., & Wood, T. J. (2014). Conspiracy theories and the paranoid style(s) of mass opinion. American Journal of Political Science, 58(4), 952–966. https://doi.org/10.1111/ajps.12084

Palosky, C. (2021). COVID-19 misinformation is ubiquitous: 78% of the public believes or is unsure about at least one false statement, and nearly a third believe at least four of eight false statements tested. Kaiser Family Foundation. https://www.kff.org/coronavirus-covid-19/press-release/covid-19-misinformation-is-ubiquitous-78-of-the-public-believes-or-is-unsure-about-at-least-one-false-statement-and-nearly-at-third-believe-at-least-four-of-eight-false-statements-tested/

Pehlivanoglu, D., Lin, T., Deceus, F., Heemskerk, A., Ebner, N. C., & Cahill, B. S. (2021). The role of analytical reasoning and source credibility on the evaluation of real and fake full-length news articles. Cognitive Research: Principles and Implications, 6(1). https://doi.org/10.1186/S41235-021-00292-3

Pennycook, G., & Rand, D. G. (2019). Lazy, not biased: Susceptibility to partisan fake news is better explained by lack of reasoning than by motivated reasoning. Cognition, 188, 39–50. https://doi.org/10.1016/j.cognition.2018.06.011

Pennycook, G., & Rand, D. G. (2021). The psychology of fake news. Trends in Cognitive Sciences, 25(5), 388–402. https://doi.org/10.1016/J.TICS.2021.02.007

Petrocik, J. R. (2009). Measuring party support: Leaners are not independents. Electoral Studies, 28(4), 562–572. https://doi.org/10.1016/j.electstud.2009.05.022

Porter, E., & Wood, T. J. (2022). Political misinformation and factual corrections on the Facebook news feed: Experimental evidence. Journal of Politics, 84(3), 1812–1817. https://doi.org/10.1086/719271

Repnikova, M. (2022). How the People’s Republic of China amplifies Russian disinformation. U.S. Department of State. https://www.state.gov/briefings-foreign-press-centers/how-the-prc-amplifies-russian-disinformation

Schwarz, N., Sanna, L. J., Skurnik, I., & Yoon, C. (2007). Metacognitive experiences and the intricacies of setting people straight: Implications for debiasing and public information campaigns. Advances in Experimental Social Psychology, 39, 127–161. https://doi.org/10.1016/S0065-2601(06)39003-X

Uscinski, J. E., Enders, A. M., Klofstad, C., Seelig, M., Funchion, J., Everett, C., Wuchty, S., Premaratne, K., & Murthi, M. (2020). Why do people believe COVID-19 conspiracy theories? Harvard Kennedy School (HKS) Misinformation Review, 1(3). https://doi.org/10.37016/MR-2020-015

Wong, E., Rosenberg, M., & Barnes, J. (2020, April 22). Chinese agents helped spread messages that sowed virus panic in U.S., officials say. The New York Times. https://www.nytimes.com/2020/04/22/us/politics/coronavirus-china-disinformation.html

Zaller, J. (1992). The nature and origins of mass opinion. Cambridge University Press.

Funding

Funding was provided by the Cornell Atkinson Center for Sustainability.

Competing Interests

The authors declare no competing interest.

Ethics

All survey protocols were approved by Cornell University’s Institutional Review Board (Protocol #2004009569). All survey respondents provided informed consent before beginning the survey. Gender and race/ethnicity were queried with standard measures for matching to U.S. Census figures.

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

All materials needed to replicate this study are available via the Harvard Dataverse: https://doi.org/10.7910/DVN/3QR1MA