Peer Reviewed

The different forms of COVID-19 misinformation and their consequences

As the COVID-19 pandemic progresses, an understanding of the structure and organization of beliefs in pandemic conspiracy theories and misinformation becomes increasingly critical for addressing the threat posed by these dubious ideas. In polling Americans about beliefs in 11 such ideas, we observed clear groupings of beliefs that correspond with different individual-level characteristics (e.g., support for Trump, distrust of scientists) and behavioral intentions (e.g., to take a vaccine, to engage in social activities). Moreover, we found that conspiracy theories enjoy more support, on average, than misinformation about dangerous health practices. Our findings suggest several paths for policymakers, communicators, and scientists to minimize the spread and impact of COVID-19 misinformation and conspiracy theories.

Research Questions

- Which COVID-19 conspiracy theories and misinformation receive the most and least support from the American public?

- Do people believe in “types” of COVID-19 conspiracy theories and misinformation? What are the types?

- What characteristics explain beliefs in different types of COVID-19 conspiracy theories and misinformation?

- How do the types of COVID-19 conspiracy theories and misinformation differentially relate to people’s pandemic behavior, assessments of the immediate future, and propensity to get vaccinated?

Essay Summary

- Efficiently addressing COVID-19 misinformation and conspiracy theories begins with an understanding of the prevalence of each of these dubious ideas, the individual predispositions that make them attractive to people, and their potential consequences.

- Using a national survey of U.S. adults fielded June 4–17, 2020 (n = 1,040), we found that conspiracy theories, especially those promoted by visible partisan figures, exhibited higher levels of support than medical misinformation about the treatment and transmissibility of COVID-19. This suggests that potentially dangerous health misinformation is more difficult to believe than abstract ideas about the nefarious intentions of governmental and political actors.

- Not everyone is equally susceptible to all forms of conspiracy theories and misinformation. Instead, people tend to believe in various classifications or “clusters” of dubious beliefs. Partisan and non-partisan COVID-19 conspiracy beliefs and beliefs in health-related misinformation operate differently from one another. While some dubious beliefs find roots in liberal-conservative ideology and support for President Trump, belief in health-related misinformation is more a product of distrust in scientists.

- All classifications of dubious beliefs about COVID-19 are positively correlated, to varying degrees, with an optimistic view of health risks, engagement in leisure activities, and perceptions of government overreach in response to the pandemic, suggesting that the acceptance of dubious ideas may lead to risky behaviors.

- The classifications of beliefs in COVID-19 conspiracy theories and misinformation are differentially related to intentions to vaccinate, levels of education, and attitudes about governmental enforcement of pandemic-related regulations. These findings can aid policymakers and science communicators in prioritizing their efforts at “pre-bunking” and debunking misinformation.

Implications

Numerous conspiracy theories have attempted to tie the COVID-19 pandemic to nefarious intentions and scapegoat the supposed conspirators (Uscinski et al., 2020). Accused villains include China, Russia, Bill Gates, Democrats, the “deep state,” and the pharmaceutical industry, to name a few. Moreover, numerous pieces of misinformation have gained traction (e.g., Bridgman et al., 2020), including false claims about the medicinal properties of disinfectants, hydroxychloroquine, and ultraviolet light.

While it is true that similar forces tend to promote belief in conspiracy theories and misinformation (Enders & Smallpage, 2019), the specific content of each conspiracy theory and piece of misinformation is likely to attract its own set of adherents (Sternisko et al., 2020). The burgeoning literature on COVID-19 beliefs hints that there may be a structure to these dubious ideas, as they seem to vary in their content (Brennen et al., 2020), causal antecedents (Cassese et al., 2020; Uscinski et al., 2020), popularity (Miller, 2020), and behavioral and attitudinal consequences (Imhoff & Lamberty, 2020; Jolley & Paterson, 2020). However, researchers have yet to identify what the structure of COVID-19 conspiracy theories and misinformation looks like.

Thus, critical questions abound. Do people who are attracted to dubious ideas engage with just any conspiracy theory or piece of misinformation, or are they more discriminating? How might dubious beliefs about COVID-19 “go together” to attract particular groups of adherents? Which sets of dubious beliefs are associated with which downstream attitudes and behaviors? Answers to these questions are central to the successful application of preventive and corrective measures on the part of policymakers, communicators, and public health officials.

To gain some leverage on these questions, we polled a representative sample of 1,040 Americans on their agreement with 11 dubious ideas about COVID-19 to uncover the structure undergirding these beliefs. By grouping these ideas by both the people who believe them and the predispositions associated with them, we demonstrate both that people are discerning about which ideas they adopt and that different dubious ideas are associated with different attitudes and behavioral intentions. We note that this study is confined to U.S. respondents. Similar studies undertaken in other cultural contexts may uncover different organizations of beliefs, depending on the popularity, availability, cultural relevance, and use of those ideas by influential elites. We also note that the patterns we identify are not necessarily fixed over time within a given context.

The first implication of our findings is that some dubious ideas find more support than others and may, therefore, matter more. For example, the theory that “the number of deaths related to the coronavirus has been exaggerated” is endorsed by nearly 30% of Americans, compared to only 13% who believe that “Bill Gates is behind the coronavirus pandemic.” More abstract conspiracy theories tend to find the most support, likely because of their greater generality (Borghi et al., 2017). The more general the dubious idea, the easier it is for individuals to fill in specific details using their existing belief system and other predispositions (DiMaggio, 1997; Lakoff, 2014; Paivio, 1991). This line of reasoning is consistent with empirical evidence demonstrating that general versions of conspiracy theories (e.g., a conspiracy to assassinate President Kennedy) are more popular than specific versions that, for example, name a particular villain (Swift, 2013). Ideas such as these may also find support because politicians and media personalities echo them (Jamieson & Albarracín, 2020), often only in very general, abstract ways (Rosenblum & Muirhead, 2019).

More specific misinformation about the treatment and transmissibility of COVID-19, in contrast, finds less support. For example, only 12% of respondents agree that “putting disinfectant into your body can prevent or cure COVID-19.” While policymakers and public health officials should be concerned that anyone believes this, the relatively meager support signals, on the one hand, the general public’s resilience to some dangerous misinformation, even when it is spread by the president. On the other hand, low levels of support also suggest that a narrowly targeted approach may be necessary to address some beliefs. For example, health communicators, in conjunction with social media and internet service companies, might program warnings for people who search for terms related to disinfectant and COVID-19. Communicators might also target health care professionals, who can subsequently address specific issues with their most at-risk patients (in terms of either their health or perceived susceptibility to misinformation). More limited, targeted efforts such as these should prove more manageable and cost-effective and could also avoid incidentally amplifying the misinformation (Ingram, 2020).

The analyses presented below also demonstrate that various beliefs in conspiracy theories and misinformation are differentially associated with political ideology and (dis)trust in experts. These orientations color one’s interactions with new information and fill in the (perceived) motivations of scientists, politicians, and other elites in their public communications about the pandemic. We find that misinformed beliefs about hydroxychloroquine, for example, are strongly related to distrust in experts. Therefore, corrective strategies, which seek to alter misinformed beliefs by exposing individuals to valid information after a belief has formed (Nyhan et al., 2013), might not be effective when they rely on information generated by scientists or public health officials, depending on the belief in question. If people do not trust scientists, they are unlikely to accept information associated with scientists. Trusted partisan figures may be able to mitigate these beliefs where scientists cannot (Berinsky, 2015), but many partisan and ideological elites will not have a strategic political incentive to do so (Uscinski et al., 2020). In our analyses, Trump support and conservative self-identification are associated with several conspiracy theories and pieces of health-related misinformation, likely because Trump and his allies engage in conspiracy theorizing and spread misinformation.

Instead of correcting misinformed pandemic-related beliefs, communicators and policymakers might consider preventive strategies, such as “pre-bunking” or “inoculation” (Banas & Miller, 2013; Banas & Rains, 2010; Roozenbeek et al., 2020). These strategies seek to cast doubt on the veracity of misinformation and conspiracy theories before individuals are exposed to them. Just as vaccines generate resistance to a virus by exposing the body to a weakened version of it, limited exposure to harmful misinformation and reasonable counterarguments can help limit the extent to which such misinformation takes hold in the future (Banas & Miller, 2013; McGuire & Papageorgis, 1961). When it comes to the pandemic misinformation we identified, communicators and other practitioners might consider explicitly warning people that specific types of misinformation exist and that they are being promoted by fake experts, conspiracy theorists, and even hostile foreign entities. Preventive messaging might also explicitly emphasize the consensus among experts regarding mask usage and the harms of ingesting disinfectant or exposing the body to UV light. This approach has proved efficacious in inoculating people against climate change misinformation (Cook et al., 2017).

Finally, our findings reveal that various types of COVID-19 misinformation and conspiracy theories differ in how strongly they are associated with subsequent behaviors and attitudes. Science and policy communicators, limited by funding and access to audiences, may need to prioritize their efforts based upon the impact of particular beliefs. Misinformed beliefs that are not associated with deleterious behaviors, or only weakly so, should be a lower priority. For example, while all types of COVID-19 conspiracy/misinformation beliefs are positively correlated with participation in risky leisure activities, the strength of their relationships with optimistic short-term assessments of the virus’s threat and with perceptions of government overreach in response to the pandemic varies considerably. Beliefs in conspiracy theories touted by Trump and his supporters are most strongly related to these attitudes and activities, demonstrating the political component of the public’s response to pandemic information and policies.

Understanding these patterns can additionally help policy communicators and public health experts design targeted campaigns against misinformation based on the potential causes and consequences of such misinformation. For example, the disinfectant theory, given its general lack of support, may not be worth the resources necessary to address it with a large campaign. However, political conspiracy theories are more strongly related to undesirable behaviors and may be easier to combat with support from willing political elites. We also observe no significant correlation between beliefs in health-related misinformation and educational attainment. This suggests that education, as measured in this study, fails to outweigh substantive political and psychological motivations, such as distrust of or support for the president.

Not everyone is equally susceptible to conspiracy theories and misinformation. Our findings suggest that the major culprits in the spread of misinformation about COVID-19 are people’s orientations towards scientists and policymakers and their willingness to blindly accept proclamations from like-minded politicians who traffic in misinformation. The availability of conspiracy theories and misinformation online is, of course, worrisome and must be addressed. But without a distrust of scientists and a willingness to accept misinformation from prominent elites (like the president), many dubious ideas would likely fail to gain traction. Therefore, it is in the interest of policymakers, science communicators, and journalists to take on a longer-term project focused on fostering trust in political institutions, the scientific process, and journalism, as well as the creation of new institutions that disincentivize elite engagement with misinformation and conspiracy theories.

Findings

Finding 1: Beliefs in COVID-19 conspiracy theories and misinformation vary in support.

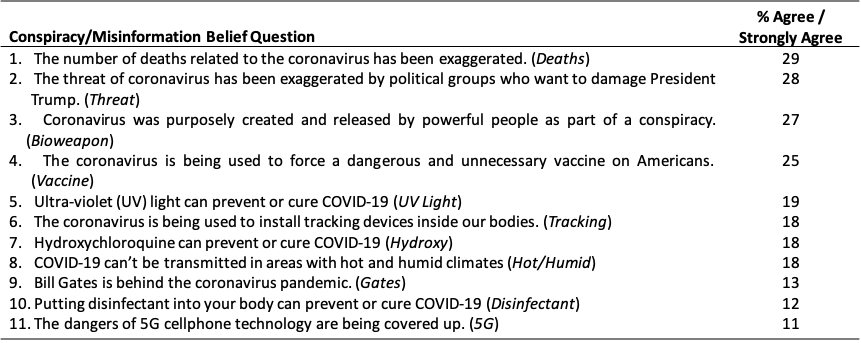

COVID-19 conspiracy theories find more support among the mass public, on average, than misinformation regarding treatment and transmissibility (Table 1). Theories that the virus, or the deaths associated with it, have been exaggerated for political gain, and that the virus is a bioweapon, garner support among close to 30% of the mass public. These levels of support are nearly identical to (if not slightly lower than) those found using the same question wording in March 2020 (Uscinski et al., 2020). The theory that the pandemic is being used as a ploy to “force a dangerous and unnecessary vaccine on Americans” is endorsed by one in four respondents, suggesting that herd immunity may be elusive even after a vaccine becomes available. Similarly, nearly 20% believe that the pandemic is a ruse “to install tracking devices inside our bodies.” And, despite the attention given to conspiracy theories about Bill Gates and 5G technology, relatively few people (13% and 11%, respectively) believe that Gates “is behind the coronavirus pandemic” or that “the dangers of 5G cellphone technology are being covered up.”

Statements regarding medical misinformation find less support, on average, in our survey (Table 1). Dubious ideas about disinfectants, hot/humid climates, and hydroxychloroquine are believed by 12%, 18%, and 18% of Americans, respectively. Despite President Trump mentioning each of these, they have not received widespread support, suggesting limits to the spread of misinformation even when influential elites endorse it. We also suspect that the conspiracy beliefs are among the most popular in our survey because they are more abstract than the medical misinformation we asked about. It is encouraging that, while some people exhibit antagonistic orientations toward the government and experts, relatively few individuals are willing to adopt misinformation with inherent health implications.

Finding 2: Beliefs in COVID-19 conspiracy theories and misinformation are most associated with political motivations and distrust in scientists.

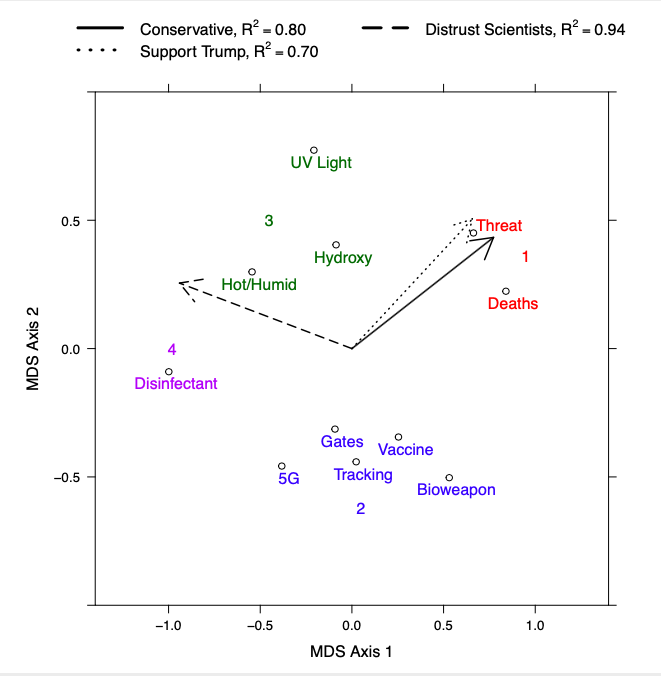

Figure 1 plots a cognitive map of people’s perceptions of COVID-19 conspiracy theories and misinformation as estimated by a nonmetric multidimensional scaling (MDS) analysis. The closer two points are to one another, the more similar the corresponding beliefs are in the minds of the mass public.

To facilitate interpretation, we color coded the beliefs by cluster. The eleven beliefs break into four distinct clusters. The first cluster contains two conspiracy theories that are partisan in nature, according to either their specific content (Threat) or the support they have found among salient partisan elites (Deaths). The second cluster contains five non-partisan, non-ideological conspiracy theories about the origins and nefarious purposes of the virus. The third cluster includes health-related misinformation about hydroxychloroquine, the effect of UV light on the virus, and the transmissibility rate of the virus in hot and humid conditions. The final cluster contains a single belief about how consuming disinfectant can “prevent or cure COVID-19.” This belief is distinct from the others because it received relatively little support among the mass public, perhaps because the claim carries little face validity.

We further decipher the structure of dubious beliefs about COVID-19 by embedding external variables into the plot. We did this by regressing measures of potential drivers of some COVID-19 misinformation (support for Donald Trump, liberal-conservative ideological self-identification, and distrust in scientists) onto the point coordinates. The coefficients, which tell us about the direction and magnitude of the relationship between these external variables and the psychological structure of COVID-19 beliefs, are represented as arrows in the plot. Each arrow points toward the types of beliefs held by conservatives, Trump supporters, and those distrustful of scientists, respectively.

Those who are most distrustful of scientists are most likely to believe the sentiments grouped in clusters 3 and 4, the clusters that contain potentially harmful misinformation about the treatment and transmissibility of COVID-19. Strong conservatives and those who ardently support Donald Trump are most likely to believe in the sentiments in cluster 1 and, to a lesser extent, cluster 3. Even though Donald Trump regularly touted the preventive power of hydroxychloroquine and publicly speculated that UV light might be used to treat the virus, these ideas are very different in their basic content, especially their political content, from conspiracy theories about the virus being exaggerated for political purposes.

Even though the mass public tends to follow leaders who share partisan and ideological labels, there are limits. This is especially evidenced by the lack of relationship between Trump support and beliefs about the power of consuming disinfectant, which Trump publicly proposed during a press conference and later walked back.

Finding 3: The various types of misinformation and conspiracy theories are differentially related to the propensity to get the eventual vaccine, participation in public leisure activities, and optimism about the immediate future.

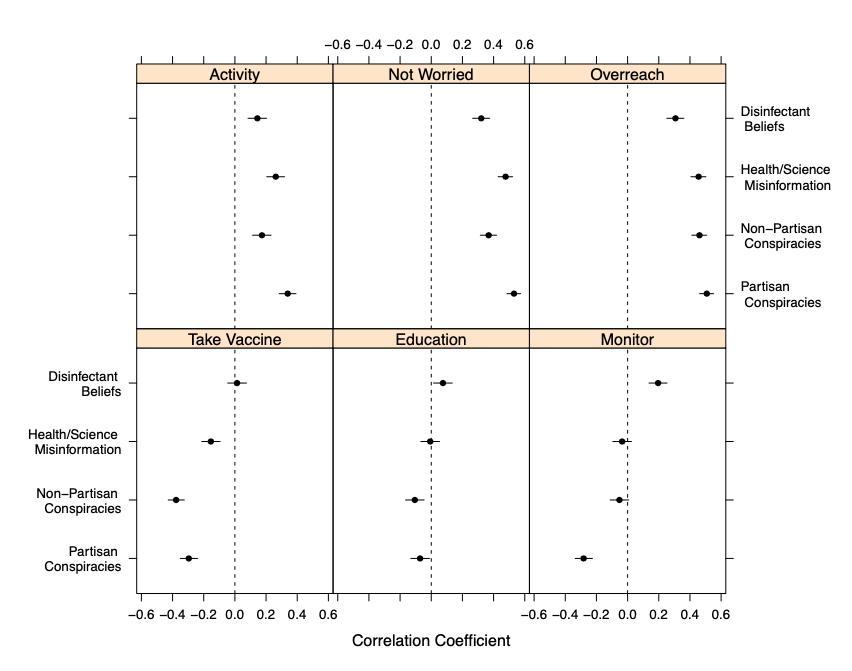

Beliefs in each of the four clusters of sentiments highlighted in Figure 1 are positively and statistically significantly related to participation in risky leisure activities, optimism about the future, and feelings that the government has overstepped its bounds in dealing with the pandemic (Figure 2, top row; for a different view of this information, see the heat map in the Supplemental Appendix). That said, the magnitudes of these relationships vary in systematic ways. Partisan conspiracy beliefs (cluster 1) are more strongly correlated with each of the variables in the top row of Figure 2 than any of the other belief clusters. The Disinfectant belief (cluster 4) is consistently the weakest correlate. This suggests that partisan conspiracy theories may be more dangerous than some health misinformation, perhaps because they are “confirmed” in the minds of many individuals by virtue of endorsement by co-partisan elites.

Relationships are less consistent when it comes to the variables in the bottom row. In each case, we observed relationships that vary in strength, sign, and significance across belief clusters. Belief in the healing power of disinfectant (cluster 4) is unrelated to one’s intention to get the eventual vaccine, though the other belief clusters are negatively related to it. Beliefs in non-partisan conspiracy theories (cluster 2) and health misinformation (cluster 3) are unrelated to attitudes about the government monitoring social distancing and enforcing rules related to COVID-19, though partisan conspiracy beliefs (cluster 1) are strongly negatively related. Finally, we note that education is unrelated to beliefs in the health misinformation grouped in cluster 3, which is particularly disconcerting. The correlations with education are considerably weaker, on average, than those with any other attitude or behavior.

These findings underscore that beliefs in COVID-19 conspiracy theories and misinformation are born more of substantive orientations toward the government, scientists, and particular leaders, and less of a mechanical inability to accurately discern quality information about health and medicine.

Methods

The survey was administered to 1,040 adults between June 4–17, 2020. Qualtrics administered the survey and partnered with Lucid (luc.id) to recruit a sample that matched 2010 U.S. census records on sex, age, race, and income. Lucid, which complies fully with European Society for Opinion and Marketing Research (ESOMAR) standards for protecting research subjects’ privacy and information, maintains panels of subjects that are used only for research. Only respondents who passed two attention checks were retained and analyzed. This data collection was approved by the university Institutional Review Board. Details about the precise demographic composition of the sample appear in the Supplemental Appendix.

The central result of Figure 1, the configuration of beliefs in COVID-19 misinformation and conspiracy theories, was produced using a nonmetric multidimensional scaling (MDS) analysis. MDS seeks to represent dissimilarities between stimuli as distances between points representing those stimuli in a low-dimensional space so that the structure of attitudes can be easily visualized. This procedure is similar in spirit to factor analysis, except that the output is primarily graphical and the input data need not be correlations. In this case, the input dissimilarities are the Euclidean distances between each pair of beliefs, computed across respondents: $\delta_{ij} = \sqrt{\sum_{k=1}^{n}(x_{ki} - x_{kj})^2}$, where $x_{ki}$ is respondent $k$’s agreement with belief $i$ and $n$ is the number of respondents. We use the “smacof” package in the R statistical computing environment to execute this analysis (De Leeuw & Mair, 2009).
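
As a rough illustration of how such input dissimilarities could be computed, the Python sketch below treats each belief item as a column of respondents’ agreement scores and takes the Euclidean distance between every pair of columns. It is a minimal sketch, assuming complete data on a shared numeric agreement scale; the function name `item_distances` and the toy responses are hypothetical and are not the authors’ code, which was written in R.

```python
import math
from itertools import combinations

def item_distances(responses):
    """Symmetric matrix of Euclidean distances between items (columns).

    responses: one row per respondent, each a list of numeric agreement
    scores with no missing values (an assumption of this sketch).
    """
    n_items = len(responses[0])
    dist = [[0.0] * n_items for _ in range(n_items)]
    for i, j in combinations(range(n_items), 2):
        # Sum squared differences between items i and j over all respondents.
        d = math.sqrt(sum((row[i] - row[j]) ** 2 for row in responses))
        dist[i][j] = dist[j][i] = d
    return dist

# Hypothetical toy data: 4 respondents x 3 belief items on a 1-5 scale.
toy = [
    [5, 4, 1],
    [4, 4, 2],
    [2, 1, 1],
    [1, 2, 5],
]
for row in item_distances(toy):
    print([round(x, 2) for x in row])
```

In R, a comparable matrix can be obtained with `dist(t(x))` for a respondent-by-item matrix `x` and could then be passed to `smacof::mds()` with `type = "ordinal"` for a nonmetric solution; the text does not state whether the authors used exactly these calls.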

The most common fit metric, the STRESS coefficient, is a “badness of fit” measure that quantifies the discrepancy between the input dissimilarities and the output distances:

$$\text{STRESS} = \sqrt{\frac{\sum_{i<j}\left(\hat{d}_{ij} - d_{ij}\right)^2}{\sum_{i<j} d_{ij}^2}}$$

where $d_{ij}$ are output distances between stimuli $i$ and $j$, and $\hat{d}_{ij}$ are the input dissimilarities $\delta_{ij}$ (in nonmetric MDS, after their optimal monotone transformation). Traditional rules of thumb stipulate that STRESS coefficients below 0.10 constitute a “good” fit, and those below 0.05 constitute an “excellent” fit (Mair, 2018). The two-dimensional solution we present in Figure 1 has a STRESS coefficient of 0.068, which is a marked improvement over a one-dimensional solution (0.286). The Spearman rank-order correlation between the input dissimilarities and the output distances is also a strong 0.95, and the results of a permutation test suggest that it is unlikely that we achieved the relatively low STRESS value by chance (p = 0.014, 500 replications). Finally, a bootstrapped MDS configuration (see Supplemental Appendix) shows a stable solution. All metrics suggest good model fit.
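
The STRESS formula and the rules of thumb above can be checked by hand with a short Python sketch. It assumes the transformed dissimilarities and the configuration distances have already been produced by an MDS routine and are supplied as two lists in the same pair order; the function names and toy numbers are illustrative only.

```python
import math

def stress1(disparities, distances):
    """Kruskal's STRESS-1 over all stimulus pairs i < j.

    disparities: transformed input dissimilarities (d-hat_ij), one per pair
    distances:   configuration distances d_ij for the same pairs, same order
    """
    if len(disparities) != len(distances):
        raise ValueError("inputs must cover the same pairs")
    num = sum((dh - d) ** 2 for dh, d in zip(disparities, distances))
    den = sum(d ** 2 for d in distances)
    return math.sqrt(num / den)

def fit_label(stress):
    """Apply the rules of thumb cited in the text (Mair, 2018)."""
    if stress < 0.05:
        return "excellent"
    if stress < 0.10:
        return "good"
    return "above the 0.10 threshold"

# Toy example with three pairs; a perfect fit would give 0.0.
s = stress1([1.0, 2.0, 3.0], [1.1, 1.9, 3.0])
print(round(s, 4), fit_label(s))
```

Applied to the values reported above, `fit_label(0.068)` returns `"good"` and `fit_label(0.286)` returns `"above the 0.10 threshold"`.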

We use hierarchical agglomerative clustering (complete linkage method) to identify the clusters that are represented by color coding in Figure 1. The dendrogram, partitioned by the clusters identified in Figure 1, appears in the Supplemental Appendix. To guide our decision about the proper number of clusters, we also examined a scree plot of total within-cluster variance by number of clusters (see the Supplemental Appendix), a common technique for choosing the number of clusters before conducting a k-means cluster analysis. A combination of this plot and a visual inspection of the MDS plot in Figure 1 led to the identification of four clusters. The belief variables that belong to each of these clusters were then averaged into distinct indexes for the analyses presented in Figure 2 (αCluster 1 = 0.83, αCluster 2 = 0.91, αCluster 3 = 0.79; αCluster 4 is not applicable because that cluster contains a single item).
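
Complete-linkage agglomerative clustering repeatedly merges the two clusters whose farthest-apart members are closest. The Python sketch below implements that rule directly on a distance matrix, assuming the target number of clusters is fixed in advance (as with the four clusters chosen above); the text does not say whether the clustering input was the original dissimilarities or the MDS coordinates, and the function name and toy distances are hypothetical. Ties are broken by taking the first pair found.

```python
from itertools import combinations

def complete_linkage(dist, n_clusters):
    """Agglomerative clustering with complete linkage on a distance matrix.

    dist: symmetric list-of-lists of pairwise distances between items.
    Returns a sorted list of clusters, each a sorted list of item indices.
    """
    clusters = [[i] for i in range(len(dist))]
    while len(clusters) > n_clusters:
        best = None
        for a, b in combinations(range(len(clusters)), 2):
            # Complete linkage: cluster distance = largest item-to-item distance.
            d = max(dist[i][j] for i in clusters[a] for j in clusters[b])
            if best is None or d < best[0]:
                best = (d, a, b)
        _, a, b = best
        merged = sorted(clusters[a] + clusters[b])
        clusters = [c for k, c in enumerate(clusters) if k not in (a, b)]
        clusters.append(merged)
    return sorted(clusters)

# Hypothetical items placed on a line at 0, 1, 5, and 6.
positions = [0.0, 1.0, 5.0, 6.0]
toy = [[abs(a - b) for b in positions] for a in positions]
print(complete_linkage(toy, 2))  # -> [[0, 1], [2, 3]]
```

Recording the merge distance at each step would yield the heights of a dendrogram like the one in the Supplemental Appendix; in R, `hclust(d, method = "complete")` performs the same kind of clustering.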

The length and direction of the vectors (arrows) in Figure 1 were generated by regressing external variables onto the MDS point coordinates, which produces what is referred to as an MDS biplot. To do this, we first divided the distributions of the distrust in scientists, Trump support, and conservative ideology variables into terciles. Then we calculated the difference in each misinformation/conspiracy belief item between respondents in the first and third terciles. These differences are regressed onto the first and second MDS axis coordinates, and the estimated regression coefficients are used as terminal points for the three vectors presented in Figure 1 (Greenacre, 2010). Regression coefficients and associated details appear in the Supplemental Appendix.
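
The two steps just described, a tercile contrast followed by a regression on the MDS coordinates, can be sketched in Python as follows. This is a minimal illustration, assuming the contrast is the top-tercile mean minus the bottom-tercile mean of each belief item and that the regression includes an intercept; neither detail is stated in the text, and the function names are hypothetical. Each belief item yields one difference, so the regression is fit across the eleven points of the configuration.

```python
import statistics

def tercile_difference(driver, belief):
    """Top-tercile minus bottom-tercile mean agreement with one belief item.

    driver: one score per respondent (e.g., a feeling thermometer)
    belief: the same respondents' agreement with a single belief item
    Assumption: the contrast is a difference in means, top minus bottom.
    """
    low_cut, high_cut = statistics.quantiles(driver, n=3)
    low = [b for x, b in zip(driver, belief) if x <= low_cut]
    high = [b for x, b in zip(driver, belief) if x > high_cut]
    return statistics.mean(high) - statistics.mean(low)

def biplot_vector(dim1, dim2, y):
    """OLS slopes of y on two MDS coordinates, with an intercept.

    dim1, dim2: coordinates of each belief on MDS axes 1 and 2
    y: one tercile difference per belief, in the same order
    Returns (b1, b2), the end point of the arrow drawn from the origin.
    """
    m1, m2, my = statistics.mean(dim1), statistics.mean(dim2), statistics.mean(y)
    # Centering absorbs the intercept, leaving a 2x2 system of normal equations.
    x1 = [v - m1 for v in dim1]
    x2 = [v - m2 for v in dim2]
    yc = [v - my for v in y]
    s11 = sum(a * a for a in x1)
    s22 = sum(b * b for b in x2)
    s12 = sum(a * b for a, b in zip(x1, x2))
    s1y = sum(a * c for a, c in zip(x1, yc))
    s2y = sum(b * c for b, c in zip(x2, yc))
    det = s11 * s22 - s12 ** 2  # zero only if the two axes are collinear
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    return b1, b2

# Toy check: y built exactly as 2*dim1 - 0.5*dim2 + 3 recovers (2.0, -0.5).
print(biplot_vector([1, 0, -1, 0], [0, 1, 0, -1], [5, 2.5, 1, 3.5]))
```

Solving the centered normal equations in closed form keeps the sketch dependency-free; a statistics package would give the same slopes.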

The Trump support variable is a 101-point feeling thermometer ranging from 0, very “cold” (negative) feelings, to 100, very “warm” (positive) feelings (M = 38.09, SD = 39.10). The ideological self-identification variable is a traditional seven-point measure ranging from 1 (extremely liberal) to 7 (extremely conservative) (M = 3.94, SD = 1.76). The distrust in scientists variable is an index of responses, on a 5-point scale from strongly agree to strongly disagree, to the following three statements: 1) “I trust doctors,” 2) “I trust scientists,” and 3) “I trust federal public health officials” (α = 0.80, range = 1–5, M = 3.74, SD = 0.85).
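
The α values reported for this index and for the cluster indexes are conventionally Cronbach’s alpha; the sketch below assumes that. It computes alpha from item columns with complete data and averages each respondent’s items into an index score, which also shows why a one-item index such as the Disinfectant cluster has no alpha. The function name and toy responses are hypothetical.

```python
import statistics

def cronbach_alpha(items):
    """Cronbach's alpha from item columns (one list of responses per item).

    Assumes complete data; alpha is undefined for fewer than two items.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(resp) for resp in zip(*items)]  # each respondent's summed score
    item_var = sum(statistics.variance(col) for col in items)
    return k / (k - 1) * (1 - item_var / statistics.variance(totals))

# Hypothetical 1-5 responses (1 = strongly agree, 5 = strongly disagree) from
# five respondents to the three trust items, so higher values mean more distrust.
doctors = [1, 2, 4, 5, 3]
scientists = [1, 2, 5, 4, 3]
officials = [2, 2, 4, 5, 4]
alpha = cronbach_alpha([doctors, scientists, officials])
distrust_index = [statistics.mean(r) for r in zip(doctors, scientists, officials)]
print(round(alpha, 2), distrust_index)
```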

Finally, the quantities plotted in Figure 2 are bivariate Pearson product-moment correlations between the aforementioned scales of COVID-19 belief clusters and six variables: education and five COVID-19-related attitudes and behaviors. Education is a 6-point measure ranging from 1 (less than high school) to 6 (post-graduate degree) (M = 3.88, SD = 1.46). The take vaccine variable records responses, on a 5-point scale ranging from 1 (strongly disagree) to 5 (strongly agree), to the following statement: “If a vaccine for COVID-19 becomes available I would be willing to take it” (M = 3.70, SD = 1.37). The remaining four variables were measured using additive indices of responses to several items as follows:

Activity. “Please tell us how soon you would be willing to do the following activities in person.” (1 = a year or longer, 4 = today; “I don’t normally do this” coded as missing; α = 0.92, Range = 1–4, M = 2.12, SD = 0.79)

- Attend a party

- Eat at a restaurant

- Use public transportation

- Go to the beach

- Travel by plane

- Go to a movie theater

- Take a cruise

- Attend a sporting event

- Visit a theme park

Monitor. (Each item is 1 = strongly disagree, 5 = strongly agree; α = 0.84, Range = 1–5, M = 3.25, SD = 1.00)

- Drones should monitor people’s movements and vital signs.

- A COVID-19 vaccine should be mandatory.

- Government officials should monitor public spaces to enforce social distancing guidelines.

- Face masks should be mandatory at most places of employment, schooling, and on mass transportation.

- People who violate social distancing rules should be fined.

Not worried. (Each item is 1 = strongly disagree, 5 = strongly agree; α = 0.81, Range = 1–5, M = 3.15, SD = 1.04)

- In the next month, it will be safe to leave the house more often.

- In the next month, it will be safe to relax social distancing guidelines.

- In the next month, the risk of me, or those close to me, catching the coronavirus will be low.

Overreach. (Each item is 1 = strongly disagree, 5 = strongly agree; α = 0.71, Range = 1–5, M = 2.87, SD = 1.17)

- The government has overstepped its authority during the pandemic.

- I support protests against current government closures and restrictions.
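
The index construction and correlations described above can be sketched in Python. It is a minimal sketch under two assumptions not spelled out in the text: an index score is the mean of a respondent’s non-missing items (consistent with the reported 1–4 and 1–5 ranges), and each correlation uses all respondents with both values present (pairwise deletion). Function names and data are hypothetical.

```python
import math
import statistics

def mean_index(responses):
    """Average one respondent's non-missing items; None marks a missing item
    (e.g., "I don't normally do this"). Returns None if every item is missing."""
    observed = [r for r in responses if r is not None]
    return statistics.mean(observed) if observed else None

def pearson_r(x, y):
    """Pearson product-moment correlation, dropping pairs with a missing value."""
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    xs = [a for a, _ in pairs]
    ys = [b for _, b in pairs]
    mx, my = statistics.mean(xs), statistics.mean(ys)
    sxy = sum((a - mx) * (b - my) for a, b in pairs)
    sxx = sum((a - mx) ** 2 for a in xs)
    syy = sum((b - my) ** 2 for b in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical data: five respondents' Activity items (1-4, None = missing)
# and their scores on one belief-cluster index.
rows = [[1, 2, None], [3, 3, 4], [None, None, None], [4, 4, 4], [2, None, 1]]
activity = [mean_index(r) for r in rows]
cluster_index = [1.5, 3.0, 2.0, 4.5, 2.5]
print(pearson_r(cluster_index, activity))
```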

Bibliography

Banas, J. A., & Miller, G. (2013). Inducing resistance to conspiracy theory propaganda: Testing inoculation and metainoculation strategies. Human Communication Research, 39(2), 184-207.

Banas, J. A., & Rains, S. A. (2010). A meta-analysis of research on inoculation theory. Communication Monographs, 77(3), 281-311.

Berinsky, A. (2015). Rumors and health care reform: Experiments in political misinformation. British Journal of Political Science, 47(2), 241-262.

Borghi, A. M., Binkofski, F., Castelfranchi, C., Cimatti, F., Scorolli, C., & Tummolini, L. (2017). The challenge of abstract concepts. Psychological Bulletin, 143(3), 263-292.

Brennen, J. S., Simon, F., Howard, P. N., & Nielsen, R. K. (2020). Types, sources, and claims of COVID-19 misinformation. Reuters Institute for the Study of Journalism. https://reutersinstitute.politics.ox.ac.uk/types-sources-and-claims-covid-19-misinformation

Bridgman, A., Merkley, E., Loewen, P. J., Owen, T., Ruths, D., Teichmann, L., & Zhilin, O. (2020). The causes and consequences of COVID-19 misperceptions: Understanding the role of news and social media. Harvard Kennedy School Misinformation Review. https://doi.org/10.37016/mr-2020-028

Cassese, E. C., Farhart, C. E., & Miller, J. M. (2020). Gender differences in COVID-19 conspiracy theory beliefs. Politics & Gender, 1-10.

Cook, J., Lewandowsky, S., & Ecker, U. K. (2017). Neutralizing misinformation through inoculation: Exposing misleading argumentation techniques reduces their influence. PLoS ONE, 12(5), e0175799. https://doi.org/10.1371/journal.pone.0175799

De Leeuw, J., & Mair, P. (2009). Multidimensional scaling using majorization: Smacof in R. Journal of Statistical Software, 31(3), 1-30.

DiMaggio, P. (1997). Culture and cognition. Annual Review of Sociology, 23(1), 263-287.

Enders, A. M., & Smallpage, S. M. (2019). Who are conspiracy theorists? A comprehensive approach to explaining conspiracy beliefs. Social Science Quarterly, 100(6), 2017-2032.

Greenacre, M. J. (2010). Biplots in practice. Fundación BBVA.

Imhoff, R., & Lamberty, P. (2020). A bioweapon or a hoax? The link between distinct conspiracy beliefs about the coronavirus disease (COVID-19) outbreak and pandemic behavior. Social Psychological and Personality Science, 11(8), 1110-1118. https://doi.org/10.1177/1948550620934692

Ingram, M. (2020). The QAnon cult is growing and the media is helping. Columbia Journalism Review. https://www.cjr.org/the_media_today/the-qanon-conspiracy-cult-is-growing-and-the-media-is-helping.php

Jamieson, K. H., & Albarracín, D. (2020). The relation between media consumption and misinformation at the outset of the SARS-CoV-2 pandemic in the US. Harvard Kennedy School Misinformation Review. https://doi.org/10.37016/mr-2020-012

Jolley, D., & Paterson, J. L. (2020). Pylons ablaze: Examining the role of 5G COVID‐19 conspiracy beliefs and support for violence. British Journal of Social Psychology, 59(3), 628-640.

Lakoff, G. (2014). Mapping the brain’s metaphor circuitry: Metaphorical thought in everyday reason. Frontiers in Human Neuroscience, 8, 958.

Mair, P. (2018). Modern psychometrics with R. Springer International Publishing.

McGuire, W. J., & Papageorgis, D. (1961). The relative efficacy of various types of prior belief-defense in producing immunity against persuasion. The Journal of Abnormal and Social Psychology, 62(2), 327-337.

Miller, J. M. (2020). Psychological, political, and situational factors combine to boost COVID-19 conspiracy theory beliefs. Canadian Journal of Political Science/Revue canadienne de science politique, 53(2), 327-334.

Nyhan, B., Reifler, J., & Ubel, P. A. (2013). The hazards of correcting myths about health care reform. Medical Care, 51(2), 127-132.

Paivio, A. (1991). Dual coding theory: Retrospect and current status. Canadian Journal of Psychology/Revue canadienne de psychologie, 45(3), 255-287. https://doi.org/10.1037/h0084295

Roozenbeek, J., van der Linden, S., & Nygren, T. (2020). Prebunking interventions based on “inoculation” theory can reduce susceptibility to misinformation across cultures. Harvard Kennedy School Misinformation Review. https://doi.org/10.37016/mr-2020-008

Rosenblum, N. L., & Muirhead, R. (2019). A lot of people are saying: The new conspiracism and the assault on democracy. Princeton University Press.

Sternisko, A., Cichocka, A., & Van Bavel, J. J. (2020). The dark side of social movements: Social identity, non-conformity, and the lure of conspiracy theories. Current Opinion in Psychology, 35, 1-6. https://doi.org/10.1016/j.copsyc.2020.02.007

Swift, A. (2013, Nov. 15). Majority in U.S. still believe JFK killed in a conspiracy. Gallup. https://news.gallup.com/poll/165893/majority-believe-jfk-killed-conspiracy.aspx

Uscinski, J. E., Enders, A. M., Klofstad, C., Seelig, M., Funchion, J., Everett, C., Wuchty, S., Premaratne, K., & Murthi, M. (2020). Why do people believe COVID-19 conspiracy theories? Harvard Kennedy School Misinformation Review. https://doi.org/10.37016/mr-2020-015

Funding

Funded by the University of Miami College of Arts & Sciences and University of Miami COVID-19 Rapid Response Grant UM 2020–2241.

Competing Interests

There are no conflicts of interest to report.

Ethics

Approval to conduct research with human subjects was granted by the University of Miami Human Subject Research Office on June 3, 2020 (Protocol #20200673).

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

The data and code necessary to replicate all the findings in this article are available in the Center for Open Science repository: https://osf.io/xu2mv/