Peer Reviewed

Structured expert elicitation on disinformation, misinformation, and malign influence: Barriers, strategies, and opportunities


We used a modified Delphi method to elicit and synthesize experts’ views on disinformation, misinformation, and malign influence (DMMI). In a three-part process, experts first independently generated a range of effective strategies for combatting DMMI, identified the most impactful barriers to combatting DMMI, and proposed areas for future research. In the second stage, experts deliberated over the results of the first stage; in the final stage, they rated and ranked the strategies, barriers, and opportunities for future research. Research into intervention effectiveness was the strategy that received the highest level of agreement, while robust platform regulation was deemed the highest-priority strategy to pursue. Experts also identified distrust in institutions, biases, political divisions, relative inattention to non-English-language DMMI, and politicians’ use of DMMI as major barriers to combatting DMMI. Vulnerability to DMMI was chosen by experts as the top priority for future study. Experts also agreed with definitions of disinformation as deliberately false/misleading information and misinformation as unintentionally so.

Research Questions

- To what degree do experts agree with emerging “standard” definitions of misinformation and disinformation?

- According to experts, what are the most effective strategies and practices for combatting and building resilience to disinformation, misinformation, and malign influence (DMMI)?

- What are the biggest challenges (or barriers) to combatting and building resilience to DMMI?

- What areas/topics for future actionable research on DMMI do experts identify as most promising?

Essay Summary

- We conducted a structured expert elicitation to synthesize experts’ views on disinformation, misinformation, and malign influence (DMMI). Forty-two experts participated across all stages of the study, around half of whom were academics from various disciplines, while the remainder were professionals engaged with DMMI in some way (see Methods for more details).

- Experts largely agreed on definitions of the terms misinformation and disinformation, based on those found in Wardle and Derakhshan (2017).

- The top five priority strategies identified for combatting DMMI were: 1) establishing regulatory frameworks for digital platforms, 2) improving media literacy and critical thinking, 3) raising awareness in schools about DMMI’s dangers, 4) investing in research to understand and counter DMMI, and 5) fostering cross-sector collaboration.

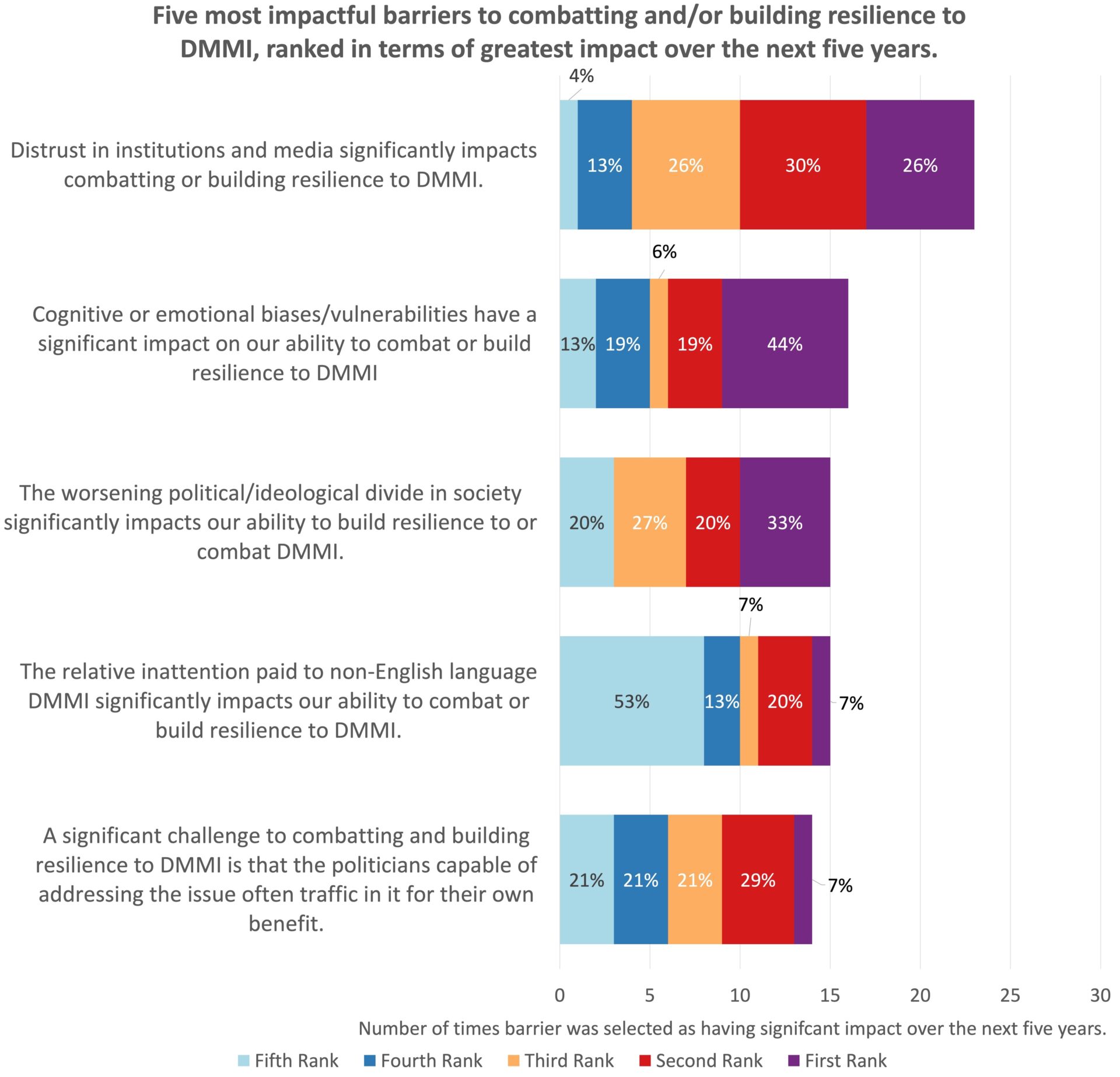

- The main barriers highlighted were distrust in institutions, cognitive/emotional biases, political divides, inattention to non-English-language DMMI, and the use of DMMI by politicians for personal gain.

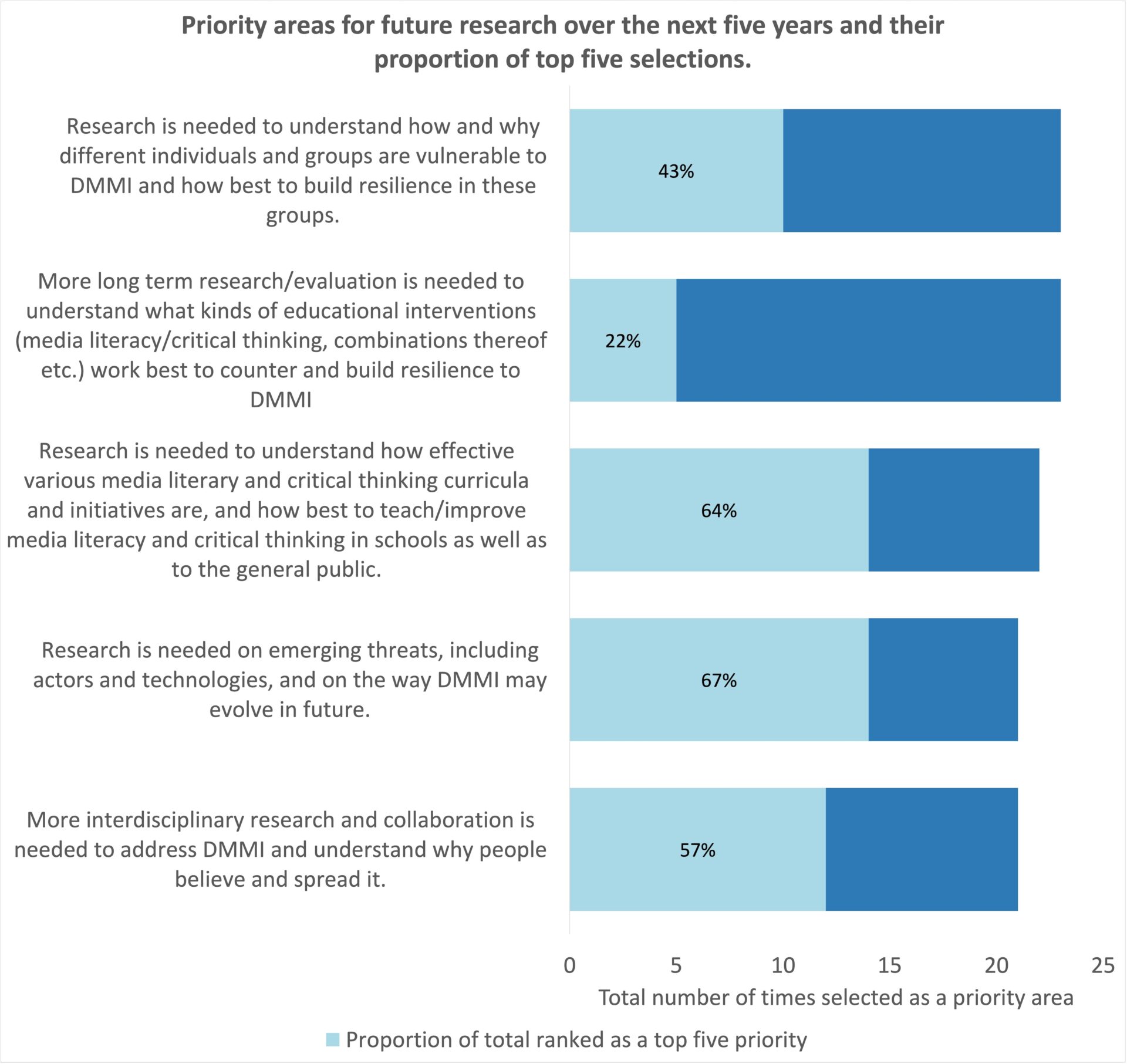

- The top five priority areas for future research were 1) research into individual and group vulnerabilities and building resilience to DMMI, 2) long-term research into the effectiveness of media literacy/critical thinking interventions, 3) research into the effectiveness of interventions on the general public, 4) research on emerging threats, including actors and technologies, and 5) interdisciplinary research to understand why people believe and spread DMMI.

Implications

DMMI are significant problems for civil societies around the world and are the focus of major research efforts and interventions by academics and professionals (journalists, educators, etc.). However, a major issue facing decision and policy makers, as well as academics, is which research areas and which interventions to prioritize. This is especially challenging because, unlike more mature areas of teaching, research, and practice (such as cyber-security, which has become a discipline in its own right), the study of DMMI is scattered among a variety of disciplines and professions ranging from psychology to journalism, each with its own specific perspectives, practices, and frameworks (Mahl et al., 2023; Nimmo & Hutchins, 2023). While recent research has highlighted major areas of agreement in the study of misinformation and related phenomena (Altay et al., 2023; Martel et al., 2024; Traberg et al., 2024), there is considerable disagreement about the most suitable interventions (Bateman & Jackson, 2024; Modirrousta-Galian & Higham, 2023; van der Linden et al., 2023). Thus, despite years of research, there remain pervasive research gaps, especially as to the efficiency of differing interventions (Aïmeur et al., 2023; Farhoudinia et al., 2023). All of this highlights the need for a more strategically intertwined research and intervention program. The question, then, is how best to identify priority areas of research and the most promising interventions, despite research gaps and uncertainties. We need to move fast and fix things.

Expert elicitations are commonly used for situations involving complex problems, where there is considerable uncertainty, a relative lack of data, and where there are active debates among experts on substantive issues, but where prediction, decisions, and/or policies need to be made rapidly (Fraser et al., 2023; Martin et al., 2012).

In this article, we do not seek to lay out a prescriptive roadmap for combatting and building resilience to DMMI, but, instead, seek to provide the aggregated, considered judgements of experts on key questions relating to DMMI to assist decision and policy makers in their tasks. The key questions we sought to answer were:

- What are the most effective strategies or practices for combatting and/or building resilience to DMMI?

- What are the important factors, challenges, or opportunities that impact combatting and building resilience to DMMI?

- What are the most important areas/topics for future actionable research on DMMI?

Additionally, previous research has highlighted disciplinary differences in definitions of misinformation as well as the use of disinformation and misinformation as umbrella terms. Based on our experience in the area, as well as on a review of the relevant literature, we have observed that many (though not all) researchers and practitioners have adopted, with slight variations, definitions of misinformation and disinformation that hinge on intent (Wardle & Derakhshan, 2017). Indeed, most experts in our survey agreed with these definitions, confirming our view that they are becoming the “standard” definitions, even if many experts continue to use the terms misinformation or disinformation as umbrella terms, and others prefer to leave aside the issue of intentionality (van der Linden, 2022). This highlights the need for better umbrella terms, hence our use of DMMI.

Many interventions and strategies to combat and build resilience to DMMI have been proposed, but there is scholarly disagreement about which interventions are the most effective, in what circumstances, and at what scale. On the other hand, when surveyed, experts have also shown a tendency to agree that most deployed interventions would be effective, at least to some degree (Altay et al., 2023). Calls for multiple approaches (Bak-Coleman et al., 2022) and whole-of-society approaches (e.g., Murphy, 2023) to the problems of DMMI are common in the literature but policy makers, platforms, and funding institutions need to be able to make decisions now, not only about which evidence-based interventions to prioritize but about what research to prioritize in such a whole-of-society and generational effort (Bateman & Jackson, 2024).

The results of our elicitation process are consistent with those found in Altay et al. (2023) in that our experts agreed that a wide variety of strategies for combatting and building resilience to DMMI could be effective. Additionally, when asked which interventions should be prioritized over the next five years, experts believed that the top priority should be a more robust regulatory framework for social media companies to be overseen and enforced by a dedicated standards body. This was followed by improving media and information literacy (including critical thinking) and by raising awareness about the dangers of DMMI (especially in schools).

There were also some more surprising results. For example, experts ranked improving support and outreach to communities fifth in potential efficacy, but only 14th in terms of priority. Moreover, although our panel thought investment in DMMI research would be the most effective strategy, they thought that regulating social media companies and improving education should be a higher priority. The implication here is that decision and policy makers should prioritize both regulatory and educational interventions, since both are regarded as effective and as a high priority.

Experts also identified many barriers to combatting and building resilience to DMMI. And, more so than any other barrier, experts agreed that the increasing use of DMMI by malicious state actors was a significant barrier to building resilience to DMMI. But when asked to prioritize which barriers should be focused on in the next five years, the number one choice was distrust in institutions and media. One implication here is that while malign state actors are seen as a major problem, the experts think that the best way to build resilience is to focus on building trust in institutions and the media.

Experts also identified and ranked priority areas for future research: research on emerging threats, research to better understand the effectiveness of media literacy and how best to teach it, interdisciplinary research on why people believe and spread dis/misinformation, and research into better audit methods for algorithms. However, of these, only research into media literacy/educational programs in schools was seen as a top five priority by the majority of our experts. The most frequently selected area to prioritize by our panel was research into how and why individuals/groups are vulnerable to DMMI. These results together suggest that research into interventions that focus on those most vulnerable to DMMI (specific individuals/groups and school children) would be promising avenues for future research.

The identification and ranking of barriers, strategies, interventions, and areas for future research by our experts are presented in more detail in the following section. These will help decision and policy makers better plan research programs and prioritize interventions.

Findings

Finding 1: Definitions of disinformation and misinformation.

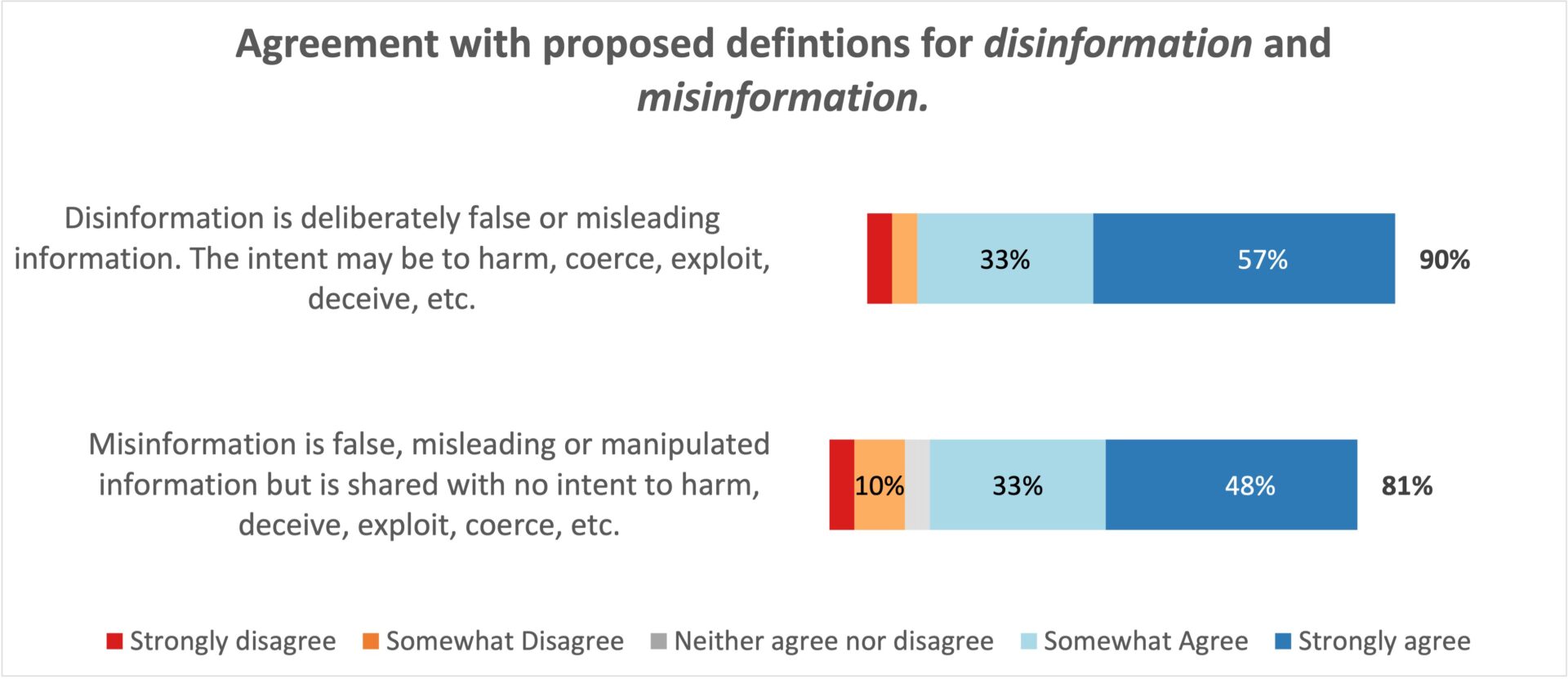

During the exercise, experts noted there was a need for consistent, agreed-upon definitions of key terms in the DMMI space. To address this, our final survey asked participants to indicate their level of agreement with definitions of disinformation and misinformation derived from Wardle and Derakhshan (2017) using a 5-point Likert-type scale ranging from 1 (strongly disagree) to 5 (strongly agree). As shown in Figure 1, we found broad agreement among our expert panel on the proposed definitions:

- Disinformation is deliberately false or misleading information. The intent may be to harm, coerce, exploit, deceive, etc.

- Misinformation is false, misleading, or manipulated information but is shared with no intent to harm, deceive, exploit, coerce, etc.

However, discussions between experts highlighted that these definitions are not perfect. For example, something may start as disinformation and be spread as misinformation, and vice versa. Interestingly, the level of support for the definition of disinformation was stronger than for misinformation. This may reflect changing patterns in the use of these terms, where misinformation may be used as an umbrella term, which does not necessarily imply intent but does not exclude it either. Nevertheless, these findings support the view that these definitions are emerging as the standard consensus definitions of these terms.

Finding 2: Strategies for combatting and/or building resilience to DMMI.

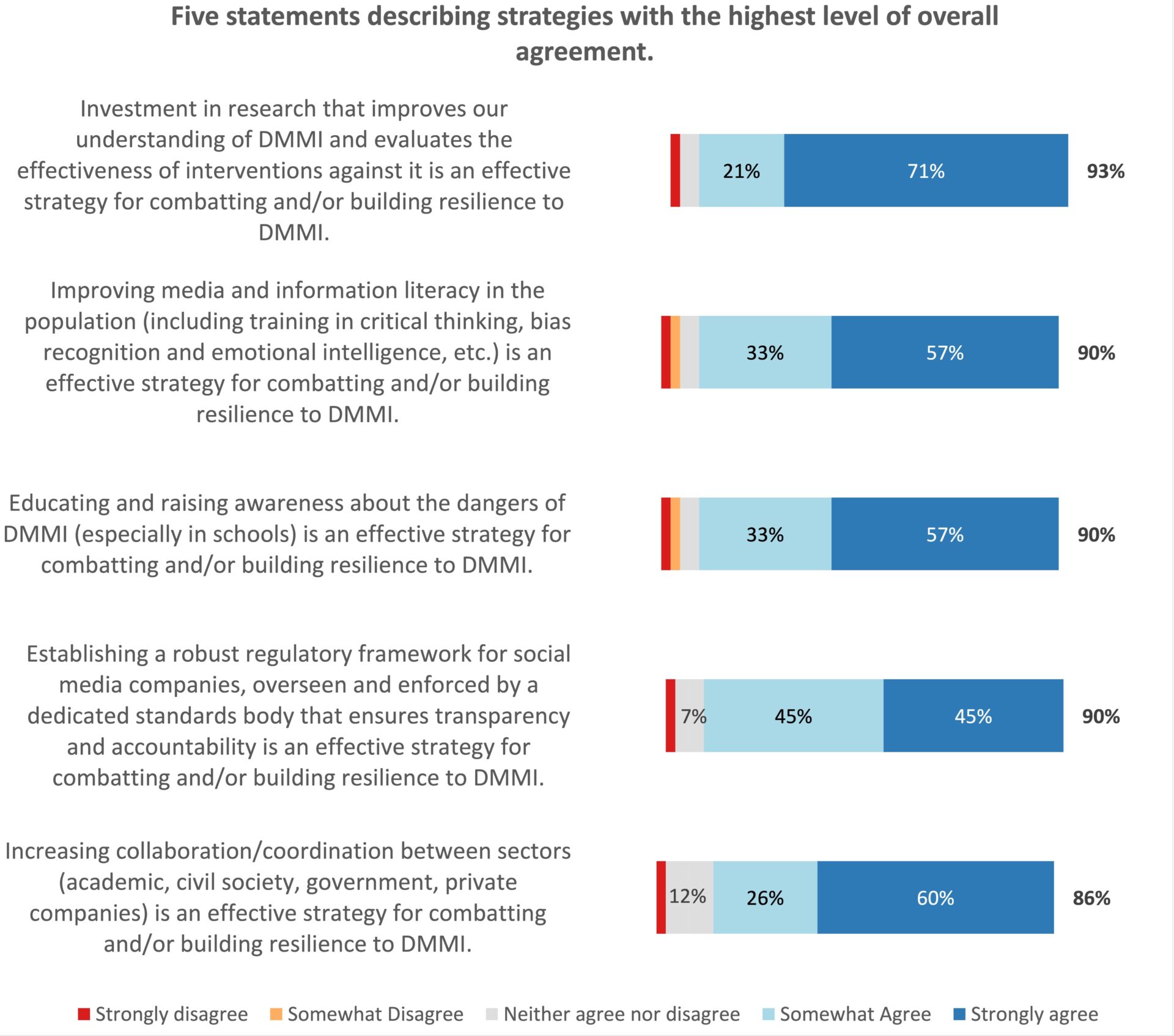

We then asked participants to express their level of agreement with statements describing strategies for combatting DMMI that emerged from Phases 1 and 2 of our process. Statements and their levels of agreement are shown in Figure 2. The strategy that garnered the most agreement (93%) was related to investing in research to improve our understanding of DMMI and to evaluate the effectiveness of interventions. Two strategies related to education and training—specifically, improving media and information literacy (90%) and raising awareness in schools about the dangers of DMMI (90%)—also received strong support. Additionally, 90% of experts agreed that establishing and enforcing a robust regulatory framework for social media companies is an effective strategy. A slightly lower, but still practically significant, level of agreement (86%) was observed for increasing collaboration and coordination between sectors, including academia, civil society, government, and private companies.
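The agreement percentages above can be read as the share of panelists who rated a statement at the agreeing end of the 5-point scale. The following is a minimal sketch of that calculation, assuming that "agreement" means a rating of 4 (agree) or 5 (strongly agree); the article does not state the exact cutoff, and the function name `percent_agreement` and the ratings below are hypothetical, not the study's data.

```python
def percent_agreement(ratings, threshold=4):
    """Percentage of ratings at or above `threshold` on a 1-5 Likert scale.

    The threshold of 4 (agree or strongly agree) is an assumption of this
    sketch, not a cutoff reported in the article.
    """
    if not ratings:
        raise ValueError("ratings must not be empty")
    if any(r not in (1, 2, 3, 4, 5) for r in ratings):
        raise ValueError("ratings must be integers from 1 to 5")
    agree = sum(1 for r in ratings if r >= threshold)
    return 100.0 * agree / len(ratings)


# Hypothetical responses from a 42-person panel (illustrative only):
# 20 strongly agree, 19 agree, 2 neutral, 1 disagree.
hypothetical_ratings = [5] * 20 + [4] * 19 + [3] * 2 + [2] * 1

print(round(percent_agreement(hypothetical_ratings)))  # 39 of 42 agree -> 93
```

With a panel of 42, each additional agreeing expert moves the figure by roughly 2.4 percentage points, so small differences between statements (for example, 90% versus 86%) correspond to only one or two panelists.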

Finding 3: Prioritizing effective strategies over the next five years.

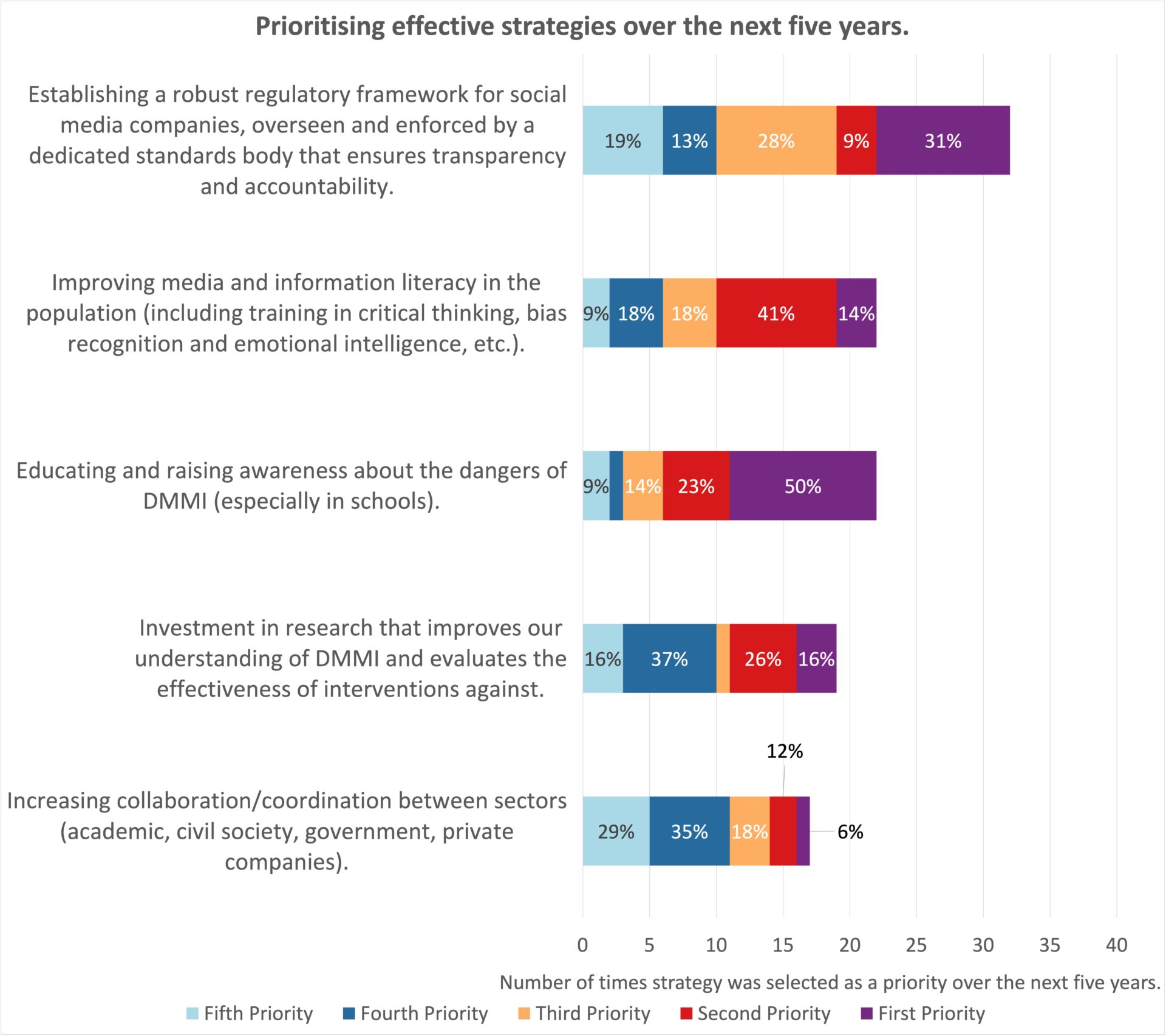

We asked panelists to select up to five strategies to prioritize over the next five years and then rank those selected in terms of priority (see Figure 3). The strategies most frequently prioritized were largely consistent with the top strategies identified earlier. However, there was a notable distinction: While “Establishing a robust regulatory framework for social media companies” ranked fourth in terms of agreement as an effective strategy—indicating broad consensus amongst experts on its potential impact—it was selected most frequently as the top priority for implementation over the next five years. This suggests that, despite not being seen as the most effective strategy overall, experts viewed it as the most urgent for practical action in the near term.

The ranking of strategies by priority over the next five years revealed further insights. For example, both education-related strategies—“Improving media and information literacy” and “Educating and raising awareness about the dangers of DMMI, especially in schools”—were selected as priorities 22 times each. However, “Educating and raising awareness” was ranked as the highest priority by 50% of participants who selected the strategy as a priority compared to only 14% for “improving media and information literacy.” Additionally, although “increasing collaboration between sectors” was the fifth most selected strategy, it was rarely ranked as the first, second, or third priority.
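Selection frequency and rank position measure different things, which is why two strategies chosen equally often can differ sharply in how often they are ranked first. A minimal sketch of how both summaries could be tallied from ranked selections follows; the function `summarize_priorities`, the short strategy labels, and the four example rankings are hypothetical and are not the study's data or analysis code.

```python
from collections import Counter


def summarize_priorities(rankings):
    """Summarize ranked selections.

    `rankings` holds one ordered list per expert, highest priority first,
    with at most five distinct strategies each. Returns a dict mapping each
    strategy to (times_selected, share_of_selectors_who_ranked_it_first).
    """
    selected = Counter()
    ranked_first = Counter()
    for ordered in rankings:
        if len(ordered) > 5 or len(set(ordered)) != len(ordered):
            raise ValueError("each expert ranks up to five distinct strategies")
        selected.update(ordered)
        if ordered:
            ranked_first[ordered[0]] += 1
    return {s: (n, ranked_first[s] / n) for s, n in selected.items()}


# Hypothetical rankings from four experts (illustrative only).
rankings = [
    ["awareness", "literacy", "regulation"],
    ["regulation", "literacy"],
    ["awareness", "regulation"],
    ["literacy", "awareness", "research"],
]

summary = summarize_priorities(rankings)
for strategy, (count, first_share) in sorted(
    summary.items(), key=lambda kv: -kv[1][0]
):
    print(f"{strategy}: selected {count}x, ranked first by {first_share:.0%} of selectors")
```

In this toy data, "awareness" and "literacy" are each selected three times, yet "awareness" is ranked first twice as often, mirroring the pattern reported for the two education-related strategies.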

Finding 4: Factors that significantly impact the ability to combat and/or build resilience to DMMI.

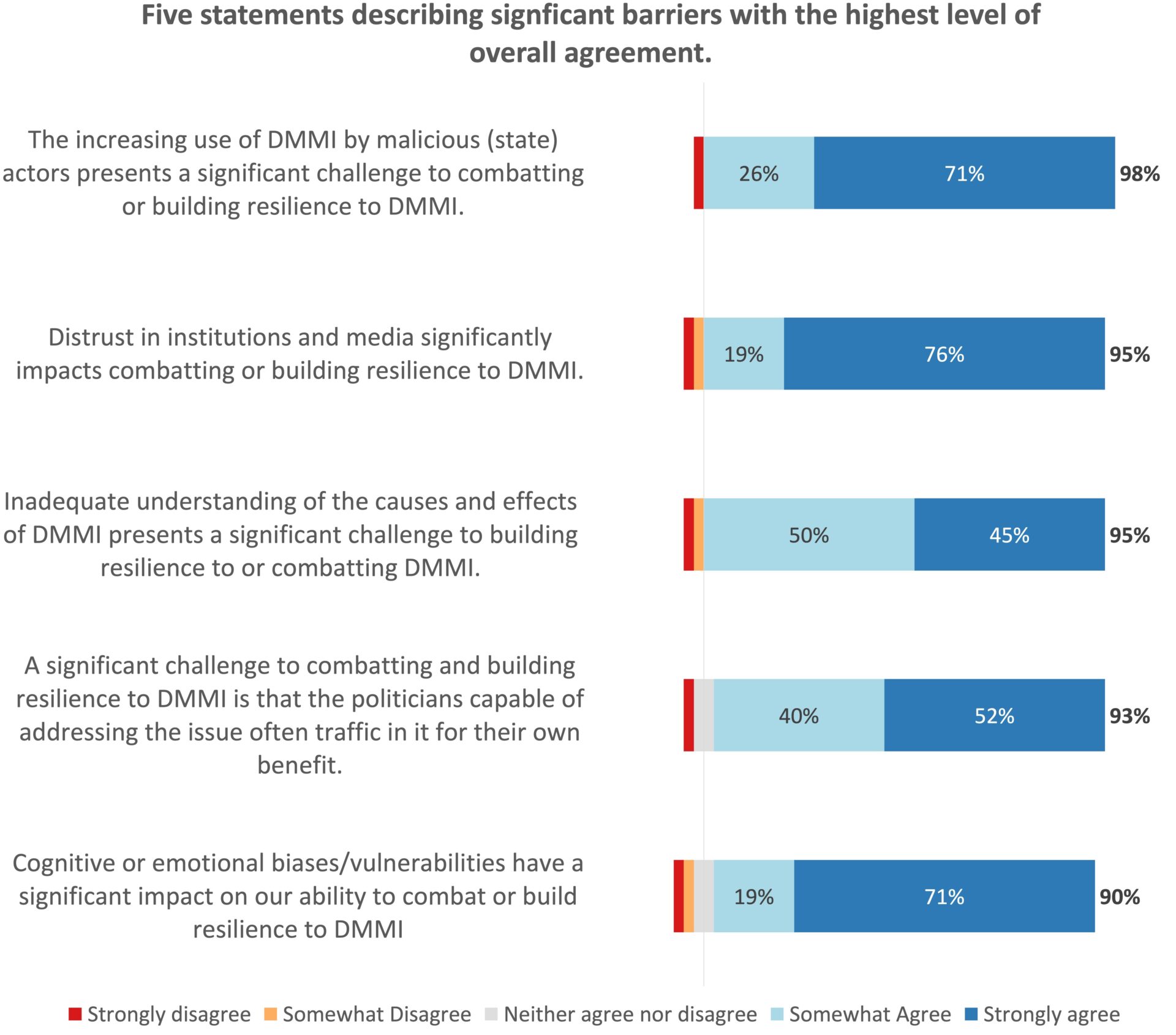

We asked participants to express their level of agreement with the various barriers to combatting DMMI that emerged from Phases 1 and 2 of our process. As expected, many barriers were identified, and many of these received broad levels of agreement. The top five barriers, based on level of agreement, were:

- The increasing use of DMMI, especially by state actors

- Inadequate understanding of the causes and effects of DMMI

- Distrust in institutions and the media

- Politicians who might be capable of addressing the issue often traffic in DMMI for their own benefit

- Cognitive and emotional biases and vulnerabilities

Interestingly, there was substantive discussion between experts of the information deficit model—problems with it, and whether it nevertheless captured some important aspects of the problem. In the online survey, these two positions, summarized as “reliance on the deficit model is a barrier to combatting or building resilience” and “the information deficit model, while incomplete, contributes to a useful understanding of DMMI” received very similar, but low levels of agreement, with approximately 43% and 42% respectively.

Finding 5: Ranking barriers that most impact combatting and building resilience to DMMI.

In addition to ranking barriers by the level of agreement among experts, we asked participants to identify the five most important or impactful barriers for the next five years and to rank them in order of significance. This exercise yielded a somewhat different list from the previous findings, with the five most frequently selected barriers being:

- Distrust in institutions and media

- Cognitive and emotional biases and vulnerabilities

- The worsening political/ideological divide in society

- The relative inattention paid to non-English language DMMI

- The use of DMMI by the very politicians who might be capable of addressing the problem

Finding 6: Areas for future research to be prioritized over the next five years.

A common refrain among experts is that more research is needed on disinformation, misinformation, and malign influence. After eliciting candidate research areas in the first phase, we asked experts to select which areas should be prioritized over the next five years and then to rank those selected in order of priority. The areas selected most often were as follows (see Figure 6):

- Research is needed to understand how and why different individuals and groups are vulnerable to DMMI and how best to build resilience in these groups.

- More long-term research/evaluation is needed to understand what kinds of educational interventions (media literacy/critical thinking, combinations thereof etc.) work best to counter and build resilience to DMMI.

- Research is needed to understand how effective various media literacy and critical thinking curricula and initiatives are, and how best to teach/improve media literacy and critical thinking in schools as well as to the general public.

- Research is needed on emerging threats, including actors and technologies, and on the way DMMI may evolve in future.

- More interdisciplinary research and collaboration is needed to address DMMI and understand why people believe and spread it.

Interestingly, although both options 1 and 2 were selected as an area to prioritize over the next five years 23 times, only 22% ranked 2 in their “top five.” This suggests that although both 1 and 2 were seen as priority areas for future research, experts thought 1 was a higher priority than 2. Moreover, while both 2 and 3 refer to research investigating the effectiveness of media literacy/critical thinking interventions, 2 is specifically about research into their long-term effectiveness. This result suggests that our experts recognize the importance of prioritizing research into these interventions but that long-term research is not as much of a priority for them.

Methods

Overview

Our study utilized a modified Delphi method, first described in Barnett et al. (2021), involving three phases to gather and refine expert judgments. Delphi processes are well-established elicitation techniques and offer reliable means of structuring group communication so that individuals, as a whole, can solve a common problem (Linstone & Turoff, 1975). Like all Delphi processes, our process shares the fundamentals of the method in that 1) it imposes/allows some level of anonymity to avoid undesirable psychological effects, 2) it is iterative, unfolding over multiple rounds, 3) between rounds experts are informed of other experts’ responses in some way, and 4) group responses are statistically summarized at the end of the process (Rowe & Wright, 1999).

However, unlike most existing Delphis, our process has an exploratory first phase and a deliberative second phase. (Delphis were originally designed to mitigate unwanted effects arising from committee processes by eliminating all interaction between experts [Linstone & Turoff, 1975; Rowe & Wright, 2001]. Thus, a distinct discussion phase is avoided by almost all Delphi designs. A notable exception is the IDEA Protocol, which is a quantitative Delphi design with a facilitated discussion phase.) An exploratory first phase allows the experts themselves to proffer the range of topics under consideration, rather than researchers predetermining which topics will be ultimately evaluated. This is especially important in emerging areas like DMMI, where it would be difficult to properly justify any predetermined list of, for example, effective strategies for combatting and/or building resilience to DMMI. To ensure the full range of potential topics is captured, our process starts with an exploratory phase.

Our process also includes a deliberative second phase, which helps experts reach a shared understanding of the topics before evaluating them in the final phase. Facilitating expert interaction is especially important when the experts represent a range of disciplines/professions, as ultimately experts will be asked to evaluate judgments from experts outside their own area. A deliberative second phase allows experts to share supporting arguments and evidence, ask questions, resolve ambiguities, and ultimately gain the understanding required to properly evaluate judgments outside their expertise in the final phase.

Participants

We invited 304 experts from diverse fields via targeted conference and institutional mailing lists, professional networks, and authorship in relevant publications to participate in all phases. We used a loose definition of expert (and made clear our definition in the invitation) as anyone with “expertise in combatting, mitigating, and building resilience to disinformation, misinformation, and malign influence.” Participants were encouraged to forward the invitation within their professional networks but to refrain from sharing it on social media. Our recruitment efforts yielded 66 experts (25 female) from 20 different countries, all of whom provided their informed consent to participate. Experts averaged 7.7 years of experience and represented a diverse array of fields (see Table 1). The sample can be considered representative due to the strategic recruitment of experts from a range of fields related to disinformation, selected through targeted channels and professional networks that ensured diversity in geography, gender, and sector.

| Sector | Field/Profession | Number of Experts |
| --- | --- | --- |
| Academic | Psychology | 8 |
| | Computer Science | 4 |
| | International Relations | 2 |
| | Accounting | 1 |
| | Anthropology | 1 |
| | Information Science | 1 |
| | Political Science | 1 |
| | Philosophy | 1 |
| | Sociology | 1 |
| | Behavioral Science | 1 |
| Professional | Non-Profits | 3 |
| | Policy Analysis | 3 |
| | Journalism | 3 |
| | Education | 3 |
| | Media | 2 |
| | National Security | 2 |
| | Chaplaincy | 1 |
| | Fact Checking | 1 |
| | Public Relations | 1 |
| | Consultancy | 1 |

Design and Procedure

Phase 1: Generate. Experts were asked to submit up to five ideas in response to each of the three questions concerning effective DMMI strategies, key challenges, and crucial areas for future research (up to 15 ideas across the three questions). We received 791 ideas, which were thematically analyzed by the research team using an inductive, or bottom-up, approach in which themes are inferred from codes rather than codes being fitted to predetermined themes (Braun & Clarke, 2006). Next, we drafted a synthesis statement for each theme identified in the data. A total of 84 statements were generated in Phase 1.

Phase 2: Discuss. Synthesis statements were uploaded to the online discussion forum Loomio as separate “pages,” providing a self-contained space to discuss the statement. The forum remained open 24 hours a day for 10 days to give experts a chance to contribute to or review the discussion. Of the 66 experts invited to the forum, 48 accepted the invitation, and of those 48, 34 were actively engaged in discussion contributing at least one comment. In total, 243 comments were collected at the conclusion of Phase 2.

Phase 3: Assess. Following the discussion, statements were refined based on participant feedback, and a final survey was circulated. Participants rated their agreement with each of the 84 statements using a 5-point Likert scale (strongly disagree to strongly agree) and prioritized statements for future action. This phase concluded with 42 participants providing their evaluations, which informed the final analysis of the study.
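The participation figures reported across the three phases can be put on a common footing with a little arithmetic. The sketch below uses only counts stated in this article; expressing the later stages as a share of the 66 consenting experts is a choice made for this illustration, not a rate reported by the authors, and the helper `share_of` is hypothetical.

```python
# Counts as reported in the Participants and Design and Procedure sections.
INVITED = 304
CONSENTED = 66
LATER_STAGES = {
    "Joined the Phase 2 forum": 48,
    "Commented at least once in Phase 2": 34,
    "Completed the Phase 3 survey": 42,
}


def share_of(count, base):
    """Return `count` as a percentage of `base`, rounded to one decimal."""
    return round(100.0 * count / base, 1)


# Share of invited experts who consented to take part.
response_rate = share_of(CONSENTED, INVITED)

# Each later stage as a share of the consenting experts (assumed base).
retention = {stage: share_of(n, CONSENTED) for stage, n in LATER_STAGES.items()}

print(f"Response rate: {response_rate}% of invited experts consented")
for stage, pct in retention.items():
    print(f"{stage}: {pct}% of consenting experts")
```

Note that the stages are not strictly nested: more experts completed the Phase 3 survey (42) than commented in Phase 2 (34), so some panelists evidently rated statements without having posted in the forum.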

While our study offers a comprehensive aggregation of expert opinions, several factors limit the generalizability of our results. First, since our sampling was non-random and purposeful, the sample favors experts within established professional networks, potentially underrepresenting less prominent but equally valid perspectives. Second, the majority of our sample was located in Australia, which may limit how generalizable the results are to other geographic areas. Finally, because of the highly dynamic nature of disinformation, misinformation, and malign influence, results may need to be reassessed in light of emerging technologies or actors.

Nevertheless, our structured expert elicitation has provided valuable insights into the challenges and strategies for combatting DMMI. The experts agreed on key definitions and identified priority strategies, such as establishing regulatory frameworks for digital platforms, enhancing media literacy, and promoting cross-sector collaboration. Significant barriers were highlighted, including distrust in institutions, cognitive and emotional biases, and political exploitation of DMMI. Areas for future research were also identified, including research into emerging threats and the effectiveness of media literacy programs. Overall, these findings outline the need for a research program and priority interventions that include a mix of regulation, media and information literacy programs (and additional research into how best they can be effective), institutional trust building, and a priority focus on research into how best to build resilience in the groups most vulnerable to disinformation, misinformation, and malign influence.


Bibliography

Aïmeur, E., Amri, S., & Brassard, G. (2023). Fake news, disinformation and misinformation in social media: A review. Social Network Analysis and Mining, 13(1), 30. https://doi.org/10.1007/s13278-023-01028-5

Altay, S., Berriche, M., Heuer, H., Farkas, J., & Rathje, S. (2023). A survey of expert views on misinformation: Definitions, determinants, solutions, and future of the field. Harvard Kennedy School (HKS) Misinformation Review, 4(4). https://doi.org/10.37016/mr-2020-119

Bak-Coleman, J. B., Kennedy, I., Wack, M., Beers, A., Schafer, J. S., Spiro, E. S., Starbird, K., & West, J. D. (2022). Combining interventions to reduce the spread of viral misinformation. Nature Human Behaviour, 6(10), 1372–1380.

Barnett, A., Primoratz, T., de Rozario, R., Saletta, M., Thornburn, L., & van Gelder, T. (2021). Analytic rigour in intelligence. The University of Melbourne. https://bpb-ap-se2.wpmucdn.com/blogs.unimelb.edu.au/dist/8/401/files/2021/04/Analytic-Rigour-in-Intelligence-Approved-for-Public-Release.pdf

Bateman, J., & Jackson, D. (2024). Countering disinformation effectively: An evidence-based policy guide. Carnegie Endowment for International Peace. https://carnegie-production-assets.s3.amazonaws.com/static/files/Carnegie_Countering_Disinformation_Effectively.pdf

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa

Farhoudinia, B., Ozturkcan, S., & Kasap, N. (2023). Fake news in business and management literature: A systematic review of definitions, theories, methods and implications. Aslib Journal of Information Management. Advance online publication. https://doi.org/10.1108/AJIM-09-2022-0418

Fraser, H., Bush, M., Wintle, B. C., Mody, F., Smith, E. T., Hanea, A. M., Gould, E., Hemming, V., Hamilton, D. G., Rumpff, L., Wilkinson, D. P., Pearson, R., Thorn, F. S., Ashton, R., Willcox, A., Gray, C. T., Head, A., Ross, M., Groenewegen, R., … Fidler, F. (2023). Predicting reliability through structured expert elicitation with the repliCATS (Collaborative Assessments for Trustworthy Science) process. PLOS ONE, 18(1), e0274429. https://doi.org/10.1371/journal.pone.0274429

Linstone, H. A., & Turoff, M. (Eds.). (1975). The Delphi method: Techniques and applications. Addison-Wesley Publishing Co.

Mahl, D., Schäfer, M. S., & Zeng, J. (2023). Conspiracy theories in online environments: An interdisciplinary literature review and agenda for future research. New Media & Society, 25(7), 1781–1801. https://doi.org/10.1177/14614448221075759

Martel, C., Allen, J., Pennycook, G., & Rand, D. G. (2024). Crowds can effectively identify misinformation at scale. Perspectives on Psychological Science, 19(2), 477–488. https://doi.org/10.1177/17456916231190388

Martin, T. G., Burgman, M. A., Fidler, F., Kuhnert, P. M., Low‐Choy, S., McBride, M., & Mengersen, K. (2012). Eliciting expert knowledge in conservation science. Conservation Biology, 26(1), 29–38. https://doi.org/10.1111/j.1523-1739.2011.01806.x

Modirrousta-Galian, A., & Higham, P. A. (2023). Gamified inoculation interventions do not improve discrimination between true and fake news: Reanalyzing existing research with receiver operating characteristic analysis. Journal of Experimental Psychology: General, 152(9), 2411–2437. https://doi.org/10.1037/xge0001395

Murphy, B. (2023). Disinformation and national power. In B. Murphy (Ed.), Foreign disinformation in America and the U.S. government’s ethical obligations to respond (pp. 81–102). Springer Nature Switzerland. https://doi.org/10.1007/978-3-031-29904-9_7

Nimmo, B., & Hutchins, E. (2023). Phase-based tactical analysis of online operations. Carnegie Endowment for International Peace. https://carnegieendowment.org/research/2023/03/phase-based-tactical-analysis-of-online-operations?lang=en

Patton, M. Q. (2014). Qualitative research & evaluation methods: Integrating theory and practice. Sage Publications.

Rowe, G., & Wright, G. (1999). The Delphi technique as a forecasting tool: Issues and analysis. International Journal of Forecasting, 15(4), 353–375. https://doi.org/10.1016/S0169-2070(99)00018-7

Rowe, G., & Wright, G. (2001). Expert opinions in forecasting: The role of the Delphi technique. In J. S. Armstrong (Ed.), Principles of forecasting: A handbook for researchers and practitioners (pp. 125–144). Springer. https://doi.org/10.1007/978-0-306-47630-3_7

Traberg, C. S., Roozenbeek, J., & van der Linden, S. (2024). Gamified inoculation reduces susceptibility to misinformation from political ingroups. Harvard Kennedy School (HKS) Misinformation Review, 5(2). https://doi.org/10.37016/mr-2020-141

van der Linden, S. (2022). Misinformation: Susceptibility, spread, and interventions to immunize the public. Nature Medicine, 28(3), 460–467. https://doi.org/10.1038/s41591-022-01713-6

van der Linden, S., Thompson, B., & Roozenbeek, J. (2023). Editorial—The truth is out there: The psychology of conspiracy theories and how to counter them. Applied Cognitive Psychology, 37(2), 252–255. https://doi.org/10.1002/acp.4054

Wardle, C., & Derakhshan, H. (2017). Information disorder: Toward an interdisciplinary framework for research and policymaking (Vol. 27). Council of Europe. https://edoc.coe.int/en/media/7495-information-disorder-toward-an-interdisciplinary-framework-for-research-and-policy-making.html

Funding

Funding for this research was provided by the Australian Government’s Department of Foreign Affairs and Trade, Cyber and Critical Technology Cooperation Program.

Competing Interests

The authors declare no competing interests.

Ethics

Participants provided informed consent before participating in any activity. This project has human research ethics approval from The University of Melbourne [ID 27056]. Participants were free to either ignore the question regarding their gender, select either male or female, enter their own text to self-describe, or select “prefer not to say.”

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

All materials needed to replicate this study are available via the Harvard Dataverse https://doi.org/10.7910/DVN/Q2A1RF and OSF https://osf.io/bvxqc/.