Peer Reviewed

Correcting campaign misinformation: Experimental evidence from a two-wave panel study

In this study, we used a two-wave panel and a real-world intervention during the 2017 UK general election to investigate whether fact-checking can reduce beliefs in an incorrect campaign claim, source effects, the duration of source effects, and how predispositions including political orientations and prior exposure condition them. We find correction effects in the short term only, but across different political divisions and various prior exposure levels. We discuss the significance of independent fact-checking sources and the UK partisan press in facilitating effects.

Research Questions

- How do individuals respond to fact-checking? Do these effects endure over time?

- Are independent fact checkers more successful in correcting beliefs than partisan sources?

- Do predispositions such as initial beliefs and (party-) political orientation make individuals resistant to fact-checking?

- Does repeated prior exposure to a false claim render fact checks ineffective?

Essay Summary

- In an online survey experiment with 1,841 participants, we tested the effect of fact-checking on a campaign claim during the UK 2017 general election that EU immigration hurts the National Health Service. We used an edited version of Full Fact’s fact check which provided an alternative causal account and attributed the fact check to either an independent organization or to one of two partisan broadsheets, The Guardian (left-wing) or The Daily Telegraph (right-wing). The control group was simply asked again about the claim’s believability.

- The fact check led to people correcting their beliefs. However, in the follow-up wave, we found that these effects did not endure. Overall, the source of the correction had little impact.

- Predispositions and prior exposure do explain initial beliefs but do not appear to moderate the effects of fact-checking. One notable exception is that across the Brexit divide, “leavers” receiving The Guardian treatment were unlikely to adjust beliefs. They did so, however, when receiving an independent fact check or The Daily Telegraph treatment. Partisanship did not moderate source effects in a similar way.

- Fact-checking real campaign claims often involves interpreting causality, not just correcting facts. EU immigration does contribute to pressure on the NHS “but its annual impact is small compared to other factors” (Full Fact, 2016). Our study demonstrates the effectiveness of nuanced corrective interventions across political divides.

Implications

The analysis we present in this paper contributes to research on how to correct misbeliefs, particularly those arising from misleading political party campaign messages. Recently documented strategies include “inoculation” by raising awareness of how misinformation is produced (Maertens et al., 2021), news literacy tweets (Vraga et al., 2022), and platform interventions such as social media warnings (Clayton et al., 2020). We build on research exploring expert corrections specifically, produced by independent think tanks and the legacy media, with the latter remaining “the dominant source of political fact-checking” especially in Western Europe (Graves & Cherubini, 2016, p. 8). To this end, we used a real-world intervention but varied the source of the fact check to clarify source effects.

We used a fact check by Full Fact, a well-established fact-checking agency in the United Kingdom, to clarify the relationship between rising immigration levels and financial strain on the UK National Health Service (NHS). This was a prominent campaign claim in the 2017 General Election, and the fact check provided a coherent alternative explanation (see https://fullfact.org/europe/eu-immigration-and-pressure-nhs). “Coherence” refers to the format of the reality check, defined by Walter and Murphy (2018) as “corrective messages that integrate retractions with alternative explanations (i.e., coherence)” (p. 436). In the real world, reality checks and fact-checking often are not straightforwardly about facts such as whether unemployment is going up or down but about their interpretation and causality. In our case, the correction did not question the level of EU immigration and its costs but provided an overview of the different sources of financial pressure on the NHS, rendering false the connection between immigration and “unprecedented pressure.” In their meta-analysis, Walter and Murphy (2018) found these kinds of coherent alternative explanations to be effective but also that source credibility matters. We, therefore, attributed the same fact-check content (text plus graph) to different sources: an independent fact-checking organisation or one of the two trusted partisan broadsheets, The Telegraph and The Guardian.

Our study, we argue, accurately reflects the type of misperceptions voters most likely hold (causal attributions about policy problems) and how they might perceive fact checks: another piece of information to evaluate against predispositions. The corrective may be inconsistent with the reasoning underpinning prior beliefs, either correct (see partisan “cheerleading,” Jerit & Zhao, 2020) or incorrect. We, therefore, investigate the differential impacts of fact-checking based on prior attitudes instead of focusing on average treatment effects only. As for incorrect prior beliefs, motivated reasoning accounts suggest that the desire to reach conclusions supportive of prior attitudes or beliefs may limit or override any accuracy motivations (Graves & Amazeen, 2019; Nyhan & Reifler, 2010). As a result, partisan-motivated reasoners are more likely to experience negative affect (Munro & Ditto, 1997), to regard the evidence as weak, and to engage in counterargument and disconfirmation (Edwards & Smith, 1996). As for correct prior beliefs, they may be based on uncertain evidence or may be merely guesses, in which case coherent fact checks are still helpful. Finally, more frequent prior exposure to incorrect information may undermine the effectiveness of fact-checking (e.g., Lewandowsky et al., 2017). The hypotheses we tested in this study therefore concern source effects (the source of the correction), predispositions including initial beliefs and partisanship, and familiarity with the false claim prior to exposure to the corrective.

We found that it is possible to correct misbeliefs about complex causal relationships, but these effects, like those of other types of corrections, do not endure. Our evidence is thus more in line with arguments on heuristic “fluency,” which suggest that as time progresses after being corrected, individuals will revert to their pre-existing beliefs (Berinsky, 2017; see also Swire-Thompson et al., 2023). Further research might investigate repetition of narrative correctives to better understand the endurance of corrections.

Despite the limited durability of corrections, we do provide encouraging findings for fact checkers including journalists, educators, and policymakers. While early research found evidence of unintended “backfire” effects from fact-checking (e.g., Nyhan & Reifler, 2010), we found no evidence that predispositions such as strong initial beliefs, familiarity with the false claim, or partisanship would render the short-term impacts of fact-checking ineffective. The campaign claim that rising European Union immigration puts unprecedented financial pressure on the NHS “that has forced doctors to take on 1.5 million extra patients in three years” (Full Fact, 2016) is most consistent with the worldviews of citizens who had voted for leaving the EU in the Brexit referendum. Yet these respondents were equally likely to be corrected if an independent fact checker or a right-of-centre broadsheet (The Telegraph) endorsed the corrective. We also investigated partisan subgroup differences. Contrary to Prike et al. (2023), we found no partisan subgroups that would be more resistant to these corrections, including those who were most likely to believe the claim: voters of the UK Independence Party, Plaid Cymru, and the Conservatives. (On a five-point scale ranging from 1 (strongly believe) to 5 (strongly disbelieve), mean scores of initial belief are M = 1.71, 2.16, and 2.28, respectively.)

Future research might also directly compare the efficacy of the type of “enhanced” corrective in our treatments with a simple correction of facts. Sangalang et al. (2019) argue that correctives need to incorporate multiple sides rather than simply stating the facts. Our evidence is consistent with the short-term effectiveness of such a corrective, but we cannot say that it is any more effective than a simple correction of the facts.

Findings

Finding 1: Fact-checking led to correction of false beliefs immediately after treatment delivery, but these effects did not endure.

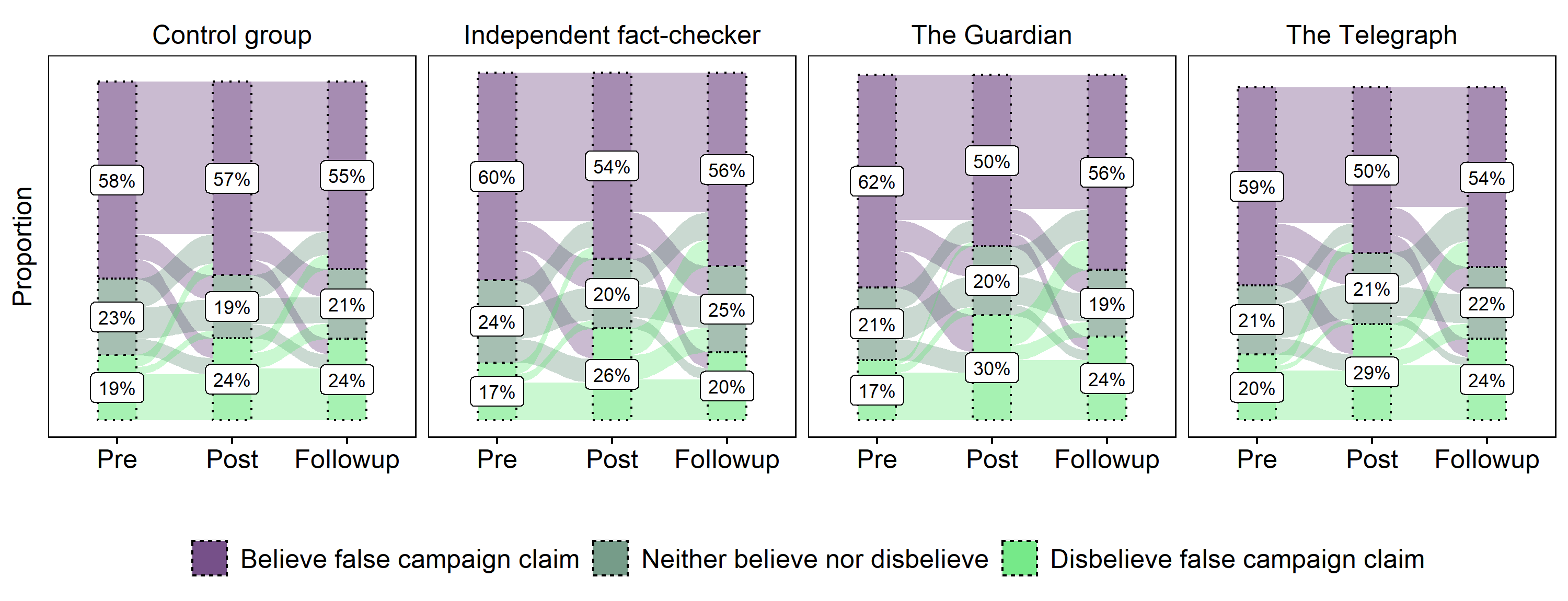

The Sankey diagram in Figure 1 shows descriptively the proportion of respondents believing or disbelieving the claim that the “NHS is under unprecedented pressure due to an influx of EU migrants that has forced doctors to take on 1.5 million extra patients in three years.” This was a prominent issue during the campaign (Cowley & Kavanagh, 2018) about which respondents were likely to have pre-existing views. We measured belief at three different points for each respondent: pre-treatment, immediately post-treatment (or simply at the end of the survey for the control group), and a week after the treatment. In the three treatment groups, we presented the same fact check but varied whether it was attributed to an independent fact-checking organization, The Daily Telegraph (right-wing newspaper), or The Guardian (left-wing newspaper). The fact check itself, featuring both an explanatory text and a graph, corrected the misinformation by comparing the cost associated with immigration to the costs from other pressures, such as ageing, new technologies and expanded treatment, and rising costs and wages (see Appendix Figure A1).

The observed proportions suggest that believability of the false claim across the treatment groups decreases consistently when compared to the control group. These are relatively small effects, ranging from 6% of respondents correcting their beliefs in the independent fact checker group up to 12% in the Guardian group. In the follow-up wave, the effects dissipate. We show further descriptive statistics in the Appendix Tables A2 and A3.

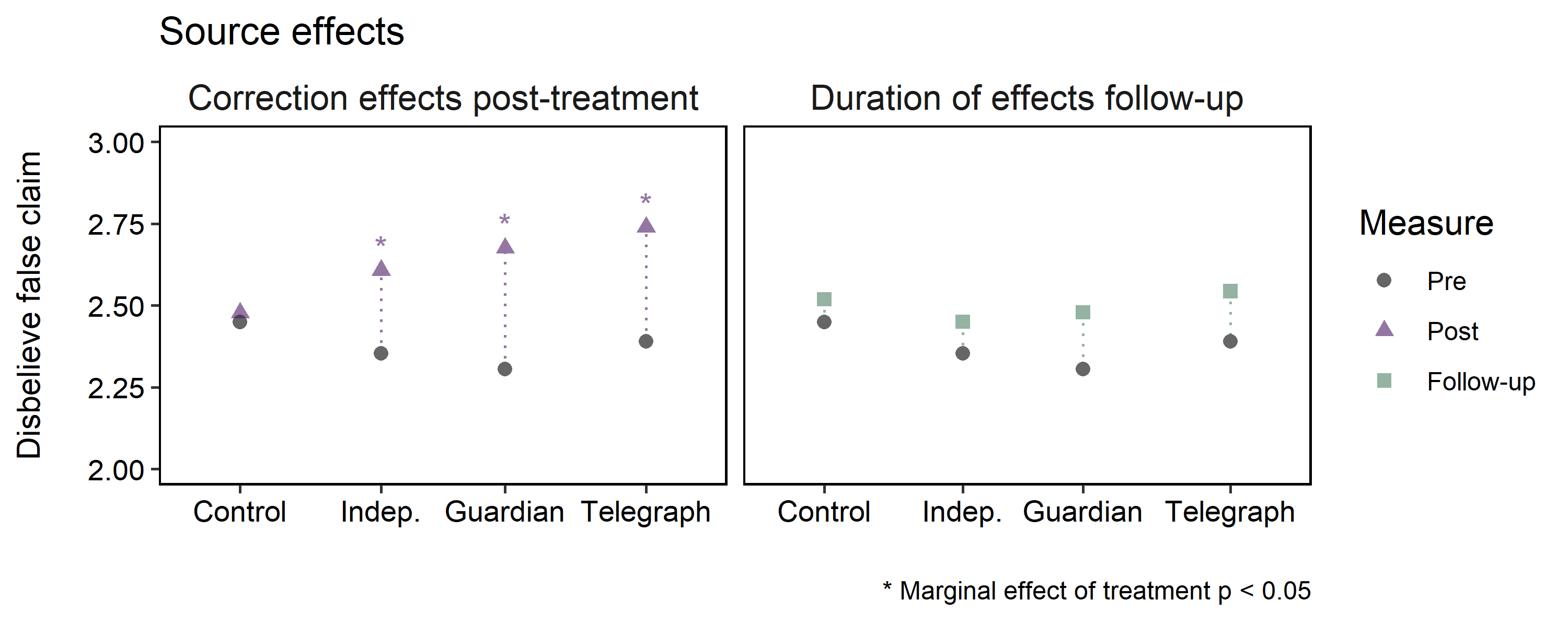

We examine these changes further with two sets of regression models estimating Average Treatment Effects, that is, the average change in a respondent’s belief measured on the original five-point scale. We find significant treatment effects across the three sources, but no enduring effects in the follow-up wave. We do not find a significant difference across treatment groups, suggesting that in the short term a correction is just as effective when attributed to an independent source as when attributed to either newspaper. We note, however, that the magnitude of effects is smaller for the independent fact checker than for the newspapers. These are small differences, suggesting that independent fact checkers are still influential; the pattern is nonetheless consistent with the finding of Holman and Lay (2019; see also Wittenberg & Berinsky, 2020, p. 170) that nonpartisan fact-checking led to “backfire” effects among U.S. Republicans. We show the Average Treatment Effects in Figure 2 as well as in Table 1.

| | DV: Correct in post measure | DV: Correct in follow-up measure |
|---|---|---|
| Intercept | 1.07*** | 1.24*** |
| | (0.07) | (0.07) |
| Pre-measure | 0.58*** | 0.52*** |
| | (0.02) | (0.02) |
| Treatment: Independent fact checker | 0.19*** | -0.02 |
| | (0.07) | (0.07) |
| Treatment: The Guardian | 0.28*** | 0.04 |
| | (0.07) | (0.07) |
| Treatment: The Telegraph | 0.30*** | 0.06 |
| | (0.07) | (0.07) |
| R2 | 0.29 | 0.25 |
| Adj. R2 | 0.29 | 0.25 |
| Num. obs. | 1841 | 1841 |
| RMSE | 1.07 | 1.06 |

***p < 0.01, **p < 0.05, *p < 0.1. Standard errors in parentheses.

Notes: OLS models, unstandardised coefficients. Dependent variable is holding correct belief about claim linking financial pressure on NHS to rising levels of immigration (see also Appendix for exact question wording), measured on a five-point scale ranging from 1 (strongly believe) to 5 (strongly disbelieve) false claim. Treatment is exposure to fact-checking (see Research Design) attributed to an “independent fact-checking website” or “The Telegraph” or “The Guardian” with post measure taken directly after delivery of treatment, and follow-up measure an average 16 days after treatment. Survey data collected in May–June 2017 on a representative sample of the UK population.
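To make the coefficients in Table 1 concrete, the following minimal sketch plugs them into the fitted post-measure equation and prints the predicted belief score for a respondent in each group. The coefficient values are copied from Table 1; the function name, the dictionary, and the example pre-measure score of 2 are illustrative assumptions, not part of the original analysis.

```python
# Coefficients copied from the post-measure column of Table 1.
# The control group is the reference category, so it adds no treatment term.
POST_MODEL = {
    "intercept": 1.07,
    "pre_measure": 0.58,
    "independent": 0.19,
    "guardian": 0.28,
    "telegraph": 0.30,
}


def predicted_post_belief(pre_measure, group="control"):
    """Predicted post-treatment score (higher = more correct) for one respondent.

    `pre_measure` is the respondent's pre-treatment score on the same scale;
    `group` is "control", "independent", "guardian", or "telegraph".
    """
    effect = 0.0 if group == "control" else POST_MODEL[group]
    return (
        POST_MODEL["intercept"]
        + POST_MODEL["pre_measure"] * pre_measure
        + effect
    )


if __name__ == "__main__":
    # Hypothetical respondent who starts at 2 on the five-point scale.
    for group in ("control", "independent", "guardian", "telegraph"):
        print(group, round(predicted_post_belief(2, group), 2))
```

Under these assumptions, the gap between any treatment group and the control group equals that group's treatment coefficient, whatever the starting score, because the model contains no interaction terms.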

Finding 2: Consistent effects across different predispositions.

Significant interaction effects between treatment and predispositions would indicate that the fact check works differently among respondents with different characteristics (see regression Table A1, “Pre-exposure and predisposition models,” in the Appendix). Among predispositions, we find no evidence that those who initially believed the incorrect claim, even those who strongly believed it, would respond differently to the fact check (see terms Strongly believes x Fact, Guardian and Telegraph in Table A1). This is the case in all treatment groups, where the interaction effects have negligible magnitude. We found that respondents who voted for the UK to leave the European Union in the Brexit referendum (a strong predictor of initial believability of the claim) were more likely to resist the Guardian correction, but not the correction when it was attributed to an independent source or to the Daily Telegraph (see term Leave x Guardian in Table A1). (In 2016, the majority of articles published by The Guardian were pro Remain, and the majority of Telegraph articles were pro Leave; Levy et al., 2016, p. 16.) Conservative vote intention has no moderating impact on the treatment effects (see terms Conservative x Fact, Guardian and Telegraph in Table A1 in the Appendix).

Finding 3: Familiarity with false claim does not render corrections ineffective.

Those who recalled hearing about the false claim frequently were also more likely to believe it in the pre-measure, r(1839) = -0.35, p < 0.01. However, we find no evidence that the treatment effect itself would change depending on frequency of prior exposure, as shown in Table A1 in the Appendix. The magnitudes of the terms Familiarity x Fact, Guardian and Telegraph are about one-fifth of the original treatment effects in each treatment group and are thus unlikely to reverse them, although we note that the direction of the interaction is consistent with the hypothesis.
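As a quick check on the reported correlation, a Pearson r can be converted to a t statistic with the standard formula t = r * sqrt(df / (1 - r^2)). The sketch below applies it to the values reported above (r = -0.35, df = 1839); the function name is hypothetical, and 2.576 is used as the large-sample two-tailed critical value for p < 0.01.

```python
import math


def t_from_r(r, df):
    """Convert a Pearson correlation to its t statistic with `df` degrees of freedom."""
    return r * math.sqrt(df / (1.0 - r * r))


# Values reported in the text: r(1839) = -0.35.
t_stat = t_from_r(-0.35, 1839)

# Large-sample two-tailed critical value for p < 0.01 (normal approximation).
CRITICAL_T_01 = 2.576

if __name__ == "__main__":
    print(round(t_stat, 1))
    print(abs(t_stat) > CRITICAL_T_01)
```

The resulting statistic is far beyond the critical value, which is in line with the reported p < 0.01.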

Methods

We conducted a survey experiment in the context of the 2017 UK general election, in which the treatment was a fact check about the relationship between financial pressure on the NHS and levels of immigration, with prior exposure self-assessed by survey respondents before treatment assignment. Our sample demographics were representative of UK residents aged 18 and above in terms of age, gender, education, and geography (UK regions). The surveys were administered online by ICM Unlimited. Respondents were interviewed from May 23 until election day, June 8, and re-interviewed after the election from June 9 to June 13. (Although the first wave ran until election day, 90 per cent of Wave 1 surveys were completed by May 28. Time of joining the panel does not influence the results we report.) Attrition was planned by the survey provider: of the 2,523 who took the wave 1 survey, 1,841 were re-interviewed after receiving the treatment. For consistency, these 1,841 respondents are our final N in all subsequent analysis. (We analyzed the factors relating to attrition and found age and social class to predict the probability of dropping out between treatment and follow-up. Neither treatment assignment nor the dependent variable was a significant predictor. To further test for informative attrition, we followed the recommendation in Lechner, Rodriguez-Planas, and Fernández Kranz (2016) and estimated the expected value of our outcome variable with a Difference-in-Difference model (DV pooled across repeated measures) and a simpler OLS without pooling. The authors show that diverging results across the two approaches indicate attrition bias. We found negligible differences: 0.17 and 0.18, respectively.) The surveys providing the context of our study are part of a broader election study.

Dependent variable

Respondents were asked their beliefs about the following statement: “The NHS is under unprecedented pressure due to an influx of EU migrants that has forced doctors to take on 1.5 million extra patients in three years.” We measure strength of belief on a five-point ordinal scale ranging from strongly believe to strongly disbelieve at the three different points for each respondent: pre-treatment, immediately post-treatment (or simply at the end of the survey for the control group), and a week after the treatment.

For better interpretation in the context of correcting misinformation, this ordinal scale is reversed when estimating Average Treatment Effects (Figure 2 and regression tables) so that higher values indicate being correct about the claim (see Appendix for further details about the dependent variable).
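Reverse-coding a bounded ordinal item is a one-line transformation. The sketch below is illustrative only: it assumes the raw item was stored as integers from 1 to 5 with higher values meaning stronger belief in the claim (the text does not state the raw coding), and the function name is hypothetical.

```python
def reverse_code(response, scale_min=1, scale_max=5):
    """Flip a response on a bounded ordinal scale.

    With the default 1-5 scale, 1 becomes 5, 2 becomes 4, and 3 stays 3.
    Assumption: raw responses are integers within [scale_min, scale_max].
    """
    if not scale_min <= response <= scale_max:
        raise ValueError(f"response {response} is outside {scale_min}-{scale_max}")
    return scale_max + scale_min - response


if __name__ == "__main__":
    raw_responses = [1, 2, 3, 4, 5]  # hypothetical raw answers
    print([reverse_code(r) for r in raw_responses])
```

After this transformation, higher scores mean the respondent is closer to the correct position (disbelieving the false claim), which matches the direction of the coefficients in the regression tables.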

Treatment

At the end of the wave 1 survey, respondents were randomly assigned to one of four groups: a control group that was simply asked again about the believability of the statement about the NHS, or one of three treatments involving exposure to corrective information about the NHS statement. The corrective was an edited version of a real fact check from fullfact.org capturing the complexity of the original political narrative (and was therefore not pre-tested; see https://fullfact.org/europe/eu-immigration-and-pressure-nhs). The key message of the corrective is that the estimated additional cost of EU immigration to the NHS, £160 million, although “a significant figure, […] is small compared to the additional costs caused by other pressures,” namely: new technologies and improved standards of care cost ten times as much, and inflation and rising wages eighteen times as much (Full Fact, 2016). See Appendix Figure A1 for further details. We did not implement manipulation checks.

The sources mentioned in the treatment were The Guardian, a reliably left-of-centre source, and The Daily Telegraph, a reliably right-of-centre source, whose coverage of politics reflects their partisan leanings (Barnes & Hicks, 2018), including in the 2017 election (Cowley & Kavanagh, 2018). We chose broadsheets as sources rather than midmarket outlets or tabloids because they are more credible venues for fact checks. For the independent source treatment, we referred to a “fact-checking organization” rather than a specific independent organization such as the BBC or Full Fact because a putatively independent organization such as the BBC may still be seen as partisan by some. (For a similar experimental design in the United States, referring to “a non-partisan fact-checking website,” see Swire et al., 2017. Full Fact describes itself as independent and non-partisan.)

Pre-exposure and predispositions

Pre-exposure. Prior to taking the first dependent measure (belief in claim) in wave 1, we asked all participants whether and how often they heard about the claim linking NHS to the rising levels of immigration: “During the election campaign so far, various statements have been made by politicians and in the media about issues, policies and leaders. How often have you heard the following statement…” with response options measured on an ordinal scale of Never, Not very often, Sometimes (the modal category), and Very often.

Initial belief. In some models we included a dichotomous variable for initial belief in the false statement, tapping subgroup difference in predispositions.

Political orientation. We asked whether respondents voted to leave, remain, or did not vote in the 2016 referendum. In addition, we measured party-political orientation by asking vote intention in the 2017 general election.

Analytical strategy

For simplicity of display, we present OLS estimates with the dependent variable being either the scale-reversed (see above) post-treatment belief for short-term effects, or the follow-up measure of belief to gauge lasting effects. The independent variables are pre-treatment belief and treatment group in all models.
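The specification described above can be written compactly as follows. This is a sketch inferred from the description here and from Table 1; the symbols and the treatment of the control group as the omitted reference category are notational assumptions rather than the authors' own notation.

```latex
\begin{align}
Y_i^{\text{post}} &= \beta_0 + \beta_1 Y_i^{\text{pre}}
  + \beta_2\,\text{Independent}_i + \beta_3\,\text{Guardian}_i
  + \beta_4\,\text{Telegraph}_i + \varepsilon_i \\
Y_i^{\text{follow-up}} &= \gamma_0 + \gamma_1 Y_i^{\text{pre}}
  + \gamma_2\,\text{Independent}_i + \gamma_3\,\text{Guardian}_i
  + \gamma_4\,\text{Telegraph}_i + \eta_i
\end{align}
```

Here $Y_i$ is respondent $i$'s scale-reversed belief score, and the three treatment indicators equal 1 for respondents assigned to that source and 0 otherwise, so $\beta_2$ to $\beta_4$ (and $\gamma_2$ to $\gamma_4$) are differences from the control group conditional on pre-treatment belief.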

Our experiment is embedded in a larger, representative study of voting behaviour in the UK’s 2017 general election. This has implications for sample size and power calculations. While the standard practice in experiments is to calculate the minimum sample size needed to detect a meaningful effect size at a given level of power, we had to accept the sample size that had been set to meet the standards of multi-wave opinion polling. That sample gave us ample power to detect meaningful effects and interactions.

Bibliography

Barnes, L., & Hicks, T. (2018). Making austerity popular: The media and mass attitudes toward fiscal policy. American Journal of Political Science, 62(2), 340–354. https://doi.org/10.1111/ajps.12346

Berinsky, A. J. (2017). Rumors and health care reform: Experiments in political misinformation. British Journal of Political Science, 47(2), 241–262. https://doi.org/10.1017/S0007123415000186

Cappella, J. N., Maloney, E., Ophir, Y., & Brennan, E. (2015). Interventions to correct misinformation about tobacco products. Tobacco Regulatory Science, 1(2), 186–197. https://doi.org/10.18001/TRS.1.2.8

Clayton, K., Blair, S., Busam, J. A., Forstner, S., Glance, J., Green, G., Kawata, A., Kovvuri, A., Martin, J., Morgan, E., Sandhu, M., Sang, R., Scholz-Bright, R., Welch, A. T., Wolff, A. G., Zhou, A., & Nyhan, B. (2020). Real solutions for fake news? Measuring the effectiveness of general warnings and fact-check tags in reducing belief in false stories on social media. Political Behavior, 42, 1073–1095. https://doi.org/10.1007/s11109-019-09533-0

Cowley, P., & Kavanagh, D. (2016). The British general election of 2015. Springer. https://doi.org/10.1057/9781137366115

Edwards, K., & Smith, E. E. (1996). A disconfirmation bias in the evaluation of arguments. Journal of Personality and Social Psychology, 71(1), 5–24. https://doi.org/10.1037/0022-3514.71.1.5

Full Fact. (2016, 11 July). EU immigration and pressure on the NHS. https://fullfact.org/europe/eu-immigration-and-pressure-nhs/

Graves, L., & Amazeen, M. A. (2019). Fact-checking as idea and practice in journalism. Oxford Research Encyclopedia of Communication. https://doi.org/10.1093/acrefore/9780190228613.013.808

Graves, L., & Cherubini, F. (2016). The rise of fact-checking sites in Europe. Reuters Institute for the Study of Journalism. https://ora.ox.ac.uk/objects/uuid:d55ef650-e351-4526-b942-6c9e00129ad7/files/m5cd2184512f8b9670fce5184730d31a0

Green, M. C., & Brock, T. C. (2000). The role of transportation in the persuasiveness of public narratives. Journal of Personality and Social Psychology, 79(5), 701–721. https://doi.org/10.1037/0022-3514.79.5.701

Holman, M. R., & Lay, J. C. (2019). They see dead people (voting): Correcting misperceptions about voter fraud in the 2016 U.S. presidential election. Journal of Political Marketing, 18(1–2), 31–68. https://doi.org/10.1080/15377857.2018.1478656

Jerit, J., & Zhao, Y. (2020). Political misinformation. Annual Review of Political Science, 23(1), 77–94. https://doi.org/10.1146/annurev-polisci-050718-032814

Lechner, M., Rodriguez-Planas, N., & Fernández Kranz, D. (2016). Difference-in-difference estimation by FE and OLS when there is panel non-response. Journal of Applied Statistics, 43(11), 2044–2052. https://doi.org/10.1080/02664763.2015.1126240

Levy, D., Aslan, B., & Bironzo, D. (2016). UK press coverage of the EU referendum. Reuters Institute for the Study of Journalism. https://reutersinstitute.politics.ox.ac.uk/sites/default/files/2018-11/UK_Press_Coverage_of_the_%20EU_Referendum.pdf

Lewandowsky, S., Ecker, U. K., & Cook, J. (2017). Beyond misinformation: Understanding and coping with the “post-truth” era. Journal of Applied Research in Memory and Cognition, 6(4), 353–369. https://doi.org/10.1016/j.jarmac.2017.07.008

Lewandowsky, S., Ecker, U. K., Seifert, C. M., Schwarz, N., & Cook, J. (2012). Misinformation and its correction: Continued influence and successful debiasing. Psychological Science in the Public Interest, 13(3), 106–131. https://doi.org/10.1177/1529100612451018

Maertens, R., Roozenbeek, J., Basol, M., & van der Linden, S. (2021). Long-term effectiveness of inoculation against misinformation: Three longitudinal experiments. Journal of Experimental Psychology: Applied, 27(1), 1–16. https://doi.org/10.1037/xap0000315

Munro, G. D., & Ditto, P. H. (1997). Biased assimilation, attitude polarization, and affect in reactions to stereotype-relevant scientific information. Personality and Social Psychology Bulletin, 23(6), 636–653. https://doi.org/10.1177/0146167297236007

Nyhan, B., & Reifler, J. (2010). When corrections fail: The persistence of political misperceptions. Political Behavior, 32(2), 303–330. https://doi.org/10.1007/s11109-010-9112-2

Prike, T., Reason, R., Ecker, U. K., Swire-Thompson, B., & Lewandowsky, S. (2023). Would I lie to you? Party affiliation is more important than Brexit in processing political misinformation. Royal Society Open Science, 10(2). https://doi.org/10.1098/rsos.220508

Sangalang, A., Ophir, Y., & Cappella, J. N. (2019). The potential for narrative correctives to combat misinformation. Journal of Communication, 69(3), 298–319. https://doi.org/10.1093/joc/jqz014

Slater, M. D., & Rouner, D. (2002). Entertainment-education and elaboration likelihood: Understanding the processing of narrative persuasion. Communication Theory, 12(2), 173–191. https://doi.org/10.1111/j.1468-2885.2002.tb00265.x

Swire, B., Berinsky, A. J., Lewandowsky, S., & Ecker, U. K. (2017). Processing political misinformation: Comprehending the Trump phenomenon. Royal Society Open Science, 4(3), 160802. https://doi.org/10.1098/rsos.160802

Swire-Thompson, B., Dobbs, M., Thomas, A., & DeGutis, J. (2023). Memory failure predicts belief regression after the correction of misinformation. Cognition, 230. https://doi.org/10.1016/j.cognition.2022.105276

Vraga, E. K., Bode, L., & Tully, M. (2022). Creating news literacy messages to enhance expert corrections of misinformation on Twitter. Communication Research, 49(2), 245–267. https://doi.org/10.1177/0093650219898094

Wittenberg, C., & Berinsky, A. J. (2020). Misinformation and its correction. In N. Persily & J. A. Tucker (Eds.), Social media and democracy: The state of the field, prospects for reform (pp. 163–198). Cambridge University Press. https://doi.org/10.1017/9781108890960.009

Funding

This work was supported by the UK Economic and Social Research Council, grant numbers ES/N012283/1 and ES/R005087/1 (an extension of ES/M010775/1).

Competing Interests

The authors declare no competing interests.

Ethics

This research and data collection has received ethical approval from the University of Exeter College of Social Sciences and International Studies Ethics Committee, reference no. 201718-185.

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

All materials needed to replicate this study are available via the Harvard Dataverse: https://doi.org/10.7910/DVN/NP5AQZ