Peer Reviewed

A survey of expert views on misinformation: Definitions, determinants, solutions, and future of the field

We surveyed 150 academic experts on misinformation and identified areas of expert consensus. Experts defined misinformation as false and misleading information, though views diverged on the importance of intentionality and what exactly constitutes misinformation. The most popular reason why people believe and share misinformation was partisanship, while lack of education was one of the least popular reasons. Experts were optimistic about the effectiveness of interventions against misinformation and supported system-level actions against misinformation, such as platform design changes and algorithmic changes. The most agreed-upon future direction for the field of misinformation was to collect more data outside of the United States.

Research Questions

- How do experts define misinformation?

- What do experts think about current debates surrounding misinformation, social media, and echo chambers?

- According to experts, why do people believe and share misinformation?

- What do experts think about the effectiveness of interventions against misinformation?

- How do experts think the study of misinformation could be improved?

Essay Summary

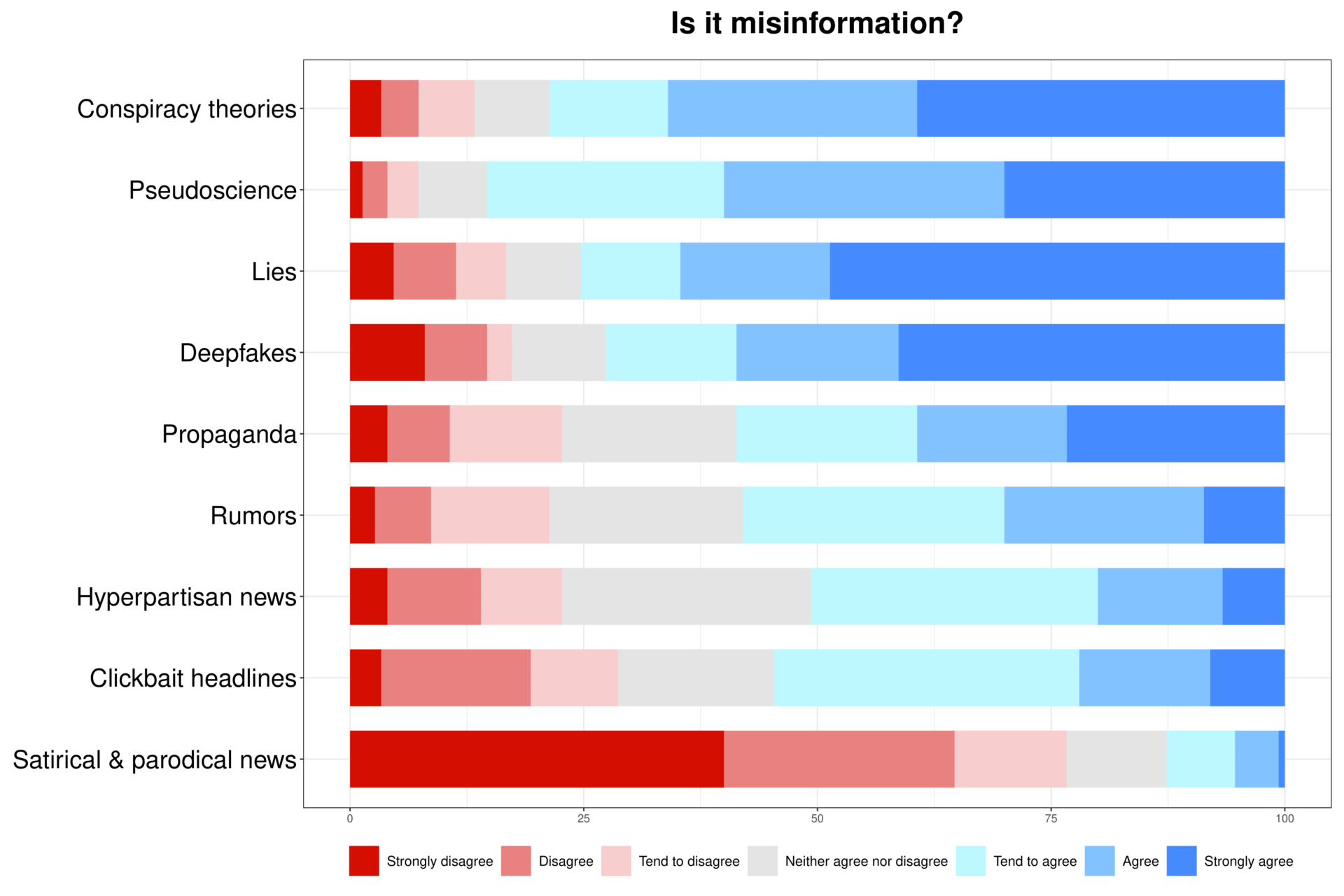

- The experts we surveyed defined misinformation as false and misleading information. They agreed that pseudoscience and conspiracy theories are misinformation, while satirical news is not. Experts across disciplines and methods disagreed on the importance of intentionality and whether propaganda, clickbait headlines, and hyperpartisan news are misinformation.

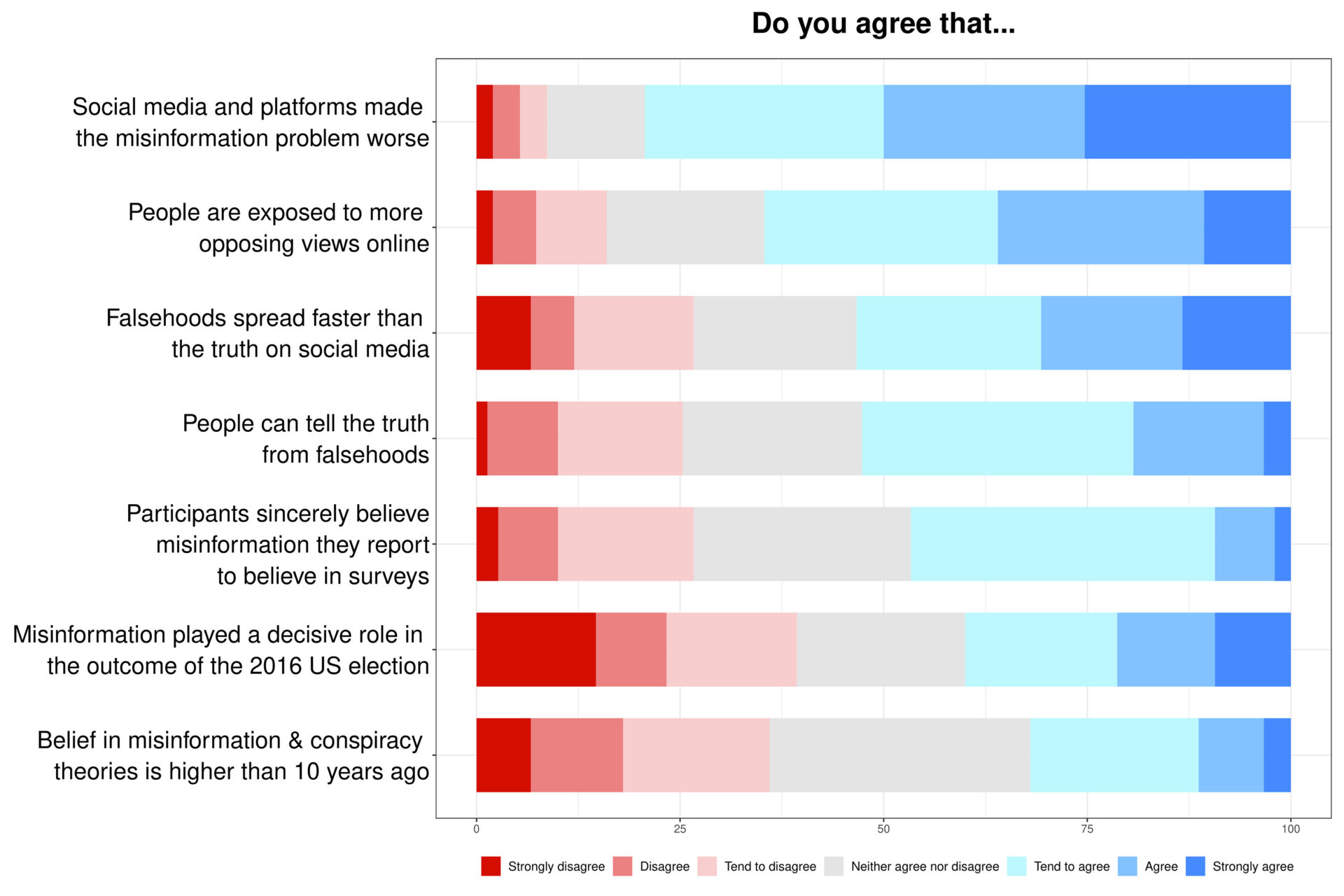

- The experts agreed that social media platforms worsened the misinformation problem and that people are exposed to more opposing viewpoints online than offline. Political scientists were skeptical of the claim that misinformation determined the outcome of the 2016 U.S. presidential election, whereas psychologists were not.

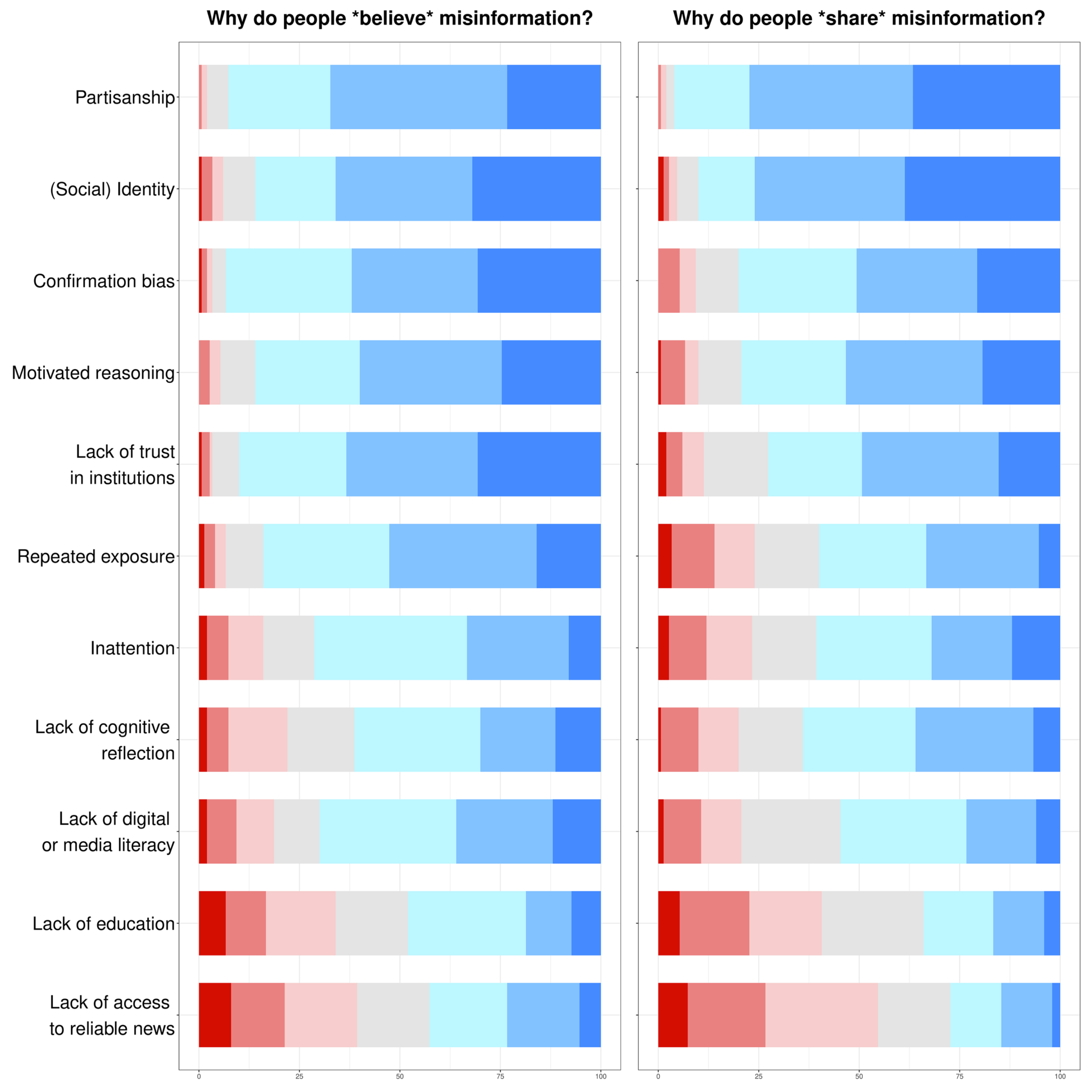

- The most popular explanations as to why people believe and share misinformation were partisanship, identity, confirmation bias, motivated reasoning, and lack of trust in institutions.

- The experts we surveyed agreed that current interventions against misinformation, such as media literacy and fact-checking, would be effective if widely adopted. Experts were in favor of platform design changes, algorithmic changes, content moderation, de-platforming, and stronger regulations.

- These experts also agreed that in the future, it will be important to collect more data outside of the United States, do more interdisciplinary work, examine subtler forms of misinformation, study platforms other than Twitter and Facebook, and develop better theories and interventions.

Implications

Fake news, misinformation, and disinformation have become some of the most studied phenomena in the social sciences (Freelon & Wells, 2020). Despite the widespread use of these concepts, scholars often disagree on their meaning. While some use misinformation as an umbrella term to describe falsehoods and deception broadly (Van Bavel et al., 2021), others use it specifically to capture unintentional forms of false or deceptive content (Wardle & Derakhshan, 2017).

In line with these discrepancies, our survey of 150 experts on misinformation from across academia revealed key definitional differences across disciplines and methods used to study misinformation. Experts relying on qualitative methods were more likely to include the intentionality of the sharer in the definition of misinformation than experts relying on quantitative methods. Moreover, psychologists had broader definitions of misinformation (including, for instance, propaganda and hyperpartisan headlines) compared to political scientists. Disagreement on the definitions of key concepts is not unique to misinformation research (e.g., Pearson, 2006), and such disagreements are not necessarily a problem, as they allow for different analytical perspectives and insights. Yet, if definitions of misinformation are too vague or not consensual enough, policy responses to misinformation can easily be instrumentalized by policymakers to strategically reduce freedom of speech, such as by silencing political opponents—a development already criticized by human rights groups (Human Rights Watch, 2021; Reporters Without Borders, 2017). From a scientific perspective, semantic disagreements across disciplines may sow confusion in the field by artificially creating contradictory findings. For instance, some disagreements about the effect and prevalence of misinformation may be intensified by differences in conceptualizations (Rogers, 2020). This should encourage researchers to better define misinformation and be explicit about it to avoid interdisciplinary misunderstandings.

In this article, we do not seek to provide an authoritative definition of misinformation, but rather to document how experts across disciplines define the concept—which can ultimately help practitioners and researchers to operationalize it. Experts agreed that misinformation not only comprises falsehoods, but also misleading information (Søe, 2021). This stands in contrast with most experimental studies on misinformation, which focus primarily on news determined to be false by fact-checkers (Pennycook & Rand, 2022). Researchers may thus want to broaden their nets to include more misleading information and subtler forms of misinformation, such as biased or partisan news. Most experts in our survey also agreed that pseudoscience and conspiracy theories represent forms of misinformation, while satirical and parodical news do not. These findings could help future studies make decisions about what to include in the misinformation category, as some studies have in the past included satirical and parodical news websites (Cordonier & Brest, 2021).

While alarmist narratives about “fake news,” “echo chambers,” and the “infodemic” abound in public debate (Altay et al., 2023; Simon & Camargo, 2021), experts had sobering views. First, experts agreed that people are more exposed to opposing viewpoints online than offline. This should motivate scientists to study the effect of exposure to opposing viewpoints (Bail et al., 2018). Second, despite the popularity of the idea that misinformation and belief in conspiracy theories have increased in the past ten years (Uscinski et al., 2022), experts were divided on this question. This is notable considering the popularity of the idea that we live in a new “post-truth era” (Altay et al., 2023), and it highlights the need for more longitudinal studies (Uscinski et al., 2022). Third, fewer than half of the experts surveyed agreed that participants sincerely believe the misinformation they report to believe in surveys. This should motivate journalists to take alarmist survey results with a grain of salt. Researchers should continue to improve survey instruments (Graham, 2023), but also do more conceptual work on defining “(mis)beliefs” and the circumstances in which they are cause for concern (Mercier & Altay, 2022). Fourth, there is no clear consensus on the impact of misinformation on the outcome of the 2016 U.S. election, with 54% of psychologists agreeing that misinformation played a decisive role, while 73% of political scientists disagreed. For journalists and policymakers, this stresses the importance of seeking advice from several researchers with different backgrounds.

Scholars from a variety of fields have investigated the reasons why people believe and share misinformation (Ecker et al., 2022; Righetti, 2021). In this literature, some have focused on motivated reasoning and political partisanship (Osmundsen et al., 2021; Van Bavel et al., 2021), while others have focused on inattention, lack of cognitive reflection, or repeated exposure (Fazio et al., 2019; Pennycook & Rand, 2022). However, we observed little disagreement across methods and disciplines on the key determinants of misinformation belief and sharing. The list of determinants detailed in Figure 2 could be used by scientists, platforms, and companies to design more effective interventions against misinformation. For instance, few interventions against misinformation target partisanship, the most agreed-upon determinant of misinformation belief and sharing in our survey. Similarly, while many existing interventions aim at improving media literacy, illiteracy was only the eighth most agreed-upon determinant of belief in misinformation and the ninth most agreed-upon determinant of misinformation sharing. Finally, contrary to the idea that people believe misinformation because they are not educated enough, lack of education was one of the least popular determinants. The discrepancies between current misinformation response strategies and expert consensus highlight the importance of this interdisciplinary survey.

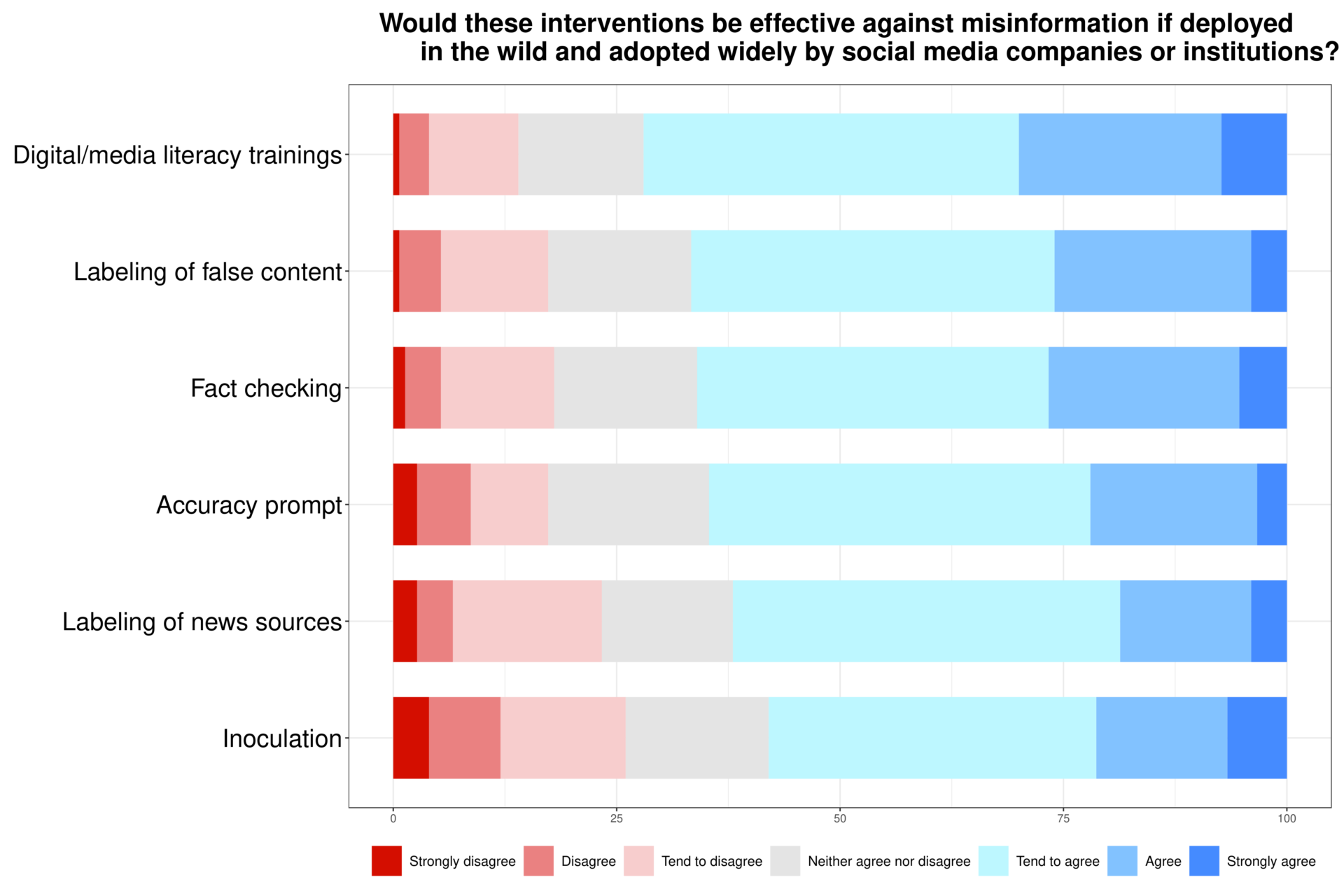

In the literature on misinformation, there are disagreements on which interventions are most effective against misinformation (Guay et al., 2022; Roozenbeek et al., 2021) and on the extent to which interventions against misinformation are effective (Acerbi et al., 2022; Altay, 2022; Modirrousta-Galian & Higham, 2022). Yet, in our survey, we found that experts generally agreed that most existing interventions against misinformation would be effective if deployed in the wild and adopted widely by social media companies. Despite this apparent optimism, experts mostly selected the “tend to agree” responses, which may reflect their awareness that, in isolation, individual interventions have limited effects, and that it is only in combination that they can have a meaningful impact (Bak-Coleman et al., 2022).

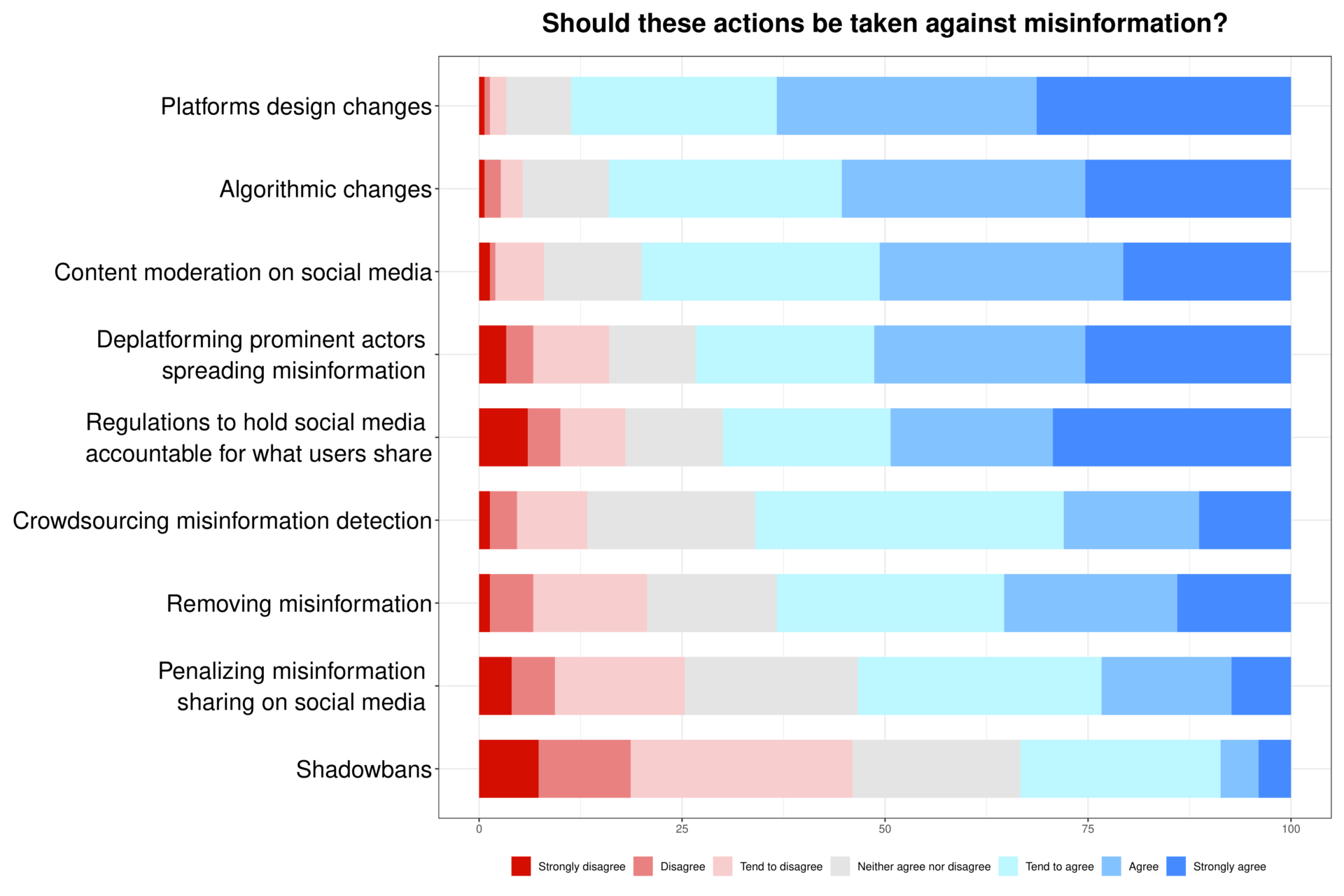

Experts agreed that social media platforms have worsened the problem of misinformation and were in favor of various actions that platforms could take against misinformation, such as platform design changes, algorithmic changes, content moderation, de-platforming prominent actors that spread misinformation, and crowdsourcing misinformation detection or removing it. These findings could help policymakers and social media platforms guide their efforts against misinformation—even if they should also factor in laypeople’s opinions about it and be vigilant about protecting democratic rights in the process (Kozyreva et al., 2023).

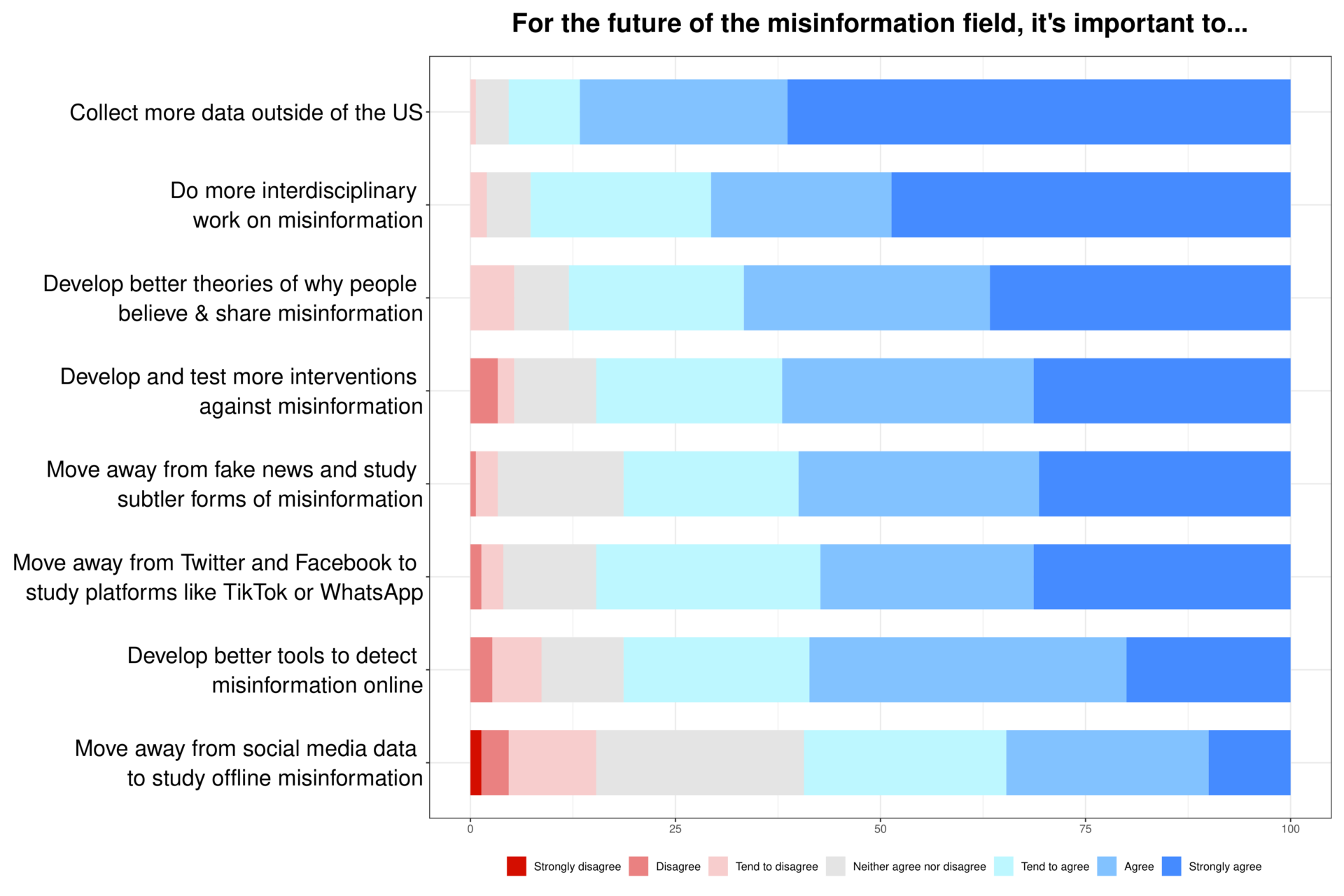

Finally, we offer concrete steps that the field of misinformation could take in the future and that experts agree are important. These include collecting more data outside of the United States (in particular by local academics), doing more interdisciplinary work, studying subtler forms of misinformation, studying platforms other than Twitter and Facebook, and developing better theories and interventions. To be successful, these efforts will need to be backed up by structural changes, such as infrastructure and funding opportunities to collect data in underrepresented countries, access to more diverse social media data, less siloed disciplinary boundaries in universities, and stronger incentives to follow these recommendations at the publication and funding level. Doing so would address not only the current limitations of misinformation research, but also the wider structural inequalities in social scientific research, where countries such as the United States and platforms such as Twitter are generally overrepresented considering their size (Matamoros-Fernández & Farkas, 2021).

Our findings should be interpreted with a few caveats. First, because most experts in the survey are from the Global North, our findings may not apply to countries in the Global South. Second, while it may be tempting to think that differences in how researchers define misinformation may explain differences in opinions across disciplines, our findings do not formally support this and are inconclusive regarding the existence of such mediation (see Appendix E). More broadly, we caution against drawing conclusions about the origins of differences across disciplines and methods. For instance, because we did not impose definitions on experts, some differences may be purely semantic (e.g., hyperpartisan news may be defined differently by psychologists and political scientists).

In conclusion, the differences of opinions across disciplines and methods, combined with the agreed-upon need to do more interdisciplinary work on misinformation, should encourage more discussions across disciplines and methods. In particular, collaborations between qualitative and quantitative researchers and between political scientists and psychologists would be fruitful to solve some disagreements. Our findings offer high-level expert consensus on timely questions regarding the definitions of misinformation, determinants of misinformation belief and sharing, individual and system-level solutions against misinformation, and how to improve the study of misinformation. Ultimately, these findings can help policymakers and platforms to tackle misinformation more efficiently, journalists to have a more representative view of experts’ views on misinformation, and scientists to move the field forward.

Findings

Finding 1: Defining misinformation.

The most popular definition of misinformation was “False and misleading information,” followed by “False and misleading information spread unintentionally.” Other definitions, including only false information or only misleading information, were much less popular. Definitions of misinformation varied across methods, with qualitative experts being more likely to include intentionality in the definition of misinformation than quantitative researchers (see Table 1).

| Definitions of misinformation | All experts | Qualitative experts | Quantitative experts |
| --- | --- | --- | --- |
| False information | 11% | 0% | 15% |
| Misleading information | 7% | 10% | 4% |
| False and misleading information | 43% | 33% | 50% |
| False information spread unintentionally | 5% | 6% | 4% |
| Misleading information spread unintentionally | 5% | 10% | 1% |
| False and misleading information spread unintentionally | 30% | 41% | 25% |
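The pattern in Table 1 can be checked with a few lines of code. The sketch below is purely illustrative: the percentages are transcribed from the table as reported (rounded), and the names `TABLE_1`, `most_popular`, and `intentionality_share` are hypothetical helpers, not part of the study's replication materials.

```python
# Percentages transcribed from Table 1 (rounded values as reported).
# Column order: all experts, qualitative experts, quantitative experts.
TABLE_1 = {
    "False information": (11, 0, 15),
    "Misleading information": (7, 10, 4),
    "False and misleading information": (43, 33, 50),
    "False information spread unintentionally": (5, 6, 4),
    "Misleading information spread unintentionally": (5, 10, 1),
    "False and misleading information spread unintentionally": (30, 41, 25),
}
GROUPS = ("all", "qualitative", "quantitative")


def most_popular(group: str) -> str:
    """Return the definition with the highest share in the given group."""
    col = GROUPS.index(group)
    return max(TABLE_1, key=lambda definition: TABLE_1[definition][col])


def intentionality_share(group: str) -> int:
    """Sum the shares of all definitions that include 'spread unintentionally'."""
    col = GROUPS.index(group)
    return sum(
        shares[col]
        for definition, shares in TABLE_1.items()
        if definition.endswith("spread unintentionally")
    )


for group in GROUPS:
    print(f"{group}: top = {most_popular(group)!r}, "
          f"intentionality = {intentionality_share(group)}%")
```

Under this reading of the table, definitions that include intentionality account for 57% of qualitative experts' choices and 30% of quantitative experts' choices. Because the inputs are rounded, these sums are approximate.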

Experts across disciplines generally agreed that pseudoscience (85%), conspiracy theories (79%), lies (75%), and deepfakes (73%) constitute forms of misinformation, while satirical and parodical news does not (77% disagreed). There was less agreement and much more uncertainty regarding propaganda, rumors, hyperpartisan news, and clickbait headlines. Note that many experts also expressed uncertainty by selecting the option “Neither agree nor disagree.”

We observed some variability across disciplines and methods. Qualitative researchers were less likely to agree that lies and deepfakes (59%) are misinformation compared to quantitative researchers (84%). Propaganda was more likely to be considered misinformation by psychologists (72%) than by social scientists (51%) or political scientists (36%). Hyperpartisan headlines were more likely to be considered misinformation by psychologists (64%) than by social scientists (49%) or political scientists (23%). Clickbait headlines were more likely to be considered misinformation by computational scientists (65%), social scientists (57%), and psychologists (54%) than by political scientists (22%).

Finding 2: Why do people believe and share misinformation?

We documented broad agreement among experts on the reasons why people believe and share misinformation. The most agreed-upon explanation for why people believe and share misinformation is partisanship. Figure 2 shows that 96% of experts agreed that partisanship is a key reason why people share misinformation, and 93% of experts also agreed that partisanship is key to explaining why people believe in misinformation.

Identity, confirmation bias, motivated reasoning, and lack of trust in institutions received very high levels of agreement (between 73% and 93%). Repeated exposure, inattention, lack of cognitive reflection, and lack of digital/media literacy also received relatively high levels of agreement (between 54% and 84%).

Two determinants were particularly unpopular. Less than 50% of experts agreed that lack of education and lack of access to reliable news were reasons why people believe and share misinformation. This is particularly noteworthy considering prior work highlighting the importance of education (van Prooijen, 2017) and an increase in paywalls limiting access to reliable news (Olsen et al., 2020).

Finding 3: Opinions about misinformation and digital media.

Next, we documented misinformation experts’ opinions about current debates surrounding misinformation, social media, and echo chambers. The most agreed-upon opinion was that social media and platforms made the misinformation problem worse (79% agreement). Experts also agreed that people are exposed to more opposing viewpoints online than offline (65%). There was less agreement regarding the notion that “falsehoods generally spread faster than the truth on social media” (53%), “people can tell the truth from falsehoods” (53%), and “participants sincerely believe the misinformation they report to believe in surveys” (47%).

The least agreed-with statement was that “belief in misinformation & conspiracy theories is higher than ten years ago”—only 32% of experts agreed and 36% disagreed. It is also the statement about which experts were the most uncertain, with 32% of experts selecting the “neither agree nor disagree” option.

The most polarizing statement was that “misinformation played a decisive role in the outcome of the 2016 U.S. election,” with 40% of experts agreeing and 39% disagreeing. This polarization is mostly due to differences in opinion between psychologists and political scientists. Political scientists were very skeptical of this claim, with 73% in disagreement and only 14% in agreement. In contrast, 54% of psychologists agreed that misinformation played a decisive role, while only 26% disagreed.
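One simple way to see why this statement is polarizing is to compare agreement and disagreement within each group. The sketch below uses only the percentages reported above. The "net agreement" measure (agree minus disagree) is an illustrative summary we introduce here, not a statistic computed in the study, and the names `RESPONSES` and `net_agreement` are hypothetical.

```python
# (agree %, disagree %) for the statement that misinformation played a
# decisive role in the outcome of the 2016 U.S. election, as reported above.
RESPONSES = {
    "all experts": (40, 39),
    "political scientists": (14, 73),
    "psychologists": (54, 26),
}


def net_agreement(agree: int, disagree: int) -> int:
    """Illustrative summary: percentage agreeing minus percentage disagreeing."""
    return agree - disagree


for group, (agree, disagree) in RESPONSES.items():
    print(f"{group}: net agreement = {net_agreement(agree, disagree):+d} points")
```

The near-zero net figure for the full sample masks two groups leaning in opposite directions, which is the sense in which the overall split reflects disciplinary differences.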

Finding 4: What are the solutions to the problem of misinformation?

To help researchers and practitioners decide what misinformation solutions to study and develop, we explored what individual-level interventions and system-level actions against misinformation experts favored.

Considering individual-level interventions, experts generally agreed that all the interventions listed in Figure 4 would be effective against misinformation if deployed in the wild and widely adopted by social media companies or institutions. The most popular intervention was digital/media literacy training, with 72% approval, while the least popular was inoculation, with 58% approval.

Regarding system-level actions against misinformation, the most widely agreed-upon solution was platform design changes, with 89% approval, followed by algorithmic changes (84%) and content moderation on social media (80%). Experts were also generally in favor of de-platforming prominent actors sharing misinformation (73%), stronger regulations to hold platforms accountable (70%), crowdsourcing the detection of misinformation (66%), removing misinformation (63%), and even, to some extent, penalizing misinformation sharing on social media (53%). Note that the word penalization can be broadly understood as any form of punishment (e.g., reducing the visibility of misinformation spreaders or sending them a warning) and is not restricted to legal penalization. By far, the least popular action against misinformation was shadow banning, with more experts disagreeing that this type of action should be taken (46%) than agreeing (33%).

Finding 5: What future for the field of misinformation?

The most agreed-upon future direction for the field of misinformation was to collect more data outside of the United States; 95% of experts agreed with the statement (of which 61% strongly agreed). Experts also agreed on the importance of doing more interdisciplinary work (93%), developing better theories of why people believe and share misinformation (88%), developing and testing more interventions against misinformation (85%), moving away from fake news to study subtler forms of misinformation (81%), moving away from Twitter and Facebook data to study TikTok, WhatsApp, and other platforms (85%), and developing better tools to detect misinformation (81%). These views align with existing calls for further geographical and platform diversity in empirical studies—going beyond the most accessible APIs (e.g., Twitter) and most publicized empirical contexts (e.g., the United States; Matamoros-Fernández & Farkas, 2021).

The least popular future direction was to move away from social media data to study offline misinformation, with 59% of experts agreeing and 25% neither agreeing nor disagreeing. On this point, the opinion of psychologists and computational scientists diverged: 69% of psychologists agreed that we should move away from social media data to study offline misinformation, while only 38% of computational scientists agreed.

Methods

Participants

The experts were contacted between the 6th of December 2022 and the 16th of January 2023. In total, 201 experts responded to the survey. Forty-nine were excluded because they did not finish the survey (i.e., they stopped before the final quality check question). Two additional participants were excluded because they failed the quality check question. Our final sample was 150 experts (51 women, Mage = 39.11, SDage = 9.66). In Appendix A, we report demographic information about the experts. We did not consider gender in the recruitment of participants and do not have access to the gender breakdown of the respondents we contacted; it is thus unclear whether the gender imbalance in the survey is an artifact of our recruitment techniques or not.

Experts on misinformation were recruited to participate in the survey using four overlapping recruitment strategies. First, the email addresses of 171 experts were retrieved from Google Scholar by using the keywords “misinformation” and “fake news” (first twelve pages since 2018). Second, 93 experts were recruited from the program committee of MISDOOM, an international conference on mis/disinformation. For this, we collected their email addresses and emailed them directly. Third, 95 experts were contacted via the De Facto mailing list, a network of French experts on misinformation, and 36 experts were contacted because they attended an ICA pre-conference on the future of mis/disinformation studies. Fourth, 190 experts were contacted via snowball sampling: they were recommended by other experts at the end of the survey. The experts were also allowed to share the survey with their lab or colleagues but were instructed not to share it on social media. As we made clear in the survey, we relied on a broad definition of expertise: “anyone who has done academic work on misinformation.” We could not ensure that all respondents were experts on misinformation. However, because we did not share the survey publicly and only contacted experts on misinformation (i.e., those who wrote academic articles on misinformation, attended a conference on misinformation, or were recommended by misinformation experts), we are confident that the risk of having non-expert respondents is very low.

Design and procedure

After completing a consent form, participants were asked to answer questions divided into eight blocks on (i) the definition of misinformation, (ii) examples of misinformation, (iii) why people believe misinformation, (iv) why people share misinformation, (v) general questions about misinformation and digital media, (vi) individual-level interventions against misinformation, (vii) system-level actions against misinformation, and (viii) the future of the field of misinformation. In Appendix B, we report all the survey questions and response options.

The presentation order of the blocks was fixed. The questions inside the blocks were presented in a randomized order (except for the first block, since the definitions of misinformation were incremental). Participants were asked to report their age, gender, nationality, political orientation, area of expertise, and method used to study misinformation. Participants were also asked whether they had answered the survey in a meaningful way and whether we could use their data for scientific purposes. Finally, participants were asked to recommend other experts on misinformation and could share their thoughts about the survey in a text box.
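The ordering rule described above (fixed block order, randomized questions within each block, first block exempt) can be sketched as follows. This is a minimal illustration and not the survey software's actual logic: the function `presentation_order` and the placeholder item labels are hypothetical, and the real items are those listed in Appendix B.

```python
import random


def presentation_order(questions_by_block, rng):
    """Keep blocks in their given order; shuffle items within every block
    except the first, whose items are presented in a fixed order."""
    ordered = []
    for index, (block, items) in enumerate(questions_by_block.items()):
        items = list(items)  # copy so the caller's lists are not mutated
        if index != 0:
            rng.shuffle(items)
        ordered.append((block, items))
    return ordered


# Placeholder items for three of the eight blocks (labels are hypothetical).
demo_blocks = {
    "definition of misinformation": ["def_1", "def_2", "def_3"],
    "examples of misinformation": ["ex_1", "ex_2", "ex_3"],
    "why people believe misinformation": ["why_1", "why_2", "why_3"],
}

# Seeding the generator makes one participant's order reproducible.
for block, items in presentation_order(demo_blocks, random.Random(2023)):
    print(block, items)
```

Passing a separate `random.Random` instance per participant keeps each ordering independent of the others while still allowing a given order to be regenerated from its seed.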

The questions and response options were determined by a team of interdisciplinary experts on misinformation (cognitive science, sociology, media and communication, psychology, and computer science) and represent the authors’ understanding of the literature on misinformation. The questions and response options are not exhaustive; for instance, there are many more reasons why people believe and share misinformation, and there are many more ways to define misinformation. We focused on what we deemed to be the most relevant given our knowledge of the field. Moreover, one author recently conducted a qualitative survey on misinformation experts and used this knowledge to design the present survey.

In Appendices C and D, we offer a visualization of the results broken down by disciplines and methods. On OSF, readers will find the regression tables of all statistical comparisons between disciplines and methods (for more information about the statistical models, see Appendix E). In the Findings section, we only report statistically significant differences and the most meaningful differences across methods and disciplines (based notably on effect size).

Bibliography

Acerbi, A., Altay, S., & Mercier, H. (2022). Research note: Fighting misinformation or fighting for information? Harvard Kennedy School (HKS) Misinformation Review, 3(1). https://doi.org/10.37016/mr-2020-87

Altay, S. (2022). How effective are interventions against misinformation? PsyArXiv. https://doi.org/10.31234/osf.io/sm3vk

Altay, S., Berriche, M., & Acerbi, A. (2023). Misinformation on misinformation: Conceptual and methodological challenges. Social Media + Society, 9(1). https://doi.org/10.1177/20563051221150412

Bail, C. A., Argyle, L. P., Brown, T. W., Bumpus, J. P., Chen, H., Hunzaker, M. F., Lee, J., Mann, M., Merhout, F., & Volfovsky, A. (2018). Exposure to opposing views on social media can increase political polarization. Proceedings of the National Academy of Sciences, 115(37), 9216–9221. https://doi.org/10.1073/pnas.1804840115

Bak-Coleman, J. B., Kennedy, I., Wack, M., Beers, A., Schafer, J. S., Spiro, E. S., Starbird, K., & West, J. D. (2022). Combining interventions to reduce the spread of viral misinformation. Nature Human Behaviour, 6(10), 1372–1380. https://doi.org/10.1038/s41562-022-01388-6

Cordonier, L., & Brest, A. (2021). How do the French inform themselves on the Internet? Analysis of online information and disinformation behaviors. Fondation Descartes. https://hal.archives-ouvertes.fr/hal-03167734/document

Ecker, U. K. H., Lewandowsky, S., Cook, J., Schmid, P., Fazio, L. K., Brashier, N., Kendeou, P., Vraga, E. K., & Amazeen, M. A. (2022). The psychological drivers of misinformation belief and its resistance to correction. Nature Reviews Psychology, 1(1), 13–29. https://doi.org/10.1038/s44159-021-00006-y

Fazio, L. K., Rand, D. G., & Pennycook, G. (2019). Repetition increases perceived truth equally for plausible and implausible statements. Psychonomic Bulletin & Review, 26(5), 1705–1710. https://doi.org/10.3758/s13423-019-01651-4

Freelon, D., & Wells, C. (2020). Disinformation as political communication. Political Communication, 37(2), 145–156. https://doi.org/10.1080/10584609.2020.1723755

Graham, M. H. (2023). Measuring misperceptions? American Political Science Review, 117(1), 80–102. https://doi.org/10.1017/S0003055422000387

Guay, B., Pennycook, G., & Rand, D. (2022). How to think about whether misinformation interventions work. PsyArXiv. https://doi.org/10.31234/osf.io/gv8qx

Human Rights Watch. (2021). Covid-19 triggers wave of free speech abuse. https://www.hrw.org/news/2021/02/11/covid-19-triggers-wave-free-speech-abuse

Kozyreva, A., Herzog, S. M., Lewandowsky, S., Hertwig, R., Lorenz-Spreen, P., Leiser, M., & Reifler, J. (2023). Resolving content moderation dilemmas between free speech and harmful misinformation. Proceedings of the National Academy of Sciences, 120(7), e2210666120. https://doi.org/10.1073/pnas.2210666120

Matamoros-Fernández, A., & Farkas, J. (2021). Racism, hate speech, and social media: A systematic review and critique. Television & New Media, 22(2), 205–224. https://doi.org/10.1177/1527476420982230

Mercier, H., & Altay, S. (2022). Do cultural misbeliefs cause costly behavior? In J. Musolino, P. Hemmer, & J. Sommer (Eds.), The science of beliefs (pp. 193–208). Cambridge University Press. https://doi.org/10.1017/9781009001021.014

Olsen, R. K., Kammer, A., & Solvoll, M. K. (2020). Paywalls’ impact on local news websites’ traffic and their civic and business implications. Journalism Studies, 21(2), 197–216. https://doi.org/10.1080/1461670X.2019.1633946

Osmundsen, M., Bor, A., Vahlstrup, P. B., Bechmann, A., & Petersen, M. B. (2021). Partisan polarization is the primary psychological motivation behind political fake news sharing on Twitter. American Political Science Review, 115(3), 999–1015. https://doi.org/10.1017/S0003055421000290

Pearson, H. (2006). What is a gene? Nature, 441(7092), 398–402. https://doi.org/10.1038/441398a

Pennycook, G., & Rand, D. G. (2022). Accuracy prompts are a replicable and generalizable approach for reducing the spread of misinformation. Nature Communications, 13(1), 2333. https://doi.org/10.1038/s41467-022-30073-5

Reporters Without Borders. (2017). Predators of press freedom use fake news as a censorship tool. https://rsf.org/en/news/predators-press-freedom-use-fake-news-censorship-tool

Righetti, N. (2021). Four years of fake news: A quantitative analysis of the scientific literature. First Monday, 26(7). https://doi.org/10.5210/fm.v26i7.11645

Rogers, R. (2020). The scale of Facebook’s problem depends upon how ‘fake news’ is classified. Harvard Kennedy School (HKS) Misinformation Review, 1(6). https://doi.org/10.37016/mr-2020-43

Roozenbeek, J., Freeman, A. L., & van der Linden, S. (2021). How accurate are accuracy-nudge interventions? A preregistered direct replication of Pennycook et al. (2020). Psychological Science, 32(7), 1169–1178. https://doi.org/10.1177/09567976211024535

Simon, F. M., & Camargo, C. (2021). Autopsy of a metaphor: The origins, use, and blind spots of the ‘infodemic.’ New Media & Society, 25(8). https://doi.org/10.1177/14614448211031908

Søe, S. O. (2021). A unified account of information, misinformation, and disinformation. Synthese, 198(6), 5929–5949. https://doi.org/10.1007/s11229-019-02444-x

Uscinski, J., Enders, A., Klofstad, C., Seelig, M., Drochon, H., Premaratne, K., & Murthi, M. (2022). Have beliefs in conspiracy theories increased over time? PLOS ONE, 17(7), e0270429. https://doi.org/10.1371/journal.pone.0270429

Van Bavel, J. J., Harris, E. A., Pärnamets, P., Rathje, S., Doell, K. C., & Tucker, J. A. (2021). Political psychology in the digital (mis)information age: A model of news belief and sharing. Social Issues and Policy Review, 15(1), 84–113. https://doi.org/10.1111/sipr.12077

van Prooijen, J.-W. (2017). Why education predicts decreased belief in conspiracy theories. Applied Cognitive Psychology, 31(1), 50–58. https://doi.org/10.1002/acp.3301

Wardle, C., & Derakhshan, H. (2017). Information disorder: Toward an interdisciplinary framework for research and policymaking. Council of Europe. https://rm.coe.int/information-disorder-toward-an-interdisciplinary-framework-for-researc/168076277c

Funding

This study has partially benefited from the support of the project co-financed by the European Commission within the framework of the Connecting Europe Facility (CEF) – Telecommunications Sector (contract no. INEA/CEF/ICT/A2020/2394372).

Competing Interests

The authors do not report any competing interests.

Ethics

Participants provided informed consent before participating in the survey. Participants had the freedom not to answer the question regarding their gender (they could also select the “non-binary/third gender” option or the “prefer not to say” option).

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

All materials needed to replicate this study are available via the Harvard Dataverse: https://doi.org/10.7910/DVN/5C3FHW and OSF: https://doi.org/10.17605/OSF.IO/JD9XF.

Authorship

After the first author who led the project, the order of authors was determined by alphabetical order.