Peer Reviewed

Who reports witnessing and performing corrections on social media in the United States, United Kingdom, Canada, and France?

Observed corrections of misinformation on social media can encourage more accurate beliefs, but for these benefits to occur, corrections must happen. By exploring people’s perceptions of witnessing and performing corrections on social media, we find that many people say they observe and perform corrections across the United States, the United Kingdom, Canada, and France. We find higher levels of self-reported correction experiences in the United States but few differences between who reports these experiences across countries. Specifically, younger and more educated adults, as well as those who see misinformation more frequently online, are more likely to report observing and performing corrections across contexts.

Research Questions

- How often do people perceive themselves seeing and performing corrections?

- Does reported frequency of experiences of observed corrections and performed corrections differ by country?

- To what extent are demographic characteristics, news consumption, and misinformation exposure associated with reported observed corrections and performed corrections?

- Do these associations vary by country?

Essay Summary

- Self-reported observed corrections are relatively common across our samples in the United States, Canada, the United Kingdom, and France, but performed corrections are less so.

- Self-reported observed and performed corrections differ by country, with higher rates in the United States.

- Across countries, older adults are less likely to say they perform or see corrections, while those more educated and those who report seeing misinformation are more likely.

- The contextual or demographic characteristics associated with reported observed and performed corrections do not differ much by country.

Implications

Social media is often criticized as a source of misinformation (Smith et al., 2019; Wang et al., 2019), but social media can also be a source of corrections. Research has consistently found that observed corrections—which occur when people see someone else corrected on social media—reduce misperceptions on that topic among the community witnessing the interaction (Bode & Vraga, 2015; Porter et al., 2023; Vraga & Bode, 2020; Vraga et al., 2020; Walter et al., 2021).

But to truly gauge the societal impact of observed corrections, we need to understand how often people perceive them to occur on social media and how often they say they perform corrections themselves. [Footnote 1: It is important to note that what we measure and describe here is just that: perceptions of these experiences (seeing misinformation, seeing correction, performing correction) rather than anything that we can directly observe. For this reason, throughout the manuscript we will refer to perceptions, reports, and self-reports.] Previous research has begun to explore this question but is largely limited to exploring who witnesses and performs corrections and related behaviors in a single country, for example, the United States (Amazeen et al., 2018; Bode & Vraga, 2021b; Huber et al., 2022), Brazil (Rossini et al., 2021; Vijaykumar et al., 2021), Singapore (Tandoc et al., 2020), or the United Kingdom (Chadwick & Vaccari, 2019). Only two studies (to our knowledge) have explored performed corrections at a single point in time across multiple countries (Singapore, Turkey, and the United States; Kuru et al., 2022, 2023). This reflects a primary focus on the United States within the existing misinformation research, suggesting a need to investigate individuals’ experiences in diverse contexts (Murphy et al., 2023). By including multiple countries in a single study, especially by examining patterns across different contexts, we gain a deeper understanding of how commonly corrections are perceived to occur.

Additionally, the bulk of existing work focuses on the number of people who say they perform corrections but does not also examine how many people report witnessing these corrections (except Bode & Vraga, 2021b; Rossini et al., 2021). This latter question more fully speaks to the potential scope for observed corrections, as we expect many more people to see corrections than perform them themselves. Given existing research on the power of observed correction in reducing misperceptions among broader communities (Bode & Vraga, 2018; Vraga et al., 2020; Walter et al., 2021), knowing how many people report these experiences, and what characterizes them, helps us understand observed corrections’ practical value to society. Therefore, this study expands on previous research by simultaneously examining who says they see and who says they perform corrections across four countries at a single point in time, so that we can better compare the contextual or demographic characteristics associated with each experience across cultural contexts.

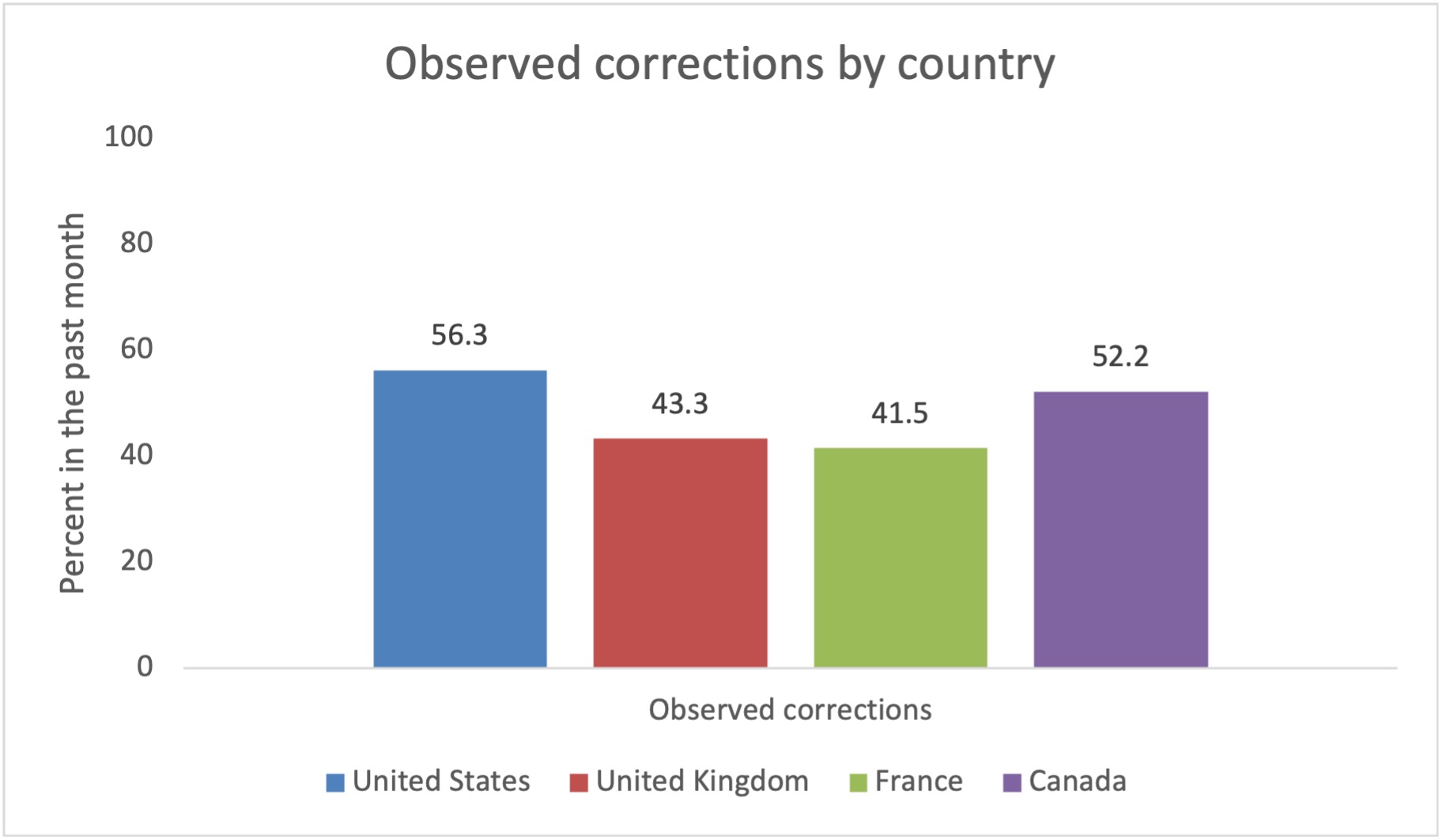

Our descriptive research found that people report that observed corrections happen relatively often across all four countries. Among those who said they saw misinformation at least rarely in the past month, between 41.5% and 56.3% reported also having seen a correction. This experience differed modestly by country: People in the United States and Canada, as compared to the United Kingdom and France, were more likely to report having observed corrections. Two explanations seem promising for these differences. First, the spread of misinformation associated with the 2020 U.S. presidential election and the following January 6th insurrection (Mitchell et al., 2021; Prochaska et al., 2023) was more salient to Americans and Canadians at the time of this survey in early 2021. However, previous research (Boulianne et al., 2021) also suggests that reported misinformation exposure was higher in the United States and Canada in 2019 than in the United Kingdom and France, so results are not limited to this specific time period. Second, we expected that online platforms’ focus on the English language (Ilyosovna, 2020) may make corrections of misinformation more visible or frequent for English-speaking social media users. Supporting these explanations, the most important characteristic associated with observed corrections is perceived exposure to misinformation on social media platforms across all four countries examined.

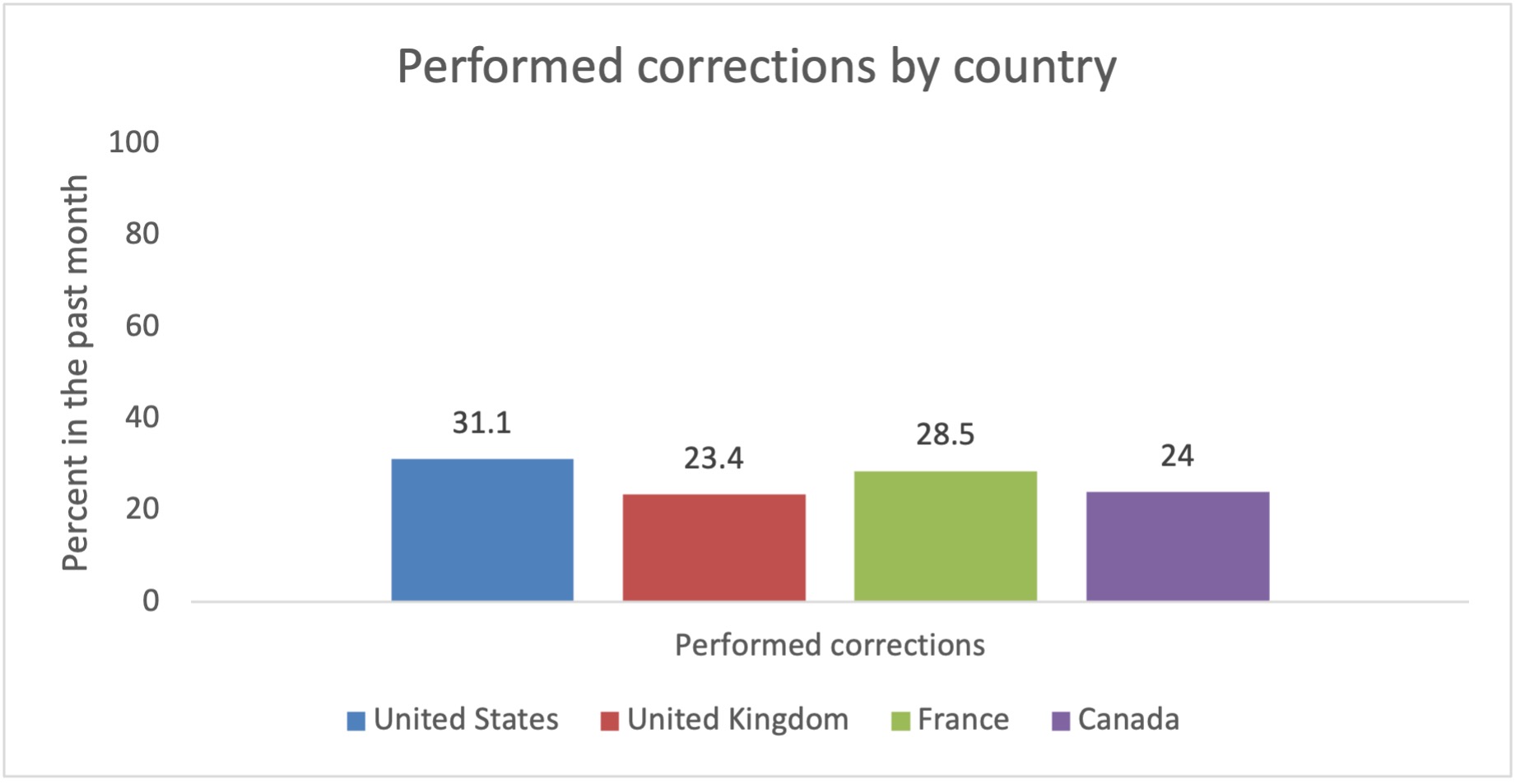

Seeing corrections can only occur if corrections are being performed, something about a quarter of people said they did in the past month. This number appears roughly in line with some previous research. For example, Bode and Vraga (2021b) found that 35% of U.S. respondents who had seen misinformation had also corrected someone in the previous week at the beginning of the COVID-19 pandemic in March of 2020, and Rossini et al. (2021) found that around 32% of Brazilian respondents reported engaging in social corrections on Facebook in 2019. Yet, self-reported performed corrections are also markedly higher than in other cases. For example, Tandoc et al. (2020) found that around 12% of Singapore social media users would leave a comment when reading fake news in 2016, Amazeen et al. (2018) found 15% of their U.S. survey respondents posted fact-checks on social media in 2017, and Chadwick and Vaccari (2019) found that about 11% of U.K. respondents recalled correcting someone else for sharing inaccurate news in 2018. In our study, Americans were most likely to say they had corrected misinformation, whereas British and Canadian respondents were the least likely (with France in between), which again may reflect heightened sensitivity to misinformation, especially among Americans. Much like with observed corrections, seeing misinformation is the strongest predictor of performed corrections. Future research needs to investigate whether these cross-country differences persist and delve into why some countries produce more self-reported correctors than others.

Despite these differences across countries in terms of whether people reported seeing and performing corrections, the contextual (e.g., news consumption and misinformation exposure) or demographic markers associated with seeing or performing corrections were largely consistent across countries. As no research (to our knowledge) has explored these questions in a cross-national context, the finding that there are few (if any) differences between countries in who says they see and perform corrections is notable. Therefore, we consider which individual differences are associated with observed and performed corrections across the four countries.

Beyond seeing misinformation, several important individual characteristics are associated with correction experiences. First, we found that older people were less likely to report seeing and performing corrections on their social media feeds. This might be problematic, as older people were more likely to be exposed to and share fake news sources during the 2016 U.S. presidential election (Brashier & Schacter, 2020; Grinberg et al., 2019; Guess et al., 2019), accept health misinformation (Pan et al., 2021), and visit fact-checking websites less frequently (Robertson et al., 2020). Older adults were also less inclined to correct others (see also Huber et al., 2022). This may be because they perceived misinformation exposure as less risky (Knuutila et al., 2022; Kuru et al., 2022) and worried more about the risk of corrections on social media (Bode & Vraga, 2021a). However, research shows that in the United States, older adults are more likely to share fact-checks in political conversations on social media (Amazeen et al., 2018). This could mean that although older adults are less likely to directly correct others, they may be willing to share fact-check information to indirectly help reduce other people’s misconceptions, a question future research should explore.

Beyond that, education and gender both help explain who observes and performs corrections across the four countries. Our study found females were more likely to say they see corrections, which may result from higher concerns about misinformation (Almenar et al., 2021) that make them more aware of both misinformation and its correction. Moreover, while this study found no difference in the likelihood of performing corrections between males and females across these four Western democracies, past research suggests there are cross-national differences in whether females are more likely to perform corrections, with females in Turkey more likely to perform corrections and females in Singapore less likely to do so (Kuru et al., 2022), suggesting that different countries’ contexts may influence females’ corrective actions. Future research needs to continue to investigate what influences females’ correction-performing choices in different countries. Additionally, people with higher education were more likely to say they both see and perform corrections. This may be because people with higher education levels are more confident in performing corrections or perceive a greater risk of misinformation (Knuutila et al., 2022; Kuru et al., 2022). While our results align with previous research (Bode & Vraga, 2021b; Vijaykumar et al., 2021), the finding that those with lower educational levels were less likely to report seeing corrections but more likely to accept misinformation (Scherer et al., 2021) is concerning. This underscores that corrections might not be a panacea for all groups of people.

Political orientations and political extremism are also associated with people’s correction experiences. On average, across the four countries, people who identified themselves as more left-wing were more likely to witness corrections compared to those on the right. This is aligned with previous research from the United States, indicating that people who perceived themselves to be more liberal were more likely to visit fact-checking websites (Robertson et al., 2020). This result is also concerning, as prior results have shown that, among Americans, identifying as right-wing was associated with higher susceptibility to misinformation (Garrett & Bond, 2021; Roozenbeek et al., 2022), less accurate discernment between real and false COVID-19 headlines (Calvillo et al., 2020), and a greater likelihood of seeing and sharing misinformation (Grinberg et al., 2019). Furthermore, we found that political extremists were more likely to see and perform corrections. However, given that political extremists are more prone to believing in conspiracy theories (van Prooijen et al., 2015), the corrections they reported might be based on unfounded or misleading information, which means some of the “corrections” could be misguided or inaccurate.

Importantly, these individual differences in correction experiences, both in terms of witnessing and performing corrections, highlight the inequalities that pervade the social media environment. If corrections can reduce misperceptions but are not reaching everyone who needs them, it undermines their potential value to society. Likewise, we are concerned when only some voices are represented in performing corrections both because diversity has a normative value for society and because corrections from trusted and close connections are likely to be more effective (Ecker & Antonio, 2021; Margolin et al., 2018). Therefore, more efforts should be made to ensure everyone feels able to correct misinformation safely and that those corrections are reaching across boundaries in society.

While our study provides valuable insights into observed and performed corrections across various countries, it is not without its limitations. One primary constraint is that our research was built on a cross-sectional survey, which limits our capacity to draw causal inferences from the correlations we identified. Additionally, the data only matches the age and sex of country population characteristics, further limiting generalizability. Future studies need to adopt a longitudinal design and use nationally representative samples, which could afford a more nuanced understanding of these experiences over time and improve the generalizability of the results. Additionally, our study relied on self-reported data. We expect many people to overestimate just how often they perform and witness corrections, given that observational studies of the frequency of corrections on social media offer much lower estimates (Bond & Garrett, 2023; He et al., 2023; Ma et al., 2023). We do not have any data regarding those actual experiences that we could match to the survey-reported perceptions on which we report. Future research should, therefore, employ other methods to validate how well people’s perceptions align with their actual experiences and to explore how we can encourage more people to correct and to see themselves as correctors. However, understanding people’s perceptions about this process remains important, as those perceptions speak to potentially powerful social norms in favor of corrections that might be leveraged to further bolster this response to misinformation (Bode & Vraga, 2021a). Given that we find these perceptions are unevenly distributed among the population, this also suggests important gaps where these social norms operate, furthering potential inequalities in who feels they can participate in online discourse.

Another limitation of this study is that idiosyncratic differences in the information environment between countries, especially in terms of proximity to the U.S. presidential election and its aftermath, may influence the results. While our results broadly align with previous work looking at misinformation experiences in Western democracies (Boulianne et al., 2021), more research is needed to validate these results and to continue exploring when and why differences emerge.

Furthermore, this study focuses on only four Western countries (the United States, the United Kingdom, Canada, and France), and the findings cannot be generalized to other countries, especially non-Western countries, with different social, cultural, and political contexts. This offers opportunities for future research, which can investigate how people report their perceived observed and performed corrections in non-Western contexts and what factors may predict correction experiences in other countries (Murphy et al., 2023). Finally, even though our study considered an important set of independent variables (e.g., frequency of misinformation exposure, age, gender, education, news use, and political orientation), there may be other important contextual factors, such as social media use, media literacy skills, trust in media, and cultural norms, which could influence how people respond to misinformation. Future researchers could dive deeper into what other important factors may influence people’s correction experience on social media.

Findings

Finding 1: Self-reported observed corrections are more common among Canadian and American social media users compared to those in the United Kingdom and France.

Among those who reported seeing misinformation in the past month, many people across all four countries also reported seeing corrections happen on social media. This frequency differed across the four countries, with more Canadians and Americans reporting seeing corrections on social media in the past month than residents in the United Kingdom and France.

Finding 2: Self-reported performed corrections are most frequent in the United States compared with those in the United Kingdom and Canada, with those in France falling in the middle.

Fewer people reported performing corrections on social media as compared to seeing them. Among those who reported seeing misinformation in the past month, the percentage of people who reported performing corrections on social media in the past month differed across the four countries: a higher share of Americans reported performing corrections than either British or Canadian residents, with the French falling in between.

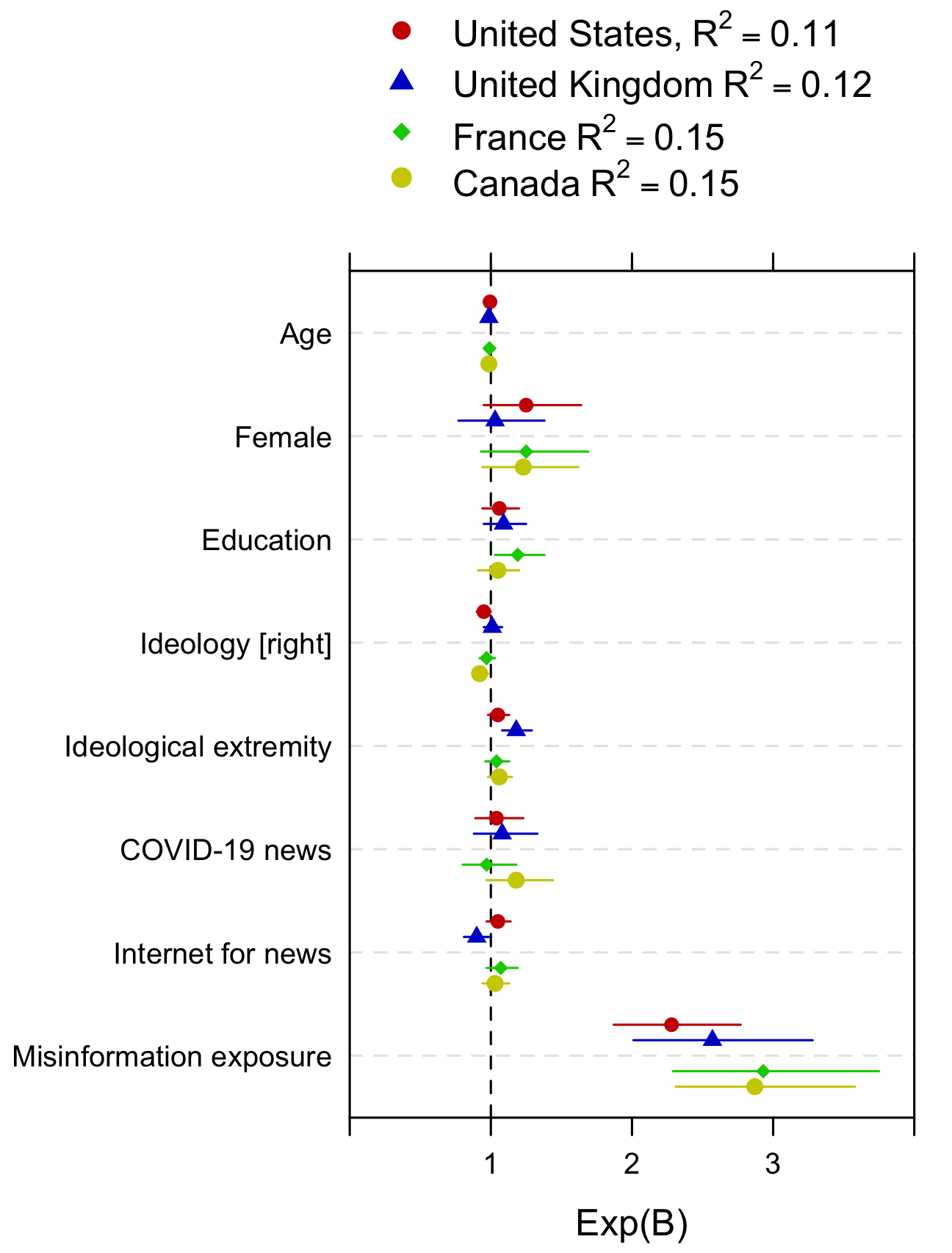

Finding 3: The contextual or demographic characteristics associated with reported observed corrections do not substantially differ by country.

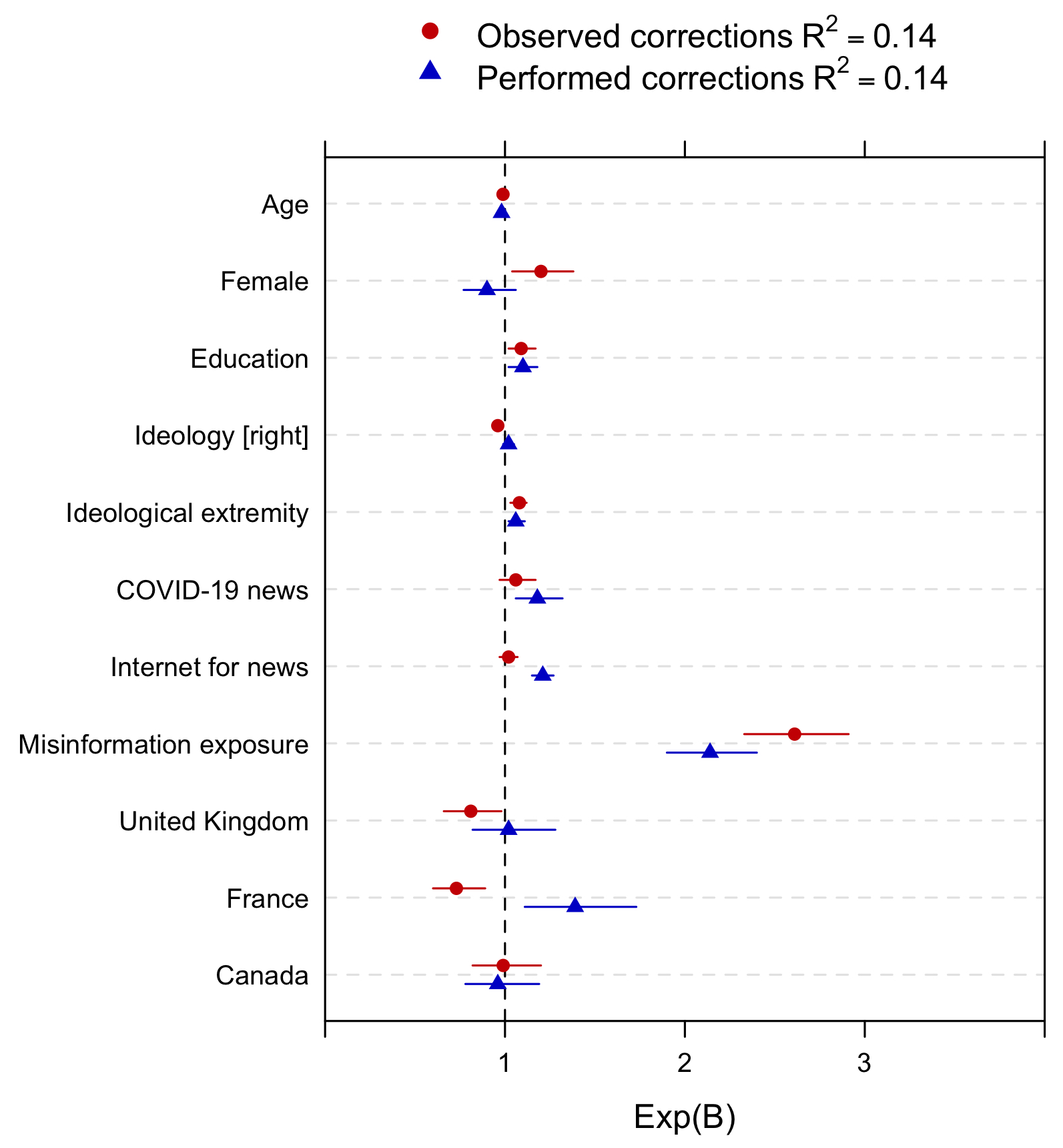

Our results suggest that the individual characteristics associated with observed corrections (age, gender, education, ideology, ideological extremity, news consumption, and misinformation exposure) do not substantially differ across the four countries. The only consistent significant relationship is higher levels of observed corrections among those with more misinformation exposure. Age is also significantly related to seeing corrections in three of the four countries (all except the United States), with older people reporting less frequent experiences of observed corrections. Finally, those who identify more with the right generally report seeing fewer corrections; this pattern is significant in the United States and Canada but not in France and the United Kingdom.

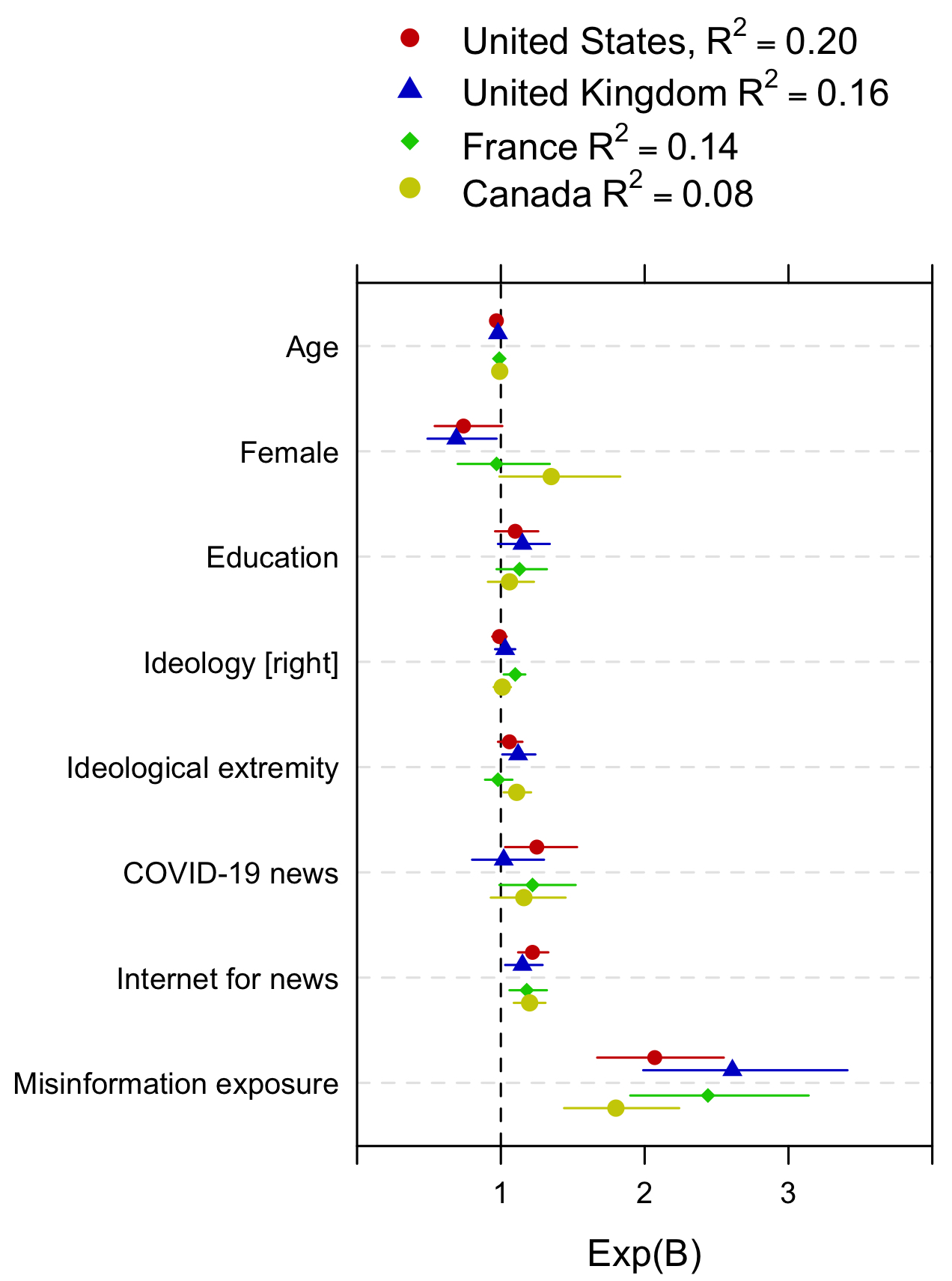

Finding 4: The contextual or demographic characteristics associated with reported performed corrections do not substantially differ by country.

We also did not observe major differences in the predictors of performed corrections by country. Across all four countries, the single strongest and most consistent predictor of performing corrections is seeing misinformation on social media platforms. Additionally, older people are significantly less likely to perform corrections in the United States, the United Kingdom, and France. Finally, the use of the internet for news is consistently, positively, and significantly associated with performing corrections, with those who use the internet for news more likely to report performing corrections on social media in the past month.

Finding 5: Across countries, older adults are less likely to say they perform or see corrections, while the more educated and those who report seeing misinformation are more likely.

Given the overall consistency of our results across countries, our final analysis looked at the characteristics associated with reported observed and performed corrections across all four countries pooled together. We entered three of the countries as controls, with the United States as the excluded (reference) group, to account for differences in the frequency of these experiences across contexts. These analyses largely reinforced the results reported above. Misinformation exposure produced the single strongest relationship to both observed and performed corrections. Age also produced a consistent relationship, with older adults less likely to witness or perform corrections. Looking specifically at observed corrections, we found that females, the more educated, those with a more left-leaning ideology, and ideological extremists are all more likely to report witnessing corrections. Looking specifically at performed corrections, we found that the highly educated, the ideologically extreme, and those who consume more news (both political news via the internet and COVID-19-specific news) are more likely to report performing corrections.
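To make the reference-group setup concrete, the following is a minimal sketch of how country membership could be coded as indicator variables with the United States excluded. The country list and the choice of reference group come from the text; the function name, variable names, and coding scheme are illustrative assumptions and are not taken from the authors' replication files.

```python
# Illustrative sketch only: the country list and reference group follow the
# text, but the helper and the indicator names are hypothetical.
COUNTRIES = ["United States", "United Kingdom", "Canada", "France"]
REFERENCE = "United States"  # excluded group, as described in the text


def country_indicators(country: str) -> dict[str, int]:
    """Return 0/1 indicators for every country except the reference group."""
    if country not in COUNTRIES:
        raise ValueError(f"unknown country: {country!r}")
    return {
        "is_" + name.lower().replace(" ", "_"): int(name == country)
        for name in COUNTRIES
        if name != REFERENCE
    }


if __name__ == "__main__":
    # A U.S. respondent gets zeros on all three indicators, so each
    # country's coefficient is read relative to the United States.
    for name in COUNTRIES:
        print(name, country_indicators(name))
```

Under this coding, a coefficient on any of the three indicators would describe how that country differs from the United States after accounting for the other predictors in the model.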

Methods

To answer our research questions, we employed Kantar (an international survey firm) to administer a survey to an online panel in January and February of 2021. One thousand five hundred people in each of three countries (the United States, France, and the United Kingdom) participated, along with 1,568 participants from Canada (total N = 6,068). Only adults older than 18 years old were invited to participate, and participants were recruited to match country population characteristics in terms of age and sex (see Appendix B). Data collection was supported by funding from Canadian Heritage’s Digital Citizenship Initiative. The data and replication files are available via the Harvard Dataverse at https://doi.org/10.7910/DVN/IG3WCC.

Participants were asked close-ended questions about their news habits, their social media use, and their misinformation exposure. Misinformation exposure was measured by asking participants to rate how often they had seen someone share misinformation (defined as information that is false or misleading) on the social media sites they used in the past month, on a four-point scale from 1 (never) to 4 (often). Only participants who rated their exposure at 2 (rarely) or higher were asked follow-up questions about whether they saw and performed corrections on social media. To measure observed and performed corrections, participants were asked to respond “yes” or “no” to the following two questions: (1) whether they had seen someone else being told they shared misinformation on social media in the past month and (2) when they saw this misinformation, whether they offered a correction. Please see Appendix C for details on question-wording for all independent and dependent variables.
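The skip logic described above can be sketched as follows. This is a hedged illustration rather than the authors' code: the 1 to 4 exposure scale, the threshold of 2 (rarely), and the two yes/no follow-up items come from the text, while the `Respondent` class, field names, and `correction_shares` helper are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Respondent:
    # Hypothetical record layout; only the scale and items follow the text.
    misinfo_exposure: int  # 1 (never) to 4 (often)
    saw_correction: Optional[bool] = None        # None if not asked
    performed_correction: Optional[bool] = None  # None if not asked


def was_asked_follow_ups(r: Respondent) -> bool:
    """Follow-ups go only to those reporting exposure of 2 (rarely) or more."""
    return r.misinfo_exposure >= 2


def correction_shares(respondents: list[Respondent]) -> dict:
    """Share answering 'yes' to each item among those asked the follow-ups."""
    asked = [r for r in respondents if was_asked_follow_ups(r)]
    if not asked:
        return {"n_asked": 0, "observed": None, "performed": None}
    n = len(asked)
    return {
        "n_asked": n,
        "observed": sum(1 for r in asked if r.saw_correction) / n,
        "performed": sum(1 for r in asked if r.performed_correction) / n,
    }
```

Because the denominator is restricted to respondents who reported seeing misinformation at least rarely, shares computed this way describe that subgroup rather than the full sample.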

Bibliography

Almenar, E., Aran-Ramspott, S., Suau, J., & Masip, P. (2021). Gender differences in tackling fake news: Different degrees of concern, but same problems. Media and Communication, 9(1), 229–238. https://doi.org/10/gppkr3

Amazeen, M. A., Vargo, C. J., & Hopp, T. (2018). Reinforcing attitudes in a gatewatching news era: Individual-level antecedents to sharing fact-checks on social media. Communication Monographs, 86(1), 112–132. https://doi.org/10/ggjk25

Bode, L., & Vraga, E. (2021a). Value for correction: Documenting perceptions about peer correction of misinformation on social media in the context of COVID-19. Journal of Quantitative Description: Digital Media, 1. https://doi.org/10/gr6kzp

Bode, L., & Vraga, E. K. (2015). In related news, that was wrong: The correction of misinformation through related stories functionality in social media. Journal of Communication, 65(4), 619–638. https://doi.org/10.1111/jcom.12166

Bode, L., & Vraga, E. K. (2018). See something, say something: Correction of global health misinformation on social media. Health Communication, 33(9), 1131–1140. https://doi.org/10.1080/10410236.2017.1331312

Bode, L., & Vraga, E. K. (2021b). Correction experiences on social media during COVID-19. Social Media + Society, 7(2), 205630512110088. https://doi.org/10/gr6kxc

Bond, R. M., & Garrett, R. K. (2023). Engagement with fact-checked posts on Reddit. PNAS Nexus, 2(3), pgad018. https://doi.org/10/grqm6x

Boulianne, S., Belland, S., Tenove, C., & Friesen, K. (2021). Misinformation across social media platforms and across countries. Research Online at MacEwan. https://hdl.handle.net/20.500.14078/2244

Brashier, N. M., & Schacter, D. L. (2020). Aging in an era of fake news. Current Directions in Psychological Science, 29(3), 316–323. https://doi.org/10.1177/0963721420915872

Calvillo, D. P., Ross, B. J., Garcia, R. J. B., Smelter, T. J., & Rutchick, A. M. (2020). Political ideology predicts perceptions of the threat of COVID-19 (and susceptibility to fake news about it). Social Psychological and Personality Science, 11(8), 1119–1128. https://doi.org/10/gg56q6

Chadwick, A., & Vaccari, C. (2019). News sharing on UK social media: Misinformation, disinformation, and correction. Loughborough University. https://repository.lboro.ac.uk/articles/report/News_sharing_on_UK_social_media_misinformation_disinformation_and_correction/9471269/1

Ecker, U. K. H., & Antonio, L. M. (2021). Can you believe it? An investigation into the impact of retraction source credibility on the continued influence effect. Memory & Cognition, 49(4), 631–644. https://doi.org/10.3758/s13421-020-01129-y

Garrett, R. K., & Bond, R. M. (2021). Conservatives’ susceptibility to political misperceptions. Science Advances, 7(23), eabf1234. https://doi.org/10/gkzv8m

Grinberg, N., Joseph, K., Friedland, L., Swire-Thompson, B., & Lazer, D. (2019). Fake news on Twitter during the 2016 U.S. presidential election. Science, 363(6425), 374–378. https://doi.org/10/gf3gmt

Guess, A., Nagler, J., & Tucker, J. (2019). Less than you think: Prevalence and predictors of fake news dissemination on Facebook. Science Advances, 5(1), eaau4586. https://doi.org/10.1126/sciadv.aau4586

He, B., Ahamad, M., & Kumar, S. (2023). Reinforcement learning-based counter-misinformation response generation: A case study of COVID-19 vaccine misinformation. In WWW ’23: Proceedings of the ACM web conference 2023 (pp. 2698–2709). Association for Computing Machinery. https://doi.org/10.1145/3543507.3583388

Huber, B., Borah, P., & Gil de Zúñiga, H. (2022). Taking corrective action when exposed to fake news: The role of fake news literacy. Journal of Media Literacy Education, 14(2), 1–14. https://doi.org/10/gr6kzq

Ilyosovna, N. A. (2020). The importance of English language. International Journal on Orange Technologies, 2(1), 22–24. https://journals.researchparks.org/index.php/IJOT/article/view/478

Knuutila, A., Neudert, L.-M., & Howard, P. N. (2022). Who is afraid of fake news? Modeling risk perceptions of misinformation in 142 countries. Harvard Kennedy School (HKS) Misinformation Review, 3(3). https://doi.org/10.37016/mr-2020-97

Kuru, O., Campbell, S. W., Bayer, J. B., Baruh, L., & Ling, R. (2022). Encountering and correcting misinformation on WhatsApp. In H. Wasserman & D. Madrid-Morales (Eds.), Disinformation in the Global South (pp. 88–107). John Wiley & Sons. https://doi.org/10.1002/9781119714491.ch7

Kuru, O., Campbell, S. W., Bayer, J. B., Baruh, L., & Ling, R. S. (2023). Reconsidering misinformation in WhatsApp groups: Informational and social predictors of risk perceptions and corrections. International Journal of Communication, 17(2023), 2286–2308. https://ijoc.org/index.php/ijoc/article/view/19590/4110

Ma, Y., He, B., Subrahmanian, N., & Kumar, S. (2023). Characterizing and predicting social correction on Twitter. In WebSci ’23: Proceedings of the 15th ACM web science conference 2023 (pp. 86–95). Association for Computing Machinery. https://doi.org/10.1145/3578503.3583610

Margolin, D. B., Hannak, A., & Weber, I. (2018). Political fact-checking on Twitter: When do corrections have an effect? Political Communication, 35(2), 196–219. https://doi.org/10/cv28

Mitchell, A., Jurkowitz, M., Oliphant, J. B., & Shearer, E. (2021). 3. Misinformation and competing views of reality abounded throughout 2020. In How Americans navigated the news in 2020: A tumultuous year in review. Pew Research Center. https://www.pewresearch.org/journalism/2021/02/22/misinformation-and-competing-views-of-reality-abounded-throughout-2020/

Murphy, G., de Saint Laurent, C., Reynolds, M., Aftab, O., Hegarty, K., Sun, Y., & Greene, C. M. (2023). What do we study when we study misinformation? A scoping review of experimental research (2016-2022). Harvard Kennedy School (HKS) Misinformation Review, 4(6). https://doi.org/10.37016/mr-2020-130

Pan, W., Liu, D., & Fang, J. (2021). An examination of factors contributing to the acceptance of online health misinformation. Frontiers in Psychology, 12, 630268. https://doi.org/10/gpv6bx

Porter, E., Velez, Y., & Wood, T. J. (2023). Correcting COVID-19 vaccine misinformation in 10 countries. Royal Society Open Science, 10(3), 221097. https://doi.org/10/gr2nzt

Prochaska, S., Duskin, K., Kharazian, Z., Minow, C., Blucker, S., Venuto, S., West, J. D., & Starbird, K. (2023). Mobilizing manufactured reality: How participatory disinformation shaped deep stories to catalyze action during the 2020 U.S. presidential election. Proceedings of the ACM on Human-Computer Interaction, 7(CSCW1), 1–39. https://doi.org/10.1145/3579616

Robertson, C. T., Mourão, R. R., & Thorson, E. (2020). Who uses fact-checking sites? The impact of demographics, political antecedents, and media use on fact-checking site awareness, attitudes, and behavior. The International Journal of Press/Politics, 25(2), 217–237. https://doi.org/10/gr6kxs

Roozenbeek, J., Maertens, R., Herzog, S. M., Geers, M., Kurvers, R., Sultan, M., & van der Linden, S. (2022). Susceptibility to misinformation is consistent across question framings and response modes and better explained by myside bias and partisanship than analytical thinking. Judgment and Decision Making, 17(3), 547–573. https://doi.org/10.1017/S1930297500003570

Rossini, P., Stromer-Galley, J., Baptista, E. A., & Veiga de Oliveira, V. (2021). Dysfunctional information sharing on WhatsApp and Facebook: The role of political talk, cross-cutting exposure and social corrections. New Media & Society, 23(8), 2430–2451. https://doi.org/10.1177/1461444820928059

Scherer, L. D., McPhetres, J., Pennycook, G., Kempe, A., Allen, L. A., Knoepke, C. E., Tate, C. E., & Matlock, D. D. (2021). Who is susceptible to online health misinformation? A test of four psychosocial hypotheses. Health Psychology, 40(4), 274. https://doi.org/10/gjw3gm

Smith, A., Silver, L., Johnson, C., & Jiang, J. (2019). Publics in emerging economies worry social media sow division, even as they offer new chances for political engagement. Pew Research Center. https://www.pewresearch.org/internet/2019/05/13/publics-in-emerging-economies-worry-social-media-sow-division-even-as-they-offer-new-chances-for-political-engagement/

Tandoc, E. C., Lim, D., & Ling, R. (2020). Diffusion of disinformation: How social media users respond to fake news and why. Journalism, 21(3), 381–398. https://doi.org/10/ggcgpc

van Prooijen, J.-W., Krouwel, A. P. M., & Pollet, T. V. (2015). Political extremism predicts belief in conspiracy theories. Social Psychological and Personality Science, 6(5), 570–578. https://doi.org/10.1177/1948550614567356

Vijaykumar, S., Rogerson, D. T., Jin, Y., & de Oliveira Costa, M. S. (2021). Dynamics of social corrections to peers sharing COVID-19 misinformation on WhatsApp in Brazil. Journal of the American Medical Informatics Association, 29(1), 33–42. https://doi.org/10/gr6kzs

Vraga, E. K., & Bode, L. (2020). Correction as a solution for health misinformation on social media. American Journal of Public Health, 110(S3), S278–S280. https://doi.org/10/gjmhgh

Vraga, E. K., Kim, S. C., Cook, J., & Bode, L. (2020). Testing the effectiveness of correction placement and type on Instagram. The International Journal of Press/Politics, 25(4), 632–652. https://doi.org/10/gg87jt

Walter, N., Brooks, J. J., Saucier, C. J., & Suresh, S. (2021). Evaluating the impact of attempts to correct health misinformation on social media: A meta-analysis. Health Communication, 36(13), 1776–1784. https://doi.org/10.1080/10410236.2020.1794553

Wang, Y., McKee, M., Torbica, A., & Stuckler, D. (2019). Systematic literature review on the spread of health-related misinformation on social media. Social Science & Medicine, 240, 112552. https://doi.org/10.1016/j.socscimed.2019.112552

Funding

Data collection was supported by funding from Canadian Heritage’s Digital Citizenship Initiative.

Competing Interests

The authors declare no competing interests.

Ethics

The research protocol employed was approved by MacEwan University (File No. 101856) in accordance with Canada’s Tri-Council Policy Statement: Ethical Conduct for Research Involving Humans. Human subjects provided informed consent. We did not ask about ethnicity, which is difficult to translate across countries. More importantly, France has rules against asking about race/ethnicity, and approval to do so is difficult to obtain. As for gender, respondents self-identified as male, female, or non-binary, as per AAPOR guidelines from 2018.

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

All materials needed to replicate this study are available via the Harvard Dataverse: https://doi.org/10.7910/DVN/IG3WCC