Peer Reviewed

US-skepticism and transnational conspiracy in the 2024 Taiwanese presidential election


Taiwan has one of the highest freedom of speech indexes, yet it also faces the largest amount of foreign interference, owing to its contentious history with China. Because of the large influx of misinformation, Taiwan has taken a public crowdsourcing approach to combating misinformation, using both fact-checking ChatBots and a public dataset called CoFacts. Combining CoFacts with large language models (LLMs), we investigated misinformation across three platforms (Line, PTT, and Facebook) during the 2024 Taiwanese presidential election. We found that most misinformation appears within China-friendly political groups and attacks US-Taiwan relations through visual media like images and videos. A considerable proportion of misinformation does not question U.S. foreign policy directly. Rather, it exaggerates domestic issues in the United States to create a sense of declining U.S. state capacity. Curiously, we also found misinformation rhetoric that references conspiracy groups in the West.

Research Questions

- What are the misinformation narratives surrounding the election in Taiwan and how do they target international relations with the United States?

- What geographical or temporal patterns emerge from misinformation data?

- Who are the targets of these misinformation narratives and through what modalities?

Essay Summary

- We leveraged a dataset of 41,291 labeled articles from Line, 911,510 posts from Facebook, and 2,005,972 posts and comments from PTT to understand misinformation dynamics through topic modeling and network analysis.

- The primary form of misinformation is narratives that attack international relations with the United States (henceforth referred to as US-skepticism), specifically referencing the economy, health policy, the threat of war through Ukraine, and other U.S. domestic issues.

- Temporal and spatial evidence suggests VPN-based coordination, focused on U.S. issues and originating from U.S. IP addresses.

- Misinformation is most common among pan-Blue and ROC identity groups on social media and is spread through visual media. These groups share many themes with conspiracy groups in Western countries.

- Our study shows the prevalence of misinformation strategies that use visual media and fake news websites. It also highlights how crowdsourcing and advances in large language models can be used to identify misinformation in cross-platform workflows.

Implications

According to Freedom House, Taiwan has one of the highest indices for free speech in Asia (Freedom House, 2022). At the same time, due to its contentious history with China, it receives significant foreign interference and misinformation, especially during its presidential elections. In response to this influx of dis- and misinformation, Taiwan has developed many strategies to counter misleading narratives, including fact-checking ChatBots on its most popular chatroom app (Chang et al., 2020). In this information environment, the 2024 Taiwanese presidential election emerged as one of the most divisive in Taiwan’s history. At one point, the number of presidential candidates in a typically two-party race doubled from two to four. As such, Taiwan is regarded as a “canary for disinformation” in the 2024 elections: an early indicator of how foreign interference may take place in other democracies (Welch, 2024).

In this paper, we study the misinformation ecosystem in Taiwan starting a year prior to the election. First, our findings highlight the interaction between misinformation and international relations. As reported in The Economist and The New York Times, a considerable portion of the misinformation spread in Taiwan before the 2024 election concerns US-skepticism, which aims to undermine the reputation of the United States among Taiwanese people (“China is flooding Taiwan with disinformation,” 2023; Hsu, Chien, and Myers, 2023). This phenomenon is significant because it does not target specific candidates or parties in the election but may indirectly influence the vote choice between pro- and anti-U.S. parties. Given the US-China global competition and the ongoing Russia-Ukraine conflict, the reputation of the United States is crucial for the strength and reliability of democratic allies (Cohen, 2003). Hence, it is not surprising that misinformation about the United States may propagate globally and influence elections across democracies. However, our findings surprisingly show that US-skepticism also includes a considerable number of attacks on U.S. domestic politics. Such content does not question U.S. foreign policy but undermines the perceived reliability and state capacity of the United States. Here, state capacity is defined as whether a state is capable of mobilizing its resources to realize its goals, which is conceptually different from motivation and trust.

US-skepticism is commonly characterized as mistrust of the motivations of the United States. The Latin American context illustrates this, where the mistrust stems from long histories of political influence (see dependency theory; Galeano, 1997). Our findings suggest that perceived U.S. state capacity is also an important narrative. As most foreign disinformation arises from China, this indicates a broader trend in which authoritarian countries turn to sharp power tactics to distort information and defame global competitors, rather than winning hearts and minds through soft power. Sharp power refers to the ways in which authoritarian regimes project their influence abroad to pierce, penetrate, or perforate the informational environments in targeted countries (Walker, 2018). In Taiwan’s case, China may not be able to tell its own story well, but it can still influence Taiwanese voters by making them believe that the United States is declining. Our findings suggest that future work analyzing the topics and keywords of misinformation in elections outside the United States should also consider US-skepticism as a latent category. This goes beyond the politicians and countries that are the usual targets of electoral misinformation (Tenove et al., 2018). These findings are corroborated by narratives identified in a recent report, including drug issues, race relations, and urban decay (Microsoft Threat Intelligence, 2024).

Additionally, our research investigates both misinformation and conspiracy theories, which are closely related. Whereas misinformation is broadly described as “false or inaccurate information” (Jerit & Zhao, 2020), a conspiracy theory is the belief that harmful events are caused by a powerful, often secretive, group. In particular, conspiracy communities often coalesce around activities of “truth-seeking,” embodying a contrarian view toward commonly held beliefs (Enders et al., 2022; Harambam, 2020; Konkes & Lester, 2017). Our findings also provide evidence of transnational similarities between conspiracy groups in Taiwan and the United States. Whereas the domestic context has been explored (Chen et al., 2023; Jerit & Zhao, 2020), the intersection of partisanship and conspiracy groups as conduits for cross-national misinformation flow deserves further investigation.

Second, our findings reemphasize that an IP address is not a reliable criterion for attributing foreign intervention. Previous studies of Chinese cyber armies show that they use VPNs for their activities on Twitter (now X) (Wang et al., 2020) and Facebook (Frenkel, 2023). Commonly known as the Reddit of Taiwan, PTT is a public forum that by default displays the IP address of the poster. Our analysis of PTT located a group of accounts with U.S. IP addresses that have the same activity pattern as other Taiwan-based accounts. It is therefore likely that these accounts use VPNs to hide their geolocation. Our results provide additional evidence that this VPN strategy also appears on secondary and localized social media platforms. They also suggest that analyses of where misinformation originates should not rely solely on IP addresses.

Third, our findings show that text is far from the only format used in the spread of misinformation. A considerable amount of misinformation identified on Facebook is spread through links (47%), videos (21%), and photos (15%). These items may echo each other’s content or even flow across platforms. Proper tools are needed to extract and juxtapose content from different types of media so that researchers can holistically analyze the spread and development of misinformation (Tucker et al., 2018). Such tools are urgent because mainstream social media platforms have adopted and strongly encouraged short videos. This makes short video a crucial area for researchers assessing how misinformation spreads across platforms in the upcoming year of elections. This understanding is also important for fact-checking agencies, which must prepare to collect and review information on various topics found in multiple media types across platforms. Crowdsourcing, data science, expert input, and international collaboration are all needed to deal with multi-format misinformation environments.

Prior studies show that aggregated fact-checks (known as the wisdom of crowds) perform on par with expert ratings (see Arechar et al., 2023; Martel et al., 2023). Our case study also shows how crowdsourcing and LLM approaches can not only fact-check quickly but also summarize larger narrative trends. In Taiwan, this takes the form of the CoFacts open dataset, which we use to identify misinformation narratives. CoFacts is a project initiated by g0v (pronounced “gov zero”), a civic hacktivism community in Taiwan that started in 2012. CoFacts started as a fact-checking ChatBot that circumvents the closed nature of chatroom apps: users can forward suspicious messages to it or add the ChatBot to private rooms. These messages are then sent to a database, where they are reviewed individually by more than 2,000 volunteers, including teachers, doctors, students, engineers, and retirees (Haime, 2022). As a citizen-initiated project, it is not affiliated with any government entity or party.

Crucially, these reviews provide valuable labels that are used to train AI models and fine-tune LLMs. The dataset is openly available on the popular deep-learning platform Hugging Face. Just as AI and automation can be used to spread misinformation (Chang, 2023; Chang & Ferrara, 2022; Ferrara et al., 2020; Monaco & Woolley, 2022), they can also help combat “fake news” through human-AI collaboration.

Findings

Finding 1: The primary form of misinformation is narratives that attack international relations with the United States (henceforth referred to as US-skepticism), specifically referencing the economy, health policy, the threat of war through Ukraine, and other U.S. domestic issues.

The status quo between China and Taiwan is marked by Taiwan’s self-identification as a sovereign state, in contrast to China’s view of Taiwan as part of its territory under the “One China” policy. As brief context, China has claimed Taiwan as its territory since 1949, but the United States has helped maintain the status quo and peace since the outbreak of the Korean War in 1950. After democratization in 1987, Taiwan’s politics have been dominated by a clear blue-green division. The blue camp is led by the Kuomintang (Nationalist Party, KMT hereafter), the founding party of the Republic of China (ROC, the formal name of Taiwan’s government under its constitution). The KMT was defeated by the People’s Republic of China (PRC) and retreated to Taiwan in 1949. The green camp is led by the Democratic Progressive Party (DPP), which seeks to revise the ROC Constitution and change the country’s name to Taiwan. The political cleavage between the blue and green camps is defined by Taiwan’s relationship with the PRC and the United States. The blue camp’s position is that the PRC and ROC remain in a state of civil war but belong to the same Chinese nation. The blue camp therefore welcomes military support from the United States while enhancing economic and cultural cooperation with the PRC. The green camp believes that the necessary conditions for Taiwan to be free and independent are to stand firmly with the United States and maintain distance from the PRC. After 2020, the two major camps’ insufficient attention to domestic and social issues led to the rise of nonpartisans and a third party, the Taiwan People’s Party (TPP, or the white camp), which strategically avoids discussing foreign policy. In the 2024 election, the ruling DPP (green) won a third consecutive presidential term (spanning 2016 to 2028) with 40% of the vote, while the KMT (blue) and TPP (white) received 33% and 26%, respectively.

The U.S. “One China” policy since 1979 holds that the United States opposes any change to the status quo unless it is achieved peacefully. This has motivated the PRC to persuade Taiwanese citizens to support unification through misinformation targeted at China-friendly political groups. The reasoning is that the cost of unification would be greatly reduced if enough Taiwanese citizens opposed U.S. military intervention. This history between the United States and Taiwan serves as the foundation of US-skepticism. In the literature, US-skepticism in Taiwan is composed of two key psychological elements: trust and motivation (Wu & Lin, 2019; Wu, 2023). First, many Taiwanese have distrusted the United States since it switched diplomatic ties from Taiwan (ROC) to the PRC in 1979. Many blue-camp supporters doubt the commitment of the United States, under the Taiwan Relations Act, to send troops should China invade (Wu & Lin, 2019). Second, Taiwanese citizens question whether the United States sees Taiwan as a proxy in a potential war with China rather than sincerely seeking to protect democracy and human rights in Taiwan (Wu, 2023).

The CoFacts dataset contains 140,314 articles submitted by Line users, which are then fact-checked by volunteers as rumor (47%), not a rumor (21%), not an article (19%), and opinion (13%). Here, rumor is synonymous with misinformation. Using the CoFacts dataset, we trained a BERTopic model to identify 34 forms of misinformation and then ranked them by their overlap with the word “elections” in Mandarin Chinese (George & Sumathy, 2023; Nguyen et al., 2020). Table 1 shows the top nine narratives.

| Topic | % Election-related | Entries | Keywords (Chinese) | Keywords (English) |
|---|---|---|---|---|
| Attacking DPP | 18.1% | 2,371 | 民進黨 – 朋友 – 政府 – 吃 | DPP – Friend – Government – Endure Hardship |
| Ballot Numbers | 16.2% | 290 | 投票 – 選票 – 公投 – 選舉 – 票數 | Vote – Election – Ballots |
| Attacking International Relations | 12.8% | 10,826 | 台灣 – 美國 – 中國 | Taiwan – USA – China |
| Gay Marriage and Birthrate | 11.1% | 485 | 教育 – 孩子 – 婚姻 – 公投 – 家長 | Education – Child – Marriage – Vote – Parenting |
| Vaccines | 9.7% | 1,492 | 疫苗 – 接種 – 病毒 – 死亡 – 注射 | Vaccine – Virus – Death – Shot |
| Nuclear Energy | 9.2% | 462 | 核 – 台灣 – 電 – 廢水 – 電廠 | Nuclear – Taiwan – Electricity – Water – Power Plant |
| Biometrics and Privacy | 5.7% | 140 | 指紋 – 台風 – 太陽 – 瀏覽器 – 複製 | Fingerprints – Sun – Browser – Copy |
| Egg Imports | 5.0% | 280 | 雞蛋 – 蛋 – 進口 – 農業部 – 吃 | Egg – Import – Agriculture – Eat |
| Ukraine | 4.6% | 216 | 烏克蘭 – 俄羅斯 – 美國 – 戰爭 – 北約 | Ukraine – Russia – United States – War |

Many of these narratives are directly related to political parties or the democratic process. For instance, the highest-ranked topic is attacking the incumbent party (the DPP) at 18.1%, which contains 2,371 total posts. The subsequent misinformation topics focus on policy issues and specific narratives—international relations, issues of marriage and birth rate, vaccines, nuclear energy, biometrics, egg imports, and the war in Ukraine. These are known cleavage issues and overlap with the eight central concerns during the election cycle—economic prosperity, cross-strait affairs, wealth distribution, political corruption, national security, social reform/stability, and environmental protection (Achen & Wang, 2017; Achen & Wang, 2019; Chang & Fang, 2023).

We focus on the third-ranked topic, which concerns the relationship between Taiwan, the United States, and China. US-skepticism is not only the largest topic, at 10,826 individual posts, but also one flagged by journalists, policymakers, and politicians as among the most crucial themes. In terms of proportion, this is a relatively new phenomenon, which aims to sow distrust toward the United States (“China is flooding Taiwan with disinformation,” 2023). In contrast, questioning the fairness of the process (e.g., ballot numbers) and policy positions (e.g., gay marriage) is common during elections. US-skeptical misinformation diverges in that no explicit political candidate or party is targeted. By sampling articles within this topic and validating them with an LLM summarizer through the ChatGPT API, we identified three specific narratives:

(a) The United States and the threat of war: Ukraine intersects frequently in this topic, with videos of direct military actions. Example: “Did you hear former USA military strategist Jack Keane say the Ukrainian war is an investment. The USA spends just $66,000,000,000 and can make Ukraine and Russia fight… Keane then mentions Taiwan is the same, where Taiwanese citizens are an ‘investment’ for Americans to fight a cheap war. The USA is cold and calculating, without any actual intent to help Taiwan!”

(b) Economic atrophy due to fiscal actions by the United States: These narratives focus on domestic policy issues in Taiwan such as minimum wage and housing costs. Example: “The USA printed 4 trillion dollars and bought stocks everywhere in the world, including Taiwan, and caused inflation and depressed wages. Be prepared!”

(c) Vaccine supply and the United States: While some narratives focus on the efficacy of vaccines, several describe the United States intentionally limiting supply during the pandemic. Example: “Taiwanese Dr. Lin is a leading scientist at Moderna, yet sells domestically at $39 per two doses, $50 to Israel. Taiwan must bid at least $60! The United States clearly does not value Taiwan.”

These narratives reveal a new element of US-skepticism: state capacity. As previously mentioned, state capacity is defined as whether a state is capable of mobilizing its resources to realize its goals. The Ukraine war and vaccine supply narratives both question the United States’ motivations in foreign policy and its perceived trustworthiness. Meanwhile, the economic atrophy narrative is based on the United States’ domestic budget deficit and its downstream impact on the Taiwanese economy. These narratives frame U.S. state capacity as declining and imply that the United States can no longer fulfill its other commitments due to a lack of resources and capacity. The goal of such a narrative is to lower the Taiwanese audience’s belief that the United States will help. Yet such a narrative includes neither keywords of its target group (e.g., Taiwan) nor the PRC’s goal (e.g., unification). It works only through framing and priming, as an example of sharp power.

Misinformation narratives related to the United States focus specifically on Ukraine (28.8%), the economy and fiscal policies (33.1%), technology (25.2%), and vaccine supply (9.9%). Misinformation related to state capacity makes up approximately 52.4%, more than half of all narratives (see Figure A1, part a in the Appendix). Across all narratives, political parties are referenced only 27.8% of the time, with the DPP as the primary target (26.2%). This is roughly half the proportion for state capacity. China is mentioned together with the United States in only 38.4% of the posts (see Figure A1, part b in the Appendix).

Finding 2: Temporal and spatial evidence suggests VPN-based coordination, focused on U.S. issues and originating from U.S. IP addresses.

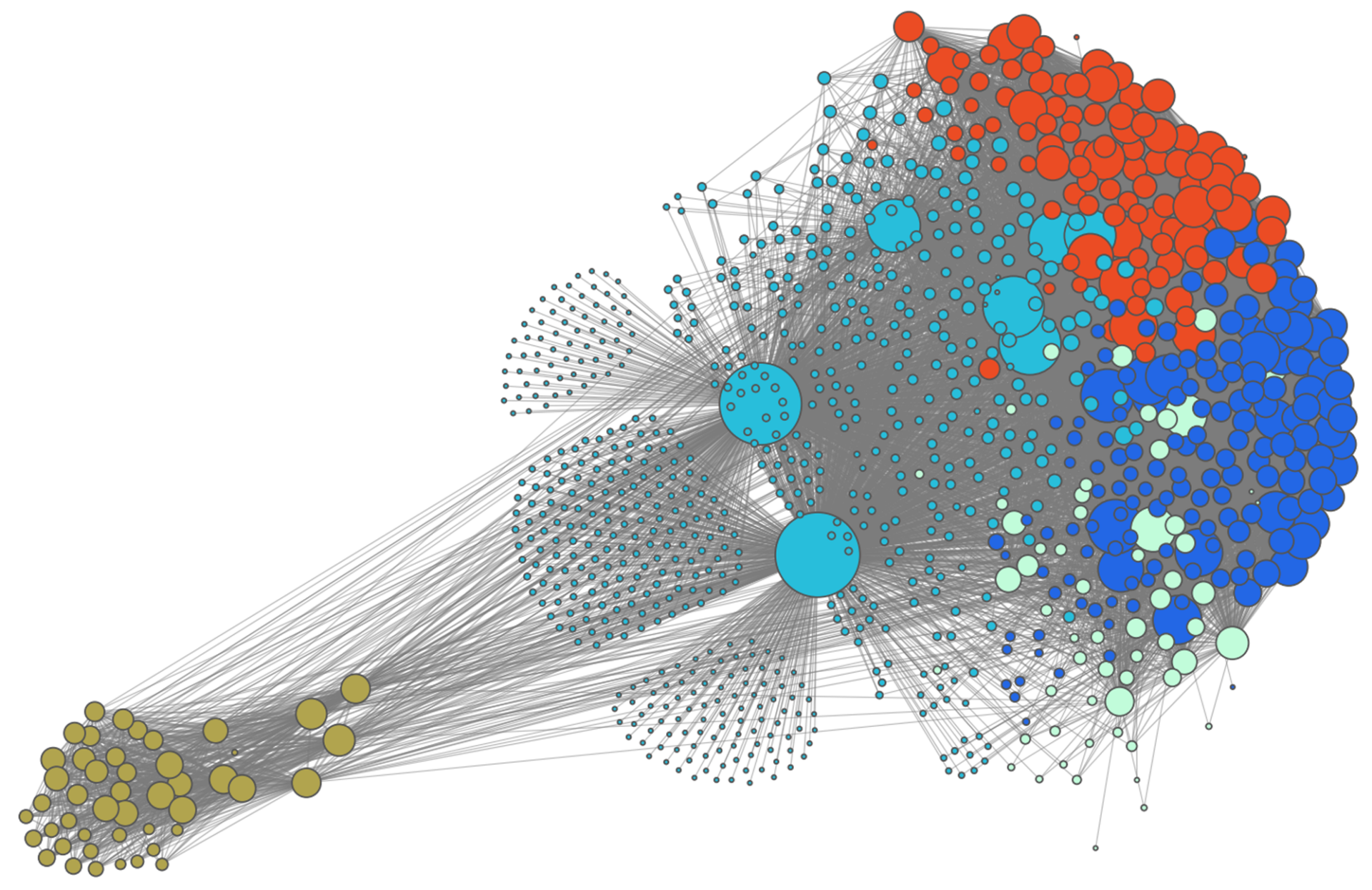

Once we identified the top misinformation narratives using Line, we investigated information operations and coordination. Line is one of Taiwan’s most popular communication apps, featuring chatrooms (similar to WhatsApp), with a usage rate of 83%. One limitation of Line is that although we can analyze message content, Line chatrooms are effectively conversations behind “closed doors.” Platforms cannot impose content moderation, and researchers have access neither to the users themselves nor to the private chatrooms in which users engage with misinformation (Chang et al., 2020). PTT, on the other hand, provides a public, forum-like environment in which users can interact. Figure 1 shows the co-occurrence network of users who comment on the same forum posts. Each circle (node) represents a user who posts on PTT. If two users have both commented on more than 200 of the same posts, they are connected (form a tie). Intuitively, two users who are connected, or “close” through mutual connections, are likely coordinating or behaving in extremely similar ways. The placement of users in the figure is determined by these connections.
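The tie rule above can be sketched in plain Python. This is a minimal illustration, not the authors' pipeline. It assumes comments arrive as hypothetical `(user_id, post_id)` pairs, and `co_comment_edges` is an illustrative helper name. For every pair of users, it counts how many posts both commented on. It then keeps the pairs above the threshold, reading "more than 200" as strictly greater.

```python
from collections import defaultdict
from itertools import combinations


def co_comment_edges(comments, min_shared=200):
    """Build weighted ties between users who comment on the same posts.

    comments: iterable of (user_id, post_id) pairs (hypothetical input format).
    Returns {(user_a, user_b): shared_post_count} for pairs whose count is
    strictly greater than min_shared.
    """
    users_by_post = defaultdict(set)
    for user, post in comments:
        users_by_post[post].add(user)  # a set ignores repeat comments by one user

    shared = defaultdict(int)
    for users in users_by_post.values():
        # sorted() gives each unordered pair one canonical (a, b) key
        for a, b in combinations(sorted(users), 2):
            shared[(a, b)] += 1

    return {pair: n for pair, n in shared.items() if n > min_shared}
```

Enumerating pairs per post is quadratic in the number of commenters on that post, so very popular threads dominate the cost. At full PTT scale, a sparse user-by-post matrix product is a common alternative.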

Using the Louvain algorithm (Traag, 2015), a common method for identifying communities in social networks, we found five communities in our dataset. Each community is colored separately and forms a clear cluster, except for the teal community, which is more integrated. In particular, the yellow cluster is markedly separate from the others. Its members share substantial activity within their own community but much less with other communities. This suggests premeditated coordination rather than organic discussion, as the users would have to target the same posts with high frequency. Prior studies have shown that analyzing temporal patterns can provide insight into information operations. Specifically, overseas content farms often follow a regular cadence, posting content before peak hours in Taiwan on Twitter (Wang et al., 2020) and YouTube (Hsu & Lin, 2023).
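The text does not specify which Louvain implementation was used. The sketch below shows only the first, local-moving phase of Louvain. Each node repeatedly moves to the neighboring community with the largest modularity gain until no move improves modularity. The full algorithm then collapses communities into super-nodes and repeats, as in library implementations such as NetworkX's `louvain_communities`. The adjacency-dict input and the helper name are assumptions made for illustration.

```python
from collections import defaultdict


def louvain_local_moving(adj):
    """First (local-moving) phase of Louvain on an undirected weighted graph.

    adj maps every node to {neighbor: weight} and must be symmetric.
    Returns {node: community_label}.
    """
    two_m = sum(w for nbrs in adj.values() for w in nbrs.values())  # each edge counted twice
    community = {node: node for node in adj}  # start from singleton communities
    if two_m == 0:
        return community
    degree = {node: sum(nbrs.values()) for node, nbrs in adj.items()}
    total = dict(degree)  # summed degree of the nodes in each community

    moved = True
    while moved:
        moved = False
        for node in adj:
            old, k_i = community[node], degree[node]
            # Edge weight from `node` into each neighboring community.
            links = defaultdict(float)
            for nbr, w in adj[node].items():
                if nbr != node:
                    links[community[nbr]] += w
            total[old] -= k_i  # temporarily remove the node from its community
            # Modularity gain of inserting the node into a community (up to a factor 1/m).
            best = old
            best_gain = links.get(old, 0.0) - total[old] * k_i / two_m
            for comm, k_in in links.items():
                gain = k_in - total[comm] * k_i / two_m
                if gain > best_gain:  # strict > guarantees termination
                    best, best_gain = comm, gain
            total[best] += k_i
            community[node] = best
            moved = moved or best != old
    return community
```

On two triangles joined by a single edge, this phase separates the triangles into two communities.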

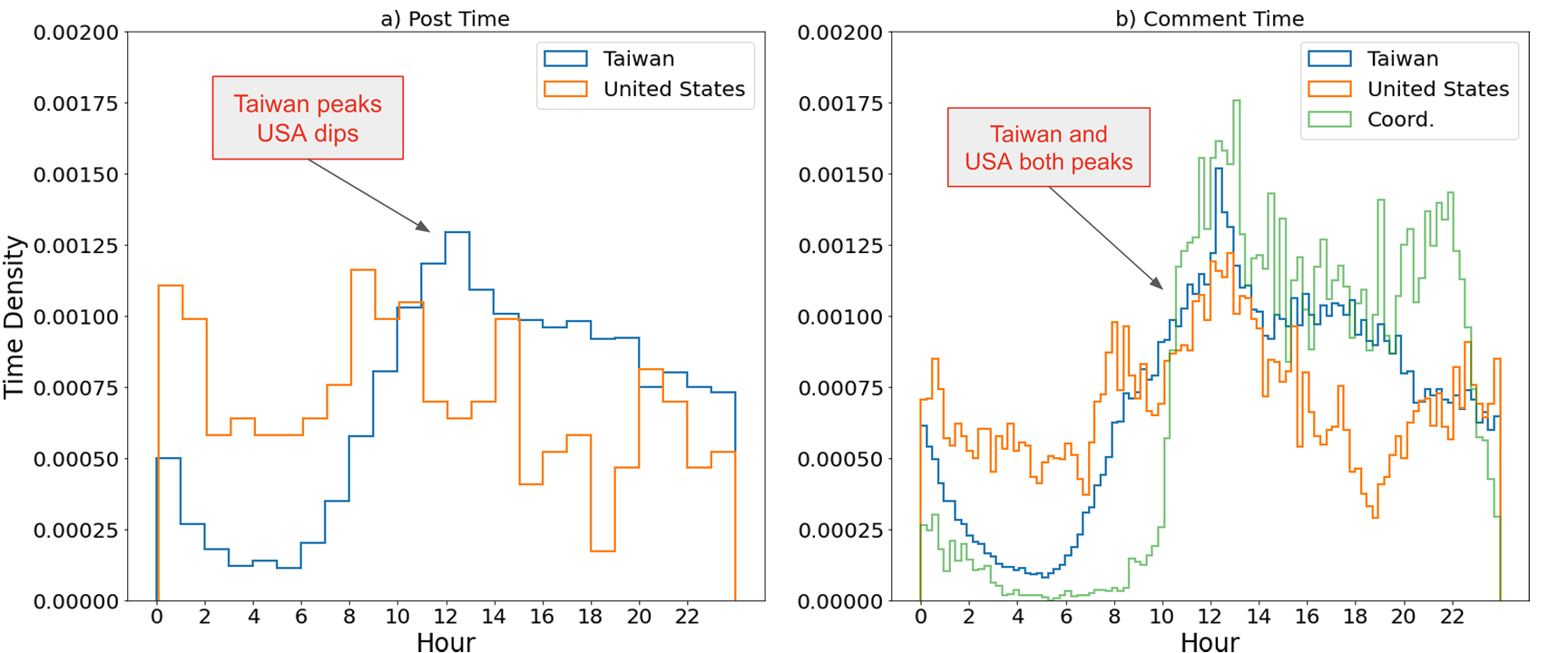

To better understand the temporal dynamics on PTT, we plotted the distribution of posts and comments over a 24-hour period. Specifically, we focused on the top two countries by volume: Taiwan and the United States. Figure 2, part a shows the time of posting. Taiwan’s activity increases from 6 a.m. until it peaks at noon (when people are on lunch break), then steadily declines into the night. In contrast, posts from the United States peak at midnight and 8 a.m. Taipei time, which correspond to around noon and 8 p.m. in New York (during daylight saving time), respectively. This provides an organic baseline for when we might expect people to post.
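The hour-of-day profiles can be built by converting each timestamp to Taipei time before binning. The sketch below is illustrative only. It assumes records are hypothetical `(country_code, timezone-aware datetime)` pairs, with the country taken from PTT's IP geolocation. It uses a fixed UTC+8 offset, which is safe because Taiwan does not observe daylight saving time.

```python
from datetime import datetime, timedelta, timezone

# Taiwan observes UTC+8 all year (no daylight saving time), so a fixed offset is safe.
TAIPEI = timezone(timedelta(hours=8))


def hourly_profile(records):
    """Count posts per Taipei hour of day (0-23) for each country.

    records: iterable of (country_code, timezone-aware datetime) pairs.
    """
    profiles = {}
    for country, ts in records:
        hour = ts.astimezone(TAIPEI).hour
        profiles.setdefault(country, [0] * 24)[hour] += 1
    return profiles


def peak_hours(profile):
    """Return every hour that ties for the maximum count."""
    top = max(profile)
    return [hour for hour, count in enumerate(profile) if count == top]


# Example: a lunchtime post from Taiwan, and a U.S.-IP post at Taipei midnight
# (12:00 in New York under daylight saving time, UTC-4).
example = hourly_profile([
    ("TW", datetime(2023, 6, 1, 4, 30, tzinfo=timezone.utc)),  # 12:30 Taipei
    ("US", datetime(2023, 6, 1, 16, 0, tzinfo=timezone.utc)),  # 00:00 Taipei
])
```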

However, in Figure 2, part b, the distribution for Taiwan (blue) remains unchanged, while the peak for the United States (orange) coincides with Taiwan’s. One explanation is that these users are responding to posts in Taiwan. Another is that users in Asia, potentially in China, are using VPNs to appear as if they are in the United States. This is consistent with a report by Meta Platforms that identified large numbers of CCP-operated Facebook accounts, which the company subsequently removed (Frenkel, 2023).

More curious is the activity of the yellow group from Figure 1. Its temporal pattern (green) shows a sharp increase in activity at 10 a.m., which then coincides with both the Taiwan peak (hour 12) and the U.S. peak (hour 22). This sudden burst of activity is consistent with prior findings on content farms from China, where posting occurs when content farm workers regularly clock in for work (Wang et al., 2020). While it is difficult to prove the authenticity of these accounts, their structural and temporal patterns suggest coordination. Figure A2 in the Appendix provides further evidence of coordination through the frequency distribution of co-occurrence counts. For the U.S.-based group, a distribution resembling a power law appears, as is common in social systems (Adamic & Huberman, 2000; Chang et al., 2023; Clauset et al., 2009). In contrast, the coordinated group has a considerably heavier tail, with a secondary, “unnatural” peak at around 15 co-occurrences.
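One simple way to surface a secondary bump like the one at around 15 co-occurrences is sketched below. It tabulates how many user pairs share exactly k posts, then flags interior local maxima, compared with the neighboring observed counts. This is a heuristic chosen for illustration, not the authors' procedure. A smoothly decaying, power-law-like distribution has no such peaks. The helper names and the input (one shared-post count per user pair) are assumptions.

```python
from collections import Counter


def frequency_distribution(pair_counts):
    """Map each co-occurrence count k to the number of user pairs sharing exactly k posts."""
    return dict(sorted(Counter(pair_counts).items()))


def interior_peaks(freq):
    """Counts whose frequency exceeds that of both neighboring observed counts.

    freq must be sorted by k, as returned by frequency_distribution.
    """
    ks = list(freq)
    return [
        ks[i]
        for i in range(1, len(ks) - 1)
        if freq[ks[i]] > freq[ks[i - 1]] and freq[ks[i]] > freq[ks[i + 1]]
    ]
```

Neighbors are the adjacent observed counts, so gaps in k are skipped rather than treated as zeros. This keeps a single isolated outlier from registering as a peak merely because its empty neighbors count as zero.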

To better understand the content of these groups, Table A1 summarizes the comments of each group and their originating posts, using a large language model for abstractive summarization (see Methods). We report the top points for comments and posts in Table A1. The coordinated community focuses on businessman Terry Gou, who considered running as a blue-leaning independent. The comments attack the incumbent DPP and its stance on foreign policy. One popular post features President Tsai’s controversial meeting with Kevin McCarthy, then Speaker of the U.S. House of Representatives. When a journalist asked McCarthy if he would “invite President Tsai to Congress… or… Washington,” McCarthy replied, “I don’t have any invitation out there right now. Today we were able to meet her as she transits through America, I thought that was very productive.” While this response was positively framed, the post’s title translates as “McCarthy will not invite Tsai to the United States” (Doomdied, 2023). This reflects a common misinformation tactic in which statements are intentionally distorted to produce negative framings of a particular candidate.

Comments from U.S. IP addresses posted between 11 a.m. and 2 p.m. focus on a potential alliance between the KMT and TPP. These posts are KMT-leaning and criticize both Lai and Ko, two candidates opposing the KMT. Some users argue that while the Chinese Communist Party (CCP) is a negative force, the United States is not automatically a positive one. In their view, it does not explicitly support Taiwan’s international recognition or economic integration. In general, both posts and comments express that Taiwan should not rely too heavily on either China or the United States. This echoes the trust element of US-skepticism, rooted in the historical experience between the ROC and the United States.

Both the U.S.-based and coordinated groups appear to be blue-leaning audiences. What differentiates them is clear evidence of misinformation, through inaccurate framing, in the coordinated group. While US-skepticism may be a valid political stance, the democratic deliberative process is at risk if the ambient information environment contains inaccurate information. US-skepticism is also a case where stance and truth value are often conflated, which may influence voter deliberation.

Finding 3: Misinformation is most common among pan-Blue and ROC identity groups on social media and is spread through visual media. These groups share themes with conspiracy groups in Western countries.

Lastly, we considered the groups in which misinformation is common and the way misinformation is delivered. To do so, we queried CrowdTangle using the titles and links from the CoFacts dataset specific to US-Taiwan relations. This yielded 4,632 posts from public groups. Table 2 shows the groups ranked by the total number of misinformation articles identified.

These groups fall into two types. The first consists of pan-Blue media outlets (CTI News), politician support groups (Wang Yo-Zeng Support Group), and ROC national identity groups (I’m an ROC Fan). The second type is less expected: groups that espouse freedom of speech (Support CTI News and Free Speech) and truth-seeking (Truth Engineering Taiwan Graduate School), themes often associated with conspiracy communities. These themes are reminiscent of those in the West, such as the rhetoric around “fake news” and “truthers,” and paint a transnational picture of how misinformation coalesces. The group with the second-most misinformation posts is Trump for the World, which supports a politician known to court conspiracy theory groups such as QAnon. These groups also carry the “capacity” element of US-skepticism, implying that the United States is troubled by its domestic issues and is not a reliable partner to Taiwan. Furthermore, these groups have sizable followings, ranging from 8,279 to 43,481 members. We report the mean because membership fluctuated over our one-year period.

| Group name (Chinese) | English translation | Misinformation posts identified | Mean number of members |
|---|---|---|---|
| 天怒人怨 官逼民反 | Civil Revolt | 77 | 43,481.23 |
| 神使川普建喜樂臺港 | Trump for the World | 64 | 13,531.97 |
| 快樂志工群 | Happy Volunteer Group | 55 | 23,642.05 |
| 王又正後援總會 | Wang Yo-Zeng Support Group | 54 | 27,846.6 |
| 「力挺中天新聞」♥️捍衞新聞言論自由㊗️🈵️ | Support CTI News and Free Speech | 53 | 3,207.782 |
| 真相工程台灣研究所/以色列恐怖份子/美國深層政府/疫苗副作用/斷食療法/新聞時事爆料討論/醫療靈媒/營養療法/抗癌自然療法/大藥廠FDA內幕/假猶太人 | Truth Engineering Taiwan Graduate School / Israel Terrorism / USA Deep Government / Fasting / News Discussion | 42 | 24,204.07 |
| 我是中華民國粉 | I’m an ROC Fan | 42 | 7,5217.38 |
| 彭文正 “加油 讚” | Peng Wen-Jeng Support | 40 | 87,088.3 |
| 捍衛中華民國 | Protecting ROC | 39 | 8,278.644 |
| 中國特色社會主義 中華民族偉大復興 | Socialism with Chinese Characteristics, Great Rejuvenation of the Chinese Nation | 38 | 10,577.66 |

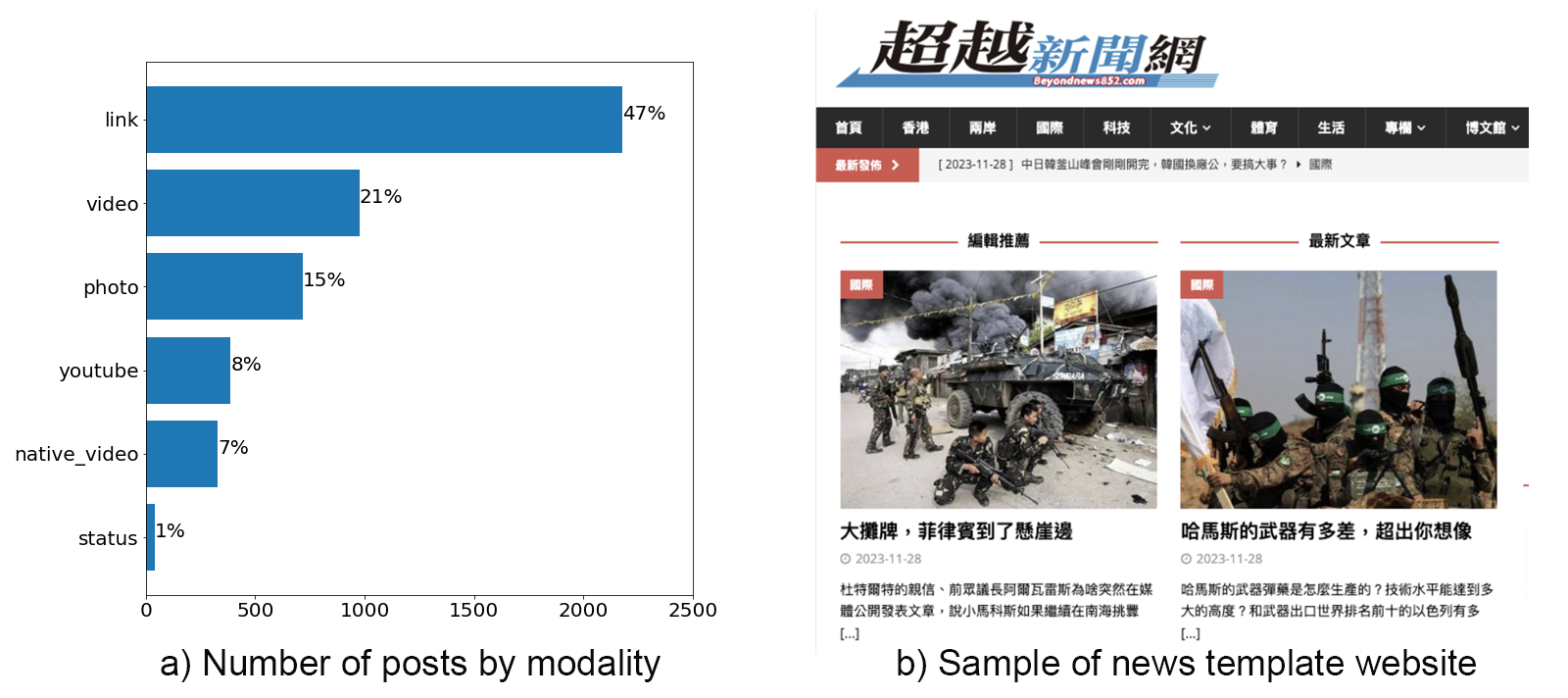

Lastly, we found that the majority of misinformation contains some form of multimedia, such as video (36%) or photos (15%), as shown in Figure 3, part a. Only 1% are plain status updates. This may partly reflect CrowdTangle not surfacing results from ordinary users, but the ratio of multimedia to text is nonetheless high. This aligns with extant studies showing the growth of multimodal misinformation (Micallef et al., 2022). It also aligns with users optimizing for platform algorithms (Chang et al., 2022; Dhanesh et al., 2022; Pulley, 2020), since posts with multimedia tend to perform better than text-only posts.

Moreover, 47% contain a URL. Figure 3, part b shows one of the top domains containing misinformation (beyondnews852.com) after filtering out common domains such as YouTube. The site is named “Beyond News Net” and is visually formatted like a legitimate news site to increase the perceived credibility of information (Flanagin & Metzger, 2007; Wölker & Powell, 2021). The ability to rapidly generate legitimate-looking news sites as a tactic for misinformation may become a challenge for both media literacy and technical approaches to fight misinformation.
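The domain ranking behind Figure 3, part b can be approximated by counting link hosts after removing common platforms. This is a minimal sketch. The exclusion set is hypothetical, since the text says only that common domains such as YouTube were filtered out. Subdomains such as `m.youtube.com` would need extra handling.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical exclusion list; only YouTube is named in the text.
COMMON_DOMAINS = {"youtube.com", "youtu.be", "facebook.com"}


def top_domains(urls, exclude=COMMON_DOMAINS, n=10):
    """Count link domains after dropping a leading 'www.' and excluded platforms."""
    counts = Counter()
    for url in urls:
        host = urlparse(url).hostname or ""  # .hostname is already lowercased
        if host.startswith("www."):
            host = host[len("www."):]
        if host and host not in exclude:
            counts[host] += 1
    return counts.most_common(n)
```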

Methods

We used three misinformation datasets (Line, Facebook, and PTT) with dates between January 12, 2023, and November 10, 2023. The CoFacts dataset includes 140,193 received messages, of which 96,432 have been labeled as misinformation, facts, opinion, or not relevant; 41,564 of these entries are misinformation. The CoFacts dataset is not only methodologically useful but also exemplifies a crowdsourced approach to fact-checking misinformation as an actual platform intervention. Moreover, it is public and transparent, allowing for replicability. Using a subset of articles and posts containing misinformation, we trained a topic model using BERTopic (Grootendorst, 2022). At a high level, BERTopic involves five steps: (1) extracting embeddings with a sentence transformer, (2) reducing their dimensionality, (3) clustering the reduced embeddings, (4) tokenizing topics, and (5) creating topic representations.

We conducted several trials, experimenting with parameters such as different sentence transformer models and minimum cluster sizes for the HDBSCAN clustering algorithm. The model used to extract topics for this paper used paraphrase-multilingual-MiniLM-L12-v2 as the sentence embedding model (Reimers & Gurevych, 2019), a minimum cluster size of 80 for the clustering algorithm, and tokenize_zh as the tokenizer. Our model yielded 34 topics. We also trained a model based on latent Dirichlet allocation (LDA) (Blei et al., 2003) but found the BERTopic results to be more interpretable. We then labeled all messages to indicate whether they referenced the election and ranked the topics by their election-related percentage to measure electoral salience. For our subsequent analysis, we focused on topic 3 (see Table 1), which captures general discourse about the relations between the United States, China, and Taiwan.
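The salience ranking can be illustrated with a short sketch, which is not the authors' exact procedure. It assumes each message already carries a topic ID from the topic model. BERTopic marks outliers as topic -1, and these are skipped here. As a simplifying assumption, a message is flagged as election-related if it contains the keyword 選舉 ("election"). `rank_by_election_salience` is a hypothetical helper name.

```python
from collections import defaultdict

ELECTION_KEYWORD = "選舉"  # "election"; using a single keyword flag is an assumption


def rank_by_election_salience(docs, topics, keyword=ELECTION_KEYWORD):
    """Rank topics by the share of their messages that mention the keyword.

    docs and topics are parallel sequences: topics[i] is the topic ID of docs[i].
    Returns [(topic_id, share), ...] sorted from most to least election-related.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for doc, topic in zip(docs, topics):
        if topic == -1:  # BERTopic labels outlier documents as topic -1
            continue
        totals[topic] += 1
        if keyword in doc:
            hits[topic] += 1
    shares = {topic: hits[topic] / totals[topic] for topic in totals}
    return sorted(shares.items(), key=lambda item: item[1], reverse=True)
```

The resulting share per topic corresponds to the "% Election-related" column in Table 1.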

The Facebook dataset was extracted using CrowdTangle. We queried posts containing the links and headlines from topic 3 and cross-referenced these links and headlines with a general election-related dataset of 911,510 posts. This yielded a total of 4,632 posts shared in public Facebook groups, with 227,125 engagements. Due to privacy concerns, it is not possible to obtain posts from users' own timelines, private groups, or messages. However, public groups are a good proxy for general discourse and, through their group names, also signal ethnic or partisan affiliations (Chang & Fang, 2024). In other words, while CoFacts provides the misinformation narratives, Facebook public groups give insight into the targets of misinformation.
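The Facebook step amounts to matching posts against the links and headlines found in topic 3, summing their engagements, and keeping group names as a signal of who is targeted. The sketch assumes a hypothetical, simplified post record (FbPost); actual CrowdTangle exports use their own field names, and the link normalization shown is likewise an assumption.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class FbPost:
    """Hypothetical, simplified record for one public-group post."""
    group_name: str
    link: str
    headline: str
    engagements: int


def normalize_link(url: str) -> str:
    """Assumed normalization: trim whitespace and a trailing slash."""
    return url.strip().rstrip("/")


def match_topic_posts(
    posts: Iterable[FbPost],
    topic_links: Iterable[str],
    topic_headlines: Iterable[str],
) -> Tuple[List[FbPost], int, Counter]:
    """Keep posts sharing a topic link or headline; return them with totals."""
    links = {normalize_link(u) for u in topic_links}
    headlines = {h.strip() for h in topic_headlines}
    matched = [
        p for p in posts
        if normalize_link(p.link) in links or p.headline.strip() in headlines
    ]
    total_engagements = sum(p.engagements for p in matched)
    # Posts per group name, a rough signal of which communities are targeted.
    by_group = Counter(p.group_name for p in matched)
    return matched, total_engagements, by_group
```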

Lastly, we scraped PTT using Selenium. Commonly known as the Reddit of Taiwan, PTT is unique in that it displays the IP address of each poster, although this can be obscured by proxy farms or VPNs. We scraped all posts that referred to both the United States and the election, which yielded 22,576 posts and 1,983,396 comments, each with the IP address provided by PTT and the time of posting. We broadened the scope of this analysis beyond topic 3 because we were interested in the general discourse directly related to the United States and in the geospatial and temporal patterns that arose.
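Once posts and comments are scraped, geospatial and temporal patterns come from grouping records by location and day. The sketch assumes a post footer of the form "來自: <IP> (<location>)"; that format and the PTT_FOOTER_RE pattern are assumptions to verify against the scraped pages, and the Selenium scraping itself is omitted.

```python
import re
from collections import Counter
from datetime import datetime
from typing import Iterable, Optional, Tuple

# Assumed footer format, e.g. "※ 發信站: 批踢踢實業坊(ptt.cc), 來自: 1.2.3.4 (臺灣)".
PTT_FOOTER_RE = re.compile(r"來自:\s*([0-9.]+)\s*\(([^)]+)\)")


def parse_footer(footer: str) -> Optional[Tuple[str, str]]:
    """Return (ip, location) from a post footer, or None if absent."""
    match = PTT_FOOTER_RE.search(footer)
    return (match.group(1), match.group(2)) if match else None


def daily_counts_by_location(records: Iterable[Tuple[str, str]]) -> Counter:
    """Count records per (location, calendar day).

    records yields (location, ISO-8601 timestamp) pairs.
    """
    counts: Counter = Counter()
    for location, timestamp in records:
        day = datetime.fromisoformat(timestamp).date()
        counts[(location, day)] += 1
    return counts
```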

Given the large amount of data, we could have taken three general approaches: local extractive summarization with LLMs, local abstractive summarization with LLMs, and server-based abstractive summarization (such as ChatGPT). Local extractive summarization embeds each input sentence and then outputs the five most representative sentences. However, this approach is often too coarse: it returns the sentences with the highest centrality but does not summarize general themes across all the comments or posts. Abstractive summarization, by contrast, ingests many documents and summarizes across their entire context, which provides a more generalized characterization of key themes. Its primary bottleneck is input size: large language models can only ingest a limited number of tokens (roughly, words or word pieces), which must also be held in memory. This constraint applied to our project, since we were summarizing more than 10,000 posts.
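The extractive baseline can be sketched as centrality ranking over sentence embeddings: score each sentence by its summed cosine similarity to all others and keep the top k (five in the text). This is a simplified degree-centrality variant (graph methods such as LexRank instead run PageRank over a similarity graph), and the embeddings are assumed to be precomputed by a sentence transformer.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity, defined as 0.0 when either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def extractive_summary(
    sentences: List[str], embeddings: List[Sequence[float]], k: int = 5
) -> List[str]:
    """Return the k most central sentences, in their original order."""
    n = len(sentences)
    # Centrality: total similarity of each sentence to every other sentence.
    centrality = [
        sum(cosine(embeddings[i], embeddings[j]) for j in range(n) if j != i)
        for i in range(n)
    ]
    top = sorted(range(n), key=lambda i: (-centrality[i], i))[:k]
    return [sentences[i] for i in sorted(top)]
```

Because every output is an original sentence, this approach inherits the coarseness described above: it surfaces central sentences rather than themes synthesized across documents.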

To circumvent these issues, we sampled the maximum number of posts or comments that could fit within 16,000 tokens and then queried the ChatGPT API with this sample. This produced a summary based on a probabilistic sample of the posts and comments.
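The token-budget sampling can be sketched as shuffling the posts and greedily keeping each one that still fits under the budget. The count_tokens default (character count) is a crude stand-in assumption; a real pipeline would count with the target model's tokenizer. The prompt wording and the API call are omitted.

```python
import random
from typing import Callable, List, Sequence, Tuple


def sample_within_budget(
    posts: Sequence[str],
    budget: int = 16_000,
    count_tokens: Callable[[str], int] = len,
    seed: int = 0,
) -> Tuple[List[str], int]:
    """Randomly order posts, then keep every post that still fits the budget.

    Returns the sampled posts and the number of tokens they use.
    """
    rng = random.Random(seed)  # seeded so the sample is reproducible
    order = list(range(len(posts)))
    rng.shuffle(order)
    sample, used = [], 0
    for i in order:
        cost = count_tokens(posts[i])
        if used + cost <= budget:
            sample.append(posts[i])
            used += cost
        # Posts that do not fit are skipped, so shorter ones can still be added.
    return sample, used
```

Skipping posts that overflow, rather than stopping at the first one, packs more posts into the budget but slightly favors shorter posts; in practice part of the budget would also be reserved for the instruction prompt and the model's response.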

Bibliography

Achen, C. H., & Wang, T. Y. (2017). The Taiwan voter. University of Michigan Press. https://doi.org/10.3998/mpub.9375036

Achen, C. H., & Wang, T. Y. (2019). Declining voter turnout in Taiwan: A generational effect? Electoral Studies, 58, 113–124. https://doi.org/10.1016/j.electstud.2018.12.011

Adamic, L. A., & Huberman, B. A. (2000). Power-law distribution of the World Wide Web. Science, 287(5461), 2115. https://doi.org/10.1126/science.287.5461.2115a

Arechar, A. A., Allen, J., Berinsky, A. J., Cole, R., Epstein, Z., Garimella, K., Gully, A., Lu, J. G., Ross, R. M., Stagnaro, M. N., Zhang, Y., Pennycook, G., & Rand, D. G. (2023). Understanding and combatting misinformation across 16 countries on six continents. Nature Human Behaviour, 7(9), 1502–1513. https://doi.org/10.1038/s41562-023-01641-6

Blei, D. M., Ng, A. Y., & Jordan, M. I. (2003). Latent Dirichlet allocation. Journal of Machine Learning Research, 3, 993–1022. https://dl.acm.org/doi/10.5555/944919.944937

Chang, H.-C. H. (2023). Nick Monaco and Samuel Woolley, Bots. [Review of the book Bots, by N. Monaco & S. Woolley]. International Journal of Communication, 17, 3.

Chang, H.-C. H., & Fang, Y. S. (2024). The 2024 Taiwanese Presidential Election on social media: Identity, policy, and affective virality. PNAS Nexus, 3(4). https://doi.org/10.1093/pnasnexus/pgae130

Chang, H.-C. H., & Ferrara, E. (2022). Comparative analysis of social bots and humans during the COVID-19 pandemic. Journal of Computational Social Science, 5, 1409–1425. https://doi.org/10.1007/s42001-022-00173-9

Chang, H.-C. H., Haider, S., & Ferrara, E. (2021). Digital civic participation and misinformation during the 2020 Taiwanese presidential election. Media and Communication, 9(1), 144–157. https://doi.org/10.17645/mac.v9i1.3405

Chang, H.-C. H., Harrington, B., Fu, F., & Rockmore, D. N. (2023). Complex systems of secrecy: The offshore networks of oligarchs. PNAS Nexus, 2(4), pgad051. https://doi.org/10.1093/pnasnexus/pgad112

Chang, H.-C. H., Richardson, A., & Ferrara, E. (2022). #JusticeforGeorgeFloyd: How Instagram facilitated the 2020 Black Lives Matter protests. PLOS ONE, 17(12), e0277864. https://doi.org/10.1371/journal.pone.0277864

China is flooding Taiwan with disinformation. (2023, September 26). The Economist. https://www.economist.com/asia/2023/09/26/china-is-flooding-taiwan-with-disinformation

Clauset, A., Shalizi, C. R., & Newman, M. E. J. (2009). Power-law distributions in empirical data. SIAM Review, 51(4), 661–703. https://doi.org/10.1137/070710111

Dhanesh, G., Duthler, G., & Li, K. (2022). Social media engagement with organization-generated content: Role of visuals in enhancing public engagement with organizations on Facebook and Instagram. Public Relations Review, 48(2), 102174. https://doi.org/10.1016/j.pubrev.2022.102174

Doomdied. (2023, April 6). [Hot Gossip] McCarthy: Will not invite President Tsai to congress or DC. PTT. https://disp.cc/b/Gossiping/fXNG

Enders, A., Farhart, C., Miller, J., Uscinski, J., Saunders, K., & Drochon, H. (2022). Are Republicans and conservatives more likely to believe conspiracy theories? Political Behavior, 45, 2001–2024. https://doi.org/10.1007/s11109-022-09812-3

Ferrara, E., Chang, H., Chen, E., Muric, G., & Patel, J. (2020). Characterizing social media manipulation in the 2020 US presidential election. First Monday, 25(11). https://doi.org/10.5210/fm.v25i11.11431

Flanagin, A. J., & Metzger, M. J. (2007). The role of site features, user attributes, and information verification behaviors on the perceived credibility of web-based information. New Media & Society, 9(2), 319–342. https://doi.org/10.1177/1461444807075015

Freedom House. (2022). Freedom index by country. https://freedomhouse.org/countries/freedom-world/scores

Frenkel, S. (2023, August 29). Meta’s “biggest single takedown” removes Chinese influence campaign. The New York Times. https://www.nytimes.com/2023/08/29/technology/meta-china-influence-campaign.html

Galeano, E. (1997). Open veins of Latin America: Five centuries of the pillage of a continent. NYU Press.

George, L., & Sumathy, P. (2023). An integrated clustering and BERT framework for improved topic modeling. International Journal of Information Technology, 15(4), 2187–2195. https://doi.org/10.1007/s41870-023-01268-w

Grootendorst, M. (2022). BERTopic: Neural topic modeling with a class-based TF-IDF procedure. arXiv. https://doi.org/10.48550/arXiv.2203.05794

Haime, J. (2022, September 19). Taiwan’s amateur fact-checkers wage war on fake news from China. Al Jazeera. https://www.aljazeera.com/economy/2022/9/19/taiwan

Harambam, J. (2020). Contemporary conspiracy culture: Truth and knowledge in an era of epistemic instability. Routledge.

Hsu, L.-Y., & Lin, S.-H. (2023). Party identification and YouTube usage patterns: An exploratory analysis. Taiwan Politics. https://taiwanpolitics.org/article/88117-party-identification-and-youtube-usage-patterns-an-exploratory-analysis

Hsu, T., Chien, A. C., & Myers, S. L. (2023, November 26). Can Taiwan continue to fight off Chinese disinformation? The New York Times. https://www.nytimes.com/2023/11/26/business/media/taiwan-china-disinformation.html

Jerit, J., & Zhao, Y. (2020). Political misinformation. Annual Review of Political Science, 23(1), 77–94. https://doi.org/10.1146/annurev-polisci-050718-032814

Konkes, C., & Lester, L. (2017). Incomplete knowledge, rumour and truth seeking. Journalism Studies, 18(7), 826–844. https://doi.org/10.1080/1461670X.2015.1089182

Martel, C., Allen, J., Pennycook, G., & Rand, D. G. (2023). Crowds can effectively identify misinformation at scale. Perspectives on Psychological Science, 19(2), 316–319. https://doi.org/10.1177/17456916231190388

Micallef, N., Sandoval-Castañeda, M., Cohen, A., Ahamad, M., Kumar, S., & Memon, N. (2022). Cross-platform multimodal misinformation: Taxonomy, characteristics and detection for textual posts and videos. Proceedings of the International AAAI Conference on Web and Social Media, 16, 651–662. https://doi.org/10.1609/icwsm.v16i1.19323

Microsoft Threat Intelligence. (2024). Same targets, new playbooks: East Asia threat actors employ unique methods. Microsoft Threat Analysis Center. https://www.microsoft.com/en-us/security/security-insider/intelligence-reports/east-asia-threat-actors-employ-unique-methods

Monaco, N., & Woolley, S. (2022). Bots. John Wiley & Sons.

Nguyen, D. Q., Vu, T., & Nguyen, A. T. (2020). BERTweet: A pre-trained language model for English Tweets. arXiv. https://doi.org/10.48550/arXiv.2005.10200

Pulley, P. G. (2020). Increase engagement and learning: Blend in the visuals, memes, and GIFs for online content. In Emerging Techniques and Applications for Blended Learning in K-20 Classrooms (pp. 137–147). IGI Global.

Reimers, N., & Gurevych, I. (2019). Sentence-BERT: Sentence embeddings using Siamese BERT-networks. arXiv. https://doi.org/10.48550/arXiv.1908.10084

Traag, V. A. (2015). Faster unfolding of communities: Speeding up the Louvain algorithm. Physical Review. E, Statistical, Nonlinear, and Soft Matter Physics, 92(3), 032801. https://doi.org/10.1103/PhysRevE.92.032801

Walker, C. (2018). What Is “Sharp Power”? Journal of Democracy, 29(3), 9–23. https://doi.org/10.1353/jod.2018.0041

Wang, A. H.-E., Lee, M.-C., Wu, M.-H., & Shen, P. (2020). Influencing overseas Chinese by tweets: Text-images as the key tactic of Chinese propaganda. Journal of Computational Social Science, 3(2), 469–486. https://doi.org/10.1007/s42001-020-00091-8

Welch, D. (2024, January 19). Taiwan’s election: 2024’s canary in the coal mine for disinformation against democracy. Alliance For Securing Democracy. https://securingdemocracy.gmfus.org/taiwans-election-2024s-canary-in-the-coal-mine-for-disinformation-against-democracy/

Wölker, A., & Powell, T. E. (2021). Algorithms in the newsroom? News readers’ perceived credibility and selection of automated journalism. Journalism, 22(1), 86–103. https://doi.org/10.1177/1464884918757072

Wu, A. (2023). To reassure Taiwan and deter China, the United States should learn from history. Bulletin of the Atomic Scientists, 79(2), 72–79. https://doi.org/10.1080/00963402.2023.2178174

Wu, C. L., & Lin, A. M. W. (2019). The certainty of uncertainty: Taiwanese public opinion on US–Taiwan relations in the early Trump presidency. World Affairs, 182(4), 350–369.

Funding

No funding has been received to conduct this research.

Competing Interests

The authors declare no competing interests.

Ethics

No human subjects were included in this study.

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

All materials needed to replicate this study are available via the Harvard Dataverse: https://doi.org/10.7910/DVN/5SPGDY. The CoFacts database is available on Hugging Face, and Facebook data are available via CrowdTangle, subject to the regulations of Meta Platforms.

Acknowledgements

H. C. would like to thank Brendan Nyhan, Sharanya Majumder, John Carey, and Adrian Rauchfleisch for their comments, and acknowledges the Dartmouth Burke Research Initiation Award.

Authorship

All authors contributed equally.