Peer Reviewed

Not so different after all? Antecedents of believing in misinformation and conspiracy theories on COVID-19


Misinformation and conspiracy theories are often grouped together, but do people believe in them for the same reasons? This study examines how these conceptually distinct forms of deceptive content are processed and believed using the COVID-19 pandemic as context. Surprisingly, despite their theoretical differences, belief in both is predicted by similar psychological factors—particularly conspiracy mentality and the perception that truth is politically constructed—suggesting that underlying distrust in institutions may outweigh differences in types of deceptive content in shaping susceptibility.

Research Questions

- Are beliefs in misinformation and conspiracy theories on COVID-19 driven by different epistemic, ideological, and social factors?

- Is believing in different kinds of deceptive content associated with different patterns of news media use?

Essay Summary

- The research questions were answered using a representative cross-sectional survey in Germany (March 2024, N = 2,962). The sample was stratified by age, gender, and region.

- Measures included belief in conspiracy theories and misinformation on COVID-19; cognitive, personality, and intergroup-related predictors (intuitive thinking, attitude that truth is political, actively open-minded thinking, political orientation, social dominance orientation, conspiracy mentality); media use (legacy, alternative, and social); and control variables (gender, age, education, religiosity, trust in media, science, and politics).

- We found that having a conspiratorial mindset and believing that truth is shaped by politics are strong predictors of belief in conspiracy theories and misinformation. Both reflect a general distrust of the connection between politics and knowledge.

- The levels of intuitive and actively open-minded thinking were not connected to believing deceptive content.

- Future research should place greater emphasis than before on interventions aimed at reducing distrust in institutions and enhancing understanding of how knowledge is produced in democratic societies.

Implications

In today’s media landscape, deceptive information poses a major societal challenge, shaping cognition and behavior (e.g., Allen et al., 2024; Van Der Linden, 2022). Deceptive information can take various forms of false or inaccurate content, spread either intentionally or unintentionally, such as fake news, misinformation, disinformation, propaganda, pseudoscience, and conspiracy theories (Chen et al., 2023; Zeng, 2021).

Much of the existing research has focused on distinguishing types of deceptive information based on the intent of the creators—specifically, whether false information is disseminated deliberately or due to a lack of knowledge about its veracity. This has led to the common distinction between disinformation and misinformation. Misinformation departs from ground truths (Adams et al., 2023) and includes false claims refuted by evidence (Barua et al., 2020, p. 2), but without deceptive intent (Wardle & Derakhshan, 2017). In contrast, disinformation refers to “information that is false and deliberately created to harm a person, social group, organization or country” (Wardle & Derakhshan, 2017, p. 20). Thus, this distinction primarily concerns the authors of the information and their intentions.

However, for recipients, this intent to deceive may not play any role—or may not be immediately discernible. Instead, a second dimension becomes important: the factuality of the information (Möller et al., 2020; Stubenvoll, 2022; Tandoc et al., 2018), that is, its epistemic foundation or the epistemic justification it provides. In terms of this dimension, different types of deceptive content can also be distinguished, and initial attempts have been made to classify them along a continuum ranging from factually accurate to partially false to completely fabricated (Möller et al., 2020). However, this continuum primarily refers to the truthfulness of the content rather than the broader epistemic approach behind it.

The present study investigates whether deceptive content with different epistemic approaches is believed by different groups of people. The focus is not solely on the factuality of the information but on the type of epistemic justifications offered by the content. Specifically, it examines whether individuals respond differently to deceptive content that provides comprehensive, difficult-to-falsify explanations for events compared to content that consists of isolated, more easily verifiable claims.

To compare different types of deceptive content, this study focuses on misinformation and conspiracy theories. While these two concepts are often treated separately in the literature, their boundaries are not always clear-cut. Nevertheless, important distinctions exist, and these may give rise to different explanatory patterns.

In terms of epistemic foundations, conspiracy theories generally have a broader scope than misinformation (Egelhofer & Lecheler, 2019; Wimmer, 2025). While misinformation typically refers to single false claims, conspiracy theories explain events through broader, overarching narratives. At the same time, individual pieces of misinformation can be integral parts of conspiracy theories, which makes their relationship more entangled than neatly distinct (Egelhofer & Lecheler, 2019). In terms of factuality, conspiracy theories are less clearly false than misinformation, as some conspiracy theories, such as the Watergate scandal, have been proven true (Peters, 2021; Schatto-Eckrodt & Frischlich, 2024). Moreover, conspiracy theories often draw on correct information but reframe it within a different epistemic justification context (Wimmer, 2025). Thus, conspiracy theories rely on a mix of correct and false information, whereas misinformation is, by definition, false and “unsupported by the best available evidence” (Flynn et al., 2017, p. 129).

These differences in epistemic justification strategies suggest that the two types of deceptive content may appeal to different groups of people. Understanding these distinctions can help determine whether a universal approach to countering deceptive content is feasible or whether tailored interventions are necessary for different audiences. However, both types of deceptive content also share conceptual similarities. While research has examined factors driving belief in such content, such as message features, social influences, and individual traits (e.g., Douglas et al., 2019; Pennycook & Rand, 2021), the field still lacks a thorough comparison of the distinct psychological bases of beliefs in deceptive content (Douglas & Sutton, 2023; Lobato et al., 2014). The current study, therefore, aims to identify similarities and differences between believers in conspiracy theories and believers in misinformation, two types of deceptive content.

The context chosen for this study is the COVID-19 pandemic. It generated what has been described as an “infodemic”—a flood of deceptive content spreading rapidly across media channels (Wardle & Derakhshan, 2017). The pandemic provides a particularly suitable setting for investigating the factors that contribute to belief in conspiracy theories and misinformation for several reasons. First, during this period, many people were exposed to deceptive information (van der Linden, 2022), increasing the likelihood that a sample would include believers in both types of content. Second, the (mis)information people received from various sources about COVID-19 had direct consequences for everyday behavior (Barua et al., 2020), making it especially important to study the factors shaping such beliefs.

Factors associated with conspiracy theory and misinformation beliefs

When examining factors associated with belief in conspiracy theories and misinformation, this study places particular emphasis on epistemic attitudes and factors, building on the different epistemic offerings of these two types of content. Accordingly, relevant belief predictors relate to how individuals evaluate evidence, understand truth, and position themselves socially (Hornsey et al., 2022).

At the individual level, cognitive styles are crucial. Intuitive thinking (favoring quick, heuristic-based judgments) heightens susceptibility to both conspiracy theory and misinformation beliefs, though more strongly for conspiracy theories because of the simplified explanations this type of content offers (Epstein et al., 1996; Garrett & Weeks, 2017; Newton et al., 2023). Misinformation beliefs also rise when intuitive thinkers neglect accuracy and sources (Bronstein et al., 2019). While “faith in intuition” increases belief in both types of content, actively open-minded thinking, such as willingness to consider new evidence, acts as a protective factor (Roozenbeek et al., 2022). As a third cognitive factor, the belief that truth is politically constructed is linked to both conspiracy theory and misinformation beliefs, particularly during politicized events such as COVID-19 (Zhou et al., 2024).

At the intergroup level, political ideology plays a key role. Conspiracy theory beliefs are more common among ideological extremes (Imhoff et al., 2022), especially on the right, due to traits such as ambiguity intolerance and a need for order (Van Der Linden et al., 2021). Misinformation beliefs are also influenced by partisan alignment, with conservatives somewhat more prone to belief (Nyhan & Reifler, 2010). Conspiracy theory beliefs are further associated with social dominance orientation (Dyrendal et al., 2021) and conspiracy mentality—the tendency to view societal outcomes as driven by malevolent elites (Imhoff & Bruder, 2014). This mindset strongly predicts conspiracy theory beliefs (Wood et al., 2012) and, to a lesser degree, misinformation beliefs (Landrum & Olshansky, 2019).

Media use and conspiracy theory and misinformation beliefs

Research on media use and misperceptions suggests that belief in conspiracy theories and misinformation is linked to greater use of social media (Schäfer et al., 2022; Wu et al., 2023; Ziegele et al., 2022) and alternative media (Weeks et al., 2023; Ziegele et al., 2022). While some findings suggest reduced legacy media use among conspiracy theory believers (De León et al., 2024; Meirick, 2023), others report mixed results (Schäfer et al., 2022).

Contribution and implications

Although there are some interventions against deceptive content that target specific types, such as conspiracy theories (Costello et al., 2024) and misinformation, there remains a lack of research connecting the underlying drivers of belief in deceptive content with interventions aimed at reducing these beliefs (Ziemer & Rothmund, 2022). Based on the results of this study, I outline which interventions may help counter belief in misinformation, conspiracy theories, or both, and propose future directions for studying their effectiveness.

Many interventions against deceptive content aim to strengthen individuals’ competence in evaluating information and processing it accurately (Ziemer & Rothmund, 2022). Techniques such as prebunking, media literacy training, and inoculation help individuals recognize deceptive information and assess its accuracy before exposure (Van Der Linden, 2022). Debunking focuses on correcting false information after exposure (e.g., Van Erkel et al., 2024). However, this focus on the correctness of information might not be effective for all kinds of deceptive content. For instance, prior research suggests that accuracy-focused interventions may reduce misinformation beliefs but not conspiracy beliefs, which are more strongly influenced by needs for certainty and explanatory frameworks (Mari et al., 2022; Miller et al., 2016).

This study found that intuitive thinking does not significantly predict belief in either conspiracy theories or misinformation. Therefore, accuracy-focused interventions that foster analytical thinking, such as inoculation or accuracy nudges, may do little to reduce belief in deceptive content.

Across both types of belief, however, the strongest predictors identified in this study are a general conspiracy mentality and the belief that “truth is political.” Both attitudes reflect distrust in the relationship between politics and knowledge, undermining science and shared facts. These attitudes were more pronounced among conspiracy theory believers. People holding such beliefs reject the epistemic justifications offered by established institutions such as science or politics and instead seek alternative explanations that provide different interpretive frameworks. This aligns with the finding that alternative media use is positively associated with belief in both types of deceptive content, alongside lower levels of trust in science, politics, and mainstream media. Collectively, these patterns suggest that these individuals are distancing themselves from shared knowledge foundations. Thus, simply emphasizing the accuracy of information may not be sufficient to change their views.

Instead, interventions such as self-affirmation strategies, which target motivated reasoning, are needed—though these remain underexplored (Ziemer & Rothmund, 2022). For conspiracy theory believers in particular, interventions promoting a deeper understanding of the interplay between science and politics may be especially promising. The pronounced perception of facts as politically constructed and the suspicion of hidden networks controlling knowledge production point to the need for political and media literacy programs. Additionally, efforts should be made to strengthen their ability to critically evaluate media sources. Encouragingly, these individuals can still be engaged through traditional information dissemination methods. For future research, it will be essential to further investigate interventions that target group-based identities.

This study has several limitations. Its cross-sectional design prevents causal inference, and the reliance on self-reported media use may introduce bias. Additionally, we used dichotomous indicators for conspiracy theory and misinformation beliefs because our focus was on whether participants endorsed any such beliefs rather than on the extent or number of items they endorsed. This approach simplifies the classification of believers and nonbelievers and does not account for potential differences among believers. However, the results remain robust when applying alternative thresholds, such as classifying individuals as believers only if they endorsed two or more conspiracy theories or misinformation items rather than a single item. Moreover, the German context, characterized by relatively high institutional trust and low polarization, may limit generalizability. In more polarized countries, group-related attitudes and motivated reasoning may play an even greater role. The focus on COVID-19 also presents constraints. Although this topic became highly politicized, it exhibited belief patterns different from those of other frequently studied controversial issues, such as climate change or migration. In the case of COVID-19, traditional political divisions were replaced by a strong polarization between those who trust and those who distrust the system (Nielsen & Petersen, 2025). This may explain the strong predictive power of conspiracy mentality and the belief that truth is political, which could play a less prominent role in other domains. Additionally, focusing on a single topic to measure conspiracy theory and misinformation beliefs likely increases the similarity of the explanatory factors, and it does not capture the broader belief systems of individuals who endorse deceptive information.
For example, research has shown that belief in a particular conspiracy theory is often strongly correlated with belief in other, entirely different conspiracy theories (Wood et al., 2012), and that different types of conspiracy theories can be distinguished (Mahl et al., 2021). Thus, the present study may underestimate differences between conspiracy theory believers and misinformation believers by restricting the focus to a single topic. If belief in conspiracy theories and misinformation is instead understood as broader constructs and operationalized through beliefs across different domains, the predictors may diverge more clearly.

The limitations of the cross-sectional design are especially relevant regarding the relationship between media use and beliefs in conspiracy theories or misinformation. It is plausible that these represent a spiral process, where attitudes and media use mutually reinforce one another (Valenzuela et al., 2024), although initial evidence on COVID-19 suggests an exposure effect on attitudes (Adam et al., 2025). Future research should therefore investigate these dynamics using longitudinal and behavioral data across different contexts and belief structures.

In summary, this study revealed only minor differences between individuals who believe in misinformation and those who endorse conspiracy theories. Both belief types are fueled by a general conspiracy mentality, the view that truth is political, and alternative media use.

Findings

Finding 1: Believers in conspiracy theories and misinformation tend to think in more conspiratorial ways and are more likely to view truth as something shaped by political interests.

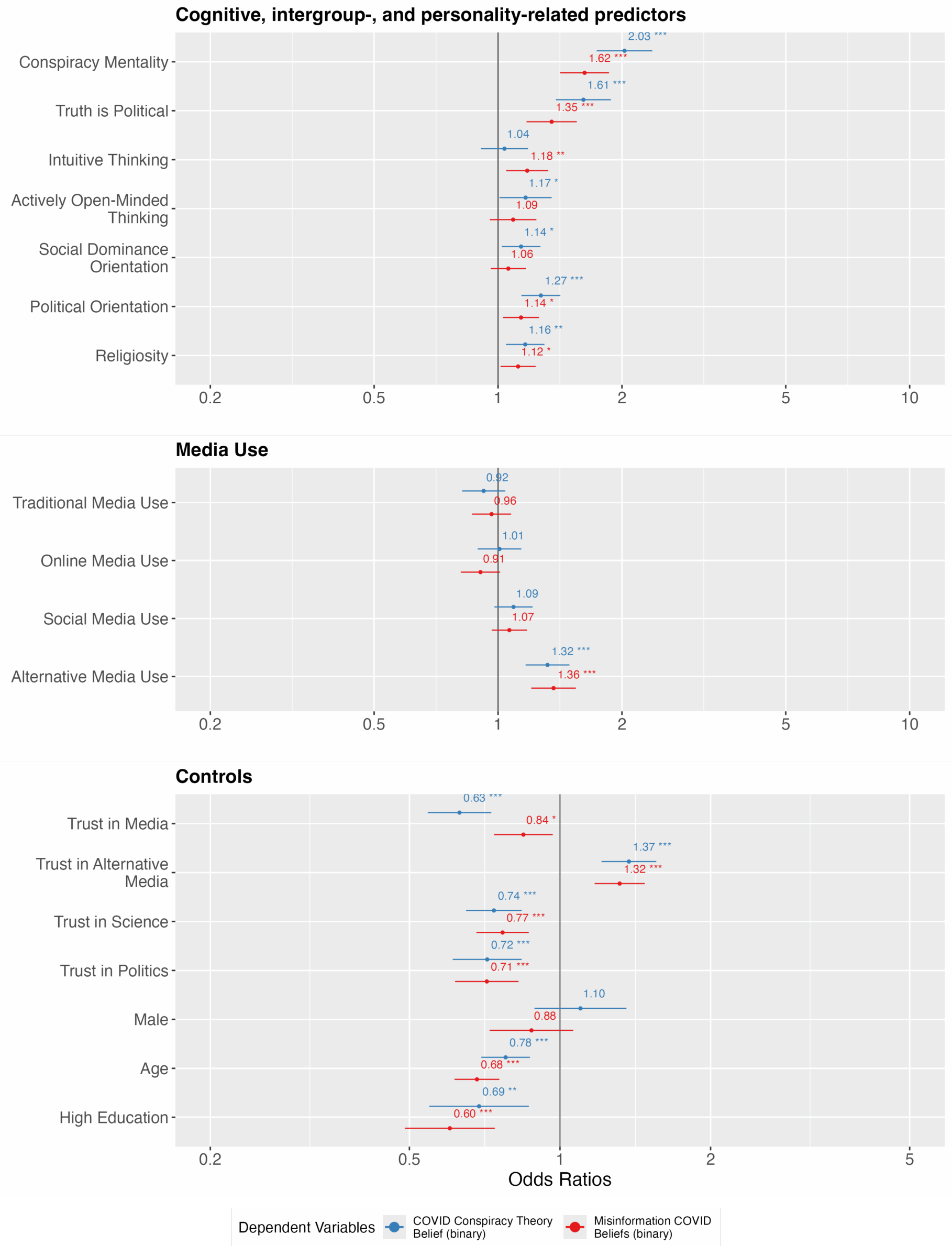

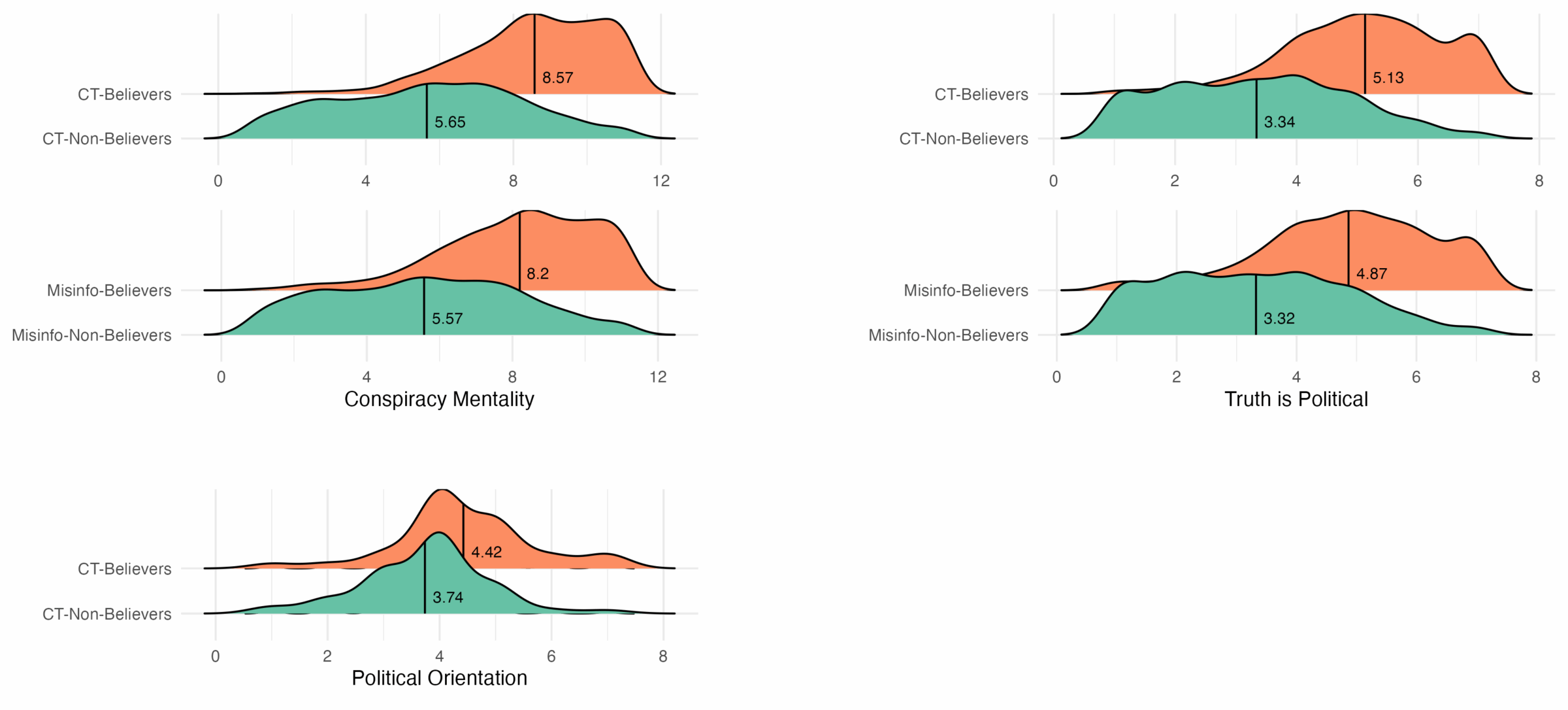

The first research question asks which factors explain belief in conspiracy theories and misinformation and how these factors differ in their contribution to each type of belief. Based on logistic regression models distinguishing people who believe in conspiracy theories and people who believe in misinformation from nonbelievers (see Figure 1), we found that conspiracy mentality and the attitude that truth is political are higher among believers of both kinds of deceptive information (p < .001). Conspiracy mentality was the strongest predictor of both beliefs: each one-point increase multiplied the odds of believing in conspiracy theories by 1.30 and the odds of believing in misinformation by 1.20 (odds ratios; see Tables A1 and A2 in the Appendix for the unstandardized coefficients and full model details). A one-point increase in the attitude that truth is political multiplied the odds of believing in conspiracy theories and misinformation by 1.33 and 1.20, respectively.
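Because an odds ratio multiplies the odds rather than adding probability points, the same odds ratio implies different probability changes depending on the starting probability. The sketch below is a minimal illustration, not the study's model: it converts the reported odds ratios into predicted probabilities from a hypothetical baseline of 0.40, holding all other predictors fixed. The function name `prob_after_odds_ratio` and the baseline value are assumptions for illustration.

```python
def prob_after_odds_ratio(baseline_p: float, odds_ratio: float, units: float = 1.0) -> float:
    """Return the predicted probability after a `units`-point increase in a predictor.

    An odds ratio multiplies the odds p / (1 - p), not the probability itself,
    so the resulting change in probability depends on the baseline.
    """
    if not 0.0 < baseline_p < 1.0:
        raise ValueError("baseline_p must lie strictly between 0 and 1")
    baseline_odds = baseline_p / (1.0 - baseline_p)
    new_odds = baseline_odds * odds_ratio ** units  # odds change multiplicatively
    return new_odds / (1.0 + new_odds)              # convert odds back to a probability


# Hypothetical baseline probability of 0.40; odds ratios as reported for conspiracy mentality.
for outcome, odds_ratio in [("conspiracy theories", 1.30), ("misinformation", 1.20)]:
    print(f"{outcome}: 0.400 -> {prob_after_odds_ratio(0.40, odds_ratio):.3f}")
# conspiracy theories: 0.400 -> 0.464
# misinformation: 0.400 -> 0.444
```

From this made-up baseline, a one-point increase raises the predicted probability by roughly 6.4 percentage points (odds ratio 1.30) and 4.4 points (odds ratio 1.20); from a different baseline, the same odds ratios would imply different changes.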

The level of intuitive thinking appears to be higher among misinformation believers; however, this difference was not confirmed by a regression model using Bonferroni-adjusted p-values (see Table A2 in the Appendix). There were no significant differences in actively open-minded thinking between believers and nonbelievers.[2]

[2] The findings of the logistic regression are robust to continuous operationalizations of conspiracy theory and misinformation beliefs (see Tables A3 and A4 in the Appendix). Most predictors that were significant in the logistic regression model, which indicates whether individuals believe in at least one conspiracy theory or piece of misinformation, also significantly predict the number of such beliefs in the linear regression model. However, some differences emerge: actively open-minded thinking and religiosity significantly predict the number of conspiracy theories endorsed in the linear regression model but were not significant in the logistic regression model. Intuitive thinking and political orientation are significant only in the linear regression model for misinformation beliefs and do not significantly predict whether people believe in misinformation in the logistic regression model. However, the effect sizes of the predictors that were significant in the linear but not the logistic models were too small to be meaningfully interpreted.
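A Bonferroni adjustment multiplies each raw p-value by the number of tests in the family (capping the result at 1), which is why a difference that looks significant before adjustment can fail to reach significance afterwards. The sketch below uses three hypothetical p-values; the text does not state how many tests formed the adjustment family, so the family size here is an assumption.

```python
def bonferroni(p_values: list[float]) -> list[float]:
    """Bonferroni adjustment: multiply each p-value by the number of tests, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


ALPHA = 0.05
# Hypothetical raw p-values for three tests treated as one family.
raw = [0.004, 0.030, 0.200]
adjusted = bonferroni(raw)
for p_raw, p_adj in zip(raw, adjusted):
    print(f"raw p = {p_raw:.3f} (significant: {p_raw < ALPHA}) -> "
          f"adjusted p = {p_adj:.3f} (significant: {p_adj < ALPHA})")
```

The second test (raw p = .030) is significant at α = .05 before adjustment but not after it (adjusted p = .090).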

The largest difference we observed between believers and nonbelievers is in their level of conspiracy mentality (see Figure 2). People with a conspiracist mindset are more likely to believe in both kinds of deceptive information. In addition, epistemic factors such as the attitude that truth is political are higher among believers than among nonbelievers, especially in the case of conspiracy theories. These differences partly reflect the high share of believers who say that truth is political: on the 8-point scale measuring agreement that truth is political, 30.6% of conspiracy theory believers and 26.6% of misinformation believers have mean scores in the top two points of the scale.
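The shares above summarize respondents whose average item score falls in the top two scale points. The sketch below shows one way to compute such a share, assuming that "in the top two points on average" means a mean item score of at least `scale_max - 1`; that reading, the function name, and the example answers are assumptions for illustration, and the scale length is left as a parameter.

```python
from statistics import mean


def share_in_top_two(responses: list[list[int]], scale_max: int) -> float:
    """Share of respondents whose mean item score lies in the top two scale points.

    Assumption: "on average on the last two points" is read as a mean
    item score of at least scale_max - 1.
    """
    if not responses:
        raise ValueError("need at least one respondent")
    threshold = scale_max - 1
    in_top_two = [mean(items) >= threshold for items in responses]
    return sum(in_top_two) / len(in_top_two)


# Hypothetical answers of four respondents to a four-item scale scored 1..8.
answers = [[8, 7, 8, 7], [5, 6, 4, 5], [7, 7, 6, 8], [3, 2, 4, 3]]
print(share_in_top_two(answers, scale_max=8))  # 0.5
```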

Finding 2: Believers in conspiracy theories and misinformation often turn to alternative media as a source of news.

With regard to media use (RQ2), people who use many alternative media outlets are more likely to believe in conspiracy theories and misinformation. The other media-use predictors were not significantly associated with conspiracy theory or misinformation beliefs. Among the controls (see Figure A1 in the Appendix for a visualization of the descriptive differences), trust in science[3] and trust in politics[4] are lower among both groups of believers. Trust in media is lower among conspiracy theory believers,[5] and trust in alternative media is higher among both misinformation and conspiracy theory believers.[6] Older respondents are less likely to believe in misinformation as well as conspiracy theories.[7] Education is lower among misinformation and conspiracy theory believers than among nonbelievers.[8]

[3] Conspiracy theories: believers M = 3.59 (SD = 1.86), nonbelievers M = 5.19 (SD = 1.47), p < .001; misinformation: believers M = 3.82 (SD = 1.86), nonbelievers M = 5.22 (SD = 1.49), p < .001.

[4] Conspiracy theories: believers M = 2.99 (SD = 1.45), nonbelievers M = 4.26 (SD = 1.29), p < .01; misinformation: believers M = 3.16 (SD = 1.46), nonbelievers M = 4.29 (SD = 1.30), p < .001.

[5] Conspiracy theories: believers M = 3.68 (SD = 1.66), nonbelievers M = 5.00 (SD = 1.22), p < .001.

[6] Conspiracy theories: believers M = 3.33 (SD = 1.45), nonbelievers M = 2.73 (SD = 1.15), p < .001; misinformation: believers M = 3.28 (SD = 1.42), nonbelievers M = 2.69 (SD = 1.14), p < .001.

[7] Age in years. Conspiracy theories: believers M = 42.95 (SD = 13.74), nonbelievers M = 45.75 (SD = 14.86), p < .001; misinformation: believers M = 42.6 (SD = 14.1), nonbelievers M = 46.5 (SD = 14.6), p < .001.

[8] Conspiracy theories: 47.3% of nonbelievers and 30.2% of believers have high education; misinformation: 49.1% of nonbelievers and 31.0% of believers have high education, p < .001.

Taken together, the odds ratios show that all factors contributing to belief in deceptive content, except intuitive thinking, are more strongly connected to conspiracy theory beliefs than to misinformation beliefs. This also holds when z-standardized coefficients are compared, which matters because coefficients from different logistic regression models cannot be compared directly (Mood, 2010). In particular, conspiracy mentality and the attitude that truth is political are more strongly connected to belief in conspiracy theories.
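Predictors measured on different response scales (for example, an 11-point versus a shorter scale) cannot be ranked by their per-unit odds ratios alone. Standardizing a predictor turns its per-unit odds ratio into a per-standard-deviation odds ratio, exp(b · SD), where b is the logit coefficient. The sketch below assumes made-up predictor SDs, since the SDs are not reported here. It covers only the standardization of predictors (x-standardization) and does not address the broader cross-model comparability issues that Mood (2010) discusses.

```python
import math


def per_sd_odds_ratio(unit_odds_ratio: float, sd_x: float) -> float:
    """Odds ratio for a one-standard-deviation increase in a predictor.

    Computes exp(b * sd_x), where b = ln(unit_odds_ratio) is the unstandardized
    logit coefficient; algebraically identical to unit_odds_ratio ** sd_x.
    """
    b = math.log(unit_odds_ratio)
    return math.exp(b * sd_x)


# Per-unit odds ratios as reported for the conspiracy theory model; the SDs are hypothetical.
predictors = {
    "conspiracy mentality (11-point)": (1.30, 2.0),
    "truth is political": (1.33, 1.5),
}
for name, (unit_or, sd) in predictors.items():
    print(f"{name}: per-unit OR = {unit_or:.2f}, "
          f"per-SD OR = {per_sd_odds_ratio(unit_or, sd):.2f}")
```

With these made-up SDs, the predictor with the smaller per-unit odds ratio (1.30) has the larger per-SD odds ratio (1.69 versus about 1.53). This illustrates why per-unit odds ratios of differently scaled predictors should not be ranked directly.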

Methods

Data

The hypotheses were tested using a cross-sectional survey in Germany conducted by a professional research company in March 2024 through computer-assisted web-based interviews. The sample was stratified by age, gender, and region: 50.2% of participants were female and 49.8% were male, with an average age of 44.7 years (SD = 16.4, range 18–69). Education levels varied, with 58.7% having completed general or secondary school, 15.2% high school, and 25.1% a university degree, reflecting the general population. The median net income, €2,600–3,599, was slightly above the German average (Statistisches Bundesamt Deutschland, 2017). For data cleaning, cases with more than 30% missing values (n = 28) and those displaying straightlining[9] (n = 14) were removed, resulting in a final sample of 2,962 respondents. Missing data were handled through multiple imputation.[10]

[9] Defined as respondents with an intra-individual response variability (IRV) below 1.26 (following Curran, 2016; Hong et al., 2020).

[10] Overall, 1.03% of the values in the dataset were missing; however, the share of missing values differed widely between variables. The shares of missing values for political orientation and trust in science were above the ignorable threshold (5%; see Kline, 1998). Based on the test by Jamshidian and Jalal (2010), the null hypothesis that values were missing completely at random was not rejected. Missing values were therefore imputed using the mice package (Buuren & Groothuis-Oudshoorn, 2011).
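The two exclusion rules described above (more than 30% missing values, and straightlining flagged by an IRV below 1.26) can be sketched per respondent as follows. IRV is computed here as the population standard deviation of a respondent's answered items within one battery. That choice, the item set it is applied to, and the function names are assumptions; the text does not specify which items entered the IRV.

```python
from statistics import pstdev
from typing import Optional, Sequence


def irv(answers: Sequence[float]) -> float:
    """Intra-individual response variability: the SD of one respondent's answers across items.

    Assumption: population SD (pstdev) over the answered items of a single battery.
    """
    return pstdev(answers)


def keep_case(
    answers: Sequence[Optional[float]],
    max_missing_share: float = 0.30,
    irv_cutoff: float = 1.26,
) -> bool:
    """Apply the two exclusion rules described in the text to one respondent."""
    if not answers:
        raise ValueError("no items supplied")
    answered = [a for a in answers if a is not None]
    missing_share = 1 - len(answered) / len(answers)
    if missing_share > max_missing_share:
        return False                    # too many missing values
    return irv(answered) >= irv_cutoff  # an IRV below the cutoff flags straightlining


print(keep_case([4, 4, 4, 4, 4, 4]))           # False: IRV = 0
print(keep_case([1, 7, 2, 6, 3, 5]))           # True: IRV is about 2.16
print(keep_case([5, None, None, None, 3, 2]))  # False: half the items are missing
```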

Measurements

The wording of the items, the descriptives, and the correlations of the constructs are included in the Appendix (Tables B1 and B2).

Belief in conspiracy theories: To measure beliefs in conspiracy theories, we included a pretested scale with five COVID-19-related conspiracy theories (Best et al., 2023; Enders et al., 2020; Stubenvoll, 2022). The respondents indicated whether they believed each statement was true or false or whether they were unsure. This format follows the recommendation of Clifford et al. (2019) to avoid the false positives produced by other formats, such as agreement scales or confronting respondents with opposing statements. In the pretest, all items showed sufficient communalities and factor loadings and were retained for the main study. The scale showed sufficient internal consistency (α = .73). For the analyses, the items were dummy-coded (1 = true, 0 = false or unsure) and combined into an index indicating whether respondents scored higher than 0 on any of the items (1 = conspiracy theory believer, 0 = conspiracy theory nonbeliever).[11]

[11] We chose to use dichotomous rather than continuous indices for both misinformation and conspiracy theory beliefs because our focus was not on the number of items participants endorsed, but on whether they believed in any of them.
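Internal consistency is reported as Cronbach's α, which compares the sum of the item variances with the variance of respondents' total scores. The sketch below is a generic implementation on a small made-up item matrix. The text does not say whether α was computed on the three-option responses or on the dummy codes, so the input coding is left open.

```python
from statistics import pvariance


def cronbach_alpha(item_scores: list[list[float]]) -> float:
    """Cronbach's alpha for k items; item_scores[i] holds all respondents' scores on item i.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores).
    Population variances are used throughout; the ratio is identical with sample variances.
    """
    k = len(item_scores)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*item_scores)]  # one total per respondent
    item_variance_sum = sum(pvariance(item) for item in item_scores)
    return k / (k - 1) * (1 - item_variance_sum / pvariance(totals))


# Made-up scores of three respondents on two items.
print(round(cronbach_alpha([[1, 2, 3], [1, 3, 2]]), 3))  # 0.667
```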

Belief in misinformation: To measure belief in misinformation, we used a pretested scale with five statements on prevalent COVID-19 misinformation, asking the respondents to indicate whether each was true or false or if they were unsure. In pretesting, we tested 11 items from previous studies (Altay et al., 2023; Arechar et al., 2023; Stubenvoll, 2022) and excluded those with low communalities or factor loadings (Carpenter, 2018). The final scale demonstrated good internal consistency (α = .75). For analysis, items were dummy-coded (1 = true, 0 = false or unsure) and summarized into an index, classifying those with any “true” responses as misinformation believers (1) and others as nonbelievers (0).
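The coding rule used for both belief measures (1 = true, 0 = false or unsure; believer if at least one item is rated true) can be sketched as follows. The count function corresponds to the continuous operationalization used in the robustness checks. The `min_endorsed` parameter mirrors the stricter "two or more items" threshold mentioned in the limitations. The function names and example answers are hypothetical.

```python
from typing import Sequence

# Coding described in the text: "true" = 1, "false" or "unsure" = 0.
CODES = {"true": 1, "false": 0, "unsure": 0}


def count_endorsed(answers: Sequence[str]) -> int:
    """Number of items rated as true (continuous operationalization)."""
    return sum(CODES[a] for a in answers)


def is_believer(answers: Sequence[str], min_endorsed: int = 1) -> int:
    """1 if at least `min_endorsed` items are rated true, else 0.

    min_endorsed=1 reproduces the main dichotomous index; min_endorsed=2
    mirrors the stricter robustness threshold.
    """
    return int(count_endorsed(answers) >= min_endorsed)


respondent = ["false", "unsure", "true", "false", "false"]  # hypothetical answers to five items
print(count_endorsed(respondent), is_believer(respondent), is_believer(respondent, min_endorsed=2))
# 1 1 0
```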

In our sample, 41.05% (n = 1,216) believed in at least one conspiracy theory and 48.65% (n = 1,441) believed in at least one piece of COVID-19 misinformation. A total of 1,007 respondents believed in both (at least one conspiracy theory and at least one piece of misinformation).
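The reported counts also determine the full two-by-two split of the sample by inclusion-exclusion. The short computation below derives it, assuming the denominator is the final sample of 2,962 reported in the Data section. The derived cells are arithmetic on the reported figures, not separately reported results.

```python
N = 2962      # final analytic sample reported in the Data section
CT = 1216     # believed at least one conspiracy theory
MIS = 1441    # believed at least one piece of misinformation
BOTH = 1007   # believed at least one of each

ct_only = CT - BOTH          # conspiracy theories but no misinformation
mis_only = MIS - BOTH        # misinformation but no conspiracy theories
either = CT + MIS - BOTH     # inclusion-exclusion: at least one kind
neither = N - either

for label, n in [("both", BOTH), ("CT only", ct_only),
                 ("misinformation only", mis_only), ("neither", neither)]:
    print(f"{label:>20}: n = {n:5d} ({n / N:.1%})")
```

This gives 209 respondents who believed only conspiracy theories, 434 who believed only misinformation, and 1,312 who believed neither. Put differently, most conspiracy theory believers in the sample also endorsed at least one misinformation item.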

Epistemic beliefs: Preference for intuitive thinking and actively open-minded thinking were measured using established scales (Newton et al., 2023) with six items each. The attitude that truth is political was measured using a 4-item scale (Garrett & Weeks, 2017). All scales asked the respondents on a 7-point scale how much they agreed with a statement (1 = strongly disagree, 7 = strongly agree).

Group-based factors: Political orientation was measured on a 7-point scale (1 = left-wing, 7 = right-wing). Social dominance orientation was measured on an 11-point scale, asking the respondents to indicate their agreement (1 = do not agree at all, 11 = agree totally) with four statements on the legitimacy of groups dominating others (Pratto et al., 2013).

Trust: Trust in science was measured with one item on a 5-point scale, asking the respondents how much they trust scientists to act in the public’s best interests (Cologna et al., 2024). Trust in politics was assessed using five items on a 7-point scale, asking how much the respondents trust institutions such as parliament, courts, police, political parties, and politicians (European Social Survey European Research Infrastructure [ESS ERIC], 2024). Trust in media was measured with a 10-item, 7-point scale covering TV, newspapers, radio, online news, blogs, social networks, video platforms, and messengers (ESS ERIC, 2024). Principal component analysis[12] indicated two factors: trust in traditional media (α = .93; e.g., newspapers and TV) and trust in alternative media (α = .91; e.g., blogs and social media).

[12] The data met the criteria for principal component analysis (KMO = .90; Bartlett’s χ²[9] = 274.63, p < .001).

Media use: To measure media use, we used a channel-based measure and asked the respondents which news sources they had used in the previous week (following Newman et al., 2023). We listed TV, news TV, radio, newspapers, magazines, their online representations, social media, and messengers. Principal component analysis indicated three factors:[13] online (α = .63), traditional (α = .57), and social media (α = .57) use. They were included as mean indices in the analyses.

[13] The data were suitable for principal component analysis (KMO = .76; Bartlett’s test of sphericity χ²[10] = 701.76, p < .001, indicating sufficient correlations among the variables).

Alternative media use: Based on the question wording of the Digital News Report (Newman et al., 2018), alternative media use was measured by asking the respondents which of the listed providers they had used to access news in the previous week. The list of news providers was designed to capture a wide range, from left-leaning (Junge Welt, Nachdenkseiten) to right-leaning (Junge Freiheit, Deutsche Wirtschaftsnachrichten) outlets, as well as elite-critical (KenFM, Rubikon) and foreign-based (RT, Epoch Times) outlets and channels of individual persons in messaging apps (Attila Hildmann, Boris Reitschuster). The selection was guided by theoretical considerations of different types of alternative media (Schwaiger, 2022) and the reach of the outlets (Hölig & Hasebrink, 2020). Principal component analysis[14] indicated one factor, which was computed as a sum index.

[14] The data were suitable for principal component analysis (KMO = .87; Bartlett’s test of sphericity χ²[136] = 8835.40, p < .001, indicating sufficient correlations among the variables).
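Bartlett's test of sphericity, reported alongside the KMO measure, checks whether the item correlation matrix R departs from the identity matrix: χ² = −[(n − 1) − (2p + 5)/6] · ln|R| with p(p − 1)/2 degrees of freedom for p items. The reported 136 degrees of freedom correspond to p = 17 items. The sketch below applies the formula with NumPy to simulated stand-in data (not the survey data). The function name is hypothetical. A p-value can be obtained from the chi-square upper tail, for example with `scipy.stats.chi2.sf(chi2, df)`.

```python
import numpy as np


def bartlett_sphericity(data: np.ndarray) -> tuple[float, int]:
    """Bartlett's test of sphericity for an (n respondents x p items) matrix.

    chi2 = -((n - 1) - (2p + 5) / 6) * ln|R|, df = p(p - 1) / 2,
    where R is the item correlation matrix.
    """
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)
    sign, logdet = np.linalg.slogdet(corr)  # numerically stable log-determinant
    if sign <= 0:
        raise ValueError("correlation matrix is singular or not positive definite")
    chi2 = -((n - 1) - (2 * p + 5) / 6) * logdet
    df = p * (p - 1) // 2
    return float(chi2), df


# Simulated stand-in data: 500 respondents, 17 items sharing one latent factor.
rng = np.random.default_rng(seed=1)
latent = rng.normal(size=(500, 1))
items = latent + rng.normal(size=(500, 17))
chi2, df = bartlett_sphericity(items)
print(f"chi2({df}) = {chi2:.1f}")  # df = 136 for 17 items
```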

Control variables: Gender (1 = male, 0 = other) and education (1 = high school or higher) were recoded for analysis. Age was included as a numeric variable. Conspiracy mentality was measured using an 11-point scale (Bruder et al., 2013), asking how much the respondents agreed with the statements (1 = strongly disagree, 11 = strongly agree). Religiosity has been shown to be associated with belief in misinformation (Bronstein et al., 2019) and was measured on a 10-point scale asking the respondents how much guidance religion or spirituality offers them in everyday life.

Statistical approach

We preregistered the hypotheses as part of a larger project on misinformation and media use (https://aspredicted.org/7f65-h6fc.pdf).


Bibliography

Adams, Z., Osman, M., Bechlivanidis, C., & Meder, B. (2023). (Why) is misinformation a problem? Perspectives on Psychological Science, 18(6), 1436–1463. https://doi.org/10.1177/17456916221141344

Allen, J., Watts, D. J., & Rand, D. G. (2024). Quantifying the impact of misinformation and vaccine-skeptical content on Facebook. Science, 384(6699), Article eadk3451. https://doi.org/10.1126/science.adk3451

Altay, S., Nielsen, R. K., & Fletcher, R. (2023). News can help! The impact of news media and digital platforms on awareness of and belief in misinformation. The International Journal of Press/Politics, 29(2), 459–484. https://doi.org/10.1177/19401612221148981

Arechar, A. A., Allen, J., Berinsky, A. J., Cole, R., Epstein, Z., Garimella, K., Gully, A., Lu, J. G., Ross, R. M., Stagnaro, M. N., Zhang, Y., Pennycook, G., & Rand, D. G. (2023). Understanding and combatting misinformation across 16 countries on six continents. Nature Human Behaviour, 7(9), 1502–1513. https://doi.org/10.1038/s41562-023-01641-6

Barua, Z., Barua, S., Aktar, S., Kabir, N., & Li, M. (2020). Effects of misinformation on COVID-19 individual responses and recommendations for resilience of disastrous consequences of misinformation. Progress in Disaster Science, 8, Article 100119. https://doi.org/10.1016/j.pdisas.2020.100119

Best, V., Decker, F., Fischer, S., & Küppers, A. (2023). Demokratievertrauen in Krisenzeiten: Wie blicken die Menschen in Deutschland auf Politik, Institutionen und Gesellschaft? [Trust in democracy in crisis: How do people in Germany view politics, institutions and society?]. Friedrich-Ebert-Stiftung. http://www.fes.de/studie-vertrauen-in-demokratie

Bronstein, M. V., Pennycook, G., Bear, A., Rand, D. G., & Cannon, T. D. (2019). Belief in fake news is associated with delusionality, dogmatism, religious fundamentalism, and reduced analytic thinking. Journal of Applied Research in Memory and Cognition, 8(1), 108–117. https://doi.org/10.1037/h0101832

Bruder, M., Haffke, P., Neave, N., Nouripanah, N., & Imhoff, R. (2013). Measuring individual differences in generic beliefs in conspiracy theories across cultures: Conspiracy mentality questionnaire. Frontiers in Psychology, 4, Article 225. https://doi.org/10.3389/fpsyg.2013.00225

Buuren, S. van, & Groothuis-Oudshoorn, K. (2011). mice: Multivariate imputation by chained equations in R. Journal of Statistical Software, 45(3), 1–67. https://doi.org/10.18637/jss.v045.i03

Carpenter, S. (2018). Ten steps in scale development and reporting: A guide for researchers. Communication Methods and Measures, 12(1), 25–44. https://doi.org/10.1080/19312458.2017.1396583

Chen, M., Yu, W., & Liu, K. (2023). A meta-analysis of third-person perception related to distorted information: Synthesizing the effect, antecedents, and consequences. Information Processing & Management, 60(5), Article 103425. https://doi.org/10.1016/j.ipm.2023.103425

Clifford, S., Kim, Y., & Sullivan, B. W. (2019). An improved question format for measuring conspiracy beliefs. Public Opinion Quarterly, 83(4), 690–722. https://doi.org/10.1093/poq/nfz049

Cologna, V., Mede, N. G., Berger, S., Besley, J., Brick, C., Joubert, M., Maibach, E. W., Mihelj, S., Oreskes, N., Schäfer, M. S., van der Linden, S., Abdul Aziz, N. I., Abdulsalam, S., Shamsi, N. A., Aczel, B., Adinugroho, I., Alabrese, E., Aldoh, A., Alfano, M., … Zwaan, R. A. (2025). Trust in scientists and their role in society across 68 countries. Nature Human Behaviour, 9(4), 713–730. https://doi.org/10.1038/s41562-024-02090-5

Curran, P. G. (2016). Methods for the detection of carelessly invalid responses in survey data. Journal of Experimental Social Psychology, 66, 4–19. https://doi.org/10.1016/j.jesp.2015.07.006

De Blasio, E., & Selva, D. (2021). Who is responsible for disinformation? European approaches to social platforms’ accountability in the post-truth era. American Behavioral Scientist, 65(6), 825–846. https://doi.org/10.1177/0002764221989784

De León, E., Makhortykh, M., & Adam, S. (2024). Hyperpartisan, alternative, and conspiracy media users: An anti-establishment portrait. Political Communication, 41(6), 877–902. https://doi.org/10.1080/10584609.2024.2325426

Douglas, K. M., & Sutton, R. M. (2023). What are conspiracy theories? A definitional approach to their correlates, consequences, and communication. Annual Review of Psychology, 74(1), 271–298. https://doi.org/10.1146/annurev-psych-032420-031329

Douglas, K. M., Uscinski, J. E., Sutton, R. M., Cichocka, A., Nefes, T. S., Ang, C. S., & Deravi, F. (2019). Understanding conspiracy theories. Political Psychology, 40, 3–35. https://doi.org/10.1111/pops.12568

Dyrendal, A., Kennair, L. E. O., & Bendixen, M. (2021). Predictors of belief in conspiracy theory: The role of individual differences in schizotypal traits, paranormal beliefs, social dominance orientation, right wing authoritarianism and conspiracy mentality. Personality and Individual Differences, 173, Article 110645. https://doi.org/10.1016/j.paid.2021.110645

Enders, A. M., Uscinski, J. E., Klofstad, C., & Stoler, J. (2020). The different forms of COVID-19 misinformation and their consequences. Harvard Kennedy School (HKS) Misinformation Review, 1(8). https://doi.org/10.37016/mr-2020-48

Epstein, S., Pacini, R., Denes-Raj, V., & Heier, H. (1996). Individual differences in intuitive-experiential and analytical-rational thinking styles. Journal of Personality and Social Psychology, 71(2), 390–405. https://doi.org/10.1037/0022-3514.71.2.390

European Social Survey European Research Infrastructure (ESS ERIC). (2024). ESS11—Integrated file, edition 1.0 [Dataset]. Sikt – Norwegian Agency for Shared Services in Education and Research. https://doi.org/10.21338/ESS11E01_0

Garrett, R. K., & Weeks, B. E. (2017). Epistemic beliefs’ role in promoting misperceptions and conspiracist ideation. PLOS ONE, 12(9), Article e0184733. https://doi.org/10.1371/journal.pone.0184733

Hölig, S., & Hasebrink, U. (2020). Reuters Institute digital news report 2020—Ergebnisse für Deutschland [Reuters Institute digital news report 2020: Results for Germany]. SSOAR. https://doi.org/10.21241/ssoar.71725

Hong, M., Steedle, J. T., & Cheng, Y. (2020). Methods of detecting insufficient effort responding: Comparisons and practical recommendations. Educational and Psychological Measurement, 80(2), 312–345. https://doi.org/10.1177/0013164419865316

Hornsey, M. J., Bierwiaczonek, K., Sassenberg, K., & Douglas, K. M. (2022). Individual, intergroup and nation-level influences on belief in conspiracy theories. Nature Reviews Psychology, 2(2), 85–97. https://doi.org/10.1038/s44159-022-00133-0

Imhoff, R., & Bruder, M. (2014). Speaking (un–)truth to power: Conspiracy mentality as a generalised political attitude. European Journal of Personality, 28(1), 25–43. https://doi.org/10.1002/per.1930

Imhoff, R., Zimmer, F., Klein, O., António, J. H. C., Babinska, M., Bangerter, A., Bilewicz, M., Blanuša, N., Bovan, K., Bužarovska, R., Cichocka, A., Delouvée, S., Douglas, K. M., Dyrendal, A., Etienne, T., Gjoneska, B., Graf, S., Gualda, E., Hirschberger, G., … Van Prooijen, J.-W. (2022). Conspiracy mentality and political orientation across 26 countries. Nature Human Behaviour, 6(3), 392–403. https://doi.org/10.1038/s41562-021-01258-7

Landrum, A. R., & Olshansky, A. (2019). The role of conspiracy mentality in denial of science and susceptibility to viral deception about science. Politics and the Life Sciences, 38(2), 193–209. https://doi.org/10.1017/pls.2019.9

Lewandowsky, S., Ecker, U. K. H., Seifert, C. M., Schwarz, N., & Cook, J. (2012). Misinformation and its correction: Continued influence and successful debiasing. Psychological Science in the Public Interest, 13(3), 106–131. https://doi.org/10.1177/1529100612451018

Li, J. (2023). Not all skepticism is “healthy” skepticism: Theorizing accuracy- and identity-motivated skepticism toward social media misinformation. New Media & Society, 27(1), 522–544. https://doi.org/10.1177/14614448231179941

Lobato, E., Mendoza, J., Sims, V., & Chin, M. (2014). Examining the relationship between conspiracy theories, paranormal beliefs, and pseudoscience acceptance among a university population. Applied Cognitive Psychology, 28(5), 617–625. https://doi.org/10.1002/acp.3042

Mahl, D., Zeng, J., & Schäfer, M. S. (2021). From “NASA lies” to “reptilian eyes”: Mapping communication about 10 conspiracy theories, their communities, and main propagators on Twitter. Social Media + Society, 7(2). https://doi.org/10.1177/20563051211017482

Mari, S., Gil De Zúñiga, H., Suerdem, A., Hanke, K., Brown, G., Vilar, R., Boer, D., & Bilewicz, M. (2022). Conspiracy theories and institutional trust: Examining the role of uncertainty avoidance and active social media use. Political Psychology, 43(2), 277–296. https://doi.org/10.1111/pops.12754

Meirick, P. (2023). News sources, partisanship, and political knowledge in COVID-19 beliefs. American Behavioral Scientist, 0(0). https://doi.org/10.1177/00027642231164047

Miller, J. M., Saunders, K. L., & Farhart, C. E. (2016). Conspiracy endorsement as motivated reasoning: The moderating roles of political knowledge and trust. American Journal of Political Science, 60(4), 824–844. https://doi.org/10.1111/ajps.12234

Newman, N., Fletcher, R., Eddy, K., Robertson, C. T., & Nielsen, R. K. (2023). Digital news report 2023. Reuters Institute for the Study of Journalism. https://doi.org/10.60625/risj-p6es-hb13

Newman, N., Fletcher, R., Kalogeropoulos, A., Levy, D. A. L., & Nielsen, R. K. (2018). Digital news report 2018. Reuters Institute for the Study of Journalism. https://reutersinstitute.politics.ox.ac.uk/sites/default/files/digital-news-report-2018.pdf

Newton, C., Feeney, J., & Pennycook, G. (2023). On the disposition to think analytically: Four distinct intuitive-analytic thinking styles. Personality and Social Psychology Bulletin, 50(6), 906–923. https://doi.org/10.1177/01461672231154886

Nyhan, B., & Reifler, J. (2010). When corrections fail: The persistence of political misperceptions. Political Behavior, 32(2), 303–330. https://doi.org/10.1007/s11109-010-9112-2

Pennycook, G., & Rand, D. G. (2021). The psychology of fake news. Trends in Cognitive Sciences, 25(5), 388–402. https://doi.org/10.1016/j.tics.2021.02.007

Peters, M. A. (2021). On the epistemology of conspiracy. Educational Philosophy and Theory, 53(14), 1413–1417. https://doi.org/10.1080/00131857.2020.1741331

Pratto, F., Çidam, A., Stewart, A. L., Zeineddine, F. B., Aranda, M., Aiello, A., Chryssochoou, X., Cichocka, A., Cohrs, J. C., Durrheim, K., Eicher, V., Foels, R., Górska, P., Lee, I.-C., Licata, L., Liu, J. H., Li, L., Meyer, I., Morselli, D., … Henkel, K. E. (2013). Social dominance in context and in individuals: Contextual moderation of robust effects of social dominance orientation in 15 languages and 20 countries. Social Psychological and Personality Science, 4(5), 587–599. https://doi.org/10.1177/1948550612473663

Roozenbeek, J., Maertens, R., Herzog, S. M., Geers, M., Kurvers, R., Sultan, M., & van der Linden, S. (2022). Susceptibility to misinformation is consistent across question framings and response modes and better explained by myside bias and partisanship than analytical thinking. Judgment and Decision Making, 17(3), 547–573. https://doi.org/10.1017/S1930297500003570

Schäfer, M. S., Mahl, D., Füchslin, T., Metag, J., & Zeng, J. (2022). From hype cynics to extreme believers: Typologizing the Swiss population’s COVID-19-related conspiracy beliefs, their corresponding information behavior, and social media use. International Journal of Communication, 16, 2885–2910. https://ijoc.org/index.php/ijoc/article/view/18863

Schatto-Eckrodt, T., & Frischlich, L. (2024). Truth, fake news, and conspiracy theories. In A. Pinchevski, P. M. Buzzanell, & J. Hannan (Eds.), The handbook of communication ethics (2nd ed., pp. 304–319). Routledge. https://doi.org/10.4324/9781003274506-25

Schwaiger, L. (2022). Gegen die Öffentlichkeit: Alternative Nachrichtenmedien im deutschsprachigen Raum [Against the public: Alternative news media in the German-speaking world] (Vol. 46). transcript Verlag. https://doi.org/10.14361/9783839461211

Stubenvoll, M. (2022). Investigating the heterogeneity of misperceptions: A latent profile analysis of COVID-19 beliefs and their consequences for information-seeking. Science Communication, 44(6), 759–786. https://doi.org/10.1177/10755470221142304

Tandoc, E. C., Lim, Z. W., & Ling, R. (2017). Defining “fake news”: A typology of scholarly definitions. Digital Journalism, 6(2), 137–153. https://doi.org/10.1080/21670811.2017.1360143

van der Linden, S. (2022). Misinformation: Susceptibility, spread, and interventions to immunize the public. Nature Medicine, 28(3), 460–467. https://doi.org/10.1038/s41591-022-01713-6

van der Linden, S., Panagopoulos, C., Azevedo, F., & Jost, J. T. (2021). The paranoid style in American politics revisited: An ideological asymmetry in conspiratorial thinking. Political Psychology, 42(1), 23–51. https://doi.org/10.1111/pops.12681

van Erkel, P. F. A., van Aelst, P., De Vreese, C. H., Hopmann, D. N., Matthes, J., Stanyer, J., & Corbu, N. (2024). When are fact-checks effective? An experimental study on the inclusion of the misinformation source and the source of fact-checks in 16 European countries. Mass Communication and Society, 27(5), 851–876. https://doi.org/10.1080/15205436.2024.2321542

Vliegenthart, R., Strömbäck, J., Boomgaarden, H., Broda, E., Damstra, A., Lindgren, E., Tsfati, Y., & Van Remoortere, A. (2024). Taking political alternative media into account: Investigating the linkage between media repertoires and (mis)perceptions. Mass Communication and Society, 27(5), 877–901. https://doi.org/10.1080/15205436.2023.2251444

Wardle, C., & Derakhshan, H. (2017). Information disorder: Toward an interdisciplinary framework for research and policy making. Council of Europe. https://rm.coe.int/information-disorder-toward-an-interdisciplinary-framework-for-researc/168076277c

Weeks, B. E., Menchen-Trevino, E., Calabrese, C., Casas, A., & Wojcieszak, M. (2023). Partisan media, untrustworthy news sites, and political misperceptions. New Media & Society, 25(10), 2644–2662. https://doi.org/10.1177/14614448211033300

Wood, M. J., Douglas, K. M., & Sutton, R. M. (2012). Dead and alive: Beliefs in contradictory conspiracy theories. Social Psychological and Personality Science, 3(6), 767–773. https://doi.org/10.1177/1948550611434786

Wu, Y., Kuru, O., Campbell, S. W., & Baruh, L. (2023). Explaining health misinformation belief through news, social, and alternative health media use: The moderating roles of need for cognition and faith in intuition. Health Communication, 38(7), 1416–1429. https://doi.org/10.1080/10410236.2021.2010891

Zeng, J. (2021). Theoretical typology of deceptive content (conspiracy theories). DOCA. https://doi.org/10.34778/5g

Zhou, A., Liu, W., & Yang, A. (2024). Politicization of science in COVID-19 vaccine communication: Comparing US politicians, medical experts, and government agencies. Political Communication, 41(4), 649–671. https://doi.org/10.1080/10584609.2023.2201184

Ziegele, M., Resing, M., Frehmann, K., Jackob, N., Jakobs, I., Quiring, O., Schemer, C., Schultz, T., & Viehmann, C. (2022). Deprived, radical, alternatively informed: Factors associated with people’s belief in Covid-19 related conspiracy theories and their vaccination intentions in Germany. European Journal of Health Communication, 3(2), 97–130. https://doi.org/10.47368/ejhc.2022.205

Funding

This research was supported by the Department of Communication, University of Münster, Germany.

Competing Interests

The author declares no competing interests.

Ethics

The research was approved by the Institutional Review Board (IRB) for Research Projects of the Department of Communication at the University of Münster.

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

All materials needed to replicate this study are available via the Harvard Dataverse: https://doi.org/10.7910/DVN/FNTC1J.

Acknowledgements

I want to thank Julia Metag for her collaboration and support throughout the larger project on conspiracy beliefs and media use.