Peer Reviewed

Exploring partisans’ biased and unreliable media consumption and their misinformed health-related beliefs

This study explores U.S. adults’ media consumption—in terms of the average bias and reliability of the media outlets participants report referencing—and the extent to which those participants hold inaccurate beliefs about COVID-19 and vaccination. Notably, we used a novel means of capturing the (left-right) bias and reliability of audiences’ media consumption, leveraging the Ad Fontes Media ratings of 129 news sources along each dimension. From our national survey of 3,276 U.S. adults, we found that the average bias and reliability of participants’ media consumption are significant predictors of their perceptions of false claims about COVID-19 and vaccination.

Research Questions

- (How) do political ideology, media reliability, and media bias predict holding misinformed beliefs about COVID-19 and vaccination?

- Do partisans’ media diets differ in their average bias and reliability?

Essay Summary

- We surveyed 3,276 U.S. adults, applying Ad Fontes Media’s (2023) ratings of media bias and reliability to measure these facets of participants’ preferred news sources. We also probed their perceptions of inaccurate claims about COVID-19 and vaccination.

- We found participants who tend to vote for Democrats—on average—consume less biased and more reliable media than those who tend to vote for Republicans. We found these (left-leaning) participants’ media reliability moderates the relationship between their media’s bias and their degree of holding false beliefs about COVID-19 and vaccination. Unlike left-leaning media consumers, right-leaning media consumers’ misinformed beliefs seem largely unaffected by their news sources’ degree of (un)reliability.

- This study introduces and investigates a novel means of measuring participants’ selected news sources: employing Ad Fontes’s (2023) media bias and media reliability ratings. It also suggests the topic of COVID-19, among many other scientific fields of recent decades, has fallen prey to the twin risks of a politicized science communication environment and accompanying group-identity-aligned stances so often operating in the polarized present.

Implications

The COVID-19 pandemic demonstrated the effects of inhabiting a polarized media environment—including aspects of selective media exposure and cognitive dissonance avoidance—in the context of an extended global disease scenario the likes of which the world hasn’t experienced in a century. The news-seeking (and avoiding) behaviors in this context highlight the longstanding concern that those who embrace—and subsequently seek out—misinformation, even if inadvertently, constitute a group at risk of endangering their own and others’ health. This exploratory study—the first, to our knowledge, to assess two media credibility dimensions (bias and reliability) in a national survey—set out to analyze these dimensions and their relationships with accepting misinformation among a national U.S. sample, suggesting implications for news media (whose audiences may consist, at least in part, of misinformation believers and seekers) operating in a country sharply divided along partisan lines.

Background: Selective exposure, cognitive dissonance, and COVID-19 news-seeking behaviors

In the polarized age of COVID-19, negative attitudes toward COVID-19 vaccinations (Motta et al., 2021), beliefs in the virus’ relative inertness (Hammad et al., 2021; Šrol et al., 2021), and positive attitudes toward unproven alternative treatments such as hydroxychloroquine (Teovanović et al., 2020) and Ivermectin (Hua et al., 2022) have become identity-defining. This ingrained perception fostered cognitive dissonance as evidence increasingly mounted that the vaccines are safe, the virus is not, and the most effective preventative for COVID-19 hospitalization is vaccination (Christie et al., 2021; Thompson et al., 2021). Even so, the problematic perceptions persisted.

A plausible motivation for individuals to take on the risks of contracting and transmitting COVID-19 in eschewing even less-than-onerous recommended precautions is the desire to forestall cognitive dissonance (Arendt et al., 2016; Festinger, 1957). Anti-vaccine attitudes are known to be tenacious and challenging to counter, unyielding to evidence, and bolstered by persuasive anti-vaccine messaging—which is not difficult to find and immerse oneself in (Moran et al., 2016). In the COVID-19 context, several identity groups appear to have engaged in this immersion.

When perceptions about “decision-relevant science” (Kahan, 2017)—that is, science of importance to the average person’s behavior and choices—become entangled in politicized communications, the results are a polluted science communication environment and identity-protective cognitions (Kahan & Landrum, 2017). The science communication environment is polluted “when the social processes that normally align diverse citizens with what is known from science are disrupted by antagonistic social meanings” (Kahan & Landrum, 2017, p. 2). This is what occurred with COVID-19: citizens were not aligned with the knowledge provided by the scientific enterprise, because the communication environment in which these findings were presented had been polluted by the social meanings that quickly developed along partisan lines.

This study found that right-leaning media consumers seem to be largely unaffected by the degree of (un)reliability present in their selected news sources. This suggests that efforts such as fact-checking and debunking could prove ineffectual for informing misinformed media consumers. Additionally, many in the United States reside in “news deserts”—communities without reliable local news providers whose members tend to turn to social media for (mis)information (Mathews, 2022; Mihailidis, 2022). This study found participants reported engaging with many national news outlets (of varying degrees of reliability and bias), suggesting such outlets have the capacity to spread and reinforce—but also potentially counter—misinformation even in news deserts (at least among those groups that are not resistant to the degree of (un)reliability in their preferred news sources). The implications of the potentially outsized influence of national outlets in areas lacking strong local reporting include the opportunity for these providers to investigate misinformation rampant in such areas (such as via social media studies) and develop reporting designed to counter it. Similarly, these findings suggest that the ineffectiveness of fact-checking and debunking to inform a substantial share of media consumers (e.g., right-leaning constituents) makes a case for further research investigating alternative strategies to provide intractable audiences with corrected information. Such strategies include public awareness campaigns and encouraging influencers to promulgate correct(ed) information (Siwakoti et al., 2021).

Jamieson and Albarracín (2020) found that, early in the pandemic, exposure to specific media (most especially conservative and social media) was associated with being misinformed, even when controlling for partisanship. They also report these misinformed beliefs are not incidental to behavior: those exposed to conservative media and social media were more likely to express, for example, “unwarranted confidence in vitamin C consumption as a means of preventing infection by SARS-CoV-2” (p. 7). Peterson and Iyengar (2022) also found partisan differences in both preferred news sources and beliefs about COVID-19, with misinformed views being sincerely held, as evidenced by their resistance to change in the face of financial incentives. Moreover, Bridgman et al. (2020) found strong associations linking misinformation exposure to non-compliance with pandemic health measures via misperceptions resulting from misinformation in the media consumed. These studies suggest news media, when providing biased and unreliable content, contribute to a misinformed public, with evident public health implications. A misinformed public may be resistant to efforts to safeguard collective and individual health. Misinformation about vaccines, medications, and standard medical practice threatens compliance with physician recommendations and, consequently, individual and public health, even in a non-pandemic context.

Such studies, and this one, highlight the associations among partisanship, news source preferences, misinformation susceptibility, and the science communication context. These associations suggest that novel communication strategies and continuing scholarly investigations are in order along the fault lines of media consumers’ polarization.

Applying Ad Fontes Media bias and reliability ratings to participants’ selected sources and assessing their misinformed beliefs

The Ad Fontes methodology consists of multi-analyst ratings of news sources along seven categories of bias and eight of reliability. Each source is rated by an equal number of politically left-leaning, politically right-leaning, and politically centrist analysts, whose scores along each dimension are averaged (after any notable score discrepancies are discussed and scores adjusted if the outlier is convinced) (Otero, 2021). Each analyst completes a political identity assessment; all analysts hold at least a bachelor’s degree—and most hold a graduate degree—with one-third holding or in the process of obtaining a doctoral degree (Otero, 2021). Analysts are selected by a panel of application reviewers consulting a rubric of candidate qualifications—including education, political/civic engagement, familiarity with news sources and United States government systems, reading comprehension and analytical skills, among others (Otero, 2021). Once hired, analysts complete a minimum of 20 training hours to learn the content analysis procedure before contributing ratings to the data set (Otero, 2021).

This approach produces aggregated third-party ratings of each source’s reliability and left-to-right bias. Whereas surveys in this context often rely on participants’ or coders’ self-reported perceptions of bias and reliability (Mena et al., 2020)—with the attendant lack of uniformity and empirically investigated reliability—the use of Ad Fontes’s ratings in the research context presents a novel alternative: trained reviewers’ blended perceptions of source bias and reliability. We propose that using Ad Fontes’s ratings presents a viable operationalization of audiences’ media selections, with the current study employing the COVID-19 pandemic as a case study of this method. Lin et al. (2023) found that, despite differences in criteria consulted to rate news sources, there is substantial agreement in evaluations of such sources among six news quality rating sets—including Ad Fontes, along with NewsGuard, Iffy index of unreliable sources, Media Bias/Fact Check, independent professional fact-checkers, and Lasser et al. (2022)—suggesting robustness in such ratings.

While Ad Fontes’s media bias and reliability ratings follow a clearly defined and politically balanced process, they are not without their limitations. Subjectivity and bias are inevitable in coding indicators of bias and reliability, and this limitation is not completely removed by employing multiple coders for each article analyzed to rate each source—though Lin et al.’s (2023) findings suggest the ratings, which share similar scores to other such systems, are reliable. As this study is the first of which we are aware to employ the measure in this research context, its reliability has not been ascertained through the accumulation of data across studies. The method, however, is robust in that it has behind it the collaborative perception of media bias and reliability as judged by ideologically diverse panels of trained coders—rather than individual participants or coders. It does not (and cannot) measure objective media bias and reliability, but it also shares this limitation with other available measures of the phenomena.

Findings

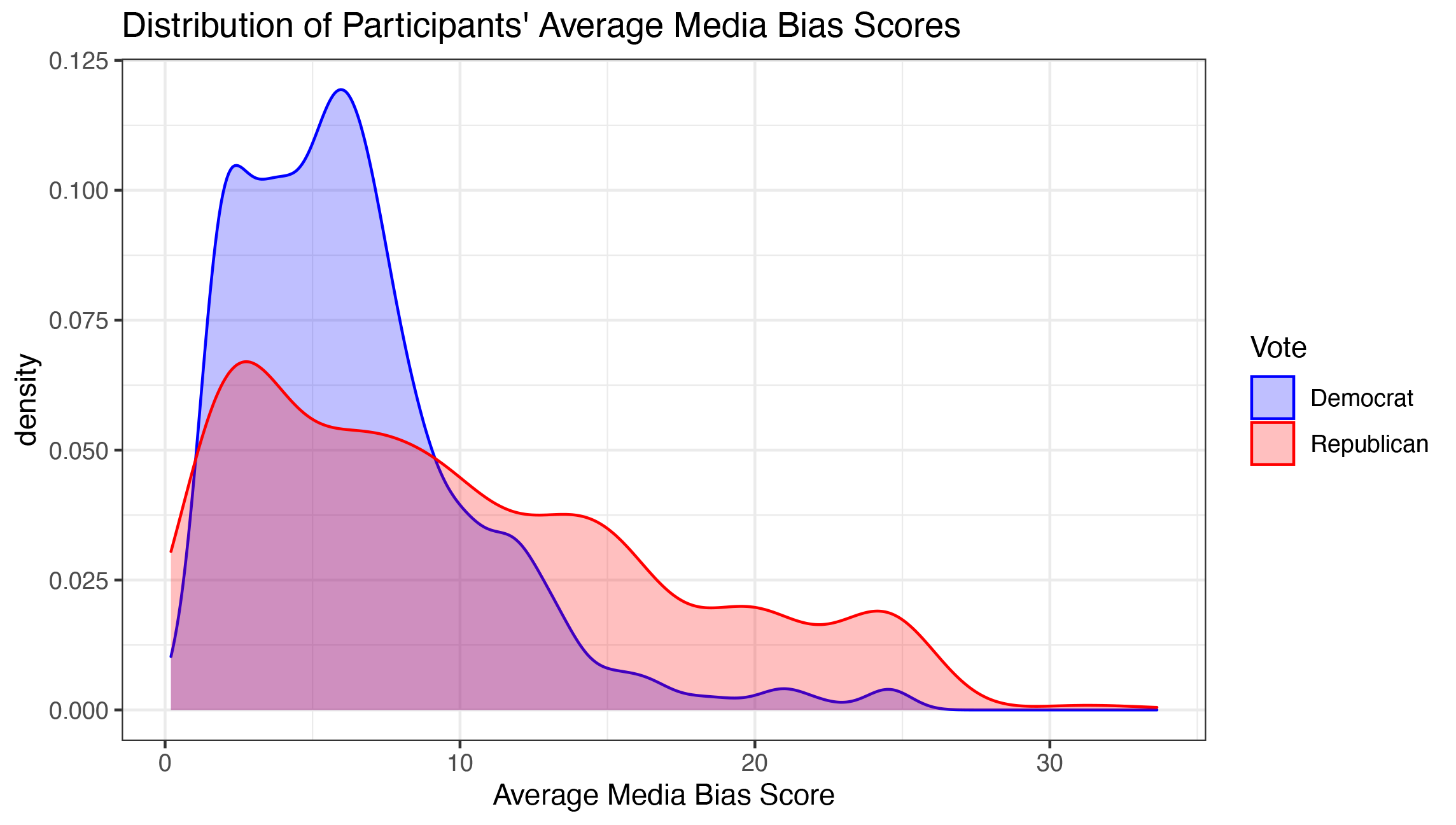

Finding 1: People who tend to vote for Democrats consume less biased media on average than people who tend to vote for Republicans.

In this study, we calculated media bias in two ways. The first, which we use later in our analysis, involved averaging the Ad Fontes media bias scores across participants’ selected news sources. We refer to this score as the left-to-right (L2R) media bias score. It is worth noting, however, that this method might obscure the extent to which participants engage with partisan and hyper-partisan sources: a participant who only references balanced sources may have an average media bias score similar to that of a person who references hyper-partisan content on both the right and the left. A limitation of this study stemming from the use of Ad Fontes media bias scores is that, at the time, we measured only national news sources. This method does not consider the bias and reliability of local news sources or interpersonal communications among people’s social networks, which are also likely to be sources of misinformation. We also did not include in our analysis other types of sociodemographic variables that may strongly influence people’s beliefs about COVID-19 and vaccination, such as personal experience with COVID-19 (e.g., having lost a family member or friend because of the virus).

The second method, which we used for this finding, first takes the absolute value of each source’s media bias score before averaging across participants’ source selections. This way, we can see the average deviation from center for each participant, regardless of the direction of the bias. We refer to this score as the media bias score or the absolute value media bias score.
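The difference between the two scores can be illustrated with a minimal sketch. The outlet names and ratings below are hypothetical placeholders rather than Ad Fontes values, and Python is used only for illustration (the analysis reported here was run in R).

```python
from statistics import mean

# Hypothetical left-to-right bias ratings on the -42 (left) to +42 (right) scale.
# These values are illustrative only and are not actual Ad Fontes ratings.
ratings = {
    "balanced_a": -1.0,
    "balanced_b": 1.0,
    "hyper_left": -25.0,
    "hyper_right": 25.0,
}

def l2r_bias(selected):
    """Signed (left-to-right) average: opposing biases cancel out."""
    return mean(ratings[s] for s in selected)

def abs_bias(selected):
    """Absolute-value average: mean distance from center, ignoring direction."""
    return mean(abs(ratings[s]) for s in selected)

balanced_reader = ["balanced_a", "balanced_b"]
mixed_partisan_reader = ["hyper_left", "hyper_right"]

for label, picks in [("balanced", balanced_reader),
                     ("mixed partisan", mixed_partisan_reader)]:
    print(label, l2r_bias(picks), abs_bias(picks))
```

In this toy case both readers receive the same signed average, while the absolute-value average separates them, which is why the absolute-value score is the one used for comparing how far media diets sit from center.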

Results from ANOVA with Tukey correction suggest that those in our sample who tend to vote for Democratic candidates (n = 1,404) select less biased sources on average (M = 6.46, SD = 4.11) than those who typically vote for Republican candidates (n = 923, M = 9.99, SD = 7.06), p < .001, Cohen’s d = 0.71 (Hedges’ g = 0.65). Democrats also consumed less biased media on average than participants who tend to vote for third-party candidates (n = 133, M = 8.27, SD = 5.77, p = .003, Hedges’ g = 0.42). But Democrats did not differ significantly from those who said that they do not vote (n = 268, M = 6.12, SD = 5.03, p = .887, Hedges’ g = 0.08). Republicans consumed media that were more biased on average than both third-party participants (p = .007, Hedges’ g = 0.25) and participants who don’t vote (p < .001, Hedges’ g = 0.58). See Figure 1.
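For readers who want to relate the reported means, standard deviations, and group sizes to the effect sizes, the following is a minimal sketch of a pooled-SD standardized mean difference with the common small-sample correction. It assumes the textbook formula and is not necessarily the exact routine used in the analysis; because the inputs are rounded, the result lands near, but need not equal, the reported Hedges’ g.

```python
import math

def hedges_g(m1, sd1, n1, m2, sd2, n2):
    """Standardized mean difference with the usual small-sample correction."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df
    d = (m2 - m1) / math.sqrt(pooled_var)   # Cohen's d using a pooled SD
    correction = 1 - 3 / (4 * df - 1)       # approximate bias correction
    return d * correction

# Absolute-value media bias: Democratic voters vs. Republican voters,
# using the rounded summary statistics reported in the text.
g = hedges_g(6.46, 4.11, 1404, 9.99, 7.06, 923)
print(round(g, 3))
```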

One potential explanation for the finding that Republicans consume more biased media than Democrats is that someone who tends to select right-leaning media would consume more biased media by default because there are fewer options that are close to center. However, among the outlets that were selected by more than 10% of Republicans in the sample (as well as those selected by more than 10% of Democrats), most of the popular sources had bias scores near the center (e.g., ABC News and CBS News). The cable TV news outlets, by contrast, were frequently chosen and have higher bias ratings: Fox News (selected by 27% of Republicans in the sample) is rated as more biased (absolute value = 24.56) than both CNN (absolute value = 11.87, selected by 30% of Democrats) and MSNBC (absolute value = 20.87, selected by 20% of Democrats). Thus, the effect may be driven primarily by the differences in the ratings of these popular cable news outlets. For the top 25 most frequently selected sources, see Appendix A.

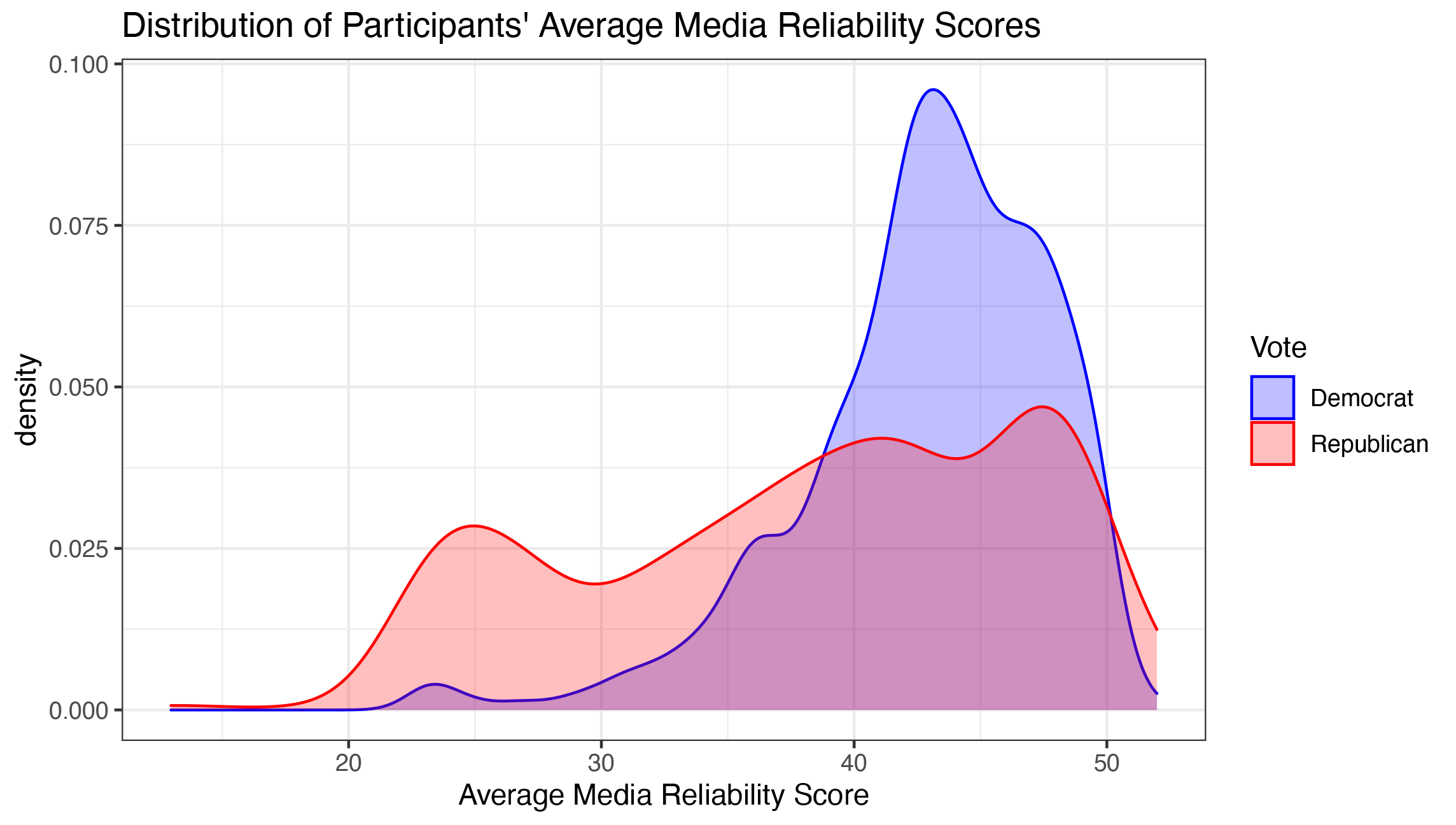

Finding 2: People who tend to vote for Democrats consume more reliable media on average than people who tend to vote for Republicans.

We also found that Democrats selected more reliable sources on average (M = 42.86, SD = 4.89) than Republicans (M = 38.12, SD = 8.63), p < .001, Cohen’s d = 0.68 (Hedges’ g = 0.71). Democrats also consumed more reliable media than third-party participants (M = 40.38, SD = 6.81, p < .001, Hedges’ g = 0.49). But Democrats did not differ significantly from those who said that they do not vote (M = 42.53, SD = 6.83, p = .952, Hedges’ g = 0.06). Republicans consumed media that were less reliable on average than both third-party participants (p = .003, Hedges’ g = 0.27) and participants who don’t vote (p < .001, Hedges’ g = 0.53). See Figure 2.

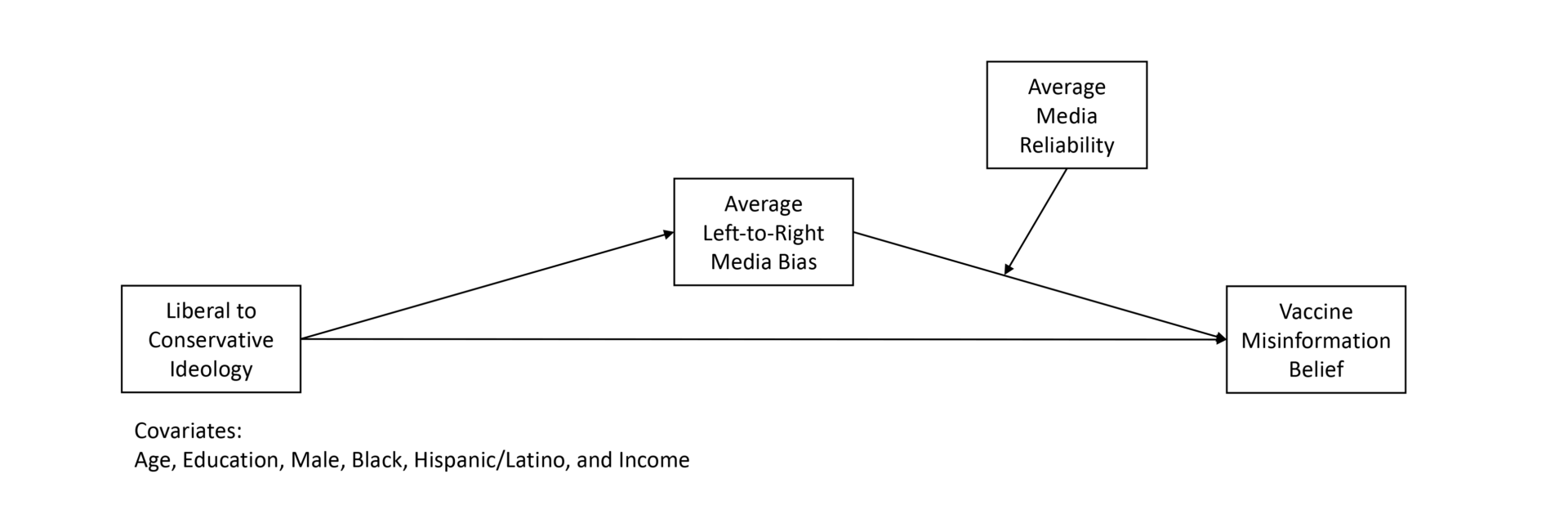

Finding 3: Average reliability scores moderate the relationship between left-to-right media bias scores and holding false beliefs about COVID-19 as well as holding false beliefs about vaccination.

We conducted a moderated mediation analysis using the PROCESS macro for R (Hayes, 2022). We hypothesized that participants’ left-to-right political ideology would predict the left-to-right bias of the media consumed (i.e., left-to-right media bias scores), and that their left-to-right media bias scores would predict holding false beliefs. Furthermore, we expected this latter relationship to be influenced by the reliability of the media consumed (i.e., media reliability scores). We estimated two models, one predicting holding false beliefs about COVID-19 and one predicting holding false beliefs about vaccination. See Figure 3.

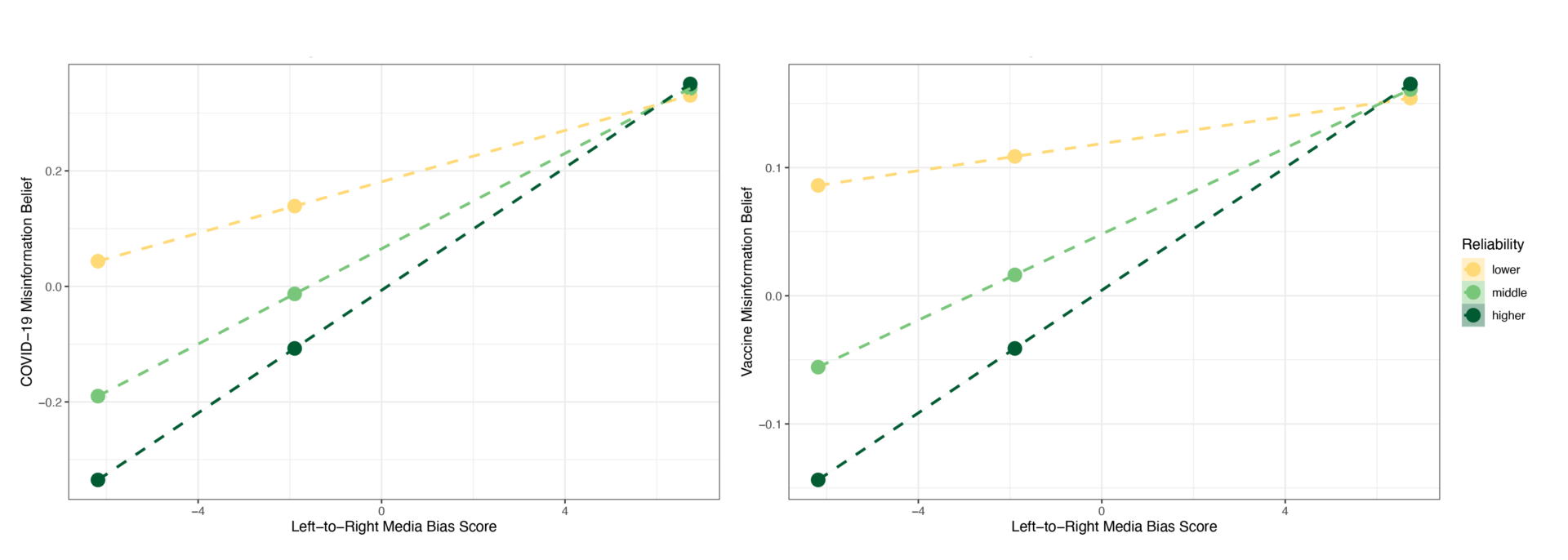

We found that ideology predicts left-to-right media bias scores (b = 2.88, p < .001, r = 0.43). However, though left-to-right media bias is positively correlated with holding misinformed beliefs about COVID-19 (r = 0.21, p < .001), the relationship between these variables in the model is negative (b = -0.06, p < .001). To understand this effect, we probed the significant interaction (b = 0.002, p < .001) using the Johnson-Neyman technique. We found that participants who consume stronger right-leaning media appear to hold more misinformed COVID-19 beliefs regardless of the average reliability of their media selections, consistent with Jamieson and Albarracín (2020). In contrast, participants who consume stronger left-leaning media vary in the strength of their misinformed COVID-19 beliefs, with those consuming more reliable media holding less misinformed beliefs about COVID-19 and those consuming less reliable media holding more misinformed beliefs. See Appendix B.

The results for predicting misinformed beliefs about vaccination echo what we saw when predicting misinformed beliefs about COVID-19: media reliability had a greater impact on those who have stronger left-leaning media bias scores. Among participants with similar left-leaning media bias scores, those who consumed less reliable media on average held greater misinformed beliefs than those who consumed more reliable media on average. Among those with similar right-leaning media bias scores, participants did not appear to differ significantly based on reliability. See Figure 4 and Appendix C.

Finding 4: Participant left-to-right political ideology indirectly influences misinformation belief through left-to-right media bias score and is contingent on media reliability.

Political ideology directly and indirectly influences belief in misinformation. At varying levels of outlet reliability, political ideology influences belief in misinformation through the selection of biased media outlets. Our moderated mediation models also showed that these indirect effects are conditional; for both models, as media reliability scores increase, so do the strengths of the indirect effects. See Table 1. The complete results of both moderated mediation models are available in Appendix B and Appendix C.

| Reliability scores | Ideo -> L2R Media Bias -> COVID-19: Effect | 95% CI lower | 95% CI upper | Ideo -> L2R Media Bias -> Vaccination: Effect | 95% CI lower | 95% CI upper |
|---|---|---|---|---|---|---|
| Lower (W = 34.49) | 0.06 | 0.05 | 0.08 | 0.02 | 0.00 | 0.03 |
| Middle (W = 42.60) | 0.12 | 0.09 | 0.15 | 0.05 | 0.02 | 0.07 |
| Higher (W = 47.64) | 0.15 | 0.12 | 0.19 | 0.07 | 0.04 | 0.10 |
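To make the idea of a conditional indirect effect concrete, here is a minimal sketch that assumes the standard second-stage moderated mediation form, in which the moderator W (media reliability) changes the path from the mediator (L2R media bias) to the outcome. The function and parameter names are illustrative, and the coefficients are the rounded COVID-19 model values reported under Finding 3, so the sketch is expected to reproduce only the upward pattern across reliability levels and not the exact Table 1 estimates.

```python
def conditional_indirect_effect(a, b1, b3, w):
    """Indirect effect of X on Y through M when W moderates the M -> Y path.

    a  : effect of X (ideology) on M (left-to-right media bias)
    b1 : effect of M on Y (false beliefs) when W is zero
    b3 : coefficient of the M x W interaction
    w  : value of the moderator W (media reliability)
    """
    return a * (b1 + b3 * w)

# Rounded coefficients from the COVID-19 model (a = 2.88, b1 = -0.06, b3 = 0.002).
# The interaction is rounded to one significant digit, which is coarse, so these
# values illustrate the direction of the pattern only.
levels = (34.49, 42.60, 47.64)
effects = [conditional_indirect_effect(2.88, -0.06, 0.002, w) for w in levels]
for w, effect in zip(levels, effects):
    print(w, round(effect, 3))
```

Under this form, a positive interaction coefficient means the indirect effect grows as reliability increases, matching the direction seen in the table.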

Methods

We conducted a secondary analysis using data collected from a national sample of 3,276 individuals recruited using the Lucid Theorem tool. Our sample was 58.04% female, 40.85% male, and 0.75% other genders (e.g., non-binary, trans male; n = 23); 11 participants declined to report their gender. The average age of participants was 48.09, and 34% of participants reported having earned at least a college degree (n = 1,044). Ten percent of participants identified as Black, 9% identified as Hispanic/Latino/a/x, and 5% identified as Asian or Asian American. The sample differs from the sociodemographic breakdowns of a nationally representative sample based on values from the U.S. census: our sample was older, had more females, and had fewer people who identify as Black or Hispanic/Latino. Thus, we entered the sociodemographic variables into the PROCESS models as covariates. See Appendix D.

Data for the current study was originally collected as part of the NSF-funded project COLLABORATIVE RESEARCH: RAPID: Influencing Young Adults’ Science Engagement and Learning with COVID-19 Media Coverage. The survey was conducted between January 20, 2021, and February 2, 2021, approximately one year into the COVID-19 pandemic. In the online survey, participants were asked what they knew and thought about topics related to germs (viruses and bacteria), COVID-19, and vaccines. Consenting participants were asked to what extent statements about viruses, bacteria, COVID-19, and vaccinations were likely to be true on a 4-point scale. Then, participants were asked to select—from a list of 129—the news sources that they used to get information about COVID-19. Lastly, participants answered a series of standard demographic questions. Participants who completed the survey were compensated by Lucid consistent with their survey panel agreements.

Our first outcome variable for this study is an index of false beliefs about COVID-19. We used the 18 inaccurate statements about COVID-19 that were asked in the survey, such as hydroxychloroquine has been scientifically proven to be effective in preventing and/or treating COVID-19; there is a cure for COVID-19 that is being withheld from the public; disinfectants can safely be swallowed to treat COVID-19; and COVID-19 is less dangerous than the flu. Participants were asked whether each statement was definitely true (4), likely true (3), likely false (2), or definitely false (1). Participants’ responses to these items were combined into an index and evaluated using item response theory (grm model, Samejima, 1969; for R package, see Rizopoulos, 2006). Participants’ scores ranged from –1.84 to 3.6 (M = 0.02, SD = 0.91).
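As an illustration of how a graded response model links a latent trait to a 4-point item, the sketch below computes category probabilities for a single item. The item parameters are hypothetical and chosen only for demonstration; the index described above was estimated in R with the ltm package, not with this code.

```python
import math

def grm_category_probs(theta, discrimination, thresholds):
    """Category probabilities for one item under a graded response model.

    theta          : respondent's latent trait (here, tendency to hold false beliefs)
    discrimination : item slope
    thresholds     : increasing boundary locations, one fewer than the categories
    """
    def logistic(x):
        return 1.0 / (1.0 + math.exp(-x))

    # Cumulative probabilities P(response >= category k), padded with 1 and 0.
    cumulative = ([1.0]
                  + [logistic(discrimination * (theta - b)) for b in thresholds]
                  + [0.0])
    # Each category's probability is the difference of adjacent cumulative curves.
    return [cumulative[k] - cumulative[k + 1] for k in range(len(cumulative) - 1)]

# Hypothetical parameters for a 4-point item
# (1 = definitely false, 2 = likely false, 3 = likely true, 4 = definitely true).
probs = grm_category_probs(theta=0.0, discrimination=1.5, thresholds=[-1.0, 0.5, 2.0])
print([round(p, 3) for p in probs])
```

A respondent with a higher trait value places more probability on the "definitely true" category, which is how endorsing false statements translates into a higher index score.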

Our second outcome variable for this study is an index of false beliefs about vaccinations. We used the six inaccurate statements about vaccines, such as vaccinations work by giving you a mild case of the disease; some childhood vaccinations can cause autism; and all vaccines are made from living viruses. As with the COVID-19 items, participants were asked whether each statement was definitely true (4), likely true (3), likely false (2), or definitely false (1). Participants’ responses to these items were combined into an index and evaluated using item response theory. Participants’ scores ranged from -1.91 to 2.24 (M = 0.01, SD = 0.86).

To measure average media reliability and average left-to-right media bias, we provided participants with a list of 129 news outlets for which Ad Fontes had posted reliability and left-right bias ratings at the time the data were collected (Ad Fontes Media, 2023). Each listed outlet’s left-to-right bias score could range from –42 to +42, with stronger negative scores indicating stronger politically left bias and stronger positive scores indicating stronger politically right bias. Reliability scores, on the other hand, range from 0 to 64, with lower scores reflecting content that may contain inaccurate or fabricated information and higher scores indicating factual reporting.

As stated earlier, survey participants were asked to select all the sources from which they had gotten information about COVID-19. If participants selected a source, they had a “1” in that variable column. If they did not select the source, they had an “NA” (i.e., the way R understands missing data). Then, we recoded these data, creating two columns for each news source: one for the source’s reliability score and one for the source’s left-to-right bias score. In R, we wrote a script to replace the “1” with the Ad Fontes reliability rating for that source in the reliability column and the Ad Fontes left-to-right bias rating for that source in the bias column. Next, we averaged the source reliability scores across each participant’s set of selected sources, and we did the same for the left-to-right bias scores. One limitation of this method is that we did not weight the scores by frequency of each source’s use; we were concerned that asking about frequency would make the survey too long, given that participants could have selected up to 129 sources. That said, the average number of sources selected was 4 (median = 3), and the vast majority of participants (95%) selected fewer than 16 sources. Participants’ average source reliability scores ranged from 7.41 to 51.98 (M = 41.05 of 64, SD = 7.07), and their average left-to-right media bias scores ranged from -23.46 to 33.63 (M = -0.11, SD = 8.42).
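The recoding and averaging steps described above can be sketched as follows. This is an illustrative Python version under stated assumptions, not the R script used for the study: the outlet names and ratings are hypothetical placeholders, and `None` stands in for R's `NA`.

```python
from statistics import mean

# Hypothetical ratings: outlet -> (reliability on 0 to 64, left-to-right bias on -42 to +42).
# Placeholder values, not actual Ad Fontes ratings.
RATINGS = {
    "outlet_a": (45.0, -3.0),
    "outlet_b": (30.0, 18.0),
    "outlet_c": (48.0, 1.0),
}

def score_participant(selections):
    """Average reliability and L2R bias over the outlets a participant selected.

    selections maps outlet -> 1 if selected, None if not selected (the NA case).
    """
    chosen = [outlet for outlet, flag in selections.items() if flag == 1]
    if not chosen:
        return None, None  # no sources selected, so no average is defined
    reliability = mean(RATINGS[o][0] for o in chosen)
    l2r_bias = mean(RATINGS[o][1] for o in chosen)
    return reliability, l2r_bias

participant = {"outlet_a": 1, "outlet_b": 1, "outlet_c": None}
print(score_participant(participant))
```

Each selected outlet contributes equally to the average, which mirrors the unweighted approach noted as a limitation.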

It is worth noting that across the 129 sources included in this study that were rated by Ad Fontes at the time we collected data, the average bias rating was slightly left-leaning at -1.2 (Median = -2.44, SD = 14.99, skew = 0.33, kurtosis = -0.6) and the average reliability rating was 35.13 (of 64, Median = 36, SD = 10.96). A total of 83 sources fell on the left side of center (bias = 0), whereas 46 sources fell on the right side. But, even though there are more left-leaning sources than right-leaning sources, the average bias (absolute value) of the left-leaning sources (M = 10.2, SD = 8.45) is lower than the average bias of the right-leaning sources (M = 15.03, SD = 9.51, t(84.20) = -2.88, p = .005, Hedges’ g = 0.55).
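The fractional degrees of freedom reported above are consistent with an unequal-variance (Welch) t-test, although the text does not name the test, so that reading is an assumption. A minimal sketch computing the statistic from the rounded summary values follows; with rounded inputs it should land close to, but not necessarily exactly on, the reported figures.

```python
import math

def welch_t(m1, sd1, n1, m2, sd2, n2):
    """Welch's unequal-variance t statistic and Welch-Satterthwaite degrees of freedom."""
    v1, v2 = sd1**2 / n1, sd2**2 / n2
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df

# Absolute bias of left-leaning sources (n = 83) vs. right-leaning sources (n = 46),
# using the rounded means and standard deviations reported in the text.
t, df = welch_t(10.2, 8.45, 83, 15.03, 9.51, 46)
print(round(t, 2), round(df, 2))
```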

Bibliography

Ad Fontes Media. (2023). Methodology. https://adfontesmedia.com/how-ad-fontes-ranks-news-sources/

Arendt, F., Steindl, N., & Kümpel, A. (2016). Implicit and explicit attitudes as predictors of gatekeeping, selective exposure, and news sharing: Testing a general model of media-related selection. Journal of Communication, 66(5), 717–740. https://doi.org/10.1111/jcom.12256

Bridgman, A., Owen, T., Zhilin, O., Merkley, E., Ruths, D., Loewen, P. J., & Teichmann, L. (2020). The causes and consequences of COVID-19 misperceptions: Understanding the role of news and social media. Harvard Kennedy School (HKS) Misinformation Review, 1(3). https://doi.org/10.37016/mr-2020-028

Christie, A., Brooks, J. T., Hicks, L. A., Sauber-Schatz, E. K., Yoder, J. S., Honein, M. A., & CDC COVID-19 Response Team. (2021). Guidance for implementing COVID-19 prevention strategies in the context of varying community transmission levels and vaccination coverage. MMWR Morbidity and Mortality Weekly Report, 70(30), 1044–1047. https://doi.org/10.15585/mmwr.mm7030e2

Festinger, L. (1957). A theory of cognitive dissonance. Stanford University Press.

Hammad, A. M., Hamed, R., Al-Qerem, W., Bandar, A., & Hall, F. S. (2021). Optimism bias, pessimism bias, magical beliefs, and conspiracy theory beliefs related to COVID-19 among the Jordanian population. American Journal of Tropical Medicine and Hygiene, 104(5), 1661–1671. https://doi.org/10.4269/ajtmh.20-1412

Hayes, A. F. (2022). Introduction to mediation, moderation, and conditional process analysis: A regression-based approach (3rd ed.). The Guilford Press.

Hua, Y., Jiang, H., Lin, S., Yang, J., Plasek, J. M., Bates, D. W., & Zhou, L. (2022). Using Twitter data to understand public perceptions of approved versus off-label use for COVID-19-related medications. Journal of the American Medical Informatics Association, 29(10), 1668–1678. https://doi.org/10.1093/jamia/ocac114

Jamieson, K. H., & Albarracín, D. (2020). The relation between media consumption and misinformation at the outset of the SARS-COV-2 pandemic in the US. Harvard Kennedy School (HKS) Misinformation Review, 1(3). https://doi.org/10.37016/mr-2020-012

Kahan, D. M. (2017). Misconceptions, misinformation, and the logic of identity-protective cognition (Cultural Cognition Project Working Paper No. 164). SSRN. http://dx.doi.org/10.2139/ssrn.2973067

Kahan, D. M., & Landrum, A. R. (2017). A tale of two vaccines—and their science communication environments. In K. H. Jamieson, D. M. Kahan, & D. A. Scheufele (Eds.), The Oxford handbook of the science of science communication (pp. 165–172). Oxford University Press. https://doi.org/10.1093/oxfordhb/9780190497620.013.18

Lin, H., Lasser, J., Lewandowsky, S., Cole, R., Gully, A., Rand, D. G., & Pennycook, G. (2023). High level of correspondence across different news domain quality rating sets. PNAS Nexus, 2(9), 286. https://doi.org/10.1093/pnasnexus/pgad286

Mathews, N. (2022). Life in a news desert: The perceived impact of a newspaper closure on community members. Journalism, 23(6), 1250–1265. https://doi.org/10.1177/1464884920957885

Mena, P., Barbe, D., & Chan-Olmsted, S. (2020). Misinformation on Instagram: The impact of trusted endorsements on message credibility. Social Media + Society, 6(2). https://doi.org/10.1177/2056305120935102

Mihailidis, P. (2022). News literacy practice in a culture of infodemic. In S. Allan (Ed.), The Routledge companion to news and journalism (2nd ed., pp. 388–397). Routledge. https://doi.org/10.4324/9781003174790

Moran, M. B., Lucas, M., Everhart, K., Morgan, A., & Prickett, E. (2016). What makes anti-vaccine websites persuasive? A content analysis of techniques used by anti-vaccine websites to engender anti-vaccine sentiment. Journal of Communication in Healthcare, 9(3), 151–163. https://doi.org/10.1080/17538068.2016.1235531

Motta, M., Callaghan, T., Sylvester, S., & Lunz-Trujillo, K. (2021). Identifying the prevalence, correlates, and policy consequences of anti-vaccine social identity. Politics, Groups, and Identities, 11(1), 108–122. https://doi.org/10.1080/21565503.2021.1932528

Otero, V. (2021). White paper: Multi-analyst content analysis methodology September 2021 [White paper]. Ad Fontes Media. https://adfontesmedia.com/white-paper-2021/

Peterson, E., & Iyengar, S. (2022). Partisan reasoning in a high stakes environment: Assessing partisan informational gaps on COVID-19. Harvard Kennedy School (HKS) Misinformation Review, 3(2). https://doi.org/10.37016/mr-2020-96

Rizopoulos, D. (2006). ltm: An R package for latent variable modeling and item response theory analyses. Journal of Statistical Software, 17(5), 1–25. https://doi.org/10.18637/jss.v017.i05

Samejima, F. (1969). Estimation of latent ability using a response pattern of graded scores. Psychometrika, 34(S1), 1–97. https://doi.org/10.1007/bf03372160

Siwakoti, S., Yadav, K., Bariletto, N., Zanotti, L., Erdogdu, U., & Shapiro, J. N. (2021). How COVID drove the evolution of fact-checking. Harvard Kennedy School (HKS) Misinformation Review, 2(3). https://doi.org/10.37016/mr-2020-69

Šrol, J., Ballová Mikušková, E., & Čavojová, V. (2021). When we are worried, what are we thinking? Anxiety, lack of control, and conspiracy beliefs amidst the COVID-19 pandemic. Applied Cognitive Psychology, 35(3), 720–729. https://doi.org/10.1002/acp.3798

Teovanović, P., Lukić, P., Zupan, Z., Lazić, A., Ninković, M., & Žeželj, I. (2020). Irrational beliefs differentially predict adherence to guidelines and pseudoscientific practices during the COVID-19 pandemic. Applied Cognitive Psychology, 35(2), 486–496. https://doi.org/10.1002/acp.3770

Thompson, M. G., Stenehjem, D., Grannis, S., Ball, S. W., Naleway, A. L., Ong, T. C., DeSilva, M. B., Natarajan, K., Bozio, C. H., Lewis, N., Dascomb, K., Dixon, B. E., Birch, R. J., Irving, S. A., Rao, S., Kharbanda, E., Han, J., Reynolds, S., Goddard, K. … & Klein, N. P. (2021). Effectiveness of COVID-19 vaccines in ambulatory and inpatient care settings. The New England Journal of Medicine, 385(15), 1355–1371. https://doi.org/10.1056/NEJMoa2110362

Funding

This research was funded by the National Science Foundation as part of the project COLLABORATIVE RESEARCH: RAPID: Influencing Young Adults’ Science Engagement and Learning with COVID-19 Media Coverage (DRL #2028473 to A. R. Landrum and DRL #2028469 to S. E. McCann and S. Eris).

Competing Interests

The authors have no competing interests to declare.

Ethics

The research protocol employed for the larger study of which these data were a part was approved as exempt research by the institutional review board of Texas Tech University on 6/19/2018. All study participants provided informed consent. Gender and race/ethnicity (among other demographic variables) were collected to describe the study sample and to control for demographic factors in the analyses. Researchers used the race and ethnicity categories outlined by the U.S. Census. In addition, an “other” option was provided with the ability to explain or describe additional categorizations in a text box. Participants were asked to select all that apply. For gender, researchers provided participants with four options: “Male,” “Female,” “Diverse (e.g., trans, nonbinary, etc.; please specify),” and “I choose not to answer.” For the “Diverse…” option, participants were provided with a text box to self-identify.

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

All materials needed to replicate this study are available via OSF: https://osf.io/wqxfy/. Simplified data and code for reproducing the analyses are also available via the Harvard Dataverse: https://doi.org/10.7910/DVN/FCTPPM.

Acknowledgements

The authors would like to express their gratitude to Dr. Kelsi Opat (Missouri State University), Sevda Eris, and Sue Ellen McCann (KQED public media) for their input on the original survey design. We would also like to thank Dr. Melissa Gotlieb for her feedback on the analysis.