Peer Reviewed

When knowing more means doing less: Algorithmic knowledge and digital (dis)engagement among young adults

What if knowing how social media algorithms work doesn’t make you a more responsible digital citizen, but a more cynical one? A new survey of U.S. young adults finds that while higher algorithmic awareness and knowledge are linked to greater concerns about misinformation and filter bubbles, individuals with greater algorithmic awareness and knowledge are less likely to correct misinformation or engage with opposing viewpoints on social media—possibly reflecting limited algorithmic agency. The findings challenge common assumptions about algorithmic literacy and highlight the need for deeper educational and policy interventions that go beyond simply teaching how algorithms function.

Research Questions

- How are young adults’ algorithmic awareness and knowledge connected to their attitudes and behaviors regarding social media content?

- What factors predict young adults’ algorithmic awareness and knowledge?

- What is young adults’ experience of receiving algorithmic literacy education during secondary school?

Essay Summary

- This study examined the level of algorithmic awareness and knowledge among young adults in the U.S. (ages 18–25, N = 348) and its relationship to attitudes and behaviors related to social media content.

- Higher algorithmic awareness and knowledge are associated with greater concerns about misinformation and filter bubbles.

- Paradoxically, individuals with greater algorithmic awareness and knowledge are less likely to correct misinformation or engage with opposing viewpoints on social media.

- Frequent social media use and conservative political orientation are linked to lower levels of algorithmic awareness and knowledge.

- Young adults generally report receiving little formal education about social media algorithms during secondary schooling.

- These findings suggest that algorithmic awareness and knowledge alone may not promote constructive digital engagement. They underscore the need for educational and policy strategies that go beyond conveying knowledge to also support psychological and behavioral engagement with digital content.

Implications

Young adults—defined as individuals in a developmental period between the ages of 18 and 25 (Higley, 2019)—constitute a unique demographic among social media users, as they are the first generation to grow up within a profoundly digitized society (Shannon et al., 2022). For many young adults, social media platforms serve as their primary information source: in 2024, 84% of young adults in the United States reported regular use of at least one social media platform (Gottfried, 2024).

However, such extensive engagement with social media comes with significant costs. What users see on social media is filtered and curated by algorithms—automated systems that analyze individual user behavior, such as clicks, likes, shares, and viewing history, along with the activity of similar users, to predict preferences and generate personalized content feeds. This system, while efficient at capturing user attention, can also create fertile ground for misinformation, filter bubbles, and polarization. Algorithms tend to prioritize content that is engaging, emotionally charged, or controversial, regardless of its accuracy, because such content is more likely to keep users on the platform (Milli et al., 2023). As a result, misinformation can spread rapidly, especially when it aligns with a user’s existing beliefs or fears. Furthermore, by continuously reinforcing similar viewpoints and filtering out dissenting perspectives, algorithms generate filter bubbles, narrow information ecosystems that limit exposure to diverse opinions or factual corrections (Pariser, 2011). This dynamic is particularly concerning for young people, whose identities and worldviews are still developing and who may lack the literacy skills necessary to critically evaluate the information they encounter.

In this light, scholars have increasingly emphasized the importance of algorithmic literacy, defined as “being aware of the use of algorithms in online applications, platforms, and services, knowing how algorithms work, being able to critically evaluate algorithmic decision making as well as having the skills to cope with or even influence algorithmic operations” (Dogruel et al., 2022, p. 4), to help young people better navigate digital media environments (Brodsky et al., 2020; Head et al., 2020; Powers, 2017). Nevertheless, there is little consensus on how such literacy initiatives should be designed and implemented. First, many scholars emphasize mainly the cognitive aspect, such as algorithmic awareness (being aware of the use of algorithms in online applications) or algorithmic knowledge (understanding how algorithms work). However, since algorithmic literacy is a multidimensional construct encompassing cognitive, affective, and behavioral components (Oeldorf-Hirsch & Neubaum, 2023), effective literacy interventions must address all three dimensions in an integrated manner. Second, the conditions under which algorithmic awareness and knowledge promote or inhibit critical thinking and action among social media users remain underexplored.

Against this backdrop, this paper advances the discussion on algorithmic literacy among young adults across multiple fronts. First, by comprehensively examining how young adults’ algorithmic awareness and knowledge are connected to their attitudes toward social media content and behaviors when using social media, this study sheds light on the path leading (or not leading) to empowered and critical use of social media. Notably, although algorithmic awareness and knowledge are often considered a precursor to more critical and reflective engagement with social media content (Chung & Wihbey, 2024; Oeldorf-Hirsch & Neubaum, 2023), the findings suggest a more nuanced dynamic. While young adults with higher levels of algorithmic awareness and knowledge were more likely to recognize the limitations of the algorithmically curated media environment, such as exposure to misinformation and the risk of filter bubbles, this critical assessment did not translate into constructive behaviors aimed at correcting misinformation or seeking diverse perspectives. Rather, greater algorithmic awareness and knowledge were associated with weaker intentions to take corrective actions against misinformation and to engage in perspective-taking behaviors aimed at breaking out of filter bubbles.

This discrepancy between expressed concern and protective behavior parallels the well-documented privacy paradox, where users express concern about their digital privacy but fail to engage in protective behaviors (Baruh et al., 2017; Kokolakis, 2017). To explain this, Hoffmann et al. (2016) introduced the concept of privacy cynicism: a state of resignation or disillusionment toward data privacy, rooted in information asymmetry and a perceived lack of agency. In a similar vein, this study proposes that algorithmic cynicism may emerge as a psychological coping mechanism in response to increasing concerns about social media content. As users become more aware of how algorithmic systems shape their social media experience, often without transparency or accountability, they may come to believe that individual efforts to resist or correct algorithmic influence are ineffective, leading to inaction rather than engagement. For example, although it may seem intuitive that a perceived risk of being in a filter bubble (i.e., lower perceived exposure to diverse viewpoints) would encourage more active seeking of alternative perspectives, my findings suggest that this perception may instead lead to a sense of helplessness, reducing motivation to seek out differing views on social media.

Extending this reasoning, algorithmic cynicism could be considered as part of algorithmic literacy. Algorithmic literacy conceptualized in this study is a comprehensive model encompassing algorithmic awareness and knowledge (cognitive dimension), which in turn influence attitudes (affective dimension) and behaviors (behavioral dimension). Within this framework, algorithmic cynicism may emerge as an affective response—feeling increasingly powerless in the face of social media algorithms and choosing not to waste energy fighting the proverbial algorithmic windmill. While the current data do not allow us to confirm whether algorithmic cynicism is truly part of algorithmic literacy since it was not directly measured in this study, future research should consider this possibility and explore a broader conceptualization of algorithmic literacy. Moreover, if algorithmic cynicism (i.e., the belief that individual actions cannot alter larger trends) has become embedded within algorithmic literacy, it is crucial to investigate strategies to overcome this mindset.

Another important finding of this study concerns the relationship between social media use and algorithmic awareness and knowledge. Contrary to previous findings that suggest greater social media use is positively associated with higher algorithmic awareness and knowledge (e.g., Chung & Wihbey, 2024; Min, 2019), this study reveals the opposite: more frequent social media use was linked to lower levels of algorithmic awareness and knowledge. This finding should be interpreted with attention to the unique characteristics of the sample (ages 18–25). As a generation that has grown up immersed in social media, these young users may develop a kind of technological familiarity that discourages critical reflection. For them, algorithms are often taken for granted—seen as natural parts of the digital experience—leading users to engage passively with content rather than questioning how or why it is presented. This tendency may be partly explained by the illusion of explanatory depth (Rozenblit & Keil, 2002), wherein individuals overestimate their understanding of complex systems. When combined with a sense of intellectual sufficiency (Fisher et al., 2015), this overconfidence can reduce curiosity and suppress motivation to learn about influential technologies such as social media algorithms. As a result, heavy social media users may paradoxically possess a diminished practical understanding of the very systems that shape their online experiences. Moreover, for many young users, social media serves primarily as a space for entertainment and social interaction (Auxier & Anderson, 2021), rather than as a site for critical engagement with technological infrastructures. Their focus tends to be on consuming engaging content rather than understanding the mechanisms behind its delivery. 
When considered alongside the additional finding that young adults report limited formal education about social media algorithms during their secondary schooling, this underscores the urgent need for systematic integration of topics such as algorithmic content curation and algorithmic ethics into formal educational curricula.

The findings in this study carry important implications for social media platforms, educational institutions, governments, and civil society seeking to empower young adults to navigate algorithm-driven social media environments more effectively and responsibly. Younger generations have often been regarded as relatively algorithmically aware and knowledgeable (e.g., Chung & Wihbey, 2024; Cotter & Reisdorf, 2020). However, they are also the first generation to have grown up fully immersed in social media, exposed to its harms from an early age, yet without receiving adequate education about how algorithms shape their digital experiences. In this light, assumptions about their digital savviness may obscure important efforts to prepare them to effectively respond to algorithmic influence. Furthermore, as demonstrated in this study, it is critical to recognize that even among those with higher levels of algorithmic awareness and knowledge, such cognitive understanding alone may not be sufficient to prompt action against the negative effects of algorithmically curated content. In particular, whether this inaction stems from a lack of awareness and knowledge or from a growing sense of algorithmic cynicism has significant implications for both practice and policy. Understanding the drivers of low engagement in critical media consumption behaviors among young users is essential for designing effective interventions. While improving algorithmic awareness and knowledge is important, it must be accompanied by efforts to address the psychological and structural barriers that undermine a sense of digital agency. Specifically, educational efforts should actively foster a belief in young users’ capacity to act, empowering them to critically engage with algorithmic systems in their daily digital lives and to recognize that their actions can meaningfully shape their online experiences. 
For example, given the possibility of algorithmic cynicism suggested by this study, encouraging young people to seek information beyond social media, such as from public service broadcasters (including international outlets like the BBC) or newspapers, may offer an effective strategy to address this challenge. My finding that perceived exposure to diverse viewpoints strongly correlates with perspective taking suggests that increasing such exposure could yield significant benefits.

Collective efforts from government(s), media, and educational institutions are also indispensable. While individual agency matters, it is often undermined by the immense, unregulated power of tech giants. Stricter federal and state regulations on these corporations and their algorithmic practices are necessary to mitigate their negative societal effects. Without this structural oversight, the potential for meaningful change remains limited. The media—both traditional and digital—also play a vital role in demystifying algorithms. By clearly explaining what algorithms are, how they shape the information we see (and don’t see), and what we can do about it, the media can foster greater public understanding and agency. Finally, higher education must fill the gap left by secondary schools, which have largely failed to teach algorithmic literacy. Colleges and universities should integrate this subject into general education curricula, equipping students with the critical tools needed to navigate and analyze today’s complex digital landscape.

Some limitations of this study warrant consideration for future research. First, my measurement of algorithmic awareness and knowledge focused specifically on content recommendation algorithms for personal feeds. However, respondents may have varying levels of familiarity with other types of algorithms (e.g., facial recognition, automated moderation, or targeted advertising), which means my findings may not fully capture the breadth and complexity of users’ algorithmic awareness and knowledge. Future research would benefit from expanding the scope of measurement to encompass a wider range of algorithmic functions and contexts. Second, while the proposed mediation model is theoretically grounded in a directional process (i.e., algorithmic awareness/knowledge influencing perceptions, which in turn affect corrective action and perspective taking), the cross-sectional design of this study does not allow for causal inference. Thus, although the model is intended to imply a causal process at the theoretical level, I explicitly recognize a gap between this theoretical framing and the empirical limitations of the data. In particular, I cannot empirically verify the key assumption of sequential ignorability that underlies causal mediation analysis, and thus the reported path coefficients and indirect effects should be interpreted as correlational rather than causal. At the same time, this study relied on survey data from a crowdwork platform (Prolific), which introduces additional limitations. Mediational claims are especially fragile in observational research, as the assumption of no omitted variables rarely holds in convenience samples. Moreover, my analyses were exploratory and not pre-registered, further underscoring the need for caution in interpretation. Third, although I employed a structural equation model (SEM) to comprehensively examine the relationships among variables, I acknowledge that this approach does not establish definitive causal pathways. 
As Rohrer et al. (2022) argue, correlational path models such as the one estimated here primarily reveal patterns of association and should not be overinterpreted as evidence of causal mechanisms. Taken together, these considerations suggest that the SEM results should be viewed as exploratory evidence of associations consistent with the theoretical framework, rather than as definitive evidence of causal mechanisms. Future research employing longitudinal or experimental designs, and potentially sensitivity analyses, would be valuable in testing the plausibility of causal assumptions more rigorously.

Overall, however, this study adds important nuance to ongoing discussions about how to strengthen young adults’ capacity to navigate algorithm-driven social media environments. The emerging frontier of algorithmic literacy research may focus on psychological constructs highlighted in this study, such as algorithmic cynicism and intellectual sufficiency. Future research should explore these areas further, deepening our understanding of the cognitive, psychological, and structural barriers that influence young users’ engagement with algorithmic systems. Such insights can inform the development of more effective educational, technological, and policy interventions aimed at fostering critical and empowered digital citizenship.

Findings

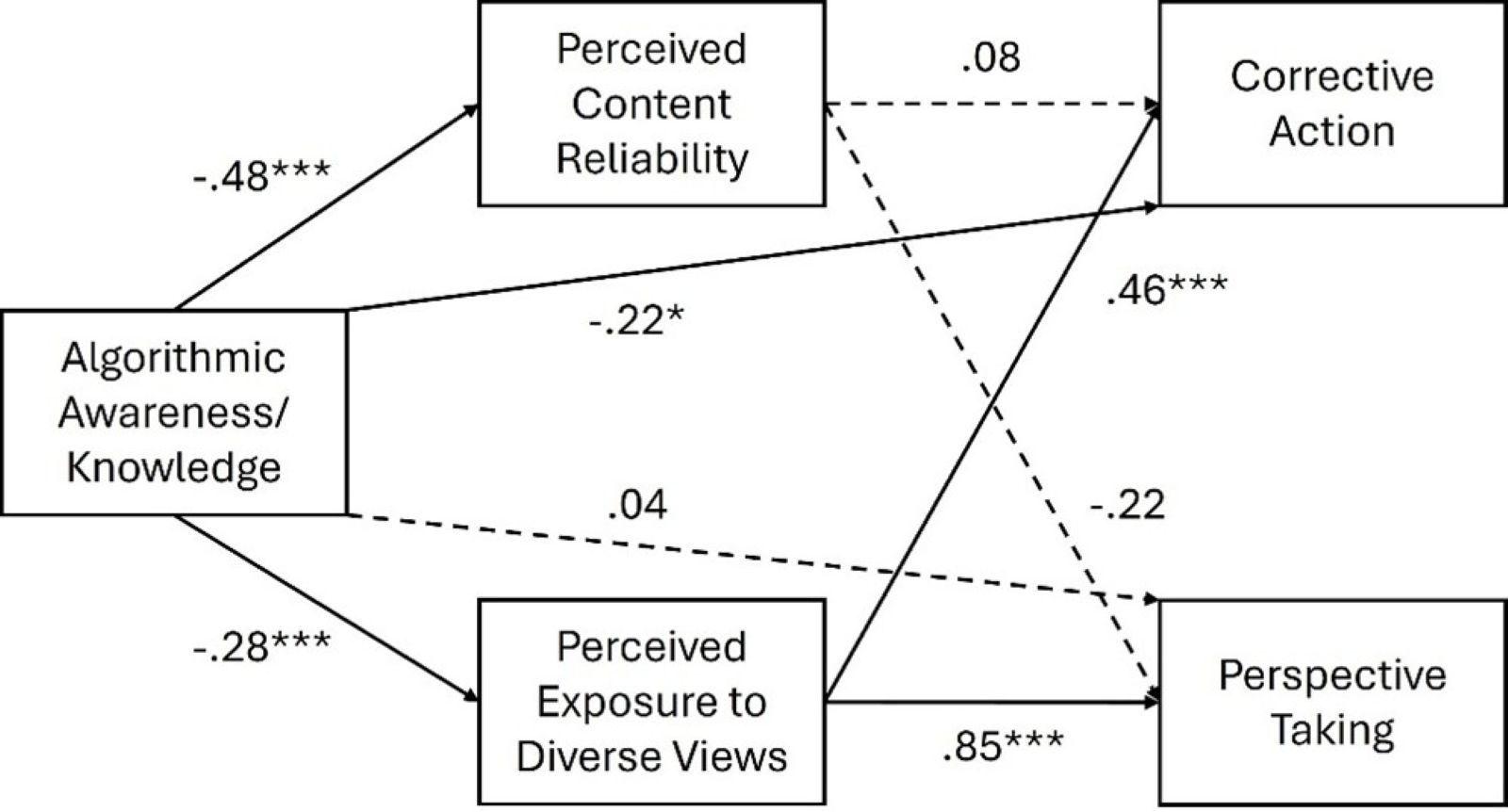

To examine the relationships between young adults’ algorithmic awareness and knowledge, attitudes toward social media content, and intentions to engage in critical media consumption behaviors, I conducted bivariate correlation, ordinary least squares (OLS) regression, and structural equation model (SEM) analyses. Correlations (see Table A1 in Appendix A) showed that algorithmic awareness and knowledge were negatively associated with perceived content reliability (r = –.40, p < .01), perceived exposure to diverse views (r = –.20, p < .01), and corrective action (r = –.23, p < .01), but not with perspective taking (r = –.08, n.s.). Consistent with these patterns, OLS regressions (see Tables A2–A5 in Appendix A) indicated that algorithmic awareness and knowledge significantly predicted lower perceptions of content reliability (β = –.438, p < .001) and corrective action (β = –.172, p < .05), but not perceived exposure to diverse views or perspective taking. While OLS provides a foundational view of associations, it treats outcomes separately and assumes no measurement error. SEM, in contrast, models latent constructs and multiple paths simultaneously, offering a more rigorous test of my theoretical model. Accordingly, I rely on SEM as the primary basis for interpretation, while using correlations and OLS as complementary evidence.
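As a rough illustration of the first two analysis steps, the sketch below computes a Pearson correlation and the standardized slope from a single-predictor regression. The data are hypothetical toy scores, not the study data, and the function names are illustrative. With only one predictor the standardized slope equals r; once further predictors enter a model, the standardized coefficient will generally differ from the bivariate correlation.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)


def standardized_slope(x, y):
    """OLS slope after z-scoring both variables (single predictor only)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in x) / (n - 1))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in y) / (n - 1))
    zx = [(a - mean_x) / sd_x for a in x]
    zy = [(b - mean_y) / sd_y for b in y]
    # z-scores have mean zero, so the slope is sum(zx * zy) / sum(zx ** 2).
    return sum(a * b for a, b in zip(zx, zy)) / sum(a * a for a in zx)


# Hypothetical 1-5 scale scores for six respondents (NOT the study data).
awareness = [4.5, 3.0, 4.0, 2.5, 5.0, 3.5]
reliability = [2.0, 3.5, 3.0, 3.5, 1.5, 3.0]

r = pearson_r(awareness, reliability)
beta = standardized_slope(awareness, reliability)
print(f"r = {r:.3f}, standardized slope = {beta:.3f}")
```

The two printed values coincide for the toy data, which is the single-predictor special case; it says nothing about the latent-variable SEM, which requires dedicated software.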

Finding 1: Greater algorithmic awareness and knowledge are associated with critical assessment of social media content.

Algorithmic awareness and knowledge predicted how young users view and assess social media content. Specifically, algorithmic awareness and knowledge were negatively associated with perceived reliability of social media content and perceived exposure to diverse perspectives (see Figure 1). Users with greater algorithmic awareness and knowledge expressed more concerns about social media content being inaccurate and biased, as well as themselves being trapped in algorithmically constructed filter bubbles.

Finding 2: Greater algorithmic awareness and knowledge are associated with weaker intentions to critically engage with social media content.

As shown in Figure 1, higher levels of algorithmic awareness and knowledge were associated with lower intentions among young users to take corrective actions in response to misinformation, such as commenting to warn others about potential biases or risks in media messages, sharing counter-information or alternative viewpoints, highlighting flaws in the content, or reporting misinformation to the platform.

Moreover, increased algorithmic awareness and knowledge were also associated with lower perspective-taking intentions, such as seeking out information that challenges their beliefs and values, having online discussions with people whose ideas and values are different from their own, and seeking out information that makes them think about things from a different perspective. The key reason behind this was how much exposure people think they have to diverse viewpoints on social media. People with higher algorithmic awareness and knowledge often feel that the range of perspectives they see on social media is quite limited. When they believe they aren’t really encountering diverse viewpoints, they feel less motivated to try to seek out alternative perspectives on social media. In other words, when people think their information environment is narrow or biased, they may give up on trying to broaden their information intake.

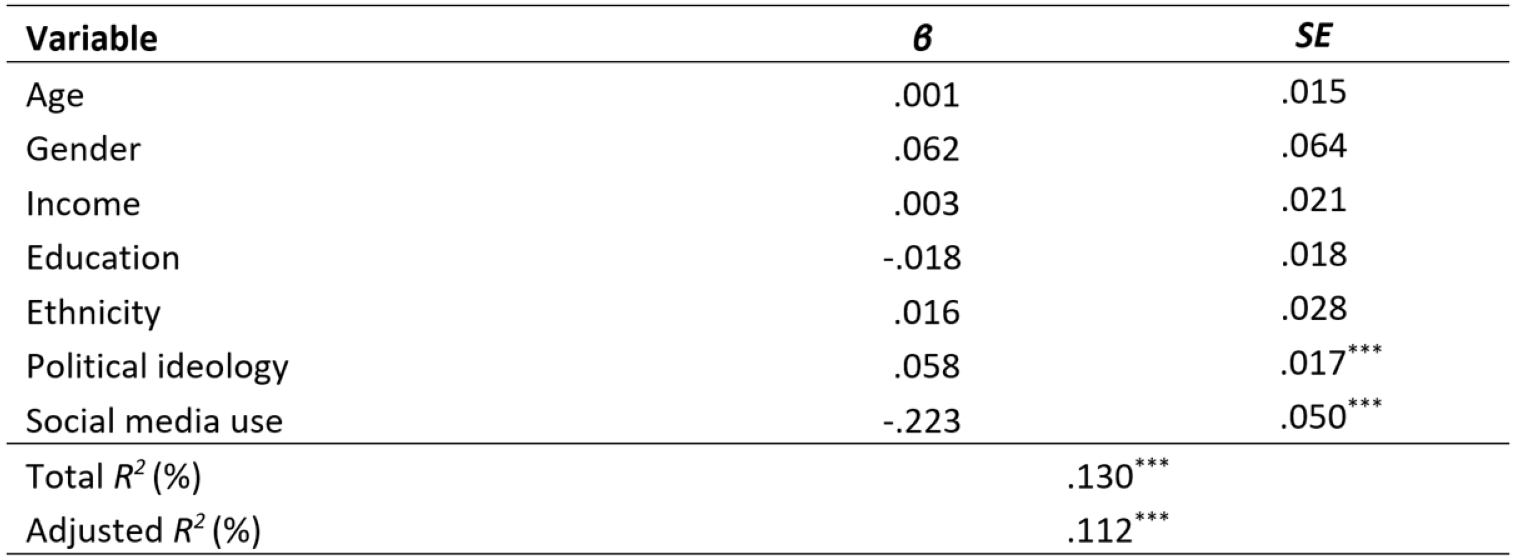

Finding 3: Frequent social media use and conservative political orientation predict lower algorithmic awareness and knowledge.

As illustrated in Table 1, the multiple linear regression analysis revealed that respondents’ social networking service (SNS) use (B = –.228, SE = .050, p < .001) and political views (B = .059, SE = .017, p < .001) were significant predictors of algorithmic awareness and knowledge. Specifically, higher SNS use was associated with lower algorithmic awareness and knowledge, and as political views shifted towards “very conservative,” algorithmic awareness and knowledge tended to be lower. Conversely, demographic variables including age, gender, annual household income, highest level of education completed, ethnicity, and identification as a person with a developmental disability were not statistically significant predictors of algorithmic awareness and knowledge (all ps > .05).
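To make the size of these coefficients concrete, the following sketch converts the reported unstandardized B values into predicted differences in algorithmic awareness and knowledge. It assumes a linear model and the response coding described in the Methods section (political views from 1 = very conservative to 7 = very liberal; SNS use from 1 = never to 5 = several times a day); the helper name is hypothetical, and full-range contrasts are extrapolations shown only for illustration.

```python
# Unstandardized coefficients as reported in the text.
B_SNS_USE = -0.228
B_POLITICAL_VIEWS = 0.059


def predicted_difference(b, low, high):
    """Predicted change in the outcome when one predictor moves from
    `low` to `high`, holding all other predictors constant."""
    return b * (high - low)


# Very conservative (1) versus very liberal (7): assumed 7-point coding.
gap_politics = predicted_difference(B_POLITICAL_VIEWS, 1, 7)

# Lowest (1) versus highest (5) composite SNS use: assumed 5-point coding.
gap_sns = predicted_difference(B_SNS_USE, 1, 5)

print(round(gap_politics, 3))  # 0.354
print(round(gap_sns, 3))       # -0.912
```

Under these assumptions, the full span of the political-views scale corresponds to roughly a third of a scale point on the outcome, whereas the full span of SNS use corresponds to just under one scale point in the opposite direction.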

Finding 4: Young adults report limited formal education about social media algorithms in secondary school.

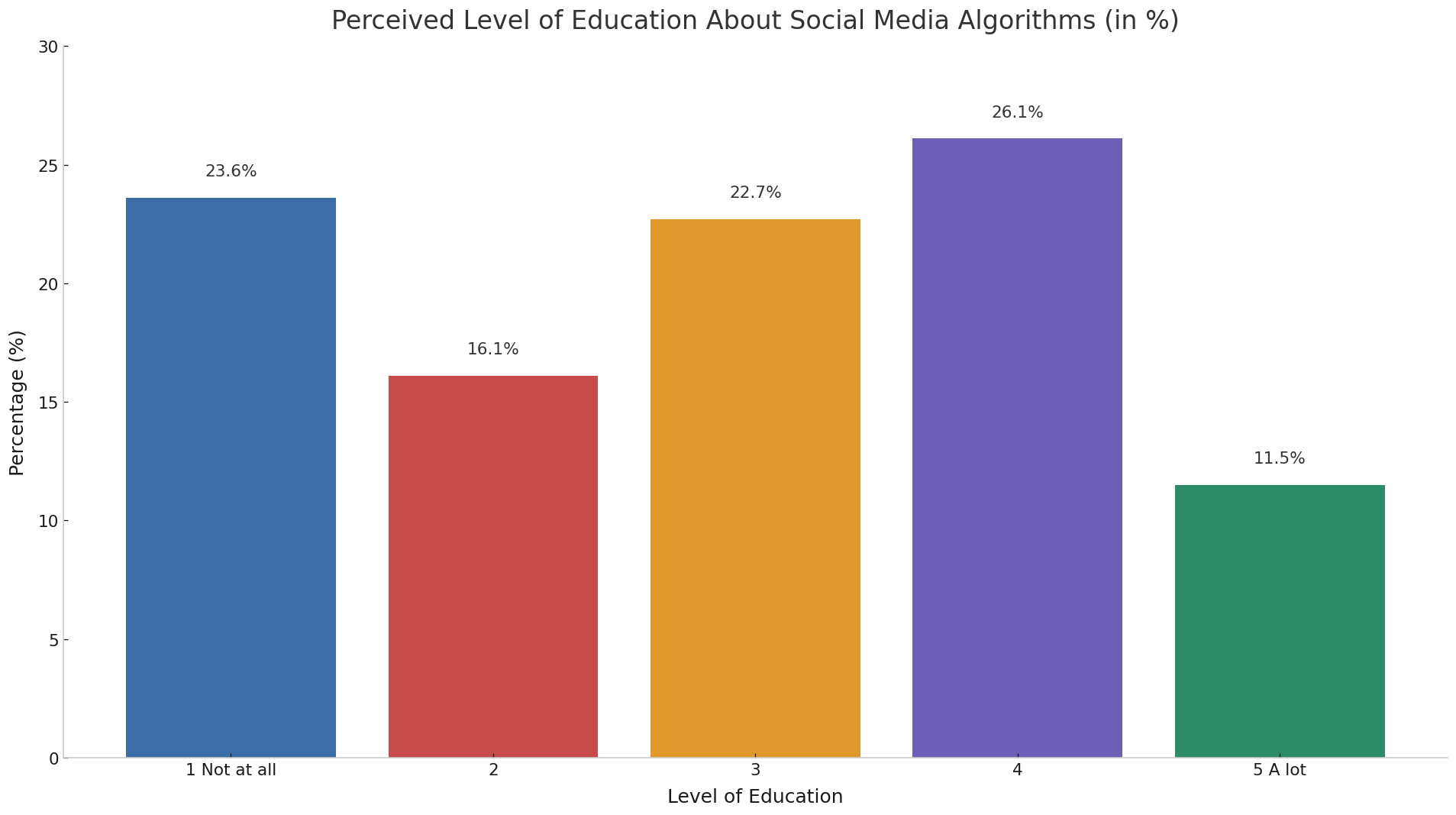

Young social media users reported a generally low level of exposure to formal education about social media algorithms during their middle and high school years (M = 2.86, SD = 1.36). Notably, almost 40% indicated they had received little to no education on the topic (see Figure 2). The lack of formal education on how algorithmic systems influence online information environments raises important questions about educational preparedness in the digital age.

Methods

A national survey of young adults (ages 18–25) in the United States (N = 348, 48.3% female, M age = 22.6) was conducted via the online survey platform Prolific in May 2025. I used a non-probability quota sample, which matches participants on key demographic variables such as age and gender based on simplified U.S. Census data. In terms of educational background, the sample is generally comparable to the national average, though slightly more educated. Nationally, 93.3% of 18–24-year-olds have completed high school or an equivalent, and about 39% are enrolled in college or graduate school (Education Data Initiative, 2025). By contrast, 99.7% of the sample hold at least a high school diploma, and 44.3% have earned a four-year college degree. While this suggests the sample is somewhat more highly educated than the general population, such discrepancies are common in online survey panels (DiSogra & Callegaro, 2010). Additionally, data on respondents’ regions were not collected, though doing so in future studies could help assess representativeness more precisely.

Algorithmic awareness and knowledge was measured with Zarouali et al.’s (2021) Algorithmic Media Content Awareness (AMCA) scale (see Table B1 in Appendix B for question items). This scale measures understanding of social media algorithms across four dimensions: content filtering, automated decisions, human-algorithm interplay, and ethical considerations. I also added a fifth dimension to capture motivations behind algorithms, as called for in Zarouali et al. (2021). The overall scale is reliable (M = 3.99, SD = .65, α = .80), as are the sub-dimensions (α = .79 – .83).
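For readers unfamiliar with the reliability coefficient α (Cronbach's alpha) reported for the multi-item scales, a minimal sketch of the standard formula follows. The responses are hypothetical toy data rather than AMCA items, and the function name is illustrative.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a multi-item scale.

    `responses` is a list of respondents, each a list of k item scores.
    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals)
    """
    k = len(responses[0])
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical answers from five respondents to three 1-5 items.
toy = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 3],
    [4, 4, 5],
]

alpha = cronbach_alpha(toy)
print(f"alpha = {alpha:.2f}")
```

Alpha approaches 1 when items rise and fall together across respondents, which is why values around .80 are conventionally read as acceptable internal consistency.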

Perceived reliability of social media content was measured by asking respondents how confident they are that social media content shows (a) factual, true, or accurate information, and (b) balanced and objective information (modified from Reisdorf & Blank, 2021; 1 = very doubtful, 5 = very confident, M = 2.97, SD = .95, r = .67, p < .001).

Perceived exposure to diverse viewpoints was measured by asking respondents the extent to which they believe the content recommendation system on social media provides them with (a) news or information that helps them discover new perspectives they wouldn’t have found elsewhere, and (b) news or information that presents opinions and worldviews that are different from their own (modified from Matt et al., 2014; 1 = none at all, 5 = a great deal, M = 3.33, SD = .92, r = .54, p < .001).

Intention to take actions to counter misinformation (i.e., corrective action) was measured by asking respondents how likely they are to take corrective actions when they encounter misinformation on social media (see Table B2 in Appendix B for question items adopted from Chung & Wihbey, 2024; 1 = extremely unlikely, 5 = extremely likely, M = 3.34, SD = 1.01, α = .83).

Intentions to expose oneself to different perspectives (i.e., perspective taking) were measured by asking respondents how likely they are to take perspective-taking actions when using social media (see Table 2 in Appendix B for question items modified from Karsaklian, 2020; 1 = extremely unlikely, 5 = extremely likely, M = 3.70, SD = .84, α = .78).

Algorithm education was measured by asking respondents how much education they received about social media algorithms during their middle and high school years (1 = not at all, 5 = a lot, M = 2.86, SD = 1.36).

Sociodemographic variables, including age, gender, education (1 = less than high school, 9 = doctoral degree), ethnicity, monthly household income, and political views (1 = very conservative, 7 = very liberal), were measured. Social media use was measured by asking respondents how often they use the following social media platforms in a typical day: Facebook, Instagram, LinkedIn, Pinterest, Reddit, Signal, Snapchat, TikTok, Twitter, WhatsApp, and YouTube. Scores for each platform were averaged to create a composite social media use score (Oeldorf-Hirsch & Neubaum, 2023; 1 = never, 5 = several times a day, M = 3.34, SD = .71).
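The composite social media use score described above can be sketched as a simple average across the eleven platforms. The function name and the example respondent are hypothetical, and refusing incomplete responses is an assumption made here for simplicity; the text does not say how missing answers were handled.

```python
from statistics import mean

PLATFORMS = [
    "Facebook", "Instagram", "LinkedIn", "Pinterest", "Reddit", "Signal",
    "Snapchat", "TikTok", "Twitter", "WhatsApp", "YouTube",
]


def composite_use(ratings):
    """Average one respondent's frequency ratings (1 = never,
    5 = several times a day) across all listed platforms."""
    missing = [p for p in PLATFORMS if p not in ratings]
    if missing:
        # Assumption: incomplete responses are rejected rather than imputed.
        raise ValueError(f"missing ratings for: {missing}")
    return mean(ratings[p] for p in PLATFORMS)


# Hypothetical respondent: heavy use of three platforms, none of the rest.
respondent = {p: 1 for p in PLATFORMS}
respondent.update({"Instagram": 5, "TikTok": 5, "YouTube": 4})

score = composite_use(respondent)
print(score)
```

One property of this kind of average is worth keeping in mind when reading the results: someone who uses a few platforms intensively still receives a moderate composite, because every unused platform pulls the mean toward 1.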

Bibliography

Auxier, B., & Anderson, M. (2021, April 7). Social media use in 2021. Pew Research Center. https://www.pewresearch.org/internet/2021/04/07/social-media-use-in-2021/

Baruh, L., Secinti, E., & Cemalcilar, Z. (2017). Online privacy concerns and privacy management: A meta-analytical review. Journal of Communication, 67(1), 26–53. https://doi.org/10.1111/jcom.12276

Brodsky, J. E., Zomberg, D., Powers, K. L., & Brooks, P. J. (2020). Assessing and fostering college students’ algorithm awareness across online contexts. Journal of Media Literacy Education, 12(3), 43–57. https://doi.org/10.23860/JMLE-2020-12-3-5

Chung, M., & Wihbey, J. (2024). The algorithmic knowledge gap within and between countries: Implications for combatting misinformation. Harvard Kennedy School (HKS) Misinformation Review, 5(4). https://doi.org/10.37016/mr-2020-155

Cotter, K., & Reisdorf, B. C. (2020). Algorithmic knowledge gaps: A new dimension of (digital) inequality. International Journal of Communication, 14, 745–765. https://ijoc.org/index.php/ijoc/article/view/12450

DiSogra, C., & Callegaro, M. (2010). Computing response rates for probability-based online panels. In Proceedings of the joint statistical meeting, survey research methods section (pp. 5309–5320). American Statistical Association. http://www.asasrms.org/Proceedings/y2009/Files/305748.pdf

Dogruel, L., Masur, P., & Joeckel, S. (2022). Development and validation of an algorithm literacy scale for internet users. Communication Methods and Measures, 16(2), 115–133. https://doi.org/10.1080/19312458.2021.1968361

Education Data Initiative. (2025). Education attainment statistics. https://educationdata.org/education-attainment-statistics

Fisher, M., Goddu, M. K., & Keil, F. C. (2015). Searching for explanations: How the Internet inflates estimates of internal knowledge. Journal of Experimental Psychology: General, 144(3), 674–687. https://doi.org/10.1037/xge0000070

Gottfried, J. (2024, January 31). How Americans use social media. Pew Research Center. https://www.pewresearch.org/internet/2024/01/31/americans-social-media-use/

Hargittai, E., Gruber, J., Djukaric, T., Fuchs, J., & Brombach, L. (2020). Black box measures? How to study people’s algorithm skills. Information, Communication & Society, 23(5), 764–775. https://doi.org/10.1080/1369118X.2020.1713846

Head, A. J., Fister, B., & MacMillan, M. (2020). Information literacy in the age of algorithms: Student experiences with news and information, and the need for change. Project Information Literacy Research Institute. https://www.projectinfolit.org/algo_study.html

Higley, E. (2019). Defining young adulthood. DNP Qualifying Manuscripts. 17. https://repository.usfca.edu/dnp_qualifying/17

Hoffmann, C. P., Lutz, C., & Ranzini, G. (2016). Privacy cynicism: A new approach to the privacy paradox. Cyberpsychology: Journal of Psychosocial Research on Cyberspace, 10(4), Article 7. https://doi.org/10.5817/cp2016-4-7

Kokolakis, S. (2017). Privacy attitudes and privacy behaviour: A review of current research on the privacy paradox phenomenon. Computers & Security, 64, 122–134. https://doi.org/10.1016/j.cose.2015.07.002

Matt, C., Benlian, A., Hess, T., & Weiß, C. (2014). Escaping from the filter bubble? The effects of novelty and serendipity on users’ evaluations of online recommendations. In Proceedings of the Thirty-Fifth International Conference on Information Systems (ICIS), 67. https://aisel.aisnet.org/icis2014/proceedings/HumanBehavior/67

Milli, S., Carroll, M., Wang, Y., Pandey, S., Zhao, S., & Dragan, A. D. (2023). Engagement, user satisfaction, and the amplification of divisive content on social media. arXiv. https://doi.org/10.48550/arXiv.2305.16941

Min, S. J. (2019). From algorithmic disengagement to algorithmic activism: Charting social media users’ responses to news filtering algorithms. Telematics and Informatics, 43, Article 101251. https://doi.org/10.1016/j.tele.2019.101251

Oeldorf-Hirsch, A., & Neubaum, G. (2023). Attitudinal and behavioral correlates of algorithmic awareness among German and US social media users. Journal of Computer-Mediated Communication, 28(5), Article zmad035. https://doi.org/10.1093/jcmc/zmad035

Pariser, E. (2011). The filter bubble: How the new personalized web is changing what we read and how we think. Penguin Books.

Powers, E. (2017). My news feed is filtered? Awareness of news personalization among college students. Digital Journalism, 5(10), 1315–1335. https://doi.org/10.1080/21670811.2017.1286943

Reisdorf, B. C., & Blank, G. (2021). Algorithmic literacy and platform trust. In E. Hargittai (Ed.), Handbook of digital inequality (pp. 341–357). Edward Elgar Publishing.

Rohrer, J. M., Hünermund, P., Arslan, R. C., & Elson, M. (2022). That’s a lot to process! Pitfalls of popular path models. Advances in Methods and Practices in Psychological Science, 5(2). https://doi.org/10.1177/25152459221095827

Rozenblit, L., & Keil, F. C. (2002). The misunderstood limits of folk science: An illusion of explanatory depth. Cognitive Science, 26(5), 521–562. https://doi.org/10.1207/s15516709cog2605_1

Shannon, H., Bush, K., Villeneuve, P. J., Hellemans, K. G., & Guimond, S. (2022). Problematic social media use in adolescents and young adults: Systematic review and meta-analysis. JMIR Mental Health, 9(4), Article e33450. https://doi.org/10.2196/33450

Zarouali, B., Helberger, N., & De Vreese, C. H. (2021). Investigating algorithmic misconceptions in a media context: Source of a new digital divide? Media and Communication, 9(4), 134–144. https://doi.org/10.17645/mac.v9i4.4090

Funding

This study was supported by the IDI (Internet Democracy Initiative) Seed Grant from Northeastern University.

Competing Interests

The author declares no competing interests.

Ethics

The research protocol employed was approved by an institutional review board (IRB#: 21-03-22) at Northeastern University. All survey participants provided informed consent.

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

All materials needed to replicate this study are available via the Harvard Dataverse: https://doi.org/10.7910/DVN/1O7WSW.