Peer Reviewed

The origin of public concerns over AI supercharging misinformation in the 2024 U.S. presidential election

We surveyed 1,000 U.S. adults to understand concerns about the use of artificial intelligence (AI) during the 2024 U.S. presidential election and public perceptions of AI-driven misinformation. Four out of five respondents expressed some level of worry about AI’s role in election misinformation. Our findings suggest that direct interactions with AI tools like ChatGPT and DALL-E were not correlated with these concerns, regardless of education or STEM work experience. Instead, news consumption, particularly through television, appeared more closely linked to heightened concerns. These results point to the potential influence of news media and the importance of exploring AI literacy and balanced reporting.

Research Questions

- To what extent are Americans concerned about the applications of AI for spreading misinformation during the 2024 U.S. presidential election?

- Do knowledge of and direct interactions with generative AI (GAI) tools contribute to the concerns?

- Does consuming AI-related news or information contribute to the concerns?

- What information sources contribute to people’s concerns?

Research Note Summary

- In August 2023, we surveyed U.S. public opinion on new AI technologies and the upcoming U.S. presidential election via Dynata. In asking about AI’s potential role in spreading misinformation, we found that roughly four out of five Americans (83.4%) expressed some level of concern regarding AI being used to spread misinformation in the election at the time. The high prevalence of concern was consistent across various demographic groups.

- News consumption, particularly through television, was linked to the high prevalence of concerns, and this correlation was more notable among older adults. In contrast, knowledge of ChatGPT development and direct experience with GAI tools, such as ChatGPT and DALL-E, appeared to have little association with reduced concerns.

- The high prevalence of concerns about AI being used to spread misinformation in the 2024 U.S. presidential election was likely due to a combination of worries about election integrity in general, fear of the disruptive potential of AI technology, and its sensationalized news coverage. Although it remains uncertain whether these concerns were warranted, our findings highlight the need for AI literacy campaigns that focus on building knowledge rather than fostering fear in the public.

Implications

In January 2024, voters in New Hampshire received phone calls mimicking President Joe Biden’s voice, urging them not to participate in the primary election. These calls were later revealed to be AI-generated, marking an instance of generative AI tools being used for political manipulation and interference in U.S. elections (see https://www.doj.nh.gov/news/2024/20240206-voter-robocall-update.html). OpenAI has subsequently released information about broad attempts by threat actors to use generative models in information operations aimed at manipulating public opinion (OpenAI, 2024).

As the AI revolution unfolds, the capabilities of GAI have become increasingly evident. Tools like ChatGPT and Midjourney can produce human-like text and photorealistic images. Research indicates that average consumers of online content often cannot distinguish between content created by AI and that created by humans (Nightingale & Farid, 2022). Unlike traditional forms of political manipulation, which typically involve human-crafted disinformation or propaganda, AI tools can be used to not only generate vast amounts of content swiftly and inexpensively but also tailor this content to individuals (Matz et al., 2024). Studies have shown that personalized and targeted political messages produced by advanced generative AI tools can be highly persuasive (Bai et al., 2023; Costello et al., 2024; Goldstein et al., 2024; Karinshak et al., 2023), sometimes more so than human-generated messages (Huang & Wang, 2023). However, some research suggests the effectiveness of AI-generated political messages may not always rely on personalization (Hackenburg & Margetts, 2023), and the impact can vary depending on the measures used (Simchon et al., 2024).

While AI’s potential is being harnessed for social good, such as expanding healthcare access, enhancing educational resources, and reducing online incivility (e.g., Argyle et al., 2023; Demszky et al., 2023; Guo et al., 2022; Tomašev et al., 2020), its misuse remains a significant concern. As demonstrated in the robocall incident, AI has already been exploited for political ends (Cresci, 2020; Menczer et al., 2023). The World Economic Forum’s 2024 Global Risks Report suggested that experts across academia, business, government, international organizations, and NGOs believe the biggest short-term risk the world faces is AI-powered misinformation (see https://www.weforum.org/agenda/2024/01/ai-disinformation-global-risks). While some researchers argue that such concern is overblown (Simon et al., 2023), others increasingly agree that GAI tools threaten to empower malign actors (Feuerriegel et al., 2023; Kreps et al., 2022).

The present study builds on recent research (e.g., AP-NORC, 2024) by surveying public perceptions of AI’s role in elections ahead of the 2024 U.S. presidential election. Our data provide a baseline to track how these perceptions evolve through the election. Understanding public sentiment towards AI-driven misinformation, along with the available evidence, aids policymakers in crafting effective, targeted regulations. Beyond shaping regulatory policies, public opinion also impacts the ethical development of AI technologies.

This research also marks one of the first endeavors to explore the origins of public concerns about AI aiding in misinformation dissemination during elections. We specifically investigate whether these concerns are related to direct interactions with AI tools, news consumption, or both. The answer to this line of inquiry can inform the appropriate response strategies. For instance, if fear predominantly circulates via the media, the focus may shift towards enhancing media literacy and promoting accurate, balanced reporting.

In our survey of 1,000 participants from the United States, roughly four out of five (83.4%) expressed some concern regarding the spread of AI-generated misinformation in the upcoming presidential election. News consumption, particularly through television, appears to be associated with the high prevalence of concerns. This relationship between concerns and TV news consumption is more notable among older adults (65 or older). Knowledge of ChatGPT development and direct experience with generative AI tools, such as ChatGPT and DALL-E, appear to do little to alleviate these concerns.

The high prevalence of concerns about AI-facilitated spread of misinformation in the upcoming elections may be due to a combination of worries about election integrity in general, fear of the disruptive potential of AI technology, and its sensationalized news coverage. While further research is needed to determine whether these concerns are proportionate to actual risks, our findings suggest that effective AI literacy campaigns require broad societal participation and support. These campaigns should incorporate up-to-date materials about GAI and its potential impact on society and focus on building knowledge rather than fostering fear. Helping the public stay informed about AI’s rapidly evolving capabilities is crucial, as our data show that even those with technical STEM backgrounds—who may not be keeping pace with recent AI developments—share these widespread concerns.

Although we hypothesized that higher levels of experience with GAI tools might be linked to lower levels of concerns and fears, our results did not support this expectation. Instead, we observed that people who reported more AI experience tended to be slightly more concerned, though this trend wasn’t statistically strong enough to be conclusive. Nevertheless, we argue that encouraging the public to engage with these new tools, along with AI literacy intervention strategies, would help demystify the new generative AI technologies and help build a more accurate understanding of them. Future studies should explore how people interpret and engage with GAI tools and why their experiences may or may not always translate into reduced concerns about AI-driven misinformation.

The significant association between frequent TV news consumption and increased fear of AI-powered misinformation aligns with the long-standing critiques of TV news. Historically, television has been criticized for over-representing violence (Romer et al., 2003; Signorielli et al., 2019), which is associated with what scholars call the “mean world syndrome”—higher perceptions of real-world dangers among heavy viewers of television (Gerbner et al., 2002). A similar pattern appears in our findings regarding perceptions of AI risks. However, it should be noted that our study did not directly measure or establish evidence for negativity bias in TV news coverage of AI, and the observed correlation between TV news consumption and AI fears may be influenced by other confounding factors.

One of these potential confounding factors noted in our study is age: Among TV news consumers aged 65 and older, concerns about AI misinformation were more pronounced. This age-related pattern suggests broader generational differences in technology adoption and risk perception, rather than television’s direct influence. While previous research has found varying coverage of AI risks across media platforms (Chuan et al., 2019; Ouchchy et al., 2020), negativity bias in AI coverage likely exists across multiple media formats, including newspapers, web blogs, and social media. Further studies should systematically explore how AI is framed across different media platforms and how these frames shape concerns among various age groups differently. Research should also extend beyond the United States to include cross-country comparisons, recognizing that media systems and public perceptions of AI may vary significantly across cultural contexts. Moreover, it is important to acknowledge the limitations of self-reported media use data. Respondents may overestimate or underestimate their actual consumption, and recall bias may influence their responses (Parry et al., 2021). Future research could consider digital tracing data to strengthen the validity of these findings.

Additionally, our findings are limited by the cross-sectional nature of the survey. Public opinion on AI and misinformation evolves rapidly, and our results may not hold over time, particularly in response to major events, such as the recent AI-generated images of Taylor Swift shared by Donald Trump. Future research should track these fluctuations and how events shape public concerns. We also only focused on misinformation to reflect public concerns about AI-generated content misleading people, regardless of intent. Given recent trends in AI-generated disinformation and propaganda (e.g., Walker et al., 2024), future studies should explore how intentional manipulation leveraging AI impacts perceptions differently from unintentional misinformation.

Nevertheless, given the results of the current study, we recommend journalists, especially those in the science and technology sectors, consider balanced reporting. Our findings indicate a correlation between TV news consumption and public opinion about AI, highlighting the potential importance of ensuring a fair depiction of both potential benefits and risks associated with new AI technologies. Future research should investigate whether balanced reporting could help foster more informed public discourse and thoughtful policy development regarding AI.

Findings

Finding 1: A high prevalence of public concerns over AI supercharging misinformation during the 2024 U.S. presidential election.

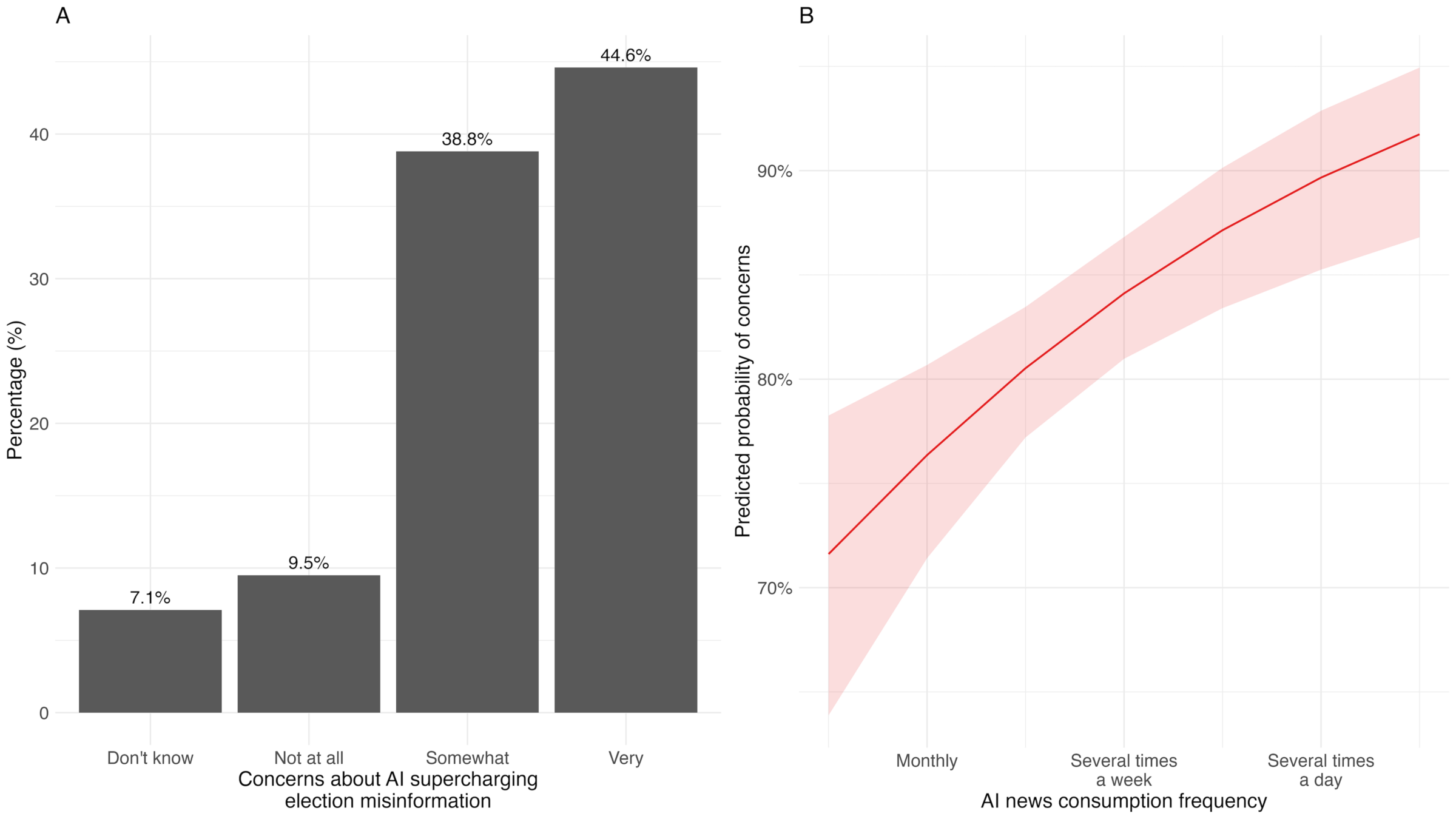

In a survey of 1,000 participants, 38.8% reported being somewhat concerned, and 44.6% expressed high concern about AI’s role in the spread of misinformation during the 2024 U.S. presidential election. In contrast, 9.5% had no concerns, and 7.1% were unaware of the issue. Figure 1A illustrates the prevalence of such concerns.

Results of the statistical model show that older participants (odds ratio [OR] = 1.11, 95% CI [1.00, 1.24]), those with higher education levels (OR = 1.09, 95% CI [0.95, 1.26]), and individuals working in STEM-related fields (9.7% of our sample; OR = 1.63, 95% CI [0.87, 3.35]) were all more likely to express concerns about AI disseminating election misinformation in 2024. However, these differences were not statistically significant.
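To make the link between the reported confidence intervals and the "not statistically significant" reading concrete, the following is a minimal sketch that backs out an approximate standard error, z statistic, and two-sided p-value from each odds ratio and its 95% CI. It assumes the intervals are symmetric Wald intervals on the log-odds scale, which the text does not state (the STEM interval in particular looks asymmetric on that scale), so the results are rough checks rather than the model's actual test statistics. The function name `wald_from_ci` is hypothetical.

```python
import math

def wald_from_ci(odds_ratio, ci_low, ci_high, z_crit=1.96):
    """Approximate SE, z, and two-sided p from an OR and its 95% CI.

    Assumption (not stated in the source): the CI is a Wald interval,
    i.e. exp(b - 1.96 * SE) to exp(b + 1.96 * SE).
    """
    log_or = math.log(odds_ratio)
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z_crit)
    z = log_or / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return se, z, p

# Odds ratios and intervals as reported for the demographic predictors.
reported = {
    "Age": (1.11, 1.00, 1.24),
    "Education": (1.09, 0.95, 1.26),
    "STEM field": (1.63, 0.87, 3.35),
}

for name, (or_value, low, high) in reported.items():
    se, z, p = wald_from_ci(or_value, low, high)
    print(f"{name}: SE={se:.3f}, z={z:.2f}, p={p:.3f}")
```

Under this approximation, an interval whose lower bound sits at or below 1.00 yields a p-value above .05, which is consistent with the statement that none of the demographic differences reached significance.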

Finding 2: AI news consumption, rather than direct interactions with GAI, contributes to rising concerns about the AI-facilitated spread of misinformation.

We analyzed two sets of predictors concerning worries about AI-facilitated spread of election misinformation: direct interactions with GAI tools like ChatGPT and DALL-E and learning about AI through the news. Overall, we found the latter to be a more reliable predictor.

GAI knowledge and direct interaction

In our sample, 21.11% of individuals correctly identified the most recent version of ChatGPT at the time (version 4), and 34.48% reported having used at least one popular GAI tool. Individuals who could identify the latest version of ChatGPT, compared to those who couldn’t, were less likely to express concern about the exploitation of AI for spreading misinformation in the 2024 U.S. presidential election (OR = 0.66, 95% CI [0.40, 1.05]). However, this difference was not statistically significant. Similarly, respondents who had used GAI tools did not significantly differ in their concern levels from those who hadn’t (OR = 1.04, 95% CI [0.86, 1.28]). These results indicate that accurate knowledge of ChatGPT and direct experience using GAI tools may not be meaningfully associated with concerns about AI-facilitated spread of misinformation.

AI news consumption

The majority of respondents (76.8%) reported consuming AI-related news at least weekly, with 29.0% reporting several times per week. Incorporating self-reported consumption of AI news into the regression model, we found that individuals more frequently consuming AI-related news were significantly more likely to express concerns about AI-facilitated spread of misinformation (OR = 1.28, 95% CI [1.13, 1.46]). Figure 1B illustrates the predicted effects of AI news consumption on concerns about AI-facilitated spread of election misinformation.

| Predictors | Demographics (log odds) | Direct interaction (log odds) | AI news consumption (log odds) | Information sources (log odds) |
| --- | --- | --- | --- | --- |
| Intercept | .93** | 1.22*** | .34 | .94* |
| Education | .09 | .07 | .06 | .04 |
| Age | .11 | .13* | .14* | .10 |
| STEM major | .49 | .39 | .26 | .26 |
| Know ChatGPT | | -.42 | -.33 | -.33 |
| Use GAI | | .04 | -.08 | .02 |
| AI News Freq | | | .25*** | |
| TV | | | | .38* |
| Print | | | | .36 |
| Social Media | | | | .13 |
| Streaming | | | | -.03 |
| Podcast | | | | .25 |
| Word-of-mouth | | | | .14 |
| Other | | | | .16 |
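The table above reports log odds, while the running text reports odds ratios. The two are related by exponentiation, OR = exp(log odds). The short sketch below converts a few of the tabulated coefficients; the helper name `to_odds_ratio` is hypothetical, and because the table values are rounded to two decimals, the converted figures can differ from the odds ratios in the text in the last digit.

```python
import math

def to_odds_ratio(log_odds):
    """Convert a logistic regression coefficient (log odds) to an odds ratio."""
    return math.exp(log_odds)

# Coefficients copied from the table (rounded to two decimals there).
coefficients = {
    "Know ChatGPT (direct interaction model)": -0.42,
    "Use GAI (direct interaction model)": 0.04,
    "AI News Freq (AI news consumption model)": 0.25,
    "TV (information sources model)": 0.38,
}

for predictor, b in coefficients.items():
    print(f"{predictor}: log odds {b:+.2f} -> odds ratio {to_odds_ratio(b):.2f}")
```

A positive coefficient corresponds to an odds ratio above 1 (higher odds of expressing concern), and a negative coefficient to an odds ratio below 1.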

We substituted the general AI news consumption frequency measure with seven measures regarding specific sources. As shown in Table 1 under information sources, individuals who relied on television for AI-related news (51.2% of participants in our sample) were significantly more likely to express concerns about AI spreading misinformation in elections compared to those who did not rely on TV (OR = 1.47, 95% CI [1.03, 2.11]). None of the other information sources predicted a significantly higher likelihood of concern. TV viewers of AI-related news tended to be older (median age group: 45-54 years) than non-viewers (median age group: 35-44 years; W = 90038, z = -7.64, p < .001). Therefore, we examined how TV consumption related to AI concerns separately for each age group. The results revealed that the disparity of concerns was particularly pronounced among individuals aged 65 and older (b = 1.38, p = .015; see Table A1 for detailed regression model). Overall, the results highlighted the distinct association between greater TV news consumption and heightened concerns about AI misinformation, though this relationship may vary across different age groups.

Methods

In August 2023, we contracted the research firm Dynata to administer a survey to investigate U.S. public opinion of AI. Using non-random quota sampling, Dynata recruited a sample of 1,001 U.S. citizens aged 18 years and older to represent the demographic makeup of the national adult population. One respondent declined to report their age group and was excluded from the analysis, resulting in a final sample of N = 1,000. Compared to the 2020 U.S. census, the final sample is representative in terms of region, STEM work experience, gender, and race/ethnicity, with variations within plus or minus 5%. The final sample slightly overrepresents individuals with some college or associate degrees and those with a household income above $150,000 per year and underrepresents those who are older than 65. It also underrepresents individuals with a college degree or higher, as well as individuals with a household income below $50,000 per year (see the comparison between our sample and 2020 U.S. census data in Table A2 in the Appendix). The survey, administered by Dynata between August 15 and August 29, was conducted as part of a larger study looking at public opinion across three countries: the United States, the United Kingdom, and Canada. In this article, we present the results of only the United States sample and their concerns regarding the relationship between AI and misinformation in the 2024 U.S. presidential election.

In this survey, respondents were asked about their news consumption habits regarding information about AI, their knowledge and usage of AI tools such as ChatGPT and DALL-E, their perceptions of how AI may affect society (such as through employment dislocation), their views of the acceptability of certain AI applications (such as using AI for filing taxes or diagnosing health problems), their views of AI regulation and the future development of AI, and their views of AI as a tool for misinformation and other forms of electoral or democratic interference. The survey questions can be found in Appendix B. For most questions, participants were asked to respond using a four- or five-point Likert-type scale with a neutral option.

Our dependent variable question was, “How concerned are you that AI could be used to spread misinformation during the 2024 U.S. presidential election?” The answers included the following options: not concerned at all, somewhat concerned, very concerned, or don’t know/not sure. For the independent variables, we used measures of respondents’ demographics, their knowledge and interaction with GAI tools (such as ChatGPT), and their general news consumption habits, including different information sources. For demographics, we used age (measured ordinally as decadal groups), education (measured ordinally in six groups from 1 = some high school to 6 = graduate degree), and whether the respondent has a STEM background (1 = Yes; 0 = No). For knowledge and interaction with generative AI tools, we used two measures: a knowledge question where respondents were asked to name which ChatGPT model was the most up to date at the time (i.e., ChatGPT-4) and whether or not the respondent had used ChatGPT, Bard, DALL-E, or any other GAI program (measured binarily). For AI news consumption, we used the frequency of how often a respondent watches, reads, hears, or sees news about AI (measured ordinally in seven groups from 1 = never to 7 = several times an hour) and whether or not they get news and information about AI from specific sources (measured binarily across seven categories: television, print, social media, streaming, podcasts, word-of-mouth, or other).

We were interested in the prevalence, rather than the degree, of concerns about AI empowering misinformation. Therefore, in this analysis, we collapsed responses into two categories: 1) not concerned (combining “not concerned” and “don’t know/not sure” options) and 2) concerned (combining “somewhat concerned” and “very concerned” options). Research on survey response behavior suggests that “don’t know” responses often indicate lower engagement or a lack of clear opinion (e.g., Krosnick, 1991), so we collapsed the “not concerned” and “don’t know/not sure” categories, as both reflect an absence of expressed concern. We used stepwise logistic regression models, which are well-suited for predicting the likelihood of a binary outcome (in this case, whether someone is concerned about AI spreading misinformation or not), to draw inferences on how four sets of factors contribute to the concerns about AI-facilitated spread of misinformation during the 2024 U.S. presidential election. These factors included demographic factors, knowledge about and direct interaction with GAI tools, frequency of overall AI news consumption, and AI news consumption through specific media channels.
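The collapsing step described above can be sketched as follows. This is an illustration only: the response counts are hypothetical, reconstructed from the percentages reported in Finding 1 (38.8%, 44.6%, 9.5%, and 7.1% of N = 1,000), and the sketch assumes that the 7.1% described there as unaware of the issue corresponds to the "don't know/not sure" option. The names `CONCERNED` and `collapse` are not from the study materials.

```python
# Hypothetical counts reconstructed from the reported percentages of N = 1,000.
responses = (
    ["somewhat concerned"] * 388
    + ["very concerned"] * 446
    + ["not concerned at all"] * 95
    + ["don't know/not sure"] * 71
)

# Response options treated as expressing concern.
CONCERNED = {"somewhat concerned", "very concerned"}

def collapse(response):
    """Map a four-option response to 1 (concerned) or 0 (not concerned)."""
    return 1 if response in CONCERNED else 0

binary = [collapse(r) for r in responses]
prevalence = sum(binary) / len(binary)
print(f"Concerned: {sum(binary)} of {len(binary)} ({prevalence:.1%})")
```

The resulting binary variable is the kind of outcome a logistic regression takes as its dependent variable, with "don't know/not sure" coded together with "not concerned at all" as 0.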

Bibliography

Argyle, L. P., Bail, C. A., Busby, E. C., Gubler, J. R., Howe, T., Rytting, C., Sorensen, T., & Wingate, D. (2023). Leveraging AI for democratic discourse: Chat interventions can improve online political conversations at scale. Proceedings of the National Academy of Sciences, 120(41), e2311627120. https://doi.org/10.1073/pnas.2311627120

The Associated Press-NORC Center for Public Affairs Research (AP-NORC). (2024). There is bipartisan concern about the use of AI in the 2024 elections. https://apnorc.org/projects/there-is-bipartisan-concern-about-the-use-of-ai-in-the-2024-elections/

Bai, H., Voelkel, J., Eichstaedt, J., & Willer, R. (2023). Artificial intelligence can persuade humans on political issues. Research Square. https://doi.org/10.21203/rs.3.rs-3238396/v1

Chuan, C. H., Tsai, W. H. S., & Cho, S. Y. (2019). Framing artificial intelligence in American newspapers. AIES’19: Proceedings of the 2019 AAAI/ACM conference on AI, ethics, and society (pp. 339–344). Association for Computing Machinery. https://doi.org/10.1145/3306618.3314285

Costello, T. H., Pennycook, G., & Rand, D. G. (2024). Durably reducing conspiracy beliefs through dialogues with AI. Science, 385(6714), eadq1814. https://doi.org/10.1126/science.adq1814

Cresci, S. (2020). A decade of social bot detection. Communications of the ACM, 63(10), 72–83. https://doi.org/10.1145/3409116

Demszky, D., Yang, D., Yeager, D. S., Bryan, C. J., Clapper, M., Chandhok, S., Eichstaedt, J. C., Hecht, C., Jamieson, J., Johnson, M., Jones, M., Krettek-Cobb, D., Lai, L., JonesMitchell, N., Ong, D. C., Dweck, C. S., Gross, J. J., & Pennebaker, J. W. (2023). Using large language models in psychology. Nature Reviews Psychology, 2(11), 688–701. https://doi.org/10.1038/s44159-023-00241-5

Feuerriegel, S., DiResta, R., Goldstein, J. A., Kumar, S., Lorenz-Spreen, P., Tomz, M., & Pröllochs, N. (2023). Research can help to tackle AI-generated disinformation. Nature Human Behaviour, 7(11), 1818–1821. https://doi.org/10.1038/s41562-023-01726-2

Gerbner, G., Gross, L., Morgan, M., Signorielli, N., & Shanahan, J. (2002). Growing up with television: Cultivation processes. In J. Bryant & D. Zillmann (Eds.), Media effects: Advances in theory and research (pp. 53–78). Routledge.

Goldstein, J. A., Chao, J., Grossman, S., Stamos, A., & Tomz, M. (2024). How persuasive is AI-generated propaganda? PNAS Nexus, 3(2), pgae034. https://doi.org/10.1093/pnasnexus/pgae034

Guo, Z., Schlichtkrull, M., & Vlachos, A. (2022). A survey on automated fact-checking. Transactions of the Association for Computational Linguistics, 10, 178–206. https://doi.org/10.1162/tacl_a_00454

Huang, G., & Wang, S. (2023). Is artificial intelligence more persuasive than humans? A meta-analysis. Journal of Communication, 73(6), 552–562. https://doi.org/10.1093/joc/jqad024

Hackenburg, K., & Margetts, H. (2023). Evaluating the persuasive influence of political microtargeting with large language models. Proceedings of the National Academy of Sciences, 121(24), e2403116121. https://doi.org/10.1073/pnas.2403116121

Karinshak, E., Liu, S. X., Park, J. S., & Hancock, J. T. (2023). Working with AI to persuade: Examining a large language model’s ability to generate pro-vaccination messages. Proceedings of the ACM on Human-Computer Interaction, 7(CSCW1), 1–29. https://doi.org/10.1145/3579592

Kreps, S., McCain, R. M., & Brundage, M. (2022). All the news that’s fit to fabricate: AI-generated text as a tool of media misinformation. Journal of Experimental Political Science, 9(1), 104–117. https://doi.org/10.1017/XPS.2020.37

Krosnick, J. A. (1991). Response strategies for coping with the cognitive demands of attitude measures in surveys. Applied Cognitive Psychology, 5(3), 213–236. https://doi.org/10.1002/acp.2350050305

Matz, S. C., Teeny, J. D., Vaid, S. S., Peters, H., Harari, G. M., & Cerf, M. (2024). The potential of generative AI for personalized persuasion at scale. Scientific Reports, 14, 4692. https://doi.org/10.1038/s41598-024-53755-0

Menczer, F., Crandall, D., Ahn, Y. Y., & Kapadia, A. (2023). Addressing the harms of AI-generated inauthentic content. Nature Machine Intelligence, 5(7), 679–680. https://doi.org/10.1038/s42256-023-00690-w

Nightingale, S. J., & Farid, H. (2022). AI-synthesized faces are indistinguishable from real faces and more trustworthy. Proceedings of the National Academy of Sciences, 119(8), e2120481119. https://doi.org/10.1073/pnas.2120481119

Ouchchy, L., Coin, A., & Dubljević, V. (2020). AI in the headlines: The portrayal of the ethical issues of artificial intelligence in the media. AI & Society, 35, 927–936. https://doi.org/10.1007/s00146-020-00965-5

OpenAI. (2024, May). Disrupting deceptive uses of AI by covert influence operations. https://openai.com/index/disrupting-deceptive-uses-of-AI-by-covert-influence-operations

Parry, D. A., Davidson, B. I., Sewall, C. J. R., Fisher, J. T., Mieczkowski, H., & Quintana, D. S. (2021). A systematic review and meta-analysis of discrepancies between logged and self-reported digital media use. Nature Human Behaviour, 5(11), 1535–1547. https://doi.org/10.1038/s41562-021-01117-5

Romer, D., Jamieson, K. H., & Aday, S. (2003). Television news and the cultivation of fear of crime. Journal of Communication, 53(1), 88–104. https://doi.org/10.1111/j.1460-2466.2003.tb03007.x

Simchon, A., Edwards, M., & Lewandowsky, S. (2024). The persuasive effects of political microtargeting in the age of generative artificial intelligence. PNAS Nexus, 3(2), pgae035. https://doi.org/10.1093/pnasnexus/pgae035

Simon, F. M., Altay, S., & Mercier, H. (2023). Misinformation reloaded? Fears about the impact of generative AI on misinformation are overblown. Harvard Kennedy School (HKS) Misinformation Review, 4(5). https://doi.org/10.37016/mr-2020-127

Signorielli, N., Morgan, M., & Shanahan, J. (2019). The violence profile: Five decades of cultural indicators research. Mass Communication & Society, 22(1), 1–28. https://doi.org/10.1080/15205436.2018.1475011

Tomašev, N., Cornebise, J., Hutter, F., Mohamed, S., Picciariello, A., Connelly, B., Belgrave, D. C. M., Ezer, D., Cachat van der Haert, F., Mugisha, F., Abila, G., Arai, H., Almiraat, H., Proskurnia, J., Snyder, K., Otake-Matsuura, M., Othman, M., Glasmachers, T., de Wever, W., … Clopath, C. (2020). AI for social good: Unlocking the opportunity for positive impact. Nature Communications, 11, 2468. https://doi.org/10.1038/s41467-020-15871-z

Walker, C. P., Schiff, D. S., & Schiff, K. J. (2024). Merging AI incidents research with political misinformation research: Introducing the Political Deepfakes Incidents Database. Proceedings of the AAAI Conference on Artificial Intelligence, 38(21), 23053–23058. https://doi.org/10.1609/aaai.v38i21.30349

Funding

Northeastern University’s internal resources funded the fielding of the survey for this research report.

Competing Interests

The authors declare no competing interests.

Ethics

The survey was approved by the Northeastern University institutional review board under the protocol IRB# 23-06-08. Participants provided informed consent. Ethnicity and gender categories were defined by the institutional review board. Gender was defined by multiple categories, including transgender options, and ethnicity was defined as non-mutually exclusive ethnic options where respondents could select multiple ethnicities. While gender and ethnicity were not reported in this analysis, they were important in other studies related to the survey regarding AI literacy.

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

All materials needed to replicate this study are available via the Harvard Dataverse: https://doi.org/10.7910/DVN/UDGWGO

Acknowledgments

We thank Joanna Weiss and Michael Workman of Northeastern University for their contributions in developing and fielding the survey.