Peer Reviewed

Ridiculing the “tinfoil hats:” Citizen responses to COVID-19 misinformation in the Danish facemask debate on Twitter

We study how citizens engage with misinformation on Twitter in Denmark during the COVID-19 pandemic. We find that misinformation regarding facemasks is not corrected through counter-arguments or fact-checking. Instead, many tweets rejecting misinformation use humor to mock misinformation spreaders, whom they pejoratively label wearers of “tinfoil hats.” Tweets rejecting misinformation project a superior social position and leave the concerns of misinformation spreaders unaddressed. Our study highlights the role of status in people’s engagement with online misinformation.

Research Questions

- How much COVID-19 misinformation related to the facemask debate can we identify on Danish social media?

- What types of arguments are used to spread and correct misinformation on Twitter? How do these arguments differ in how they engage with the topic?

- What role do humor and irony play in people’s engagement with misinformation?

Essay Summary

- We studied the spread and rejection of misinformation on Twitter during the first wave of the coronavirus pandemic in Denmark. As an example, we focused on the local facemask debate.

- Using a mixed-methods design, we collected all Danish-language tweets mentioning “facemask” and COVID-related terms posted from February to November 2020. We manually coded each unique tweet as spreading, rejecting, or unrelated to misinformation, and separately for the use of humor. Finally, tweets were qualitatively analyzed in terms of themes, style, rhetoric, and addressee.

- Misinformation accounts for a small portion of the overall facemask-related tweets, and the numbers of misinformation spreaders and rejectors are almost equal. In the first phase of the pandemic, tweets rejecting misinformation outnumbered tweets spreading it; over time, however, tweets spreading misinformation came to outnumber those rejecting it.

- While other studies show people spread misinformation to appeal to their own social circles, we found that status concerns also characterize tweets rejecting misinformation. In most cases, tweets rejecting misinformation do not engage with substantive claims, but, instead, stigmatize and ridicule misinformation spreaders.

- Further studies are needed to assess the generalizability of these patterns, but our analysis suggests that future initiatives to limit online misinformation should consider status-seeking dynamics among both misinformation spreaders and rejectors.

Implications

At the start of the coronavirus pandemic, the World Health Organization warned that an infodemic jeopardized pandemic-quelling efforts and encouraged social media platforms to act against the spread of online misinformation (WHO, 2020). Here, we understand misinformation as verifiably false claims presented as factually true, regardless of whether the disseminators know them to be false (Allcott & Gentzkow, 2017). By focusing on misinformation rather than disinformation (false information created for the strategic purpose of deceit; Allcott & Gentzkow, 2017), we explored users’ interactions with false claims regardless of the motivation behind their spread. We simply investigated whether the tweet text supported or countered a false claim. These false claims were drawn from TjekDet, the largest independent Danish fact-checking institution. We focused on the facemask debate, the misinformation theme identified via TjekDet that attracted the most tweets. Additionally, we expected this debate to be fertile ground for misinformation because the Danish authorities changed their stance on facemasks partway through the pandemic (Krakov, 2020; Statsministeriet, 2020b) and because an inconclusive Danish mask study was often misinterpreted (see Abbasi, 2020), leaving citizens to navigate changing and conflicting statements about the efficacy of facemasks.

Most studies of misinformation during the pandemic focus on the disseminators (Caldarelli et al., 2021; Cinelli et al., 2020; Gallotti et al., 2020), while few scholars have explored how citizens combat false information online (Abidin, 2020; Micallef et al., 2020; Pulido et al., 2020). We investigated all tweets engaging with misinformation and found that misinformation-related discussion accounted for just 5.04% of the Danish Twitter debate on facemasks. Moreover, stigmatizing tweets, which either ridicule or criticize opponents, were created not only by people spreading false claims about COVID-19 but also by those rejecting them. Our findings stem from a limited Twitter dataset with a narrow focus within the COVID-19 debate in a high-trust environment (see Methods section). They may therefore not apply directly to countries with lower trust, other misinformation topics, or different social media platforms. However, they do suggest an interesting pattern that may have wider implications for our understanding of digital misinformation and the role citizens can be expected to play in quelling it.

Other studies have shown that misinformation is spread by people who oppose the established “system” and seek to defend their social status (Petersen et al., 2020). We found a similar dynamic among tweets rejecting misinformation: rather than correcting false information, they fortify the poster’s status and devalue those who believe false stories. When tweets reject misinformation, they largely appeal to those already critical of misinformation rather than trying to convert those they label as wearers of “tinfoil hats” or similar derogatory terms [Note 1: “Tinfoil hat” is a derogatory term used to refer to people “falling for” conspiracy theories and misinformation.]. While misinformation spreaders have previously been painted as the bullies of the internet (Petersen et al., 2020), our study suggests that this also holds true for rejectors. Tweets spreading misinformation make consistent arguments (on their own skewed terms), but only 28% of those rejecting misinformation explicitly address false or misleading claims. Most tweets rejecting misinformation mock, ridicule, or stigmatize those spreading misinformation, often through irony or sarcasm. Using ironic or humorous comments to correct misinformation can be counterproductive: Abidin (2020), for example, shows that Instagram memes originally shared as satire by young people evolved into misinformation among the elderly on WhatsApp. Our results suggest that rejection is largely aimed at the rejector’s own audience, not at the misinformation spreaders. Future initiatives and research on misinformation would benefit from investigating status seeking, that is, attempts to increase the respect one enjoys in the eyes of others (Magee & Galinsky, 2008), as a driver of people’s efforts to fight online misinformation.

In this study, we identify one key argumentative strategy in tweets rejecting misinformation: stigmatization. Existing literature is inconclusive about the effects of misinformation correction. Some scholars find that only corrections from public institutions or organizations are effective (Van der Meer & Jin, 2020; Vraga & Bode, 2017), highlighting the importance of the corrector’s credibility. Other studies argue that combating online hostility requires mobilizing a sense of connection and we-feeling (Berinsky, 2017; Hannak et al., 2014; Malhotra, 2020; Margolin et al., 2018; Munger, 2017). This suggests that misinformation correction could only work if those spreading misinformation perceive the correction as a peer dialogue. In contrast, other scholars argue that corrections of citizens’ false claims by strangers can have a positive effect. This matters because, as Micallef et al. (2020) show, 96% of all tweets combating misinformation (including the most retweeted ones) are posted by concerned citizens rather than professional fact-checkers. Focusing on the corrector’s arguments and group membership, studies find that effective correction requires a proper explanation of why a claim is false (Nyhan & Reifler, 2015) and that logic-based arguments correct misinformation better than humor-based ones (Vraga et al., 2019). By contrast, Karlsen et al. (2017) show that neither confirmation nor contradiction appears to change people’s attitudes effectively; online debates instead tend to reinforce preexisting beliefs, although presenting people with two-sided arguments can, in some instances, alter their attitudes.

While counter-arguments are unlikely to be successful in changing the fundamental attitude of misinformation spreaders, perhaps the criticism and ridiculing of misinformation signals to passive observers that spreading misinformation is unacceptable (for the importance of passive audiences, see Marett & Joshi, 2009; Schmidt et al., 2021). However, stigmatization could also harden the position of those being stigmatized (Goffman, 1963). If this is the case, the challenge going forward is to explore how citizens can correct misinformation without stigmatizing or ridiculing opponents. Our findings suggest that there is a need for more research focused on understanding which types of arguments, tactics, and issue positions are most likely to create backfire effects when engaging with misinformation (see Bail et al., 2018).

Findings

Finding 1: Misinformation accounts for a small portion of the overall facemask-related tweets in Denmark during the pandemic; we observed slightly more tweets spreading misinformation than rejecting it.

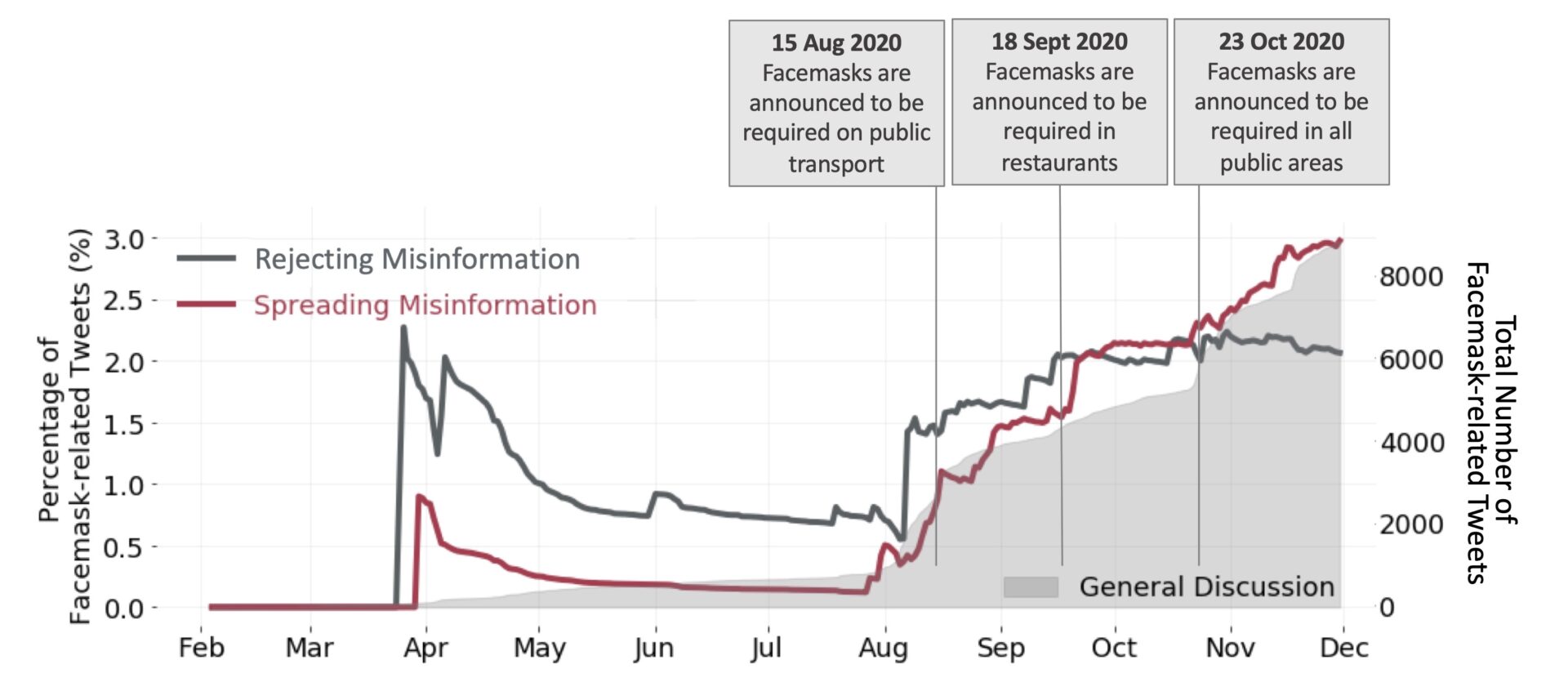

Out of 9,345 sampled Danish tweets about COVID-19 and facemasks, only 5.04% (471 tweets, including retweets) engage with misinformation. Among these misinformation-linked tweets, slightly more spread misinformation than reject it (see Figure 1). Because we live-collected the tweets (to collect all misinformation tweets before their potential deletion), we did not capture the full reach of each tweet (i.e., the population that actually saw it). The raw number of tweets (including retweets) shows the relative proportions of tweets spreading and rejecting misinformation and was our first step in mapping the arguments used.

When mapping the arguments over time, we saw an initial jump in facemask-related misinformation tweets in late March 2020, with the onset of the pandemic, when the overall number of facemask-related tweets was still low. We saw an additional spike across all arguments in August, just before facemasks became mandatory on public transportation (Statsministeriet, 2020b). While tweets rejecting misinformation initially outpaced those spreading it, the share of misinformation tweets increased just before the first facemask mandate and continued to rise every time the government announced new facemask-related regulations (Statsministeriet, n.d.). By October, the number of tweets spreading misinformation exceeded the number rejecting it. By December, 2.97% of all Danish tweets about facemasks in the study period spread misinformation, and only 2.07% rejected it.
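The closing percentages are cumulative shares of all facemask tweets in the period (note that 2.97% + 2.07% = 5.04%, the misinformation share reported above). A minimal sketch of computing such shares month by month, assuming each coded tweet or retweet is stored as a hypothetical `(date, code)` record with codes `"spread"`, `"reject"`, or `"irrelevant"`:

```python
from collections import Counter
from datetime import date


def cumulative_shares(records):
    """Return {(year, month): (spread_pct, reject_pct)}.

    Each value is the cumulative percentage of all facemask tweets posted up
    to and including that month. records: iterable of (date, code) pairs,
    where code is "spread", "reject", or "irrelevant" (hypothetical labels).
    Retweets count as separate records, as in the raw counts above.
    """
    totals = Counter()
    by_month = {}
    for day, code in sorted(records):
        totals[code] += 1
        totals["all"] += 1
        # Overwritten until the month's last record, so each month keeps its
        # end-of-month cumulative value.
        by_month[(day.year, day.month)] = (
            100 * totals["spread"] / totals["all"],
            100 * totals["reject"] / totals["all"],
        )
    return by_month


toy = [
    (date(2020, 3, 20), "spread"),
    (date(2020, 3, 21), "reject"),
    (date(2020, 3, 22), "irrelevant"),
    (date(2020, 3, 23), "irrelevant"),
    (date(2020, 8, 10), "spread"),
]
print(cumulative_shares(toy))
```

Using cumulative rather than per-month shares mirrors the "by December" framing; per-month shares would instead reset the counters at each month boundary.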

Finding 2: There is a near equal number of users spreading and rejecting misinformation, but those spreading misinformation are more active on the topic.

The numbers of unique users rejecting (n = 161) and spreading misinformation (n = 158) are almost equal. However, Student’s t-tests show that users spreading misinformation tweet significantly more about facemasks (μ = 1.76 ± 2.24 tweets) than users rejecting it (μ = 1.20 ± 0.67 tweets), t(318) = 3.04, p = .003, d = 0.34. The size of this effect is small to moderate, and we see no difference between the two groups in their overall frequency of posts. In sum, the two groups in our dataset are similar in size, but misinformation spreaders tweet more about the subject.
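The test statistic and effect size can be recomputed from the group summaries alone. The sketch below assumes the ± values are sample standard deviations and that the user counts in this finding are the group sizes; it is an illustration, not the authors' analysis code. With these group sizes, the pooled-variance formula gives df = n1 + n2 - 2 = 317 rather than the reported 318, which may reflect a slightly different analysis sample; the text does not say.

```python
import math


def student_t_from_summary(m1, s1, n1, m2, s2, n2):
    """Student's (pooled-variance) t and Cohen's d from group summaries.

    Assumes s1 and s2 are sample standard deviations (n - 1 denominator).
    Returns (t, df, d).
    """
    df = n1 + n2 - 2
    # Pooled SD: each group's variance weighted by its degrees of freedom.
    pooled_sd = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / df)
    # Cohen's d: the mean difference expressed in pooled-SD units.
    d = (m1 - m2) / pooled_sd
    # Standard error of the mean difference under equal variances.
    se = pooled_sd * math.sqrt(1 / n1 + 1 / n2)
    t = (m1 - m2) / se
    return t, df, d


# Finding 2 summaries: spreaders (n = 158) vs. rejectors (n = 161).
t, df, d = student_t_from_summary(1.76, 2.24, 158, 1.20, 0.67, 161)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")  # t(317) = 3.04, d = 0.34
```

For a p-value, `scipy.stats.ttest_ind_from_stats` accepts the same six summaries. Because the two SDs differ by a factor of more than three, Welch's unequal-variance test (`equal_var=False` in that function) would be a reasonable robustness check.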

Finding 3: Tweets rejecting misinformation are three times more likely to use humor than tweets spreading misinformation.

We find that tweets rejecting misinformation are over three times more likely to use humor, via emoji, images, or jokes (33.2%), than tweets spreading misinformation (8.3%), t(469) = 7.20, p < .0001, d = 0.67. Our coders found posts using humor difficult to interpret: such posts are 14.3 times more likely to be coded as difficult to score, t(469) = 15.07, p < .0001, d = 1.62. However, there is no significant difference (p > .01) in scoring difficulty between tweets spreading and rejecting misinformation.

Finding 4: Users spreading misinformation put forward explicit and agitated arguments that COVID-19 isn’t real or, more often, that facemasks are dangerous.

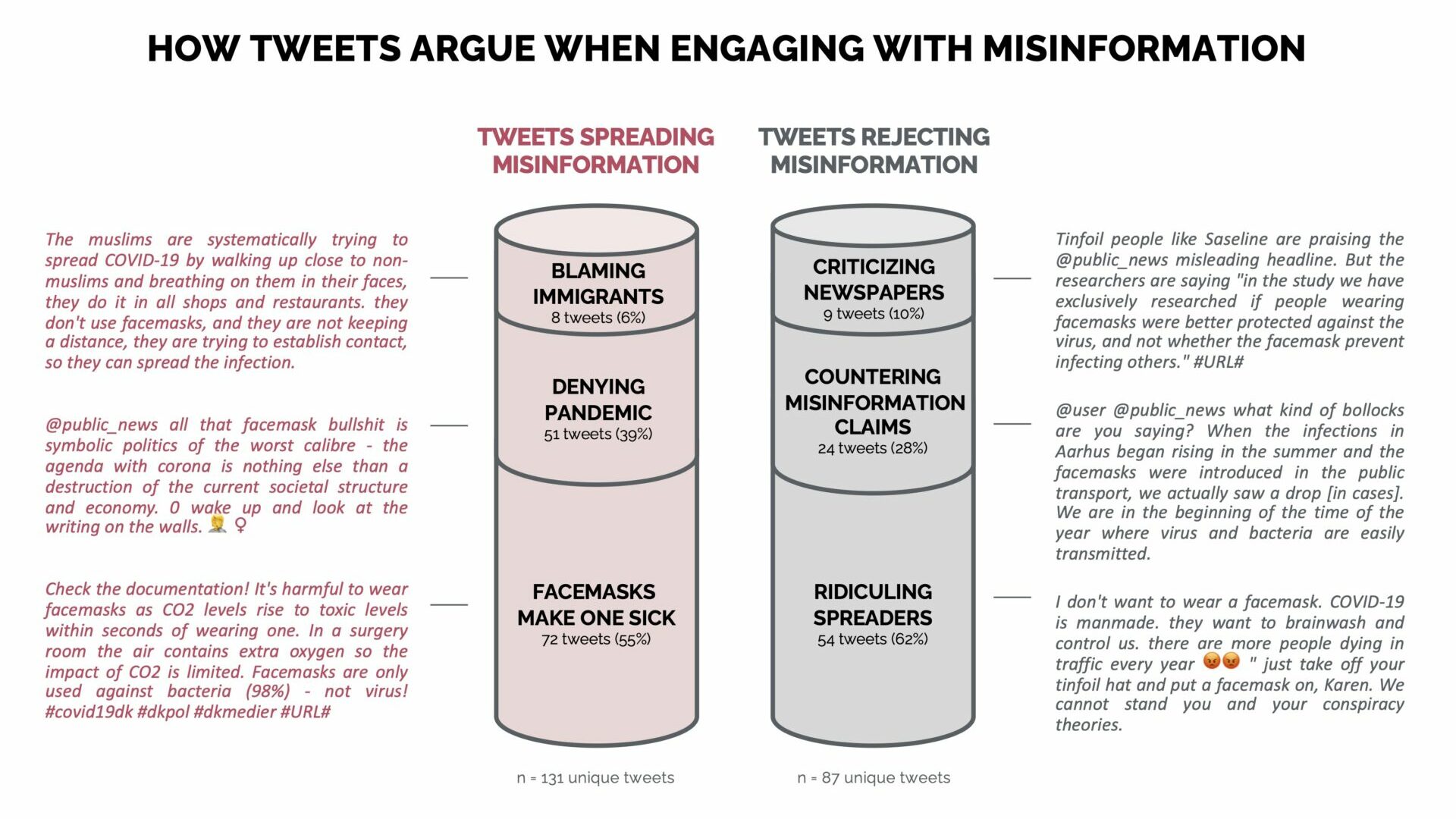

The majority of unique misinformation tweets (non-retweets) warn against using facemasks. As shown in Figure 3, these tweets claim either that facemasks are unnecessary because COVID-19 doesn’t exist (39%) or that using facemasks makes one sick (55%). Among the latter, many tweets rely on research and technical explanations to prove their point: “Check the documentation! It’s harmful to wear facemasks as CO2 levels rise to toxic levels within seconds of wearing one.” A smaller fraction of tweets (6%) contain no concrete arguments against facemasks but instead blame immigrants for wearing facemasks improperly. As shown in Figure 2, only 8.3% of misinformation tweets use humor to support their arguments, and some of these ridicule those following corona guidelines with words such as “lemmings,” “fakefluenze,” “selfish boomers,” and “corona-mafia.” However, such ridiculing comments appear mainly in tweets propagating racist arguments or denying the existence of COVID-19 and rarely in tweets claiming that facemasks make one sick. Across all arguments, the discussions can become charged, with profanity (e.g., “All the facemask bullshit is just symbolic politics of the worst caliber,” “tear off the mouth diaper and burn it”) or aggressive punctuation (“when will we drop the need to wear facemasks?!”). Overall, tweets spreading misinformation often have a condescending tone and aim to refute the dominant discourse.

Finding 5: Most tweets rejecting misinformation didn’t address the misinformation explicitly; they instead joked to their own followers about people believing in misinformation.

The majority of tweets rejecting misinformation are not aimed at correcting false or misleading claims. As shown in Figure 3, most tweets rejecting misinformation (62%) do not argue explicitly against misinformation; instead, they stigmatize or mock the misinformation spreaders. Some tweets criticize misguided newspaper articles (10%), and just over a quarter (28%) actually counter-argue misinformation claims.

Crucially, the majority of tweets rejecting misinformation talked about misinformation spreaders, not with them. In these tweets, misinformation spreaders were described as “idiots” and “wearers of tinfoil hats” or stigmatized as an “anti-facemask-faction.” Most of these tweets contained only ridiculing comments, as in this example: “Now, due to coronavirus, we don’t just need to wear a facemask outside, but also to walk around carrying our tinfoil hats… #URL#.” Rather than engaging in debate or putting forward arguments to convince misinformation spreaders that their positions are misguided, these tweets stigmatize and ridicule.

Methods

We studied facemask-related misinformation on Twitter in Denmark. The nature of our single case study limits generalizability (see Appendix A).

Data collection

Our data was collected between February 1 and November 30, 2020. We primarily live-collected data from Twitter via its API to ensure that we did not underestimate the number of tweets spreading misinformation: in recent years, Twitter has introduced several defense mechanisms that hinder the spread of misinformation by banning accounts or deleting content. Therefore, we live-collected as many tweets as possible and relied on historical tweets from the Premium Twitter API only to fill gaps.

The live collection of tweets took place from April 15 to June 23 and from August 3 to November 30, 2020 [Note 2: The Danish Twitter dataset was collected by Rebekah Brita Baglini and Kristoffer Laigaard Nielbo at the Center for Humanities Computing Aarhus, part of the Faculty of Arts at Aarhus University.]. These tweets were queried using the most common Scandinavian words from the OpenSubtitles word frequency lists (Lison & Tiedemann, 2016). We removed words that are not specific to Scandinavian languages, combined the 100 highest-frequency unique words from each language, and used them to query live-streamed tweets via the Twitter API using DMI-TCAT (Borra & Rieder, 2014). Finally, Twitter’s native language classifier was used to identify Danish tweets.
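The term-list construction and the final language filter can be sketched as follows. This assumes the frequency lists are already loaded as ranked word lists per language and that "non-specific" words are supplied as a separate set; how that set was chosen is not stated, so the `non_specific` input and all function names here are hypothetical. The filter relies on the `lang` field that Twitter's v1.1 tweet objects carry for the machine-detected language (a BCP 47 code such as `"da"`).

```python
def build_track_terms(freq_lists, non_specific, k=100):
    """Combine the top-k words per language into one de-duplicated term list.

    freq_lists: {language_code: [words sorted by descending frequency]}
    non_specific: words judged not specific to Scandinavian languages
                  (hypothetical input; the selection criterion is assumed).
    """
    terms, seen = [], set()
    for lang in sorted(freq_lists):  # sorted for a deterministic order
        kept = [w for w in freq_lists[lang] if w not in non_specific][:k]
        for word in kept:
            if word not in seen:  # words shared across languages appear once
                seen.add(word)
                terms.append(word)
    return terms


def danish_only(tweets):
    """Keep tweet dicts whose Twitter-assigned language code is Danish."""
    return [tw for tw in tweets if tw.get("lang") == "da"]


toy_lists = {
    "da": ["og", "i", "the", "jeg"],
    "no": ["og", "i", "jeg"],
    "sv": ["och", "i", "jag"],
}
print(build_track_terms(toy_lists, non_specific={"the"}, k=2))
```

Three languages at 100 words each stays under the 400-keyword cap that Twitter documented for the `track` parameter of its v1.1 streaming filter endpoint, which is one plausible reason for a per-language cutoff of this size.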

The Premium Twitter API was used to fill in the remainder of the dataset (February 1 to April 15, 2020; June 23 to August 3, 2020; and October 28, 2020). These tweets were queried using the most frequent Danish words obtained from the Snowball library (Snowball, n.d.). We used language as a proxy for country, given the local specificity of the Scandinavian languages.
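Deciding which date ranges need historical backfill amounts to taking the complement of the live-collection windows within the study period. In the sketch below, all ranges are half-open `[first_day, day_after_last)` so that windows sharing a boundary day neither overlap nor leave a gap; the `LIVE_WINDOWS` values are assumptions chosen to reproduce the backfill ranges listed above, not logged collection times.

```python
from datetime import date


def coverage_gaps(start, end, windows):
    """Return the sub-ranges of [start, end) not covered by any window.

    windows: iterable of half-open (first_day, day_after_last) date pairs.
    """
    gaps = []
    cursor = start
    for w_start, w_end in sorted(windows):
        if w_start > cursor:
            gaps.append((cursor, w_start))  # uncovered stretch before window
        cursor = max(cursor, w_end)         # tolerate overlapping windows
    if cursor < end:
        gaps.append((cursor, end))          # uncovered tail of the period
    return gaps


# Assumed live-collection windows (hypothetical, half-open).
LIVE_WINDOWS = [
    (date(2020, 4, 15), date(2020, 6, 23)),
    (date(2020, 8, 3), date(2020, 10, 28)),
    (date(2020, 10, 29), date(2020, 12, 1)),
]
STUDY_START, STUDY_END = date(2020, 2, 1), date(2020, 12, 1)

for gap_start, gap_end in coverage_gaps(STUDY_START, STUDY_END, LIVE_WINDOWS):
    print(gap_start, "up to (not including)", gap_end)
```

The three gaps it reports correspond to February 1 to April 14, June 23 to August 2, and the single day of October 28.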

Our dataset may include bots and cyborgs (automated and partly automated accounts). Although methods have been developed to detect bots (Davis et al., 2016; Wojcik et al., 2018), many are not yet available for Danish.

Coding

To collect misinformation, we identified all Danish tweets containing at least one COVID-related and one facemask-related keyword [Note 3: The Danish word for facemask (mundbind) is unambiguous.], leaving 9,345 tweets (5,712 unique tweets) (see Appendix B).
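The two-keyword filter and the reduction to unique tweets can be sketched as below. Only `mundbind` comes from the text; the COVID terms are hypothetical stand-ins for the Appendix B list. Two further choices are assumptions: substring matching, so that Danish compounds such as "mundbindskrav" also match, and treating a retweet as a copy of its original by stripping the `RT @user: ` prefix.

```python
import re
from collections import defaultdict

FACEMASK_TERMS = ("mundbind",)     # Danish for facemask (from the text)
COVID_TERMS = ("corona", "covid")  # hypothetical stand-ins for Appendix B

RT_PREFIX = re.compile(r"^RT @\w+: ")


def is_candidate(text):
    """True if the text mentions at least one facemask and one COVID term."""
    lowered = text.lower()
    return (any(term in lowered for term in FACEMASK_TERMS)
            and any(term in lowered for term in COVID_TERMS))


def group_unique(tweets):
    """Map each unique tweet text to the ids of all matching tweets carrying it.

    tweets: iterable of (tweet_id, text). Retweets are folded into their
    original by stripping the "RT @user: " prefix (a simplifying assumption).
    """
    groups = defaultdict(list)
    for tweet_id, text in tweets:
        if is_candidate(text):
            key = " ".join(RT_PREFIX.sub("", text).split())  # normalize spaces
            groups[key].append(tweet_id)
    return dict(groups)


toy_tweets = [
    (1, "Mundbind mod corona? Nej tak"),
    (2, "RT @anna: Mundbind mod corona? Nej tak"),
    (3, "Nyt mundbindskrav pga. covid"),
    (4, "Mundbind i bussen"),  # no COVID term, so filtered out
]
groups = group_unique(toy_tweets)
print(len(groups), "unique of", sum(len(ids) for ids in groups.values()), "tweets")
```

Keeping the list of tweet ids per unique text makes it possible to code each unique tweet once and later carry its code back to every copy.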

Six coders were trained on a predefined codebook to score each unique tweet as irrelevant, spreading misinformation, or rejecting misinformation. Additionally, for each unique tweet, they noted whether a) it contained humor and b) it was difficult to code (see Appendix D). The codebook describes every verified Danish misinformation story from the fact-checking site TjekDet.dk (see Appendices C and D).
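The codebook output for each unique tweet can be represented as a small record, and the step of carrying each unique tweet's code over to its retweets and duplicates before counting totals can be sketched as follows. The class, field, and function names are hypothetical; they mirror the three decisions described in the text (stance, humor, difficulty).

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Stance(Enum):
    IRRELEVANT = "irrelevant"
    SPREADING = "spreading"
    REJECTING = "rejecting"


@dataclass(frozen=True)
class Code:
    """One coding decision for one unique tweet, mirroring the codebook."""
    stance: Stance
    humor: bool
    difficult: bool


def total_counts(codes, groups):
    """Propagate codes from unique tweets to every copy and tally stances.

    codes: {unique_text: Code}
    groups: {unique_text: [ids of all tweets and retweets with that text]}
    """
    counts = Counter()
    for text, code in codes.items():
        counts[code.stance] += len(groups[text])  # one count per copy
    return counts


codes = {
    "a": Code(Stance.SPREADING, humor=False, difficult=False),
    "b": Code(Stance.REJECTING, humor=True, difficult=True),
}
print(total_counts(codes, {"a": [1, 2, 3], "b": [4]}))
```

Counting copies rather than unique texts is what turns unique-tweet codes into the raw tweet totals (including retweets) used in Finding 1.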

Intercoder reliability was calculated on fifty randomly selected tweets from our dataset. For the codes spreading misinformation, rejecting misinformation, and irrelevant, the coders reached a Krippendorff’s alpha of 0.81; a value of 0.80 is considered sufficient to indicate coder agreement (Krippendorff, 2004). Humor annotation had a Krippendorff’s alpha of 1.0, indicating perfect agreement. Finally, we matched the coded unique tweets to their duplicates to determine the total number of tweets spreading and rejecting facemask-related misinformation.
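For nominal codes such as spreading, rejecting, and irrelevant, Krippendorff's alpha can be computed with the coincidence-matrix formulation, α = 1 − D_o/D_e. The stdlib-only sketch below is an illustration; the text does not say which software the authors used. Each unit (tweet) lists the codes assigned by the coders who rated it, so missing ratings are simply omitted.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Nominal Krippendorff's alpha via the coincidence matrix.

    units: one list per coded item holding the codes assigned by the coders
    who rated it (missing ratings left out).
    """
    coincidences = Counter()  # o[(c, k)]: weighted count of value pairs
    for values in units:
        m = len(values)
        if m < 2:
            continue  # fewer than two ratings: the unit is not pairable
        # Every ordered pair of ratings from different coders, weight 1/(m-1).
        for i, j in permutations(range(m), 2):
            coincidences[(values[i], values[j])] += 1 / (m - 1)

    marginals = Counter()  # n_c: occurrences of value c among pairable ratings
    for (c, _k), weight in coincidences.items():
        marginals[c] += weight
    n = sum(marginals.values())

    observed = sum(w for (c, k), w in coincidences.items() if c != k)
    expected = sum(marginals[c] * marginals[k]
                   for c in marginals for k in marginals if c != k)
    if expected == 0:
        return float("nan")  # only one category used: alpha is undefined
    # D_o = observed / n and D_e = expected / (n * (n - 1)).
    return 1 - (n - 1) * observed / expected


# Two hypothetical coders, four tweets.
ratings = [["spread", "spread"], ["spread", "reject"],
           ["reject", "reject"], ["reject", "reject"]]
print(round(krippendorff_alpha_nominal(ratings), 3))  # 0.533
```

Unlike raw percent agreement (75% in this toy example), alpha discounts the agreement expected by chance given how often each code is used, which is why it sits well below 0.75 here.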

Quantitative and qualitative analysis

We used Student’s t-tests, the standard test for significant differences between the means of two groups, with a p-value threshold of 0.01 to compare misinformation spreaders and rejectors. Following APA convention, we report t values with their degrees of freedom alongside the obtained p-values. In addition, we calculated Cohen’s d to determine the effect size of each comparison. During our qualitative analysis, each tweet was assigned only one code category. The six categories were identified through open coding of a sample of tweets. Based on these categories, we analyzed the full sample of misinformation-related tweets (n = 218 unique tweets) (see Appendix E).
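Because each unique tweet receives exactly one category, the percentages reported in Findings 4 and 5 are shares computed within each stance separately. A minimal sketch, using hypothetical labels for the six categories described in those findings (three for spreading, three for rejecting):

```python
from collections import Counter

# Hypothetical labels for the six categories described in Findings 4 and 5.
SPREADING_CATEGORIES = ("covid_denial", "masks_harmful", "blames_immigrants")
REJECTING_CATEGORIES = ("stigmatizes_spreaders", "criticizes_news",
                        "counter_argues")


def category_shares(labels, categories):
    """Percentage of unique tweets in each category within one stance.

    labels: one category label per unique tweet (single-label coding).
    categories: the categories belonging to the stance of interest.
    """
    in_stance = [label for label in labels if label in categories]
    if not in_stance:
        return {c: 0.0 for c in categories}  # avoid dividing by zero
    counts = Counter(in_stance)
    return {c: 100 * counts[c] / len(in_stance) for c in categories}


toy_labels = ["covid_denial", "masks_harmful", "masks_harmful",
              "masks_harmful", "counter_argues"]
print(category_shares(toy_labels, SPREADING_CATEGORIES))
print(category_shares(toy_labels, REJECTING_CATEGORIES))
```

Single-label coding guarantees that the shares within each stance sum to 100%, as the reported 39/55/6 and 62/10/28 splits do.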

Bibliography

Abbasi, K. (2020). The curious case of the Danish mask study. The BMJ, 371. https://doi.org/10.1136/bmj.m4586

Abidin, C. (2020). Meme factory cultures and content pivoting in Singapore and Malaysia during COVID-19. Harvard Kennedy School (HKS) Misinformation Review, 1(3). https://doi.org/10.37016/mr-2020-031

Allcott, H., & Gentzkow, M. (2017). Social media and fake news in the 2016 election. Journal of Economic Perspectives, 31(2), 211–236. https://doi.org/10.1257/jep.31.2.211

Bail, C. A., Argyle, L. P., Brown, T. W., Bumpus, J. P., Chen, H., Fallin Hunzaker, M. B., Lee, J., Mann, M., Merhout, F., & Volfovsky, A. (2018). Exposure to opposing views on social media can increase political polarization. Proceedings of the National Academy of Sciences of the United States of America, 115(37), 9216–9221. https://doi.org/10.1073/pnas.1804840115

Berinsky, A. J. (2017). Rumors and health care reform: Experiments in political misinformation. British Journal of Political Science, 47(2), 241–262. https://doi.org/10.1017/S0007123415000186

Borra, E., & Rieder, B. (2014). Programmed method: Developing a toolset for capturing and analyzing tweets. Aslib Journal of Information Management, 66(3), 262–278. https://doi.org/10.1108/AJIM-09-2013-0094

Caldarelli, G., de Nicola, R., Petrocchi, M., Pratelli, M., & Saracco, F. (2021). Flow of online misinformation during the peak of the COVID-19 pandemic in Italy. EPJ Data Science, 10(1), 1–23. https://doi.org/10.1140/EPJDS/S13688-021-00289-4

Chen, E., Lerman, K., & Ferrara, E. (2020). Tracking social media discourse about the COVID-19 pandemic: Development of a public coronavirus Twitter data set. JMIR Public Health and Surveillance, 6(2), e19273. https://doi.org/10.2196/19273

Cinelli, M., Quattrociocchi, W., Galeazzi, A., Valensise, C. M., Brugnoli, E., Schmidt, A. L., Zola, P., Zollo, F., & Scala, A. (2020). The COVID-19 social media infodemic. Scientific Reports, 10(1), 16598. https://doi.org/10.1038/s41598-020-73510-5

Dam, P. S. (2019, February 4). Hvem bruger sociale medier? [Who uses social media?]. Berlingske. https://www.berlingske.dk/nyheder/hvem-bruger-sociale-medier

Davis, C. A., Varol, O., Ferrara, E., Flammini, A., & Menczer, F. (2016). BotOrNot: A system to evaluate social bots. In WWW ’16 Companion: Proceedings of the 25th International Conference Companion on World Wide Web (pp. 273–274). International World Wide Web Conferences Committee. https://doi.org/10.1145/2872518.2889302

Gallotti, R., Valle, F., Castaldo, N., Sacco, P., & De Domenico, M. (2020). Assessing the risks of ‘infodemics’ in response to COVID-19 epidemics. Nature Human Behaviour, 4(12), 1285–1293. https://doi.org/10.1038/s41562-020-00994-6

Goffman, E. (1963). Stigma: Notes on the management of spoiled identity. Simon and Schuster.

Hannak, A., Margolin, D., Keegan, B., & Weber, I. (2014). Get back! You don’t know me like that: The social mediation of fact checking interventions in Twitter conversations. In Proceedings of the 8th International Conference on Weblogs and Social Media (pp. 187–196). Association for the Advancement of Artificial Intelligence. https://www.aaai.org/ocs/index.php/ICWSM/ICWSM14/paper/view/8115

Illumi. (2021, June 30). 6 populære sociale medier [6 popular social media]. https://www.illumi.dk/viden/de-mest-populaere-sociale-medier/

Karlsen, R., Steen-Johnsen, K., Wollebæk, D., & Enjolras, B. (2017). Echo chamber and trench warfare dynamics in online debates. European Journal of Communication, 32(3), 257–273. https://doi.org/10.1177/0267323117695734

Krakov, R. (2020, August 15). Sundhedsmyndighedernes vej fra afvisning af mundbind til krav om mundbind [The health authorities’ way from dismissing to requiring facemasks]. Berlingske. https://www.berlingske.dk/samfund/sundhedsmyndighedernes-vej-fra-afvisning-af-mundbind-til-krav-om-mundbind

Krippendorff, K. (2004). Reliability in content analysis. Human Communication Research, 30(3), 411–433. https://doi.org/10.1111/j.1468-2958.2004.tb00738.x

Lison, P., & Tiedemann, J. (2016). OpenSubtitles2016: Extracting large parallel corpora from movie and TV subtitles. In N. Calzolari, K. Choukri, T. Declerck, S. Goggi, M. Grobelnik, B. Maegaard, J. Mariani, H. Mazo, A. Moreno, J. Odijk, & S. Piperidis (Eds.), Proceedings of the 10th International Conference on Language Resources and Evaluation (pp. 923–929). European Language Resources Association. http://www.lrec-conf.org/proceedings/lrec2016/pdf/947_Paper.pdf

Magee, J. C., & Galinsky, A. D. (2008). Social hierarchy: The self‐reinforcing nature of power and status. Academy of Management Annals, 2(1), 351–398. https://doi.org/10.1080/19416520802211628

Marett, K., & Joshi, K. D. (2009). The decision to share information and rumors: Examining the role of motivation in an online discussion forum. Communications of the Association for Information Systems, 24(1), 47–68. https://doi.org/10.17705/1CAIS.02404

Malhotra, P. (2020). A relationship-centered and culturally informed approach to studying misinformation on COVID-19. Social Media + Society, 6(3). https://doi.org/10.1177/2056305120948224

Margolin, D. B., Hannak, A., & Weber, I. (2018). Political fact-checking on Twitter: When do corrections have an effect? Political Communication, 35(2), 196–219. https://doi.org/10.1080/10584609.2017.1334018

Micallef, N., He, B., Kumar, S., Ahamad, M., & Memon, N. (2020). The role of the crowd in countering misinformation: A case study of the COVID-19 infodemic. In Proceedings of the 2020 IEEE International Conference on Big Data (pp. 748–757). IEEE. https://doi.org/10.1109/BigData50022.2020.9377956

Munger, K. (2017). Tweetment effects on the tweeted: Experimentally reducing racist harassment. Political Behavior, 39(3), 629–649. https://doi.org/10.1007/s11109-016-9373-5

Nyhan, B., & Reifler, J. (2015). The effect of fact-checking on elites: A field experiment on U.S. state legislators. American Journal of Political Science, 59(3), 628–640. https://doi.org/10.1111/ajps.12162

OECD. (2021). Trust in government (indicator). https://doi.org/10.1787/1de9675e-en

Petersen, M. B., Osmundsen, M., & Arceneaux, K. (2020). The “need for chaos” and motivations to share hostile political rumors. PsyArXiv. https://doi.org/10.31234/osf.io/6m4ts

Pew Research Center. (2018, May 17). Facts on news media & political polarization in Italy. https://www.pewresearch.org/global/fact-sheet/news-media-and-political-attitudes-in-italy/

Pulido, C. M., Villarejo-Carballido, B., Redondo-Sama, G., & Gómez, A. (2020). COVID-19 infodemic: More retweets for science-based information on coronavirus than for false information. International Sociology, 35(4), 377–392. https://doi.org/10.1177/0268580920914755

Schmidt, T., Salomon, E., Elsweiler, D., & Wolff, C. (2021). Information behavior towards false information and “fake news” on Facebook: The influence of gender, user type and trust in social media. In Information Between Data and Knowledge (pp. 125–154). Werner Hülsbusch. https://doi.org/10.5283/epub.44942

Shahi, G. K., Dirkson, A., & Majchrzak, T. A. (2021). An exploratory study of COVID-19 misinformation on Twitter. Online Social Networks and Media, 22, 100104. https://doi.org/10.1016/j.osnem.2020.100104

Snowball. (n.d.). String processing language for creating stemming algorithms. https://snowballstem.org/

Statsministeriet. (2020b, August 15). Pressemøde den 15. august 2020 [Press conference of August 15, 2020]. https://www.stm.dk/presse/pressemoedearkiv/pressemoede-den-15-august-2020/

Statsministeriet. (n.d.). Pressemødearkiv [Press conference archive]. https://www.stm.dk/presse/pressemoedearkiv

Van der Meer, T. G. L. A., & Jin, Y. (2020). Seeking formula for misinformation treatment in public health crises: The effects of corrective information type and source. Health Communication, 35(5), 560–575. https://doi.org/10.1080/10410236.2019.1573295

Vosoughi, S., Roy, D., & Aral, S. (2018). The spread of true and false news online. Science, 359(6380), 1146–1151. https://doi.org/10.1126/science.aap9559

Vraga, E. K., & Bode, L. (2017). Using expert sources to correct health misinformation in social media. Science Communication, 39(5), 621–645. https://doi.org/10.1177/1075547017731776

Vraga, E. K., Kim, S. C., & Cook, J. (2019). Testing logic-based and humor-based corrections for science, health, and political misinformation on social media. Journal of Broadcasting & Electronic Media, 63(3), 393–414. https://doi.org/10.1080/08838151.2019.1653102

Wojcik, S., Messing, S., Smith, A., Rainie, L., & Hitlin, P. (2018). Bots in the Twittersphere. Pew Research Center. https://www.pewresearch.org/internet/2018/04/09/bots-in-the-twittersphere/

WHO. (2020, February 8). Director-General’s remarks at the media briefing on 2019 novel coronavirus on 8th of February 2020. https://www.who.int/director-general/speeches/detail/director-general-s-remarks-at-the-media-briefing-on-2019-novel-coronavirus---8-february-2020

Funding

The study was funded by the Carlsberg Foundation through grant CF20-0044, HOPE: How Democracies Cope with Covid-19.

Competing Interests

The authors declare no competing interests.

Ethics

Our research complies with the University of Copenhagen’s Code of Conduct for Responsible Research.

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

All materials needed to replicate this study are available via the Harvard Dataverse: https://doi.org/10.7910/DVN/KIUWDB

Acknowledgements

We wish to thank the editors, the two reviewers, Michael Bang Petersen, Andreas Roepstorff, Sune Lehmann, Frederik Hjorth, Asmus Leth Olsen, Gregory Eady, Amalie Pape, Kristoffer Laigaard Nielbo, Matias Piqueras, SODAS employees and all the members of the HOPE project for helpful comments.