Peer Reviewed

Research note: Examining how various social media platforms have responded to COVID-19 misinformation

We analyzed community guidelines and official news releases and blog posts from 12 leading social media and messaging platforms (SMPs) to examine their responses to COVID-19 misinformation. While the majority of platforms stated that they prohibited COVID-19 misinformation, the responses of many platforms lacked clarity and transparency. Facebook, Instagram, YouTube, and Twitter had largely consistent responses, but other platforms varied with regard to types of content prohibited, criteria guiding responses, and remedies developed to address misinformation. Only Twitter and YouTube described their systems for applying various remedies. These differences highlight the need to establish general standards across platforms to address COVID-19 misinformation more cohesively.

Research Questions

- Do SMPs prohibit COVID-19 misinformation? What types of COVID-19 content do they prohibit, and what criteria do they use to inform action on misleading content?

- What remedies have SMPs developed to address COVID-19 misinformation? How are different remedies applied?

Essay Summary

- We conducted a content analysis of community guidelines, official news releases, and blog posts published between February 1, 2020, and April 1, 2021, for 10 social media platforms (Facebook, YouTube, Twitter, Instagram, Reddit, Snapchat, LinkedIn, TikTok, Tumblr, and Twitch) and two messaging platforms (Messenger and WhatsApp). This initial analysis was updated by a rapid review of the same data sources on November 23, 2021.

- The majority of the SMPs explicitly prohibited COVID-19 misinformation (N = 8), but only four (Facebook, Instagram, YouTube, and Twitter) had an independent COVID-19 misinformation policy. Twitch, Tumblr, Messenger, and WhatsApp did not prohibit COVID-19 misinformation.

- The majority of SMPs (N = 10) developed one or more remedies to address misinformation and connect users with credible information. Remedies included soft measures, such as attaching warning labels, and hard measures, such as content removal and account bans. Only YouTube and Twitter described their systems for applying these different remedies, with progressively harsher remedies applied to repeat violators.

- The lack of clarity and transparency from many SMPs regarding their responses to COVID-19 misinformation and systems for applying various remedies makes it difficult for policymakers, researchers, and the general public to determine whether platforms are doing enough to address COVID-19 misinformation.

- Establishing a general set of policies and practices might be necessary to address COVID-19 misinformation in the broader social media ecosystem.

Implications

Efforts to contain the COVID-19 pandemic have been complicated by a parallel infodemic, defined as an overabundance of information, including misinformation, which occurs during a disease outbreak (WHO, 2021). With over 3.8 billion users globally (DataReportal, 2021), social media and messaging platforms (hereafter referred to as SMPs) have become one of the major means of seeking and sharing COVID-19–related information. While the wide reach of SMPs has benefits in democratizing information access, it has also facilitated the rapid spread of mis- and disinformation (Cinelli et al., 2020; Kouzy et al., 2020). Some examples of COVID-19 misinformation¹ that have spread widely on SMPs include claims that 5G causes the virus and that COVID-19 vaccines alter DNA (Islam et al., 2021, 2020; Naeem et al., 2021). Such claims have been widely viewed and shared on SMPs (Nielsen et al., 2020). A recent study found that exposure to such misinformation was negatively associated with vaccination intention (Loomba et al., 2021), illustrating that misinformation has real consequences for containing the pandemic.

¹ In the interest of brevity, in this paper we use the term “misinformation” broadly to cover information and claims that are false or misleading, whether intentionally or unintentionally. As noted elsewhere in this paper, the platforms we examine define the scope of “misinformation” in various ways.

The magnitude of the COVID-19 pandemic has resulted in urgent calls from all sectors of society for SMPs to do more to address COVID-19 misinformation (Disinformation nation: Social media’s role in promoting extremism and misinformation, 2021; Donovan, 2020). However, SMPs face a number of challenges in determining how best to do this, including the massive volume of content and nuanced nature of misinformation. Thus, SMPs will need to decide what types of content to prioritize, when to take action, and what type of action to take. Legal scholars have proposed typologies of content moderation remedies in internet platforms (Goldman, 2021; Grimmelmann, 2015). These include more lenient “soft” remedies (e.g., warning labels) and more stringent “hard” remedies (e.g., removing content) (Goldman, 2021; Grimmelmann, 2015). Given that the different types of remedies have different consequences for users, it is important to understand how platforms apply these remedies. Additionally, as many SMP users use multiple platforms (DataReportal, 2021), as newer platforms are gaining popularity, and as platforms are increasingly interconnected (e.g., TikTok videos can be shared on Facebook), effectively addressing misinformation requires responses from all platforms.

We examined responses to COVID-19 misinformation by 12 leading SMPs and found that the majority (eight out of 12) prohibited COVID-19 misinformation. This finding by itself is noteworthy, as for several SMPs, it represents a stark reversal from their previous stance on content regulation. As recently as two years ago, companies such as Facebook² and Twitter refused to act on misinformation, arguing that doing so would infringe on free speech and was outside of their platform mission (Conger, 2019; Kang & Isaac, 2019). While this change is encouraging, there is much room for improvement in how SMPs address COVID-19 misinformation.

² Facebook, Inc. changed its name to Meta on October 28, 2021. As the company’s name was Facebook at the time of the initial analysis, we use the name Facebook when referring to Facebook, Inc.

Descriptions of the responses of many SMPs lacked clarity, and their implementation lacked transparency. Many SMPs did not clearly articulate the types of content prohibited. This might be partly attributable to the challenges inherent in moderating scientific content, which is dynamic and often equivocal (Baker et al., 2020). In the COVID-19 context, this has been reflected in evolving scientific positions regarding the nature of COVID-19 transmission (WHO, 2020) and lack of consensus on boosters (Krause et al., 2021), to give just a few examples. Challenges notwithstanding, clearly outlining prohibited content is important. It can help researchers assess whether the types of content SMPs prohibit correspond with the types of content associated with real-world harm. Such evidence could guide SMPs on prioritizing and updating the types of content they prohibit. For instance, Facebook initially did not prohibit personal anecdotes or first-person accounts, but evidence showed that this type of content might be contributing to vaccine hesitancy (Dwoskin, 2021). Facebook has since updated its policy on content related to COVID-19 vaccines and now reduces the distribution of alarmist or sensationalist content about vaccines. Additionally, few platforms have a specific COVID-19 misinformation policy. During an evolving emergency such as a pandemic, such a policy could make it possible for platforms to communicate their stance on various types of content, changes to their responses, and the consequences of violating policies in a transparent and accessible manner.

Similar to previous studies (Nunziato, 2020; Sanderson et al., 2021), we found that most SMPs employed a combination of soft and hard remedies to address misinformation. Most platforms, however, did not clearly describe their systems for applying these different types of remedies. Clear visibility of consequences can deter bad behavior (Seering et al., 2017), and the U.S. surgeon general’s report on health misinformation calls for platforms to “impose clear consequences for accounts that repeatedly violate platform policies” (Office of the Surgeon General, 2021). YouTube’s and Twitter’s strike systems are consistent with the responsive regulation approach, which advocates using soft remedies before escalating to hard remedies (Ayres & Braithwaite, 1992). Given the findings of a recent report that 12 people were responsible for 73% of misinformation on SMPs (Center for Countering Digital Hate, 2021; Dwoskin, 2021), this approach could be well suited to deter superspreaders and repeat violators. However, Facebook disputed the conclusion of that report, claiming that these 12 individuals were responsible for only 0.05% of all views of vaccine-related content on that platform (Bickert, 2021). Additional analyses by independent researchers could provide more evidence regarding the extent to which specific individuals contribute to the spread of misinformation and the effectiveness of responsive regulation in curbing the spread of misinformation. More transparency from platforms regarding their application of different remedies is key to determining whether they are doing enough to address misinformation.

Facebook, Instagram, YouTube, and Twitter had largely similar responses, but the responses of other platforms differed. There is recognition among scholars that a one-size-fits-all approach to content moderation is not practical as SMPs vary with regard to their functions, audiences, and capacity to moderate content (Gillespie et al., 2020; Goldman, 2021). For instance, the encrypted nature of communication on messaging platforms means that content moderation approaches for these platforms will need to preserve user privacy (Gillespie et al., 2020). Thus, total alignment of responses across platforms is not feasible. However, previous research has shown that even if some platforms act on misinformation, it can continue to spread on other platforms (Sanderson et al., 2021). Additionally, conspiracy theorists might be migrating to alternative platforms (e.g., Parler, Twitch) as a result of mainstream platforms (e.g., Facebook, YouTube) cracking down on misinformation (Browning, 2021). Therefore, some degree of synergy in platform responses might be necessary to address COVID-19 misinformation in the broader social media ecosystem. Public–private co-regulation models are considered well suited to achieve this (Gillespie, 2018; Gorwa, 2019). Platforms could collaborate for mutual benefit and have taken steps in that direction (Shu & Shieber, 2020). Public policies could outline general standards that apply to all SMPs.

While the policies and remedies identified by this analysis represent an important first step, enforcement is vital for them to have an impact. Some platforms, such as Facebook, Instagram, YouTube, and Twitter, release some enforcement metrics in their transparency reports, such as the number of posts taken down or accounts disabled. However, without a meaningful denominator, it is impossible to determine the extent to which these policies are being enforced. External analyses indicate poor enforcement of misinformation policies by SMPs. For instance, one analysis found that a large proportion of content rated false by fact-checkers remained up on Twitter (59%), and a substantial amount also remained up on YouTube (27%) and Facebook (24%) (Brennen et al., 2020). A number of analyses have examined enforcement of misinformation policies by Facebook and identified several gaps. One report found that only 16% of all health information analyzed on Facebook had a warning label (Avaaz, 2020a). Even when Facebook took action, it was slow in doing so, taking up to 22 days to downgrade or attach warning labels to misleading content (Avaaz, 2020b). There are also significant language gaps in enforcement of policies. One analysis found that approximately 70% of misleading COVID-19 content in Spanish and Italian (vs. 29% of misleading content in English) had not received warning labels on Facebook (Avaaz, 2020b). Enforcement also varies by type of content. Although Facebook’s vaccine misinformation policy applies to all content on the platform, warning labels are not applied to content in the comments section. A case study found that misinformation is rife in the Facebook comments section, with roughly one in five comments found to contain misinformation about the vaccines or the pandemic (Chan et al., 2021).
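The denominator problem can be made concrete with a small calculation. The Python sketch below defines a hypothetical helper, `enforcement_rate`, and uses invented counts (not drawn from any platform's transparency report) to show how the same number of removals can imply very different levels of enforcement depending on how much violating content exists in total.

```python
def enforcement_rate(actioned: int, violating: int) -> float:
    """Share of policy-violating items that received any action (hypothetical helper)."""
    if violating <= 0:
        raise ValueError("violating must be a positive count")
    return actioned / violating


# Hypothetical counts for illustration only; not taken from any transparency report.
removed = 10_000
for total_violating in (12_500, 200_000):
    rate = enforcement_rate(removed, total_violating)
    print(f"{removed} removals out of {total_violating} violating posts -> {rate:.1%}")
```

With 10,000 removals, the implied enforcement rate is 80% if 12,500 posts violated policy but only 5% if 200,000 did; a report that publishes only the numerator cannot distinguish these two situations.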

Additionally, recent revelations in the Facebook Papers, such as the special treatment Facebook gave high-profile users when it came to content moderation (Allyn, 2021), undermine the credibility of any enforcement claims made by SMPs. There are growing calls for platforms to make their responses more transparent and data more easily available to researchers (Doctors for America, 2021; MacCarthy, 2020; Office of the Surgeon General, 2021). Such independent monitoring of enforcement of policies and remedies, and evaluation of specific remedies (e.g., labeling content) and their systems of application (e.g., strike system) identified by this analysis, could hold SMPs accountable. By laying out COVID-19 misinformation responses and remedies used by a wide range of SMPs, this work can also serve as a starting point to help policymakers identify a general set of policies that might be applicable to all platforms, as well as those that might be applicable to similar types of platforms (e.g., messaging platforms).

Evidence

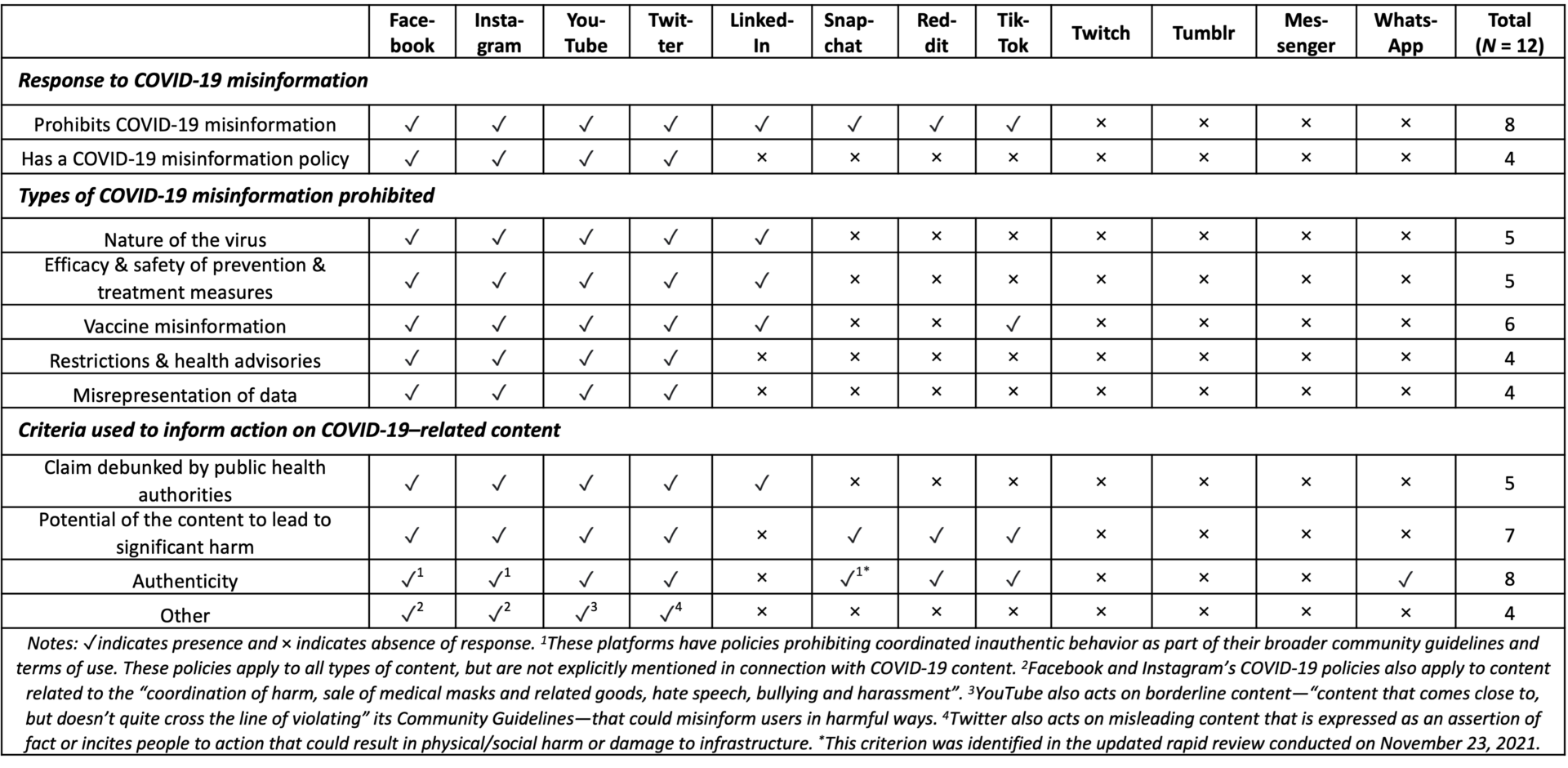

Table 1 summarizes SMP responses to COVID-19 misinformation (see Appendix Table 1 for examples). The majority of SMPs explicitly prohibited COVID-19 misinformation (N = 8), but only four (Facebook, Instagram, YouTube, and Twitter) had an independent COVID-19 misinformation policy. Twitch, Tumblr, Messenger, and WhatsApp did not prohibit COVID-19 misinformation. Half the SMPs delineated one or more types of COVID-19 content that they prohibited (Table 1, Appendix Table 2). Facebook, Instagram, YouTube, and Twitter all prohibited misinformation regarding the nature of the virus, the efficacy and safety of prevention and treatment measures, COVID-19 vaccines, and restrictions and health advisories, as well as content that misrepresented data. LinkedIn prohibited content that fell under the first three of the above categories. TikTok did not publish a detailed list of types of COVID-19 misinformation it prohibited, but it stated that COVID-19 vaccine misinformation was prohibited. Snapchat and Reddit did not articulate the specific types of COVID-19 content they prohibited.

Most platforms (N = 9) listed at least one criterion they used to guide action on COVID-19–related content (Table 1, Appendix Table 3). A majority of SMPs indicated that they would take action on content that had the potential to cause significant harm (N = 7) or was inauthentic (N = 8). Five SMPs also acted on COVID-19 content if it contained claims that had been debunked by public health authorities. Some platforms outlined other criteria. For instance, Facebook and Instagram prohibited content that was considered to be hate speech or harassment, YouTube also acted on borderline content (i.e., content that came close to violating its misinformation policy but did not actually violate it), and Twitter took action against misleading COVID-19 content that could cause social unrest or was expressed as an assertion of fact.

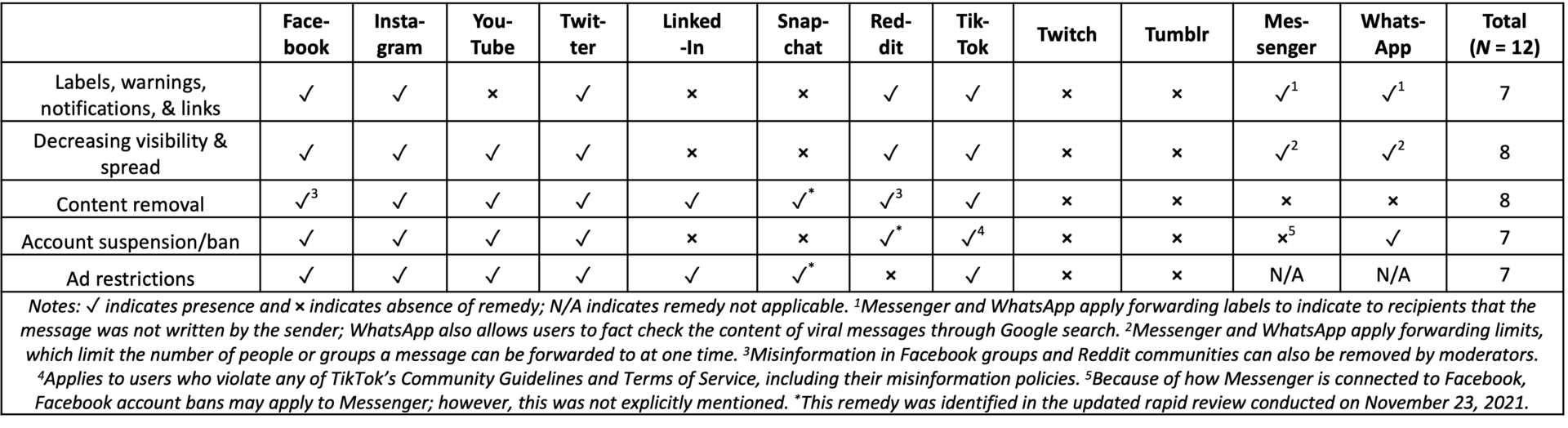

The majority of SMPs developed one or more remedies to address misinformation (N = 10) and proactively connect users with credible information (N = 10). Table 2 summarizes the former type of remedies (see Appendix Table 4 for examples). Seven SMPs used labels, warnings, notifications, and links. A different group of seven SMPs imposed restrictions on advertisements that had the potential to spread misinformation or cause harm. Eight SMPs modified their search and recommendation algorithms or disabled “like,” “share,” and forwarding features to reduce the visibility and distribution of misleading content. However, platforms differed slightly in how they used this remedy. For instance, Facebook and Instagram stated that they would reduce the distribution of content from users that had repeatedly shared misleading content or previously violated their policies, while YouTube also applied this remedy to borderline content. Eight SMPs removed some types of content, and seven SMPs temporarily suspended or permanently banned accounts or groups.

Most SMPs did not clearly describe the manner in which they implemented these wide-ranging remedies, except for YouTube and Twitter, which used a strike system. Under this system, users accrued strikes for violations. For instance, YouTube issued first-time violators a warning without a strike. However, if a user had previously violated the COVID-19 content policy, each subsequent violation resulted in a strike, with three strikes leading to channel termination. On Twitter, content that was labeled received one strike, while content that was deleted received two strikes. For the first strike, no account-level action was taken. For subsequent strikes, account-level actions escalated with the cumulative strike count: a 12-hour account lock (two or three strikes), a seven-day account lock (four strikes), and permanent suspension (five or more strikes). Reddit did not have a formal strike system but implied that it also took action in a graduated manner by stating that “our goal is always to start with education and cooperation and only escalate to quarantine or ban if necessary.” Similarly, Facebook stated that profiles “that repeatedly post misinformation related to COVID-19, vaccines, and health may face restrictions, including (but not limited to) reduced distribution, removal from recommendations, or removal from our site,” but it was unclear on what basis these different remedies were applied.
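The escalation rules summarized above can be expressed as a small state machine. The Python sketch below is our own illustration of those rules as described in this note, not either platform's actual implementation; the class and function names are hypothetical, and the sketch ignores appeals and any time-based expiry of strikes.

```python
from dataclasses import dataclass
from enum import Enum


class Remedy(Enum):
    LABEL = "label"      # Twitter: labeled content counts as one strike
    REMOVAL = "removal"  # Twitter: deleted content counts as two strikes


def twitter_action(total_strikes: int) -> str:
    """Account-level action for a cumulative strike count, per the rules described above."""
    if total_strikes <= 1:
        return "no account-level action"
    if total_strikes <= 3:
        return "12-hour account lock"
    if total_strikes == 4:
        return "7-day account lock"
    return "permanent suspension"


@dataclass
class TwitterAccount:
    strikes: int = 0

    def record_violation(self, remedy: Remedy) -> str:
        self.strikes += 2 if remedy is Remedy.REMOVAL else 1
        return twitter_action(self.strikes)


@dataclass
class YouTubeChannel:
    warned: bool = False
    strikes: int = 0

    def record_violation(self) -> str:
        if not self.warned:
            # First violation of the COVID-19 policy: a warning, no strike.
            self.warned = True
            return "warning (no strike)"
        self.strikes += 1
        return "channel termination" if self.strikes >= 3 else f"strike {self.strikes}"


# Usage: escalating responses to a sequence of violations.
account = TwitterAccount()
print([account.record_violation(r) for r in (Remedy.LABEL, Remedy.REMOVAL, Remedy.LABEL, Remedy.LABEL)])
channel = YouTubeChannel()
print([channel.record_violation() for _ in range(4)])
```

The Twitter account in the usage lines moves from no action (one strike) to a 12-hour lock (three strikes), a seven-day lock (four), and permanent suspension (five), while the YouTube channel receives a warning, two strikes, and then termination, mirroring the graduated, responsive-regulation logic discussed in the Implications section.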

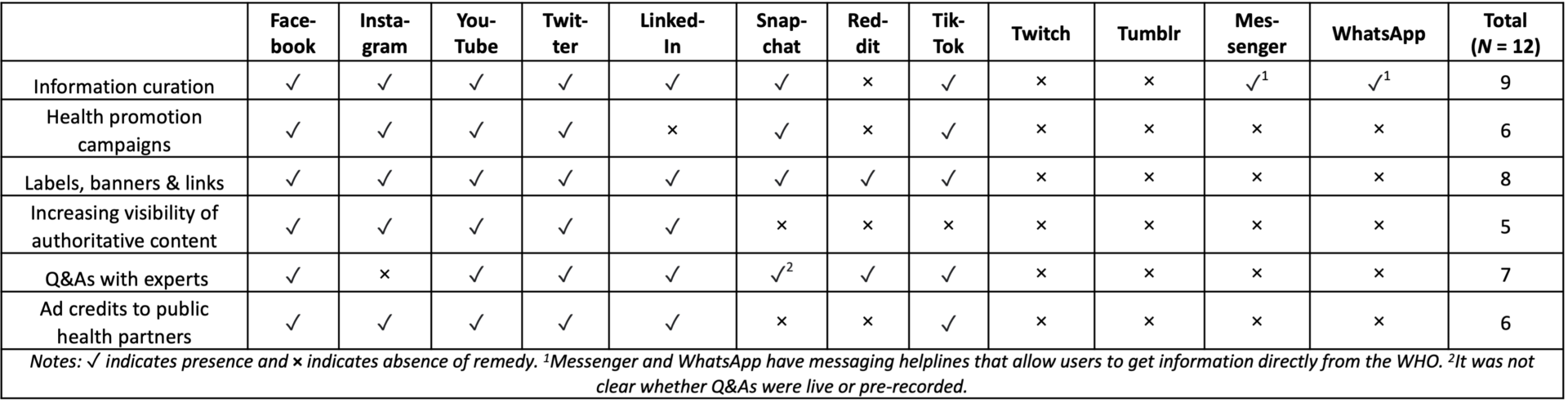

Actions to proactively connect users with credible information are summarized in Table 3 (see Appendix Table 5 for examples). Common measures included information curation (N = 9); labels, banners and links (N = 8); and Q&As with experts (N = 7). Six SMPs used health promotion campaigns to increase awareness and promote appropriate practices advised by health authorities, such as mask-wearing, handwashing, and social distancing. The nature of campaigns tended to vary by platform design. For instance, platforms that allowed users to post temporary stories, such as Instagram and Snapchat, launched stickers and filters to help people share recommended COVID-19 prevention practices and encourage vaccination. Video-based platforms, such as YouTube, launched video campaigns in collaboration with external public health partners to reach underserved populations. Meanwhile, Twitter promoted hashtags, such as #vaccinated and #WearAMask, and TikTok used hashtags to promote challenges, such as the #safehandschallenge. Facebook, Instagram, YouTube, Twitter, and LinkedIn described adjustments to their algorithms to increase visibility of authoritative content in searches and recommendations. These five platforms and TikTok also provided free advertising credits to public health partners for disseminating COVID-19 information.

Because of end-to-end encryption and the way information is shared on messaging platforms, these platforms used different strategies to deal with misinformation than traditional social media platforms did. While Messenger and WhatsApp did not explicitly state that they prohibited COVID-19 misinformation, they implemented some actions to limit its spread. These included attaching forwarding labels to messages that did not originate with the sender and introducing forwarding limits to reduce the spread of viral messages. Additionally, both platforms collaborated with the WHO to provide users with accurate and timely information about COVID-19 via free messaging. WhatsApp also collaborated with external fact-checking organizations and used advanced machine learning approaches to identify and ban accounts engaged in mass messaging. It was unclear whether Facebook account bans also applied to Messenger.
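Forwarding labels and limits act on how a message has travelled rather than on what it says. The Python sketch below illustrates the idea under loudly stated assumptions: the thresholds (`HIGHLY_FORWARDED_HOPS`, `MAX_CHATS_PER_FORWARD`, `MAX_CHATS_HIGHLY_FORWARDED`) and the label wording are hypothetical placeholders, since this note does not report the platforms' actual values or designs. The sketch inspects only a forward counter and never the message text.

```python
from dataclasses import dataclass, replace
from typing import List, Optional

# Hypothetical parameters: this note does not report the platforms' real thresholds.
HIGHLY_FORWARDED_HOPS = 5       # forward count at which a message is treated as viral
MAX_CHATS_PER_FORWARD = 5       # chats an ordinary message may be forwarded to at once
MAX_CHATS_HIGHLY_FORWARDED = 1  # chats a viral message may be forwarded to at once


@dataclass(frozen=True)
class Message:
    text: str
    forward_count: int = 0  # 0 means the message originated with the sender


def forwarding_label(msg: Message) -> Optional[str]:
    """Hypothetical label shown when a message did not originate with the sender."""
    if msg.forward_count >= HIGHLY_FORWARDED_HOPS:
        return "Forwarded many times"
    if msg.forward_count > 0:
        return "Forwarded"
    return None


def forward(msg: Message, chats: List[str]) -> List[Message]:
    """Forward msg to each chat, applying a stricter limit to highly forwarded messages."""
    viral = msg.forward_count >= HIGHLY_FORWARDED_HOPS
    limit = MAX_CHATS_HIGHLY_FORWARDED if viral else MAX_CHATS_PER_FORWARD
    if len(chats) > limit:
        raise ValueError(f"this message can be forwarded to at most {limit} chat(s) at a time")
    return [replace(msg, forward_count=msg.forward_count + 1) for _ in chats]


# Usage: a message originating with the sender carries no label; forwarded copies do.
original = Message("Stay home if you feel unwell")
copies = forward(original, ["family", "work"])
print(forwarding_label(original), forwarding_label(copies[0]))
```

Under these assumptions, a message that has already been forwarded many times can still circulate, but only one chat at a time, which slows viral spread without the platform reading the content.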

Given the evolving SMP policy environment, we undertook a rapid review of community guidelines, news releases, and blog posts for these 12 SMPs on November 23, 2021, to determine whether there were any changes to their responses to COVID-19 misinformation since our initial analysis. While the majority of SMPs did not have major updates to their responses, a few platforms had some noteworthy changes. Facebook had begun to reduce the distribution of misleading vaccine-related content that did not violate its policies but had the potential to discourage vaccinations. Facebook also stated that it would target certain remedies at misinformation superspreaders. These primarily included soft remedies, such as providing a label or warning when users liked a page found to repeatedly share misinformation, decreasing visibility of posts in the News Feed from individual Facebook accounts repeatedly spreading misinformation, and notifying users that their posts might be demoted in the News Feed if they repeatedly shared misinformation. Facebook also applied some hard remedies, such as removal of some Facebook pages and groups and Instagram accounts linked to 12 high-volume superspreaders identified in an external report (Center for Countering Digital Hate, 2021), despite questioning the findings of the report.

Other platforms that have updated or provided more transparency about their responses to addressing COVID-19 misinformation since our initial analysis are YouTube, Snapchat, Twitter, and Reddit. YouTube extended its ban on COVID-19 vaccine misinformation to misinformation about all vaccines that are approved and considered safe and effective by the WHO and other health authorities. In August 2021, Snapchat released more information regarding its approach to handling misinformation, which differs from other platforms’ approaches in a few ways. For example, Snapchat’s newsfeed is proactively moderated; group chats are not recommended by algorithms; and, rather than applying soft remedies, all content that violates guidelines is removed. Twitter announced that it was testing a new feature that allowed users to report content as misleading. Additionally, Twitter began collaborating with the Associated Press and Reuters to provide more context to conversations and improve information curation. Reddit reversed its decision to not act on misinformation by banning a subreddit. However, the subreddit was banned on the grounds of harassment rather than misinformation, and it remains to be seen whether Reddit takes further steps to address misinformation.

Methods

Data

We conducted a content analysis of documents from 10 leading social media platforms and two messaging platforms in the United States (U.S.). There is no single database that monitors the volume of users across all SMPs, making it difficult to rank platforms in terms of popularity. However, a recent nationally representative survey assessed prevalence of use of 10 social media platforms (Facebook, YouTube, Twitter, Instagram, Reddit, Snapchat, LinkedIn, TikTok, Tumblr, and Twitch) and one messaging platform (WhatsApp) (Shearer & Mitchell, 2021). Prevalence of use ranged from 74% for YouTube to 4% for Tumblr (Shearer & Mitchell, 2021). This group of SMPs³ was chosen because prevalence data were available.

³ We analyzed Messenger independently from Facebook, as Messenger is a messaging platform, and approaches to addressing misinformation by messaging platforms will likely differ from approaches used by social media platforms. Hence, our sample consisted of 12 SMPs.

Data sources included: (i) terms of use and community guidelines and (ii) blog posts and news releases published on the official website of each SMP between February 1, 2020, and April 1, 2021. All SMP websites provided a listing of blog posts and news releases in reverse chronological order. All blog posts and news releases published within this time frame were reviewed, and those that were relevant to addressing COVID-19 misinformation or misinformation in general were retained for further analysis. To be included, documents had to contain descriptions of any of the following: (1) the platform’s response to COVID-19 misinformation or misinformation generally, (2) types of COVID-19 misinformation it prohibited and criteria it used to make this determination, or (3) remedies developed to address misinformation or proactively connect users to credible information. We included responses and remedies that were explicitly linked to addressing COVID-19 misinformation as well as those intended to address misinformation broadly, under the assumption that broader misinformation responses and remedies also applied to COVID-19 misinformation. One analyst conducted a rapid review using the same data sources for all platforms on November 23, 2021, to determine whether any SMPs had updated their responses to COVID-19 misinformation.

Development of the codebook

As there are no well-established frameworks for misinformation classification and intervention in the digital environment, a codebook was developed using an inductive approach. One analyst reviewed documents from a single platform (Twitter) and developed an initial codebook.

Description of codes

- Response to COVID-19 misinformation: Codes under this category included whether the platform prohibited COVID-19 misinformation and whether it had a COVID-19 misinformation policy.

- Types of COVID-19 misinformation prohibited: included false or misleading content about (i) the nature of the virus (including the existence, origin, causes, diagnosis, and transmission of COVID-19), (ii) the efficacy and safety of prevention and treatment measures (e.g., hydroxychloroquine), (iii) COVID-19 vaccines, (iv) restrictions and health advisories (including established mitigation measures such as masks, social distancing and handwashing), and (v) misrepresentation of data (e.g., prevalence of COVID-19 in an area or availability of resources such as hospital beds).

- Criteria used to inform action on COVID-19 related content: included (i) debunking of claims by public health authorities, (ii) potential of the content to lead to significant harm, (iii) authenticity of content (e.g., platform manipulation, fake accounts, or deep fakes), and (iv) other.

- Actions to address misinformation: included (i) labels, warnings, and notifications to inform users that the content they are viewing or sharing is misleading, and links to credible organizations such as the World Health Organization (WHO); (ii) decreasing visibility and spread of misleading content by lowering such content in searches and newsfeeds, and by restricting a user’s ability to engage with or share such content; (iii) content removal; (iv) temporary account bans or permanent account suspensions; and (v) advertising restrictions to limit the promotion of unverified cures or products with unverified claims regarding their prevention and treatment efficacy.

- Actions to promote access to credible information: included (i) information curation, whereby SMPs curate and compile credible information that is easily accessible to users (e.g., a resource center or newsletter); (ii) health promotion and communication campaigns to promote evidence-based measures to contain the pandemic, such as vaccination, social distancing, and mask-wearing; (iii) labels, banners and links to credible organizations, such as WHO or curated information hubs within the platform proactively provided to users; (iv) increasing visibility of authoritative content by elevating such content in searches and newsfeeds; (v) Q&As with public health experts through chats and live streams; and (vi) advertising credits to government and public health organizations to disseminate COVID-19 information.
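The category “Types of COVID-19 misinformation prohibited” can be stored as a binary platform-by-code matrix. In the Python sketch below, the short code names are our own labels, and the presence values are transcribed from the descriptions in the Evidence section; summing across platforms reproduces the counts reported there (for example, six SMPs, or half the sample, delineated at least one prohibited type).

```python
from typing import Dict, FrozenSet

# Short labels (our own) for the five "types of COVID-19 misinformation prohibited" codes.
TYPE_CODES = (
    "nature_of_virus",
    "prevention_treatment",
    "vaccines",
    "restrictions_advisories",
    "data_misrepresentation",
)
ALL_TYPES: FrozenSet[str] = frozenset(TYPE_CODES)
NO_TYPES: FrozenSet[str] = frozenset()

# Codes marked present for each SMP, transcribed from the Evidence section.
coded: Dict[str, FrozenSet[str]] = {
    "Facebook": ALL_TYPES,
    "Instagram": ALL_TYPES,
    "YouTube": ALL_TYPES,
    "Twitter": ALL_TYPES,
    "LinkedIn": frozenset({"nature_of_virus", "prevention_treatment", "vaccines"}),
    "TikTok": frozenset({"vaccines"}),
    # Snapchat and Reddit prohibited COVID-19 misinformation without listing types;
    # Tumblr, Twitch, Messenger, and WhatsApp did not prohibit it.
    "Snapchat": NO_TYPES,
    "Reddit": NO_TYPES,
    "Tumblr": NO_TYPES,
    "Twitch": NO_TYPES,
    "Messenger": NO_TYPES,
    "WhatsApp": NO_TYPES,
}


def tally(matrix: Dict[str, FrozenSet[str]]) -> Dict[str, int]:
    """Number of platforms for which each code is marked present."""
    return {code: sum(code in present for present in matrix.values()) for code in TYPE_CODES}


counts = tally(coded)
delineated_any = sum(1 for present in coded.values() if present)
print(counts)
print(f"SMPs delineating at least one type: {delineated_any} of {len(coded)}")
```

Keeping the codes as sets per platform makes the “present if described at least once” rule explicit: a code is either in a platform's set or it is not, and every reported N is a column sum.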

Coding and analysis

For each SMP, each of the codes described above was marked as present if it was described in their documents at least once, and absent if it was not mentioned. As we were interested in identifying all types of responses used by SMPs to address COVID-19 misinformation, we coded a policy or remedy as present if it was in effect at any point during our time frame of interest (February 1, 2020, to April 1, 2021), even if it was subsequently removed. It should be noted that Instagram, Messenger, and WhatsApp are owned by Facebook, and while some Facebook policies apply to all these platforms, it is not clear which do. Therefore, we treated all these platforms as separate entities. When coding for Instagram, Messenger, and WhatsApp, we applied text segments from Facebook’s documents to these platforms only if the text explicitly referenced the platforms. Coding and analysis occurred in an iterative fashion. Two analysts independently coded documents from six SMPs using the initial version of the codebook, met to discuss their findings, and modified the codebook. Both analysts then independently coded documents from all 12 SMPs using the modified codebook. Cohen’s kappa was calculated to assess intercoder reliability for coding pertaining to each table and for all tables combined, with raters achieving high agreement (overall κ: 0.94, 95% CI: 0.90–0.99; Table 1 κ: 0.95, 95% CI: 0.89–1.00⁴; Table 2 κ: 0.96, 95% CI: 0.88–1.00; Table 3 κ: 0.93, 95% CI: 0.83–1.00). The analysts met again to discuss their coding, made minor changes to the codebook, and resolved all disagreements through discussion. The presence of each code was summed across platforms to facilitate comparisons.

⁴ For Table 1, the code labeled “other” was not included in the calculation of κ, as this code was added to the codebook and defined through discussion between the two analysts after the second round of coding.
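Cohen's kappa compares the observed agreement between two coders, p_o, with the agreement expected by chance from each coder's marginal frequencies, p_e, as κ = (p_o − p_e)/(1 − p_e). The Python sketch below computes κ for two sequences of present/absent codes (one entry per platform-code cell) along with a 95% confidence interval. This note does not state how its intervals were computed; the large-sample standard error used here, SE = sqrt(p_o(1 − p_o) / (n(1 − p_e)²)), is one common approximation and is an assumption, as is truncating the interval to [−1, 1].

```python
import math
from collections import Counter
from typing import Sequence, Tuple


def cohens_kappa(a: Sequence[int], b: Sequence[int], z: float = 1.96) -> Tuple[float, float, float]:
    """Return (kappa, lower, upper) for two coders' ratings of the same items.

    The interval uses a simple large-sample standard error (an assumption) and is
    truncated to the valid range [-1, 1].
    """
    if len(a) != len(b) or not a:
        raise ValueError("need two equal-length, non-empty rating sequences")
    n = len(a)
    # Observed agreement: share of items both coders rated identically.
    p_o = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement: product of the coders' marginal proportions, summed over categories.
    count_a, count_b = Counter(a), Counter(b)
    categories = set(count_a) | set(count_b)
    p_e = sum((count_a[c] / n) * (count_b[c] / n) for c in categories)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    kappa = (p_o - p_e) / (1 - p_e)
    se = math.sqrt(p_o * (1 - p_o) / (n * (1 - p_e) ** 2))
    return kappa, max(-1.0, kappa - z * se), min(1.0, kappa + z * se)


# Usage with made-up ratings (1 = code present, 0 = absent) for eight cells.
coder_1 = [1, 1, 1, 0, 0, 0, 1, 0]
coder_2 = [1, 1, 0, 0, 0, 0, 1, 0]
kappa, low, high = cohens_kappa(coder_1, coder_2)
print(f"kappa = {kappa:.2f}, 95% CI: {low:.2f}-{high:.2f}")
```

For the made-up ratings, observed agreement is 7/8 and chance agreement is 0.5, giving κ = 0.75; with only eight cells the interval is wide, which is why pooling cells per table (and across all tables) narrows the reported intervals.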

Limitations

A few limitations must be noted. We did not analyze news articles, which might have captured additional SMP responses and remedies not noted on their websites. However, media coverage could be uneven and biased towards bigger platforms, such as Facebook and Twitter. We, therefore, decided to limit our analysis to documents published by SMPs. Some of these policies were dynamic and changed over the course of the pandemic, but our analysis was not designed to capture such changes over time. Lack of clarity from Facebook regarding the relationship between Facebook’s Community Standards and Community Guidelines and its other platforms, such as Instagram, may have resulted in miscoding certain responses and remedies as absent when they were actually present on these platforms. It is possible that our search strategy could have missed certain documents, which could also have resulted in miscoding certain responses or remedies as absent when they might have been present. Finally, the descriptive nature of this analysis precludes us from making any conclusions about the effectiveness or implementation of policies for any platform, but these findings can inform future work on these topics.

Bibliography

Allyn, B. (2021, October 21). Oversight board slams Facebook for giving special treatment to high-profile users. NPR. https://www.npr.org/2021/10/21/1047928585/oversight-board-slams-facebook-for-giving-special-treatment-to-vip-users

Avaaz. (2020a). Facebook’s algorithm: A major threat to public health. https://secure.avaaz.org/campaign/en/facebook_threat_health

Avaaz. (2020b). How Facebook can flatten the curve of the coronavirus infodemic. https://secure.avaaz.org/campaign/en/facebook_coronavirus_misinformation

Ayres, I., & Braithwaite, J. (1992). Responsive regulation: Transcending the deregulation debate. Oxford University Press.

Baker, S. A., Wade, M., & Walsh, M. J. (2020). The challenges of responding to misinformation during a pandemic: Content moderation and the limitations of the concept of harm. Media International Australia, 177(1), 103–107. https://doi.org/10.1177/1329878X20951301

Bickert, M. (2021, August 18). How we’re taking action against vaccine misinformation superspreaders. https://about.fb.com/news/2021/08/taking-action-against-vaccine-misinformation-superspreaders

Brennen, J. S., Simon, F. M., Howard, P. N., & Nielsen, R. K. (2020). Types, sources, and claims of COVID-19 misinformation. Reuters Institute. https://reutersinstitute.politics.ox.ac.uk/types-sources-and-claims-covid-19-misinformation

Browning, K. (2021, April 27). Extremists find a financial lifeline on Twitch. The New York Times. https://www.nytimes.com/2021/04/27/technology/twitch-livestream-extremists.html

Center for Countering Digital Hate. (2021, March 24). The disinformation dozen. https://www.counterhate.com/disinformationdozen

Chan, E., Beaman, L., & Zhang, S. (2021, May 6). Vaccine misinformation in Facebook comment sections: A case study. First Draft. https://firstdraftnews.org/articles/vaccine-misinformation-in-facebook-comment-sections-a-case-study

Cinelli, M., Quattrociocchi, W., Galeazzi, A., Valensise, C. M., Brugnoli, E., Schmidt, A. L., Zola, P., Zollo, F., & Scala, A. (2020). The COVID-19 social media infodemic. Scientific Reports, 10(1), 16598. https://doi.org/10.1038/s41598-020-73510-5

Conger, K. (2019, November 2). Twitter stands by Trump amid calls to terminate his account. The New York Times. https://www.nytimes.com/2019/10/15/technology/trump-twitter-account.html

DataReportal. (2021). Global social media stats. Retrieved August 3, 2021, from https://datareportal.com/social-media-users

Disinformation nation: Social media’s role in promoting extremism and misinformation: Hearing before the U.S. House Committee on Energy and Commerce, 117th Cong. (2021). https://www.congress.gov/event/117th-congress/house-event/111407

Doctors for America. (2021, November 23). Letter to Facebook: Disclose your data now. https://doctorsforamerica.org/letter-to-facebook-disclose-your-data-now

Donovan, J. (2020, April 14). Social-media companies must flatten the curve of misinformation. Nature. https://doi.org/10.1038/d41586-020-01107-z

Dwoskin, E. (2021, March 14). Massive Facebook study on users’ doubt in vaccines finds a small group appears to play a big role in pushing the skepticism. The Washington Post. https://www.washingtonpost.com/technology/2021/03/14/facebook-vaccine-hesistancy-qanon

Gillespie, T. (2018). Custodians of the internet: Platforms, content moderation, and the hidden decisions that shape social media. Yale University Press.

Gillespie, T., Aufderheide, P., Carmi, E., Gerrard, Y., Gorwa, R., Matamoros-Fernández, A., Roberts, S., Sinnreich, A., & West, S. M. (2020). Expanding the debate about content moderation: Scholarly research agendas for the coming policy debates. Internet Policy Review, 9(4). https://doi.org/10.14763/2020.4.1512

Goldman, E. (2021). Content moderation remedies. SSRN. https://doi.org/10.2139/ssrn.3810580

Gorwa, R. (2019, October 28). Regulating them softly. In Models for platform governance (pp. 39–43). Centre for International Governance Innovation. https://www.cigionline.org/models-platform-governance/

Grimmelmann, J. (2015). The virtues of moderation. Yale Journal of Law and Technology, 17(1), 42–108. https://digitalcommons.law.yale.edu/cgi/viewcontent.cgi?article=1110&context=yjolt

Islam, M. S., Mostofa Kamal, A.-H., Kabir, A., Southern, D. L., Khan, S. H., Hasan, S. M. M., Sarkar, T., Sharmin, S., Das, S., Roy, T., Harun, M. G. D., Chughtai, A. A., Homaira, N., & Seale, H. (2021). COVID-19 vaccine rumors and conspiracy theories: The need for cognitive inoculation against misinformation to improve vaccine adherence. PLoS ONE, 16(5), e0251605. https://doi.org/10.1371/journal.pone.0251605

Islam, M. S., Sarkar, T., Khan, S. H., Mostofa Kamal, A.-H., Hasan, S. M. M., Kabir, A., Yeasmin, D., Islam, M. A., Amin Chowdhury, K. I., Anwar, K. S., Chughtai, A. A., & Seale, H. (2020). COVID-19–related infodemic and its impact on public health: A global social media analysis. The American Journal of Tropical Medicine and Hygiene, 103(4), 1621–1629. https://doi.org/10.4269/ajtmh.20-0812

Kang, C., & Isaac, M. (2019, October 21). Defiant Zuckerberg says Facebook won’t police political speech. The New York Times. https://www.nytimes.com/2019/10/17/business/zuckerberg-facebook-free-speech.html

Kouzy, R., Abi Jaoude, J., Kraitem, A., El Alam, M. B., Karam, B., Adib, E., Zarka, J., Traboulsi, C., Akl, E. W., & Baddour, K. (2020). Coronavirus goes viral: Quantifying the COVID-19 misinformation epidemic on Twitter. Cureus, 12(3), e7255. https://doi.org/10.7759/cureus.7255

Krause, P. R., Fleming, T. R., Peto, R., Longini, I. M., Figueroa, J. P., Sterne, J. A. C., Cravioto, A., Rees, H., Higgins, J. P. T., Boutron, I., Pan, H., Gruber, M. F., Arora, N., Kazi, F., Gaspar, R., Swaminathan, S., Ryan, M. J., & Henao-Restrepo, A.-M. (2021). Considerations in boosting COVID-19 vaccine immune responses. The Lancet, 398(10308), 1377–1380. https://doi.org/10.1016/S0140-6736(21)02046-8

Loomba, S., de Figueiredo, A., Piatek, S. J., de Graaf, K., & Larson, H. J. (2021). Measuring the impact of COVID-19 vaccine misinformation on vaccination intent in the UK and USA. Nature Human Behaviour, 5(3), 337–348. https://doi.org/10.1038/s41562-021-01056-1

MacCarthy, M. (2020). Transparency requirements for digital social media platforms: Recommendations for policy makers and industry. SSRN. https://doi.org/10.2139/ssrn.3615726

Naeem, S. B., Bhatti, R., & Khan, A. (2021). An exploration of how fake news is taking over social media and putting public health at risk. Health Information and Libraries Journal, 38(2), 143–149. https://doi.org/10.1111/hir.12320

Nielsen, R. K., Fletcher, R., Newman, N., Brennen, J. S., & Howard, P. N. (2020, April 15). Navigating the ‘infodemic’: How people in six countries access and rate news and information about coronavirus. Reuters Institute. https://reutersinstitute.politics.ox.ac.uk/infodemic-how-people-six-countries-access-and-rate-news-and-information-about-coronavirus

Nunziato, D. C. (2020). Misinformation mayhem: Social media platforms’ efforts to combat medical and political misinformation. First Amendment Law Review, 19, 32. https://scholarship.law.gwu.edu/faculty_publications/1502/

Office of the Surgeon General. (2021). Confronting health misinformation: The U.S. Surgeon General’s advisory on building a healthy information environment. U.S. Department of Health and Human Services. https://www.surgeongeneral.gov/healthmisinformation

Sanderson, Z., Brown, M. A., Bonneau, R., Nagler, J., & Tucker, J. A. (2021). Twitter flagged Donald Trump’s tweets with election misinformation: They continued to spread both on and off the platform. Harvard Kennedy School (HKS) Misinformation Review, 2(4). https://doi.org/10.37016/mr-2020-77

Seering, J., Kraut, R., & Dabbish, L. (2017). Shaping pro and anti-social behavior on Twitch through moderation and example-setting. In Proceedings of the 2017 ACM Conference on Computer Supported Cooperative Work and Social Computing (pp. 111–125). https://dl.acm.org/doi/10.1145/2998181.2998277

Shearer, E., & Mitchell, A. (2021, January 12). News use across social media platforms in 2020. Pew Research Center. https://www.journalism.org/2021/01/12/news-use-across-social-media-platforms-in-2020/

Shu, C., & Shieber, J. (2020, March 16). Facebook, Reddit, Google, LinkedIn, Microsoft, Twitter and YouTube issue joint statement on misinformation. TechCrunch. https://techcrunch.com/2020/03/16/facebook-reddit-google-linkedin-microsoft-twitter-and-youtube-issue-joint-statement-on-misinformation/

World Health Organization. (2020). Transmission of SARS-CoV-2: Implications for infection prevention precautions. https://www.who.int/news-room/commentaries/detail/transmission-of-sars-cov-2-implications-for-infection-prevention-precautions

World Health Organization. (2021). Infodemic. https://www.who.int/health-topics/infodemic

Funding

This research is supported by the John S. and James L. Knight Foundation through a grant to the Institute for Data, Democracy & Politics at The George Washington University.

Competing Interests

Rebekah Tromble has received funding from Facebook and Twitter in support of her research. All other authors have no competing interests.

Ethics

As this study consisted of analysis of publicly available documents and did not meet the definition of human subjects research, IRB approval was not required.

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

All materials needed to replicate this study are available via the Harvard Dataverse: https://doi.org/10.7910/DVN/GCOJDX