Peer Reviewed

Not just conspiracy theories: Vaccine opponents and proponents add to the COVID-19 ‘infodemic’ on Twitter

In February 2020, the World Health Organization warned of an ‘infodemic’ (a deluge of both accurate and inaccurate health information) accompanying the COVID-19 pandemic, identifying it as a major challenge to effective health communication. We assessed content from the most active vaccine accounts on Twitter to understand how existing online communities contributed to the ‘infodemic’ during the early stages of the pandemic. While we expected vaccine opponents to share misleading information about COVID-19, we also found that vaccine proponents were not immune to spreading less reliable claims. In both groups, the single largest topic of discussion consisted of narratives comparing COVID-19 to other diseases, such as seasonal influenza, that often downplayed the severity of the novel coronavirus. When considering the scope of the ‘infodemic,’ researchers and health communicators must move beyond known bad actors and the most egregious types of misinformation to scrutinize the full spectrum of information, from both reliable and unreliable sources, that the public is likely to encounter online.

Research Questions

- How did existing online communities of vaccine opponents and proponents respond to early news of the novel coronavirus?

- What were the dominant topics of conversation related to COVID-19? What types of misleading or false information were being shared?

- Which types of accounts shared misleading or false content? How do these topics vary between and within vaccine opponent and vaccine proponent Twitter communities?

- What do these findings mean for efforts to limit online health misinformation?

Essay Summary

- We identified the 2,000 Twitter accounts most active in the 2019 vaccine discourse, including both vaccine opponents and proponents. Only 17% of this sample appeared to be bots. In addition to tweeting about vaccines, vaccine opponents also tweeted about conservative politics and conspiracy theories. Vaccine proponents tended to be doctors, researchers, or health organizations, but also included non-medical accounts.

- On February 20, we collected the most recent tweets from each account and automatically extracted 35 distinct topics of conversation related to COVID-19 from roughly 80,000 tweets. Topics were categorized as more reliable (public health updates and news), less reliable (discussion), or unreliable (misinformation). Misinformation included conspiracy theories, unverifiable rumors, and scams promoting untested prevention methods and cures.

- Vaccine opponents shared the greatest proportion (35.4%) of unreliable information topics including a mix of conspiracy theories, rumors, and scams. Vaccine proponents shared a much lower proportion of unreliable information topics (11.3%).

- Across both vaccine proponents and vaccine opponents, the largest single topic of conversation was “Disease & Vaccine Narratives,” a discussion-based topic in which users compared COVID-19 with other diseases, most notably influenza. These messages likely added to public confusion about the seriousness and nature of COVID-19, confusion that has endured for months.

- In the context of an ‘infodemic,’ efforts to address and correct misinformation are complicated by the high levels of scientific uncertainty. Focusing on only the most conspicuous forms of misinformation—blatant conspiracy theories, bot-driven narratives, and known communities linked by conspiracist ideologies—is one approach to addressing misinformation, but given the complexity of the current moment, this strategy may fail to address the more subtle types of falsehoods that may be shared more broadly.

Implications

On February 2, 2020, the World Health Organization declared that the spread of the novel coronavirus, 2019-nCoV, was accompanied by an ‘infodemic,’ described as “an overabundance of information—some accurate and some not” that was inhibiting the spread of trustworthy and reliable information (World Health Organization, 2020). Even under the best circumstances, effective health communication during the early stages of a pandemic is challenging: it can be difficult to communicate high levels of scientific uncertainty to the public, and information is constantly changing (Vaughan & Tinker, 2009). During an ‘infodemic,’ reliable health information must compete against a veritable “tsunami” of conflicting claims, many of which are amplified across social media with greater speed and reach (Zarocostas, 2020). While much of the ensuing media attention has focused narrowly on misinformation, an ‘infodemic’ is characterized by the volume of information, not its quality. Online health misinformation, defined as “a health-related claim of fact that is currently false due to a lack of scientific evidence” (Chou et al., 2018), was a growing issue before the pandemic, but the scope of the problem has increased dramatically with the spread of COVID-19.

Misinformation is commonly attributed to sources with low journalistic integrity and, therefore, low credibility (e.g., Grinberg et al., 2019). These sources typically promote conspiracy theories and other fringe opinions. In this study, we argue that even high-credibility sources emphasizing factual and accurate reporting can become vectors of misinformation in a highly uncertain context (see also Reyna, 2020). It is important to recognize the full spectrum of misinformation, from false facts and misleading uses of data to unverifiable rumors and fully developed conspiracy theories, because all types can impede effective communication.

In this analysis, we focused on communication within existing vaccine-focused communities on Twitter. Recent studies have demonstrated that messages about vaccination are among the most active vectors for the spread of health misinformation and disinformation (Broniatowski et al., 2018; Larson, 2018; Walter et al., 2020). Although they are a small fraction of the general public, vaccine opponents have an outsized presence online, especially on Twitter (Pew Research Center, 2017). Anti-vaccine arguments have also been amplified by known malicious actors, including bots and state-sponsored trolls (Broniatowski et al., 2018). Despite Twitter’s recent efforts to limit the spread of misleading and false health claims, many vaccine opponent accounts remain active on the platform. As news of a novel coronavirus outbreak in Wuhan, China, started to spread in the American media, accounts that frequently tweet about vaccines, both those in favor and those opposed, were among the first to start regularly tweeting about the novel virus and the public health community’s reaction.

It is also important to recognize the diversity of viewpoints within the broader Twitter vaccine community. Although some accounts tweet almost exclusively about vaccines, many others also discuss other types of content, making it possible to identify subgroups based on shared interests such as politics, public health, or current events. To better understand the spread of online health misinformation, and potentially mitigate its impact, we believe it is necessary to first understand the communication norms and topics of conversation that shape these varied subgroups. Qualitative research has demonstrated that vaccine-hesitant attitudes likely serve as a signal of social identity and can form a type of social capital in community development (Attwell & Smith, 2017). We believe a similar function may help explain the emergence of online subgroups with shared interests and communication norms, for both vaccine opponents and proponents. While a great deal of research has focused on “anti-vaxxers” online (i.e., vaccine opponents), less has studied their pro-vaccine counterparts, many of whom use Twitter as a platform not to promote vaccines directly, but to debunk and refute claims made by vaccine opponents.

To date, most news coverage of COVID-19 misinformation has focused on unmistakably false claims, including conspiracy theories, unchecked rumors, false prevention methods, and dubious “cures” (see also Liu et al., 2020). Many of these claims have been promulgated by vaccine opponents. This should not be a surprise; many of these accounts are adept at quickly fitting new information into existing narratives that align with their worldviews. Most recently, this has taken the form of criticizing US public health measures to limit the spread of the disease: launching the #FireFauci hashtag calling for Dr. Anthony Fauci’s dismissal from the President’s COVID-19 Response Team (Nguyen, 2020), carrying once-fringe conspiracy theories targeting Bill Gates and 5G wireless to more mainstream audiences (Wakabayashi et al., 2020), and maintaining a prominent presence at the “resistance” protests held in multiple state capitals against social distancing measures (Wilson, 2020). Most egregious was the Plandemic propaganda video, which sought to undermine and discredit research on a future COVID-19 vaccine (Zadrozny & Collins, 2020). These instances also suggest an increasing overlap between the alt-right and vaccine opposition in the United States (Frenkel et al., 2020; Zhou et al., 2019). Although this type of highly visible misinformation can be dangerous, it is often easily debunked and may primarily appeal to fringe audiences. We wanted to know what vaccine opponents were sharing beyond conspiracy theories, and whether topics varied by subgroup. We found that among vaccine opponents, roughly ⅓ of topics were likely misinformation, another ⅓ were discussion topics, and slightly under ⅓ relied on more reliable information sources, including public health announcements.

We found that vaccine advocates, especially those without medical, scientific, or public health expertise (i.e., who were not doctors, researchers, or affiliated with health organizations) who primarily engaged in debates with vaccine opponents, promoted mixed messages, some of which overlapped with vaccine opponents’ messages downplaying the severity of the pandemic. This is where the nuances among types of misinformation matter: tweeting a misleading claim about disease severity is not the same as tweeting a conspiracy theory suggesting that vaccines are a government plot to track citizens, yet both are part of what makes an ‘infodemic’ so challenging. COVID-19 has proven to be both highly contagious and deadly, a combination that makes the current pandemic particularly difficult to contain (CDC COVID-19 Response Team et al., 2020). Because early messaging focused on the greater risks of influenza and urged Americans to get an influenza vaccine, the severity of COVID-19 was not clearly communicated. In late May, a Gallup poll found that while roughly two-thirds of Americans believed the coronavirus was deadlier than seasonal flu, a significant number still believed the virus was less deadly, including a majority of Republicans (Ritter, 2020). Singh et al. (2020) found “Flu Comparisons” to be among the most prevalent myths on Twitter early in the COVID-19 pandemic. Recent work suggests that exposure to misinformation on social media, including messages downplaying disease severity, was associated with a lower likelihood of practicing social distancing (Bridgman et al., 2020).

What does this mean for efforts to address the COVID-19 ‘infodemic’ on Twitter? First, we need to know which accounts are spreading misinformation. To the best of our knowledge, most of these highly active vaccine accounts are genuine users, not bots. While recent reports suggest that up to 50% of accounts tweeting about COVID-19 could be bots (Roberts, 2020), our findings highlight the role of real people disseminating information within existing online communities. Limiting fully automated accounts and the misuse of amplification tools is still useful for weeding out the most egregious violations, but it is unlikely to help much in this specific context. Fact-checking content may be more effective, but it may still fail to capture more nuanced forms of misinformation, especially in discussion-based topics. Online communities are not homogeneous; our findings suggest that online rumors tend to circulate within smaller subgroups. While many topics overlap, specific arguments are likely to vary. This suggests that a one-size-fits-all approach to combating misinformation is unlikely to work for both vaccine-focused opponents and opponents motivated by conservative politics. Similarly, while health organizations and health professionals may be sharing reliable information on Twitter, some of their well-meaning allies, especially self-proclaimed vaccine activists, may inadvertently be sending out conflicting health information. Staying aware of this subgroup of proponents and developing easily shared, evidence-based online resources may be one way to improve the quality of information being shared.

Second, we need to recognize that while the most obvious pre-COVID-19 sources of misinformation (for instance, known anti-vaccine and conspiracy theory accounts) will continue to spread misinformation during the pandemic, even trusted and reliable sources can contribute to the ‘infodemic’ by spreading falsehoods and misleading facts. Further, because these sources may be more trusted by the public, their claims are less easily debunked or dismissed. An ‘infodemic’ consists of information from multiple sources: the scientific community, policy and practice, the media, and social media (Eysenbach, 2020). As information is translated between sources, distortions can occur, particularly as complex science is reinterpreted by lay audiences. As the most accessible and least filtered source, social media is likely to be the most vulnerable to misinformation. By focusing only on the most blatant forms of misinformation and the actors sharing them, both the media and scholars may inadvertently lead the public to believe that they haven’t been exposed to misinformation about COVID-19, or that these egregious forms of misinformation are the most common. By drawing attention to fringe theories, well-meaning users may also further amplify misinformation (Ahmed et al., 2020). This is why we urge scholars of misinformation to go beyond the most visible forms of misinformation to highlight the complexities and subtleties that make an ‘infodemic’ so challenging.

Findings

Finding 1: A plurality of top accounts oppose vaccination, and likely represent human users.

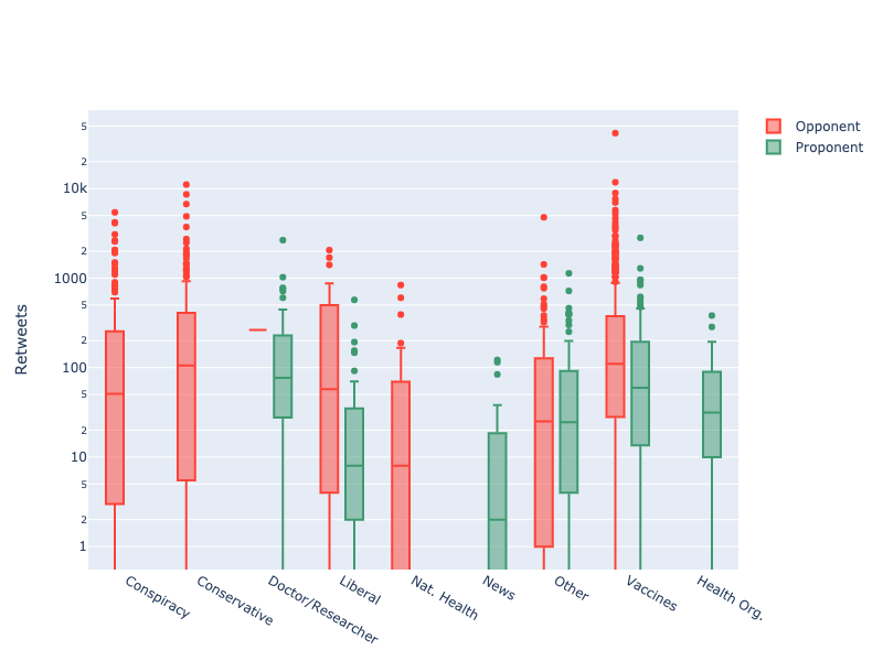

Of the 2,000 accounts in our sample, 45% (n=905) opposed vaccination, 24% (n=479) were in favor of vaccination, 15% (n=311) were no longer publicly available on Twitter, and 15% (n=305) did not indicate a clear position on vaccines. Among accounts that were retweeted at least once, vaccine opponents were retweeted significantly more frequently than vaccine proponents, t(1210)=6.86, p<0.001, after applying a logarithmic transform to correct for skewed data.
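The retweet comparison can be sketched as below. This is a minimal illustration, not the authors’ code: it assumes a pooled-variance (Student’s) two-sample t-test, which fits the reported degrees of freedom (n1 + n2 - 2 = 1210 would imply 1,212 accounts retweeted at least once), and a natural-log transform. The article does not name the log base, but rescaling every value by a constant leaves t unchanged, so the base does not matter. The function names and retweet counts are hypothetical.

```python
import math
from statistics import mean, variance

def log_transform(counts):
    """Natural-log transform of retweet counts (assumes every count is >= 1)."""
    return [math.log(c) for c in counts]

def pooled_t_test(a, b):
    """Student's two-sample t statistic with pooled variance; returns (t, df)."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(a) - mean(b)) / standard_error, df

# Hypothetical retweet counts, for illustration only.
opponent_retweets = [150, 320, 45, 980, 210]
proponent_retweets = [12, 60, 33, 8, 95]
t, df = pooled_t_test(log_transform(opponent_retweets),
                      log_transform(proponent_retweets))
print(f"t({df}) = {t:.2f}")
```

The log transform compresses the long right tail of retweet counts, so a handful of viral accounts do not dominate the group means.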

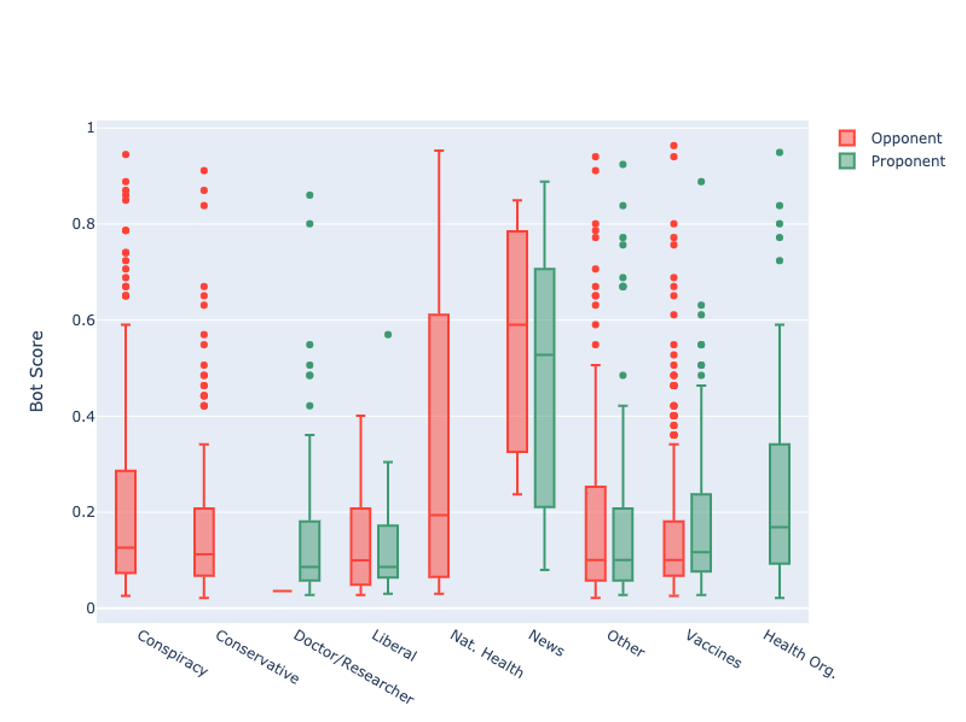

Botometer scores (Davis et al., 2016) were low (< 0.2) for the majority of accounts, indicating a low likelihood of automation. After applying a logistic transform to control for floor and ceiling effects, we did not detect any significant difference in bot scores between vaccine opponent and proponent accounts, t(1368) = 0.79, p = 0.43. Pew Research Center uses a threshold of 0.43 to identify likely bots (Wojcik et al., 2018). Applying this criterion, 17% (n=298) of the 1,754 accounts with complete data were likely bots. Additionally, 12% of all accounts (n=246) did not have available bot scores, suggesting that the accounts had switched privacy settings (n=198, 10%) or had been closed (n=48, 2%), either voluntarily or because they had been removed and purged from Twitter for violating its terms of service (Twitter Help Center, n.d.).
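A minimal sketch of the two score operations described above: a log-odds (logit) transform, which is one common reading of the “logistic transform” used to stretch scores bunched near 0 and 1, and the share of accounts at or above Pew’s 0.43 cutoff. Whether the cutoff is inclusive, and how scores of exactly 0 or 1 were handled (here, clamped by a small hypothetical `eps`), are assumptions. Accounts without a score are dropped, mirroring the 1,754 accounts with complete data.

```python
import math

PEW_BOT_THRESHOLD = 0.43  # cutoff cited from Wojcik et al. (2018)

def logit(p, eps=1e-6):
    """Log-odds transform. Clamping to [eps, 1 - eps] (eps is an assumed value)
    keeps scores of exactly 0 or 1 finite."""
    p = min(max(p, eps), 1 - eps)
    return math.log(p / (1 - p))

def share_likely_bots(scores, threshold=PEW_BOT_THRESHOLD):
    """Return (likely bots, accounts with a score, share of likely bots).
    Scores of None (private or closed accounts) are excluded; a score at or
    above the threshold counts as a likely bot (assumed inclusive)."""
    complete = [s for s in scores if s is not None]
    bots = sum(1 for s in complete if s >= threshold)
    return bots, len(complete), bots / len(complete)

# Hypothetical scores; None marks an account without a Botometer score.
print(share_likely_bots([0.05, 0.12, 0.61, None, 0.18, 0.43]))
```

The logit-transformed scores, not the raw ones, would then feed the t-test, since raw scores piled up near 0 violate its normality assumption more severely.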

Finding 2: A significant proportion of both opponents and proponents tweet primarily about vaccines. Vaccine opponent subgroups emerged around conservative politics and conspiracy theories. Vaccine proponent subgroups emerged around doctors/researchers and health organizations.

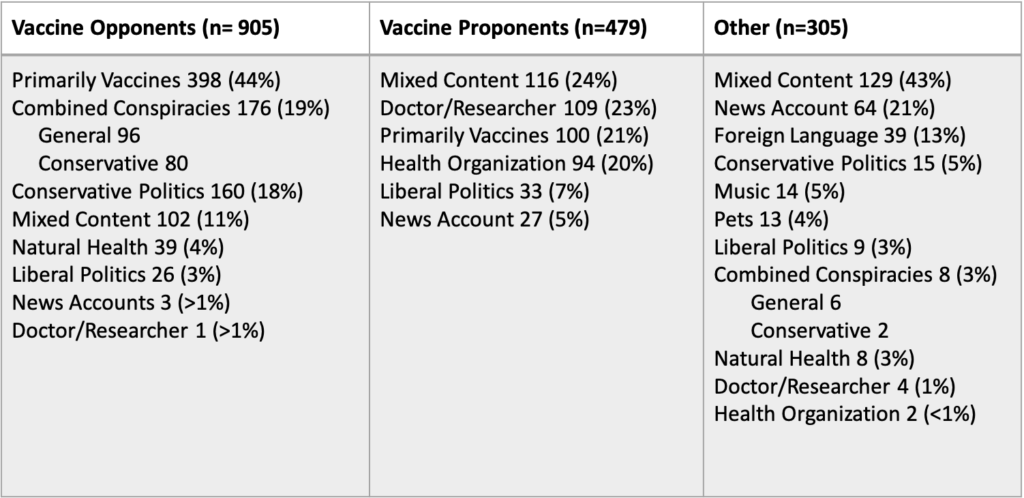

Roughly a quarter of all accounts (n=498; 25%) posted primarily vaccine content. These dedicated accounts disproportionately opposed vaccination (oppose n=398, 80%; favor n=100, 20%; χ² = 72.56, p < 0.001). For vaccine opponents, vaccine-focused accounts are those that post almost entirely vaccine-related content with few posts about other topics. This is one of the more cohesive communities we observed, with accounts retweeting information disseminated by a handful of anti-vaccine activists. For vaccine proponents, vaccine-focused accounts represent a combination of health advocates who tweet almost solely about vaccines and accounts we dubbed “anti anti-vax,” which use Twitter to debate anti-vaccine accounts and to debunk or mock anti-vaccine arguments. Another 347 (17%) accounts shared “mixed content” not easily classified into a single category. Mixed-content accounts made up a significantly larger share of vaccine proponents than of vaccine opponents (favor n=116, 33% of mixed-content accounts; oppose n=102, 29%; χ² = 39.56, p < 0.001).

Thirteen percent of accounts shared political content: 9% (n=175) posted conservative content and 4% (n=78) shared liberal content. We defined “conservative” as tweeting in support of major Republican political figures (most commonly President Trump) or against major Democratic political figures or identities (e.g., disparaging “libs”). We defined “liberal” as the reverse. Accounts sharing conservative political content overwhelmingly also opposed vaccines (oppose n=160, 91%; favor n=0, 0%). Although similar numbers of accounts supporting vaccines (n=33) and opposing vaccines (n=26) shared liberal political content, this represented a significantly larger proportion of vaccine proponents than of vaccine opponents (7% vs. 3%, χ² = 12.38, p = 0.0004).
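The chi-square values in this finding can be reproduced from the reported counts. The sketch below assumes a Pearson chi-square test without continuity correction on a 2×2 table (in the subgroup or not, by oppose or favor), with the 905 opponents and 479 proponents from Finding 1 as column totals. Under that assumption it gives 72.56, 39.56, and 12.38 for the three comparisons, matching the reported statistics. The article does not state which test variant was used, so treat this as a reconstruction; the function names are hypothetical.

```python
import math

# Column totals from Finding 1: accounts opposing and favoring vaccination.
OPPOSE_TOTAL, FAVOR_TOTAL = 905, 479

def chi_square_2x2(a, b, c, d):
    """Pearson chi-square without continuity correction for [[a, b], [c, d]].
    Returns (statistic, p_value) with 1 degree of freedom."""
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # Upper tail of a chi-square with 1 df: P(X > x) = erfc(sqrt(x / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))

def subgroup_test(oppose_in, favor_in):
    """Rows: in subgroup / not in subgroup; columns: oppose / favor."""
    return chi_square_2x2(oppose_in, favor_in,
                          OPPOSE_TOTAL - oppose_in, FAVOR_TOTAL - favor_in)

for label, oppose_in, favor_in in [("primarily vaccines", 398, 100),
                                   ("mixed content", 102, 116),
                                   ("liberal politics", 26, 33)]:
    stat, p = subgroup_test(oppose_in, favor_in)
    print(f"{label}: chi2 = {stat:.2f}, p = {p:.2g}")
```

Using the whole-sample stance totals as the comparison baseline is what lets a subgroup with similar raw counts (33 vs. 26) still differ significantly in proportion.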

Nine percent (n=184) shared conspiracist content, split into general conspiracy theories (n=104, 5%) and conservative conspiracy theories (e.g., QAnon, New World Order, etc.) (n=82, 4%). By conspiracist, we mean attempts to “explain events as secret acts of powerful, malevolent forces” (Jolley & Douglas, 2014). In this dataset, this included allusions to shadowy groups like the Illuminati, complex theories that assume technology (e.g., 5G wireless, “chemtrails,” etc.) is part of a plot to harm citizens, and more extreme theories alleging cover-ups, wrongdoing, and corruption within public health agencies, pharmaceutical companies, and charitable organizations. We distinguished between conspiracy theories that were largely apolitical and did not explicitly name political figures, and those that were political and frequently overlapped with conservative politics. Accounts in the political group tended to use distinctive hashtags combining the two (e.g., #QAnon or #WWG1WGA alongside #MAGA and #Trump2020). Recognizing that this distinction may be arbitrary, we also ran analyses using the combined conspiracy theory group. Almost all (96%, n=176) conspiracist content was shared by accounts that also opposed vaccines.

Doctor/researcher accounts represented 6% (n= 114) and health organizations represented 5% (n= 102) of all accounts, with the majority (95%) of accounts in favor of vaccines. Both types of accounts tended to tweet about health issues more generally, which sometimes included vaccines.

A non-parametric Kruskal-Wallis rank sum test showed that retweet counts differed significantly by category for both vaccine opponents (χ²(7) = 62.02, p < 0.001) and vaccine proponents (χ²(5) = 64.12, p < 0.001). Among vaccine opponents, accounts focused primarily on vaccination and those focused on conservative politics had the most retweets, both with a median of more than 100. In contrast, no category of pro-vaccine accounts achieved this degree of engagement: the most retweeted category, doctors/researchers, achieved a median of 77 retweets, and accounts focused primarily on vaccines were retweeted a median of 59.5 times.
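For reference, a plain-Python sketch of the Kruskal-Wallis H statistic with the standard tie correction. H is compared against a chi-square distribution with k - 1 degrees of freedom, which is why the results above are written as χ²(7) and χ²(5), implying eight opponent categories and six proponent categories. Function names and data are hypothetical, and the p-value step (a chi-square upper tail) is omitted; in practice a library routine such as `scipy.stats.kruskal` would normally be used.

```python
from itertools import chain

def average_ranks(values):
    """1-based ranks of values; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks

def kruskal_wallis(*groups):
    """Kruskal-Wallis H with tie correction; returns (H, degrees_of_freedom)."""
    pooled = list(chain.from_iterable(groups))
    n = len(pooled)
    ranks = average_ranks(pooled)
    rank_term, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        rank_term += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * rank_term - 3 * (n + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n) over tie groups.
    tie_sizes = {}
    for v in pooled:
        tie_sizes[v] = tie_sizes.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in tie_sizes.values())
    return h / (1 - ties / (n ** 3 - n)), len(groups) - 1

# Hypothetical retweet counts for three account categories.
h, df = kruskal_wallis([120, 340, 95, 210], [15, 40, 8, 60], [77, 30, 110, 52])
print(f"H = {h:.2f} on {df} df")
```

Because the test works on ranks, it is insensitive to the heavy skew in retweet counts without needing the log transform used for the two-group comparison.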

After applying a logistic transform to control for floor and ceiling effects, bot scores also varied significantly by category among both vaccine proponents, F(5, 469) = 15.25, p < 0.001, and vaccine opponents, F(7, 887) = 7.14, p < 0.001, with news aggregators the most bot-like accounts for both opponents and proponents.
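The category comparison of bot scores can be sketched as a one-way ANOVA on logit-transformed scores. This is an illustration under assumptions (a logit with a small clamp `eps`, hypothetical function names and scores), not the authors’ code. The degrees of freedom are k - 1 and N - k, so F(7, 887) would correspond to 895 opponent accounts across eight categories, and F(5, 469) to 475 proponent accounts across six.

```python
import math
from statistics import mean

def logit(p, eps=1e-6):
    """Log-odds transform; clamps p into [eps, 1 - eps] so the result is finite."""
    p = min(max(p, eps), 1 - eps)
    return math.log(p / (1 - p))

def one_way_anova(*groups):
    """One-way ANOVA; returns (F, df_between, df_within)."""
    values = [x for g in groups for x in g]
    grand_mean = mean(values)
    k, n = len(groups), len(values)
    # Between-group and within-group sums of squares.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within

# Hypothetical bot scores for three account categories.
scores_by_category = {
    "primarily vaccines": [0.05, 0.12, 0.20, 0.08],
    "news aggregator": [0.55, 0.70, 0.48, 0.62],
    "mixed content": [0.15, 0.30, 0.10, 0.22],
}
f, df_b, df_w = one_way_anova(
    *[[logit(s) for s in scores] for scores in scores_by_category.values()])
print(f"F({df_b}, {df_w}) = {f:.2f}")
```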

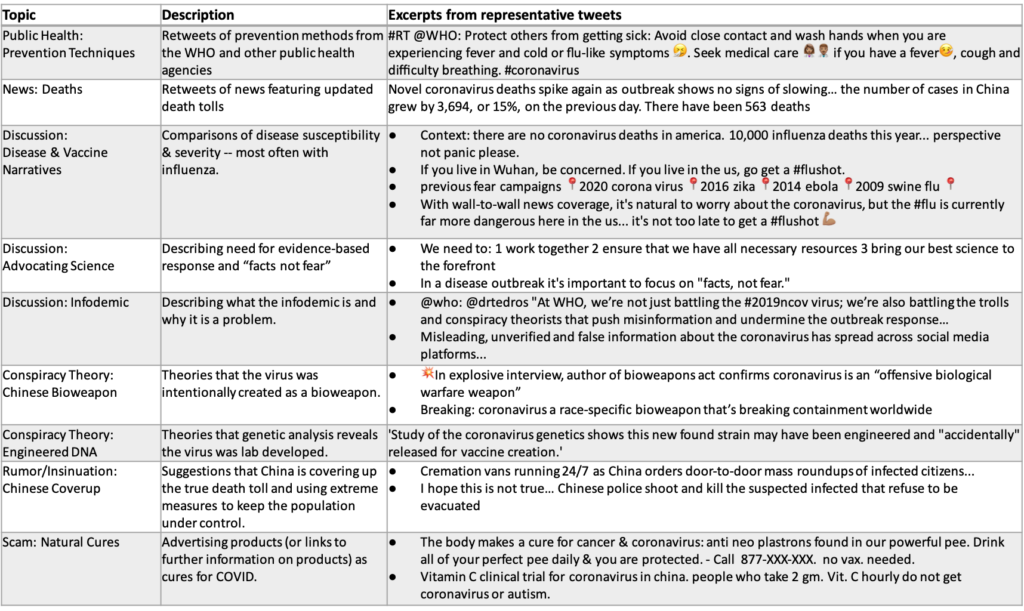

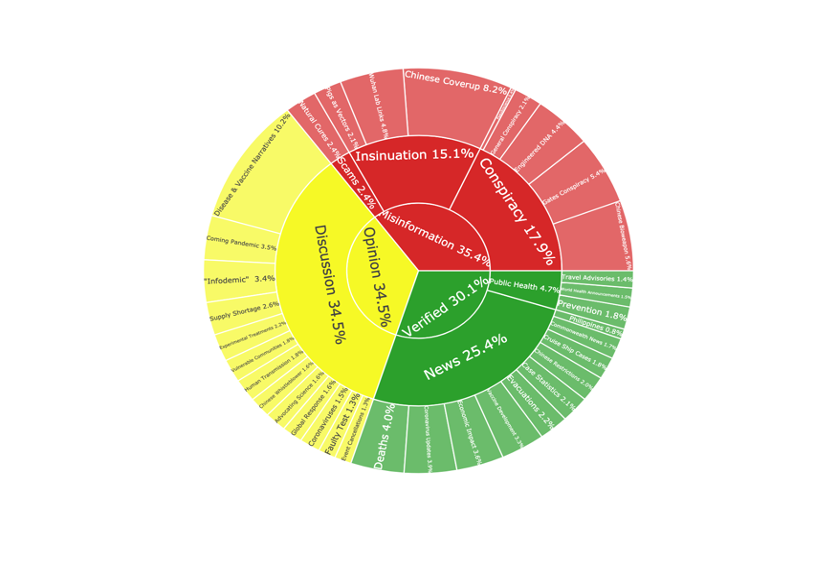

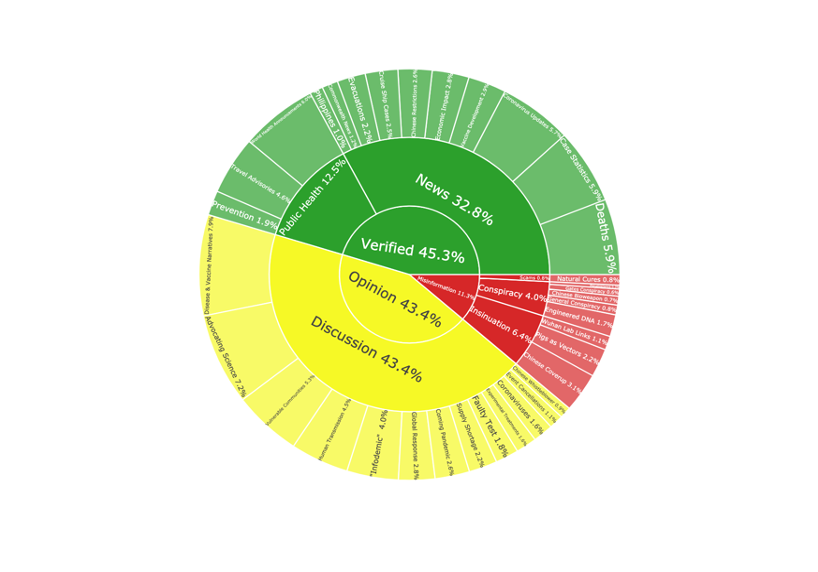

Finding 3: The quality of COVID-19 information varied. The most reliable information included public health announcements and retweets of news; less reliable information came from discussion-oriented topics. Unreliable information included 5 distinct topics on conspiracy theories, 3 on rumors and insinuations, and 1 featuring scam “cures.”

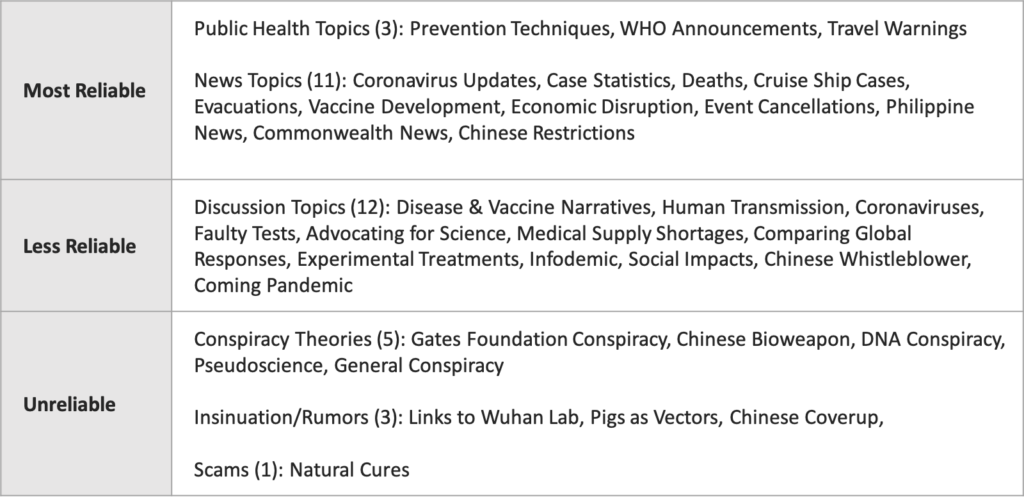

We extracted 35 topics from COVID-19-related tweets generated by these accounts (more details are provided in the supplemental files). Only 5 of these 35 topics featured vaccines. We grouped these topics into four categories: public health updates, news, discussion, and misinformation. These categories can also be thought of as more reliable information (public health updates and news topics), less reliable information and opinions (discussion topics), and unreliable information (misinformation topics).

Among the most reliable information topics, public health topics tended to feature direct retweets from health organizations (See Table 3, example: “Prevention Techniques”). News topics also tended to feature direct retweets of news headlines, often without additional commentary (example: “Deaths”). Most news topics focused on disease updates and the public health response, including emerging facts about the disease, continually updated case and death statistics, and news of vaccines in development.

Discussion topics featured fewer direct retweets and more personal comments, making these topics less reliable than news and public health topics. Several of these topics are very straightforward, with a clear theme that is consistent across most tweets (example: “Advocating Science”). Other discussion topics featured users agreeing on a general problem but disagreeing on specifics (example: “Infodemic”). The most debated topics were those that combined two or more similar arguments built around shared content (example: “Disease and Vaccine Narratives”). These claims were used both for and against vaccination, but the majority were used to downplay the severity of COVID-19. Many ended with appeals to vaccinate, but others took a different tack and decried “fear campaigns” from the media and “big pharma.”

Among the unreliable topics, we identified 5 topics on conspiracy theories, 3 based on rumors and insinuation, and 1 promoting scam cures. The conspiracy theory topics featured secret plots connecting powerful individuals and institutions to intentional harm. Some of these theories have already been widely covered in the mainstream media (example: “Chinese Bioweapon”), while others were less widely circulated (example: “Engineered DNA”). The category of rumors/insinuation included topics that hinted at or suggested misdeeds but often failed to commit fully to those claims. These topics were less disease-focused and seemed to have more political agendas (example: “Chinese Coverup”). The single scam topic featured attempts to market natural cures.

Finding 4: Anti-vaccine accounts were more likely to share unreliable information, including conspiracy theories about disease origins and criticism of China’s disease response. Pro-vaccine accounts shared more public health information, but also more discussion topics. Both types of accounts shared comparisons of disease severity, often downplaying the risks of COVID-19.

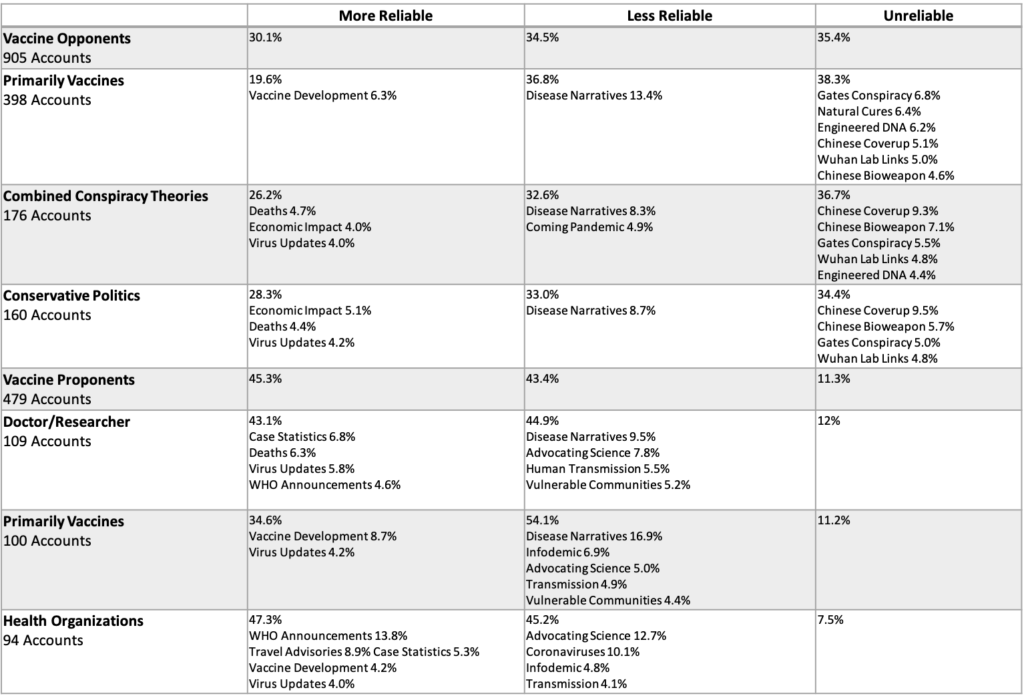

Assessing the distribution of topics among vaccine opponents we found approximately ⅓ of topics fell into each category: more reliable (30.1%), less reliable (34.5%), and unreliable (35.4%). In contrast, the distribution for vaccine proponents was roughly evenly split between more reliable (45.3%) and less reliable (43.4%), with only 11.3% unreliable topics. There are two important takeaways here: first, that vaccine opponents use a variety of content including both reliable and unreliable sources to make their arguments, and second, although it is less common, vaccine proponents are not exempt from spreading misinformation.
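The article reports tier percentages per group without spelling out how topic weights were aggregated. One plausible approach, assumed here, is to sum each tweet’s topic proportions within a group, map each topic to its reliability tier, and normalize; an alternative would be to assign each tweet to its single highest-weight topic and count tweets. Function and variable names are hypothetical.

```python
from collections import defaultdict

# Reliability tier for each information category, as described in the text.
CATEGORY_TIER = {
    "public health": "more reliable",
    "news": "more reliable",
    "discussion": "less reliable",
    "misinformation": "unreliable",
}

def tier_shares(doc_topics, topic_category):
    """Share of total topic weight falling in each reliability tier.

    doc_topics: one dict per tweet, mapping topic id -> topic proportion
                (e.g., rows of an LDA document-topic matrix).
    topic_category: topic id -> information category label.
    """
    totals = defaultdict(float)
    for weights in doc_topics:
        for topic, weight in weights.items():
            totals[CATEGORY_TIER[topic_category[topic]]] += weight
    grand_total = sum(totals.values())
    return {tier: weight / grand_total for tier, weight in totals.items()}

# Hypothetical toy input: two tweets over three topics.
example = tier_shares([{0: 0.5, 1: 0.5}, {2: 1.0}],
                      {0: "news", 1: "discussion", 2: "misinformation"})
print(example)
```

Running this separately on the opponent and proponent tweet sets would yield one three-way split per group, the form in which the percentages above are reported.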

To focus on specific subgroups, we assessed the top three subgroups for both vaccine opponents and proponents. For vaccine opponents, the top three subgroups — primarily vaccines, combined conspiracy theories, and conservative politics — all presented similar topic breakdowns as the overall anti-vaccine topic model. Slight differences emerged: accounts tweeting primarily about vaccines and conspiracy theories shared a greater proportion of conspiracy topics than those tweeting about conservative politics. Accounts tweeting primarily about vaccines were also more clearly vaccine focused, featuring news of vaccine development and the greatest proportion of natural cures topics (6.4%). The vaccine-focused subgroup shared roughly the same proportion of conspiracy theory topics as the combined conspiracist subgroup (19.7% vs 19.8%). In addition to conspiracy theory topics, the combined conspiracy theories subgroup also incorporated reliable news (in the form of death rates and economic impacts) and discussion topics (both “disease narratives” downplaying severity, but also “coming pandemic” amplifying concerns).

Among vaccine proponents, doctors/researchers and health organizations were more closely aligned, featuring a greater proportion of reliable news and public health information. Health organizations were the only subgroup sharing a greater proportion of reliable news and public health topics than opinion topics and shared the lowest proportion of misinformation topics (7.5%). The accounts tweeting primarily about vaccines (not affiliated with doctors, researchers, or health organizations) shared a higher proportion of less reliable discussion topics (54.1%) including the largest share of the category “Disease & Vaccine Narratives”. We surmise that this subgroup was most active in spreading the “flu is worse so get a flu vaccine” type narratives within this topic.

Methods

We used an archive of vaccine-related tweets (Dredze et al., 2014) to compile a list of the 2,000 most active vaccine-related accounts on Twitter. Accounts were ranked by the total number of messages containing the keyword “vaccine” posted from January 1 to December 31, 2019. The distribution was highly skewed: the most active account shared 16,924 tweets meeting our inclusion criterion, the least active accounts shared 120, and the median was 218 (IQR: 152.75–376.25).
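A minimal sketch of the account-selection step, assuming the archive can be read as `(user_id, text)` pairs and that a tweet qualifies when its lowercased text contains “vaccine” (so “vaccines” also matches). Both the input format and the matching rule are assumptions, as is the quartile method: `statistics.quantiles(..., method="inclusive")` interpolates linearly between order statistics, which can produce quarter-unit values like the reported 152.75 and 376.25. Ties at the 2,000th position are broken by first appearance in `Counter.most_common`, a detail the article does not address.

```python
from collections import Counter
from statistics import median, quantiles

def most_active_accounts(tweets, keyword="vaccine", top_n=2000):
    """tweets: iterable of (user_id, text) pairs.
    Counts each user's tweets whose lowercased text contains the keyword
    (substring match, so "vaccines" also counts) and returns the top_n
    (user_id, count) pairs, most active first."""
    keyword = keyword.lower()
    counts = Counter(user for user, text in tweets if keyword in text.lower())
    return counts.most_common(top_n)

def summarize(counts):
    """Max, median, interquartile range, and min of per-account counts."""
    q1, _, q3 = quantiles(counts, n=4, method="inclusive")
    return {"max": max(counts), "median": median(counts),
            "iqr": (q1, q3), "min": min(counts)}

# Hypothetical toy archive.
archive = [("a", "Vaccine news"), ("a", "new vaccines work"), ("a", "hello"),
           ("b", "VACCINE schedule"), ("c", "flu season")]
top = most_active_accounts(archive, top_n=2)
print(top)
print(summarize([count for _, count in top]))
```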

We examined the screen name, Twitter handle, and, if available, user-provided description for each account. Over a 12-day period in March 2020, two independent annotators (AJ, AS) manually assigned each account to one of three categories: pro-vaccine, anti-vaccine, or other. Annotators accessed each user’s Twitter page and public profile to assess recent content. Any accounts that were no longer available on Twitter, or whose privacy settings had been switched to private, were excluded from annotation.

After assessing vaccine sentiment, annotators noted what other types of content each account shared. While many accounts posted almost exclusively about vaccines, we also identified groups of accounts focusing on liberal or conservative politics (e.g., expressing support for, or opposition to, specific political candidates or parties), conservative conspiracy theories (e.g., QAnon, “Deep State,” etc.), general conspiracy theories (e.g., eugenics plots, opposition to 5G wireless, theories regarding mind control, chemtrails, etc.), natural/alternative health (e.g., veganism, chiropractors, naturopaths), doctors/researchers (e.g., “tweetatricians,” science journalists, epidemiologists), and news accounts (including both original content and news aggregators). A broad category of other accounts (e.g., joke bots, pet vaccines, music stations, general content) fell under “mixed content.” If an account posted content from across multiple content areas, or no discernible pattern was observed, it was also classified as “mixed content.” Intercoder reliability was assessed with a random sample of 45 accounts, with 93% agreement on vaccine sentiment (Fleiss’ kappa 0.91, CI: 0.81–1.00) and 78% agreement on subgroup assignment (Fleiss’ kappa 0.75, CI: 0.61–0.89). Discrepancies in subgroup assignment were most common between the doctor/researcher and health organization categories, between conservative politics and conservative conspiracy theories, and in the assignment of “mixed.” To address inconsistencies, annotators agreed that if no clear pattern emerged, “mixed” was the best choice.
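Fleiss’ kappa, as reported above, compares observed agreement with the agreement expected from each category’s overall share. A minimal plain-Python sketch follows; the helper that builds the subject-by-category count table from two annotators’ labels is hypothetical, the labels are invented, and the confidence intervals reported in the text are not computed here.

```python
def to_table(labels_a, labels_b, categories):
    """Hypothetical helper: per-subject counts of how many of the two
    annotators chose each category."""
    return [[(a == c) + (b == c) for c in categories]
            for a, b in zip(labels_a, labels_b)]

def percent_agreement(labels_a, labels_b):
    return sum(a == b for a, b in zip(labels_a, labels_b)) / len(labels_a)

def fleiss_kappa(table):
    """table[i][j]: number of raters assigning subject i to category j.
    Every row must sum to the same number of raters."""
    n_subjects, n_raters = len(table), sum(table[0])
    n_categories = len(table[0])
    # Overall share of assignments going to each category.
    p_j = [sum(row[j] for row in table) / (n_subjects * n_raters)
           for j in range(n_categories)]
    # Observed agreement per subject: fraction of rater pairs that agree.
    p_i = [(sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
           for row in table]
    p_bar = sum(p_i) / n_subjects
    p_e = sum(p * p for p in p_j)  # agreement expected by chance
    return (p_bar - p_e) / (1 - p_e)

# Hypothetical labels from two annotators for four accounts.
ann1 = ["pro", "pro", "anti", "pro"]
ann2 = ["pro", "pro", "anti", "anti"]
table = to_table(ann1, ann2, ["pro", "anti", "other"])
print(percent_agreement(ann1, ann2), round(fleiss_kappa(table), 3))
```

The gap between raw agreement and kappa (0.75 vs. about 0.47 in this toy case) is why both figures are reported: kappa discounts the agreement two annotators would reach by chance when one category dominates.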

Simultaneously, we fit a Latent Dirichlet Allocation (LDA) topic model (Blei et al., 2003), as implemented in the MALLET software package (McCallum, 2002), to 80,153 tweets collected from the 2,000 accounts. These tweets all contained keywords related to COVID-19, including: ‘coronavirus,’ ‘wuhan,’ ‘2019ncov,’ ‘sars,’ ‘mers,’ ‘2019-ncov,’ ‘ncov,’ ‘wuflu,’ ‘covid-19,’ ‘covid,’ ‘#covid19,’ ‘covid19,’ ‘sars2,’ ‘sarscov19,’ ‘covid–19,’ ‘caronavirus,’ ‘#trumpvirus,’ ‘#pencedemic,’ ‘#covid19us,’ ‘#covid19usa,’ ‘#trumpliesaboutcoronavirus,’ ‘#pencepandemic.’ Using Bayesian hyperparameter optimization (Wallach et al., 2009), we derived 35 topics and generated 50 representative tweets for each topic. LDA topic modelling assumes that words that commonly co-occur are likely to belong to a topic, and that documents (in this instance, tweets) that share common words are likely to share a similar topic. Each tweet receives a score for each topic indicating how likely it is that the tweet is related to that topic; higher scores reflect a higher degree of relatedness.
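MALLET fits LDA by collapsed Gibbs sampling. As a rough illustration only, the plain-Python sketch below implements that sampler with fixed symmetric priors: `alpha`, `beta`, and the iteration count are assumed values, and the Bayesian hyperparameter optimization (Wallach et al., 2009) used in the study is omitted. It then ranks tweets by their share of a topic to pull out the most representative ones, which is one plausible reading of how representative tweets are chosen. Tokenization and function names are hypothetical; this is not a substitute for MALLET on 80,000 tweets.

```python
import random

def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iters=200, seed=0):
    """Collapsed Gibbs sampling for LDA. docs: list of token lists.
    Returns (theta, vocab, topic_word_counts); theta[d][k] is the
    estimated share of topic k in document d."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    index = {w: i for i, w in enumerate(vocab)}
    K, V = num_topics, len(vocab)
    ndk = [[0] * K for _ in docs]      # tokens in doc d assigned to topic k
    nkw = [[0] * V for _ in range(K)]  # tokens of word w assigned to topic k
    nk = [0] * K                       # total tokens assigned to topic k
    z = []                             # current topic of every token
    for d, doc in enumerate(docs):     # random initial assignment
        z_d = []
        for w in doc:
            k = rng.randrange(K)
            z_d.append(k)
            ndk[d][k] += 1
            nkw[k][index[w]] += 1
            nk[k] += 1
        z.append(z_d)
    topics = range(K)
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v, k = index[w], z[d][i]
                # Remove this token's current assignment from the counts.
                ndk[d][k] -= 1
                nkw[k][v] -= 1
                nk[k] -= 1
                # Full conditional p(z = t | all other assignments).
                weights = [(ndk[d][t] + alpha) * (nkw[t][v] + beta)
                           / (nk[t] + V * beta) for t in topics]
                k = rng.choices(topics, weights=weights)[0]
                z[d][i] = k
                ndk[d][k] += 1
                nkw[k][v] += 1
                nk[k] += 1
    theta = [[(ndk[d][k] + alpha) / (len(doc) + K * alpha) for k in topics]
             for d, doc in enumerate(docs)]
    return theta, vocab, nkw

def top_documents(theta, topic, n):
    """Indices of the n documents with the highest share of the given topic."""
    return sorted(range(len(theta)), key=lambda d: theta[d][topic],
                  reverse=True)[:n]

# Hypothetical pre-tokenized tweets.
docs = [["flu", "vaccine", "shot"], ["flu", "vaccine"],
        ["5g", "tower", "plot"], ["tower", "plot", "5g"]]
theta, vocab, nkw = lda_gibbs(docs, num_topics=2, iters=50, seed=1)
print(top_documents(theta, topic=0, n=2))
```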

After reading the most representative tweets from each topic, the same annotators (AJ, AS) independently assigned a descriptive label to each topic. Labels were assessed as having high (nearly synonymous), moderate (significant overlap), or low (little overlap) agreement. The majority (77%) had high agreement; for instance, for topic 1, both annotators provided roughly synonymous labels: “How to protect yourself from coronavirus: washing hands, covering sneezes, etc.” and “How to protect yourself, and others, from the coronavirus.” In instances of moderate or low agreement, a third team member (DAB) was brought in, and the team discussed concerns until a unified topic label was agreed upon. For instance, topic 6 was ultimately labelled “disease and vaccine.” It included both pro-vaccine sentiment (e.g., “Flu vaccines won’t prevent coronavirus but are still necessary”) and anti-vaccine sentiment (e.g., “a COVID-19 vaccine is unnecessary”), as well as general comparisons of disease severity between COVID-19 and other diseases. Annotators agreed on the broader label to capture the overlap between the multiple perspectives reflected in the topic. The two topics with low agreement concerned specific responses in non-US locales (the Philippines and Hong Kong), where annotators may not have recognized place names.

Topics were also labelled with information categories. These included: “public health,” meaning content was largely retweets from official public health sources; “news,” meaning content was largely retweets of neutral news headlines (broadly defined); “discussion,” topics that included more original content from users as well as retweets of commentary; and “misinformation,” which included topics sharing conspiracy theories, unverified prevention and/or treatment options, and unverifiable rumors or insinuations.

Limitations

Topic models rely on probabilistic clustering algorithms to capture groups of words that tend to co-occur in a given set of messages. Tweets that are most representative of the underlying clusters are considered most relevant. By reading the full text of the most relevant tweets, annotators were generally able to determine the prevailing sentiment of a topic (e.g., disseminating misinformation vs. debunking misinformation). However, particularly among less relevant tweets, a topic may have included tweets that shared similar keywords but not similar sentiment. Because such tweets were less representative of their topic, they would likely have had minimal impact on our results; although this remains a limitation, its effect is further diluted in a dataset of this size.

We limited our analysis to English-language accounts and tweets but did not impose any geographic bounds. A recent study of the same period found that although English-language tweets accounted for only 34% of all tweets, they accounted for 58.7% of COVID-19 tweets (Singh et al., 2020), so English-language content made up the majority of the COVID-19 conversation on Twitter at the time.


Bibliography

Ahmed, W., Vidal-Alaball, J., Downing, J., & López Seguí, F. (2020). COVID-19 and the 5G conspiracy theory: Social network analysis of Twitter data. Journal of Medical Internet Research, 22(5), e19458. https://doi.org/10.2196/19458

Attwell, K., & Smith, D. T. (2017). Parenting as politics: Social identity theory and vaccine hesitant communities. International Journal of Health Governance, 22(3), 183–198. https://doi.org/10.1108/IJHG-03-2017-0008

Bridgman, A., Merkley, E., Loewen, P. J., Owen, T., Ruths, D., Teichmann, L., & Zhilin, O. (2020). The causes and consequences of covid-19 misperceptions: Understanding the role of news and social media. Harvard Kennedy School (HKS) Misinformation Review. https://doi.org/10.37016/mr-2020-028

Broniatowski, D. A., Jamison, A. M., Qi, S., AlKulaib, L., Chen, T., Benton, A., Quinn, S. C., & Dredze, M. (2018). Weaponized health communication: Twitter bots and Russian trolls amplify the vaccine debate. American Journal of Public Health, 108(10), 1378–1384.

CDC COVID-19 Response Team, Bialek, S., Boundy, E., Bowen, V., Chow, N., Cohn, A., Dowling, N., Ellington, S., Gierke, R., Hall, A., MacNeil, J., Patel, P., Peacock, G., Pilishvili, T., Razzaghi, H., Reed, N., Ritchey, M., & Sauber-Schatz, E. (2020). Severe Outcomes Among Patients with Coronavirus Disease 2019 (COVID-19)—United States, February 12–March 16, 2020. MMWR. Morbidity and Mortality Weekly Report, 69(12), 343–346. https://doi.org/10.15585/mmwr.mm6912e2

Dredze, M., Cheng, R., Paul, M. J., & Broniatowski, D. (2014). HealthTweets.org: A platform for public health surveillance using Twitter. Workshop at the 28th AAAI Conference on Artificial Intelligence, 593–596.

Eysenbach, G. (2020). How to fight an infodemic: The four pillars of infodemic management. Journal of Medical Internet Research, 22(6), e21820. https://doi.org/10.2196/21820

Frenkel, S., Decker, B., & Alba, D. (2020, May 21). How the ‘Plandemic’ movie and its falsehoods spread widely online. The New York Times. https://www.nytimes.com/2020/05/20/technology/plandemic-movie-youtube-facebook-coronavirus.html

Grinberg, N., Joseph, K., Friedland, L., Swire-Thompson, B., & Lazer, D. (2019). Fake news on Twitter during the 2016 US presidential election. Science, 363(6425), 374–378.

Jolley, D., & Douglas, K. M. (2014). The effects of anti-vaccine conspiracy theories on vaccination intentions. PLoS ONE, 9(2), e89177.

Larson, H. J. (2018). The biggest pandemic risk? Viral misinformation. Nature, 562, 309. https://doi.org/10.1038/d41586-018-07034-4

Liu, M., Caputi, T. L., Dredze, M., Kesselheim, A. S., & Ayers, J. W. (2020). Internet Searches for Unproven COVID-19 Therapies in the United States. JAMA Internal Medicine. https://doi.org/10.1001/jamainternmed.2020.1764

McCallum, A. K. (2002). MALLET: A machine learning for language toolkit. http://mallet.cs.umass.edu/about.php

Nguyen, T. (2020, April 13). How a pair of anti-vaccine activists sparked a #FireFauci furor. Politico. https://www.politico.com/news/2020/04/13/anti-vaccine-activists-fire-fauci-furor-185001

Pew Research Center. (2017). Vast majority of Americans say benefits of childhood vaccines outweigh risks. Pew Research Center. https://www.pewresearch.org/science/2017/02/02/vast-majority-of-americans-say-benefits-of-childhood-vaccines-outweigh-risks/

Reyna, V. F. (2020). A scientific theory of gist communication and misinformation resistance, with implications for health, education, and policy. Proceedings of the National Academy of Sciences.

Ritter, Z. (2020). Republicans Still Skeptical of COVID-19 Lethality. Gallup. https://news.gallup.com/poll/311408/republicans-skeptical-covid-lethality.aspx

Roberts, S. (2020, June 16). Who’s a bot? Who’s not? The New York Times. https://www.nytimes.com/2020/06/16/science/social-media-bots-kazemi.html

Singh, L., Bansal, S., Bode, L., Budak, C., Chi, G., Kawintiranon, K., Padden, C., Vanarsdall, R., Vraga, E., & Wang, Y. (2020). A first look at COVID-19 information and misinformation sharing on Twitter. arXiv:2003.13907 [cs]. http://arxiv.org/abs/2003.13907

Vaughan, E., & Tinker, T. (2009). Effective health risk communication about pandemic influenza for vulnerable populations. American Journal of Public Health, 99(S2), S324–S332. https://doi.org/10.2105/AJPH.2009.162537

Wakabayashi, D., Alba, D., & Tracy, M. (2020, April 17). Bill Gates, at odds with Trump on virus, becomes a right-wing target. The New York Times. https://www.nytimes.com/2020/04/17/technology/bill-gates-virus-conspiracy-theories.html

Wallach, H. M., Mimno, D. M., & McCallum, A. (2009). Rethinking LDA: Why priors matter. In Advances in Neural Information Processing Systems 22 (pp. 1973–1981).

Walter, D., Ophir, Y., & Jamieson, K. H. (2020). Russian Twitter accounts and the partisan polarization of vaccine discourse, 2015–2017. American Journal of Public Health, 110(5), 718–724. https://doi.org/10.2105/AJPH.2019.305564

Wilson, J. (2020, April 17). The rightwing groups behind wave of protests against Covid-19 restrictions. The Guardian. https://www.theguardian.com/world/2020/apr/17/far-right-coronavirus-protests-restrictions

Zadrozny, B., & Collins, B. (2020, May 7). As “#Plandemic” goes viral, those targeted by discredited scientist’s crusade warn of “dangerous” claims. NBC News. https://www.nbcnews.com/tech/tech-news/plandemic-goes-viral-those-targeted-discredited-scientist-s-crusade-warn-n1202361

Zarocostas, J. (2020). How to fight an infodemic. The Lancet, 395(10225), 676. https://doi.org/10.1016/S0140-6736(20)30461-X

Zhou, Y., Dredze, M., Broniatowski, D. A., & Adler, W. D. (2019). Gab: The alt-right social media platform. https://pdfs.semanticscholar.org/04f5/48097b166eefddef2815ccc83ca71ce09463.pdf

Funding

This research was supported by the National Institute of General Medical Sciences, National Institutes of Health (NIH; award 5R01GM114771).

Competing Interests

The authors report no conflicting interests.

Ethics

Our research project and protocols were reviewed and approved by the Homewood Institutional Review Board at Johns Hopkins University and were determined to be exempt from human subjects review (approval no. 2011123).

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

All materials needed to replicate this study are available via the Harvard Dataverse: https://doi.org/10.7910/DVN/9ICICY