Peer Reviewed

Conservatives are less accurate than liberals at recognizing false climate statements, and disinformation makes conservatives less discerning: Evidence from 12 countries


Competing hypotheses exist on how conservative political ideology is associated with susceptibility to misinformation. We performed a secondary analysis of responses from 1,721 participants from twelve countries in a study that investigated the effects of climate disinformation and six psychological interventions to protect participants against such disinformation. Participants were randomized to receive twenty real climate disinformation statements or to a passive control condition. All participants then evaluated a separate set of true and false climate-related statements, either supporting or aiming to delay climate action, in a truth discernment task. We found that conservative political ideology is selectively associated with increased misidentification of false statements aiming to delay climate action as true. These findings can be explained by a combination of expressive responding, partisanship bias, and motivated reasoning.

Research Questions

- How does political ideology influence people’s discernment of true and false statements about climate change and climate mitigation action?

- How does exposure to climate disinformation influence the impact of political ideology on truth discernment of true and false statements about climate change and climate mitigation actions?

Research note Summary

- We performed a secondary analysis of a data set (Spampatti, Hahnel et al., 2023) to study the effects of political ideology on climate truth discernment.

- There was strong evidence that participants with conservative political ideology selectively misidentify more false statements aiming to delay climate action as true.

- There was suggestive evidence that climate disinformation selectively lowered conservative participants’ discernment of true statements supporting climate action.

- These findings challenge current hypotheses about motivated reasoning and the bias of the right, and seem to reflect a combination of expressive responding and partisanship bias with (motivated) reasoning.

Implications

Despite the scientific consensus and the urgency of implementing climate change mitigation actions to limit loss of ecosystems, natural disasters, and forced migration (Pörtner et al., 2022; Romanello et al., 2023), climate objectives are not being reached (Richardson et al., 2023; Stoddard et al., 2021). Disinformation about climate science and action, spread by vested interests, is a main cause of this inertia (Hornsey & Lewandowsky, 2022; Oreskes & Conway, 2010; Pörtner et al., 2022).

Conservative political ideology is a prominent risk factor for susceptibility to misinformation and to skepticism about climate science (Ecker et al., 2022; Hornsey et al., 2018; van Bavel et al., 2021). Non-experimental evidence shows that people who endorse a conservative ideology are more frequently exposed to false information about climate change (Falkenberg et al., 2022), are more skeptical of climate change (Hornsey et al., 2018), and share misinformation more often (Guess et al., 2019; Nikolov et al., 2019). Conservatives may furthermore be resistant to behavioral interventions against misinformation (Pretus et al., 2023; Rathje et al., 2022).

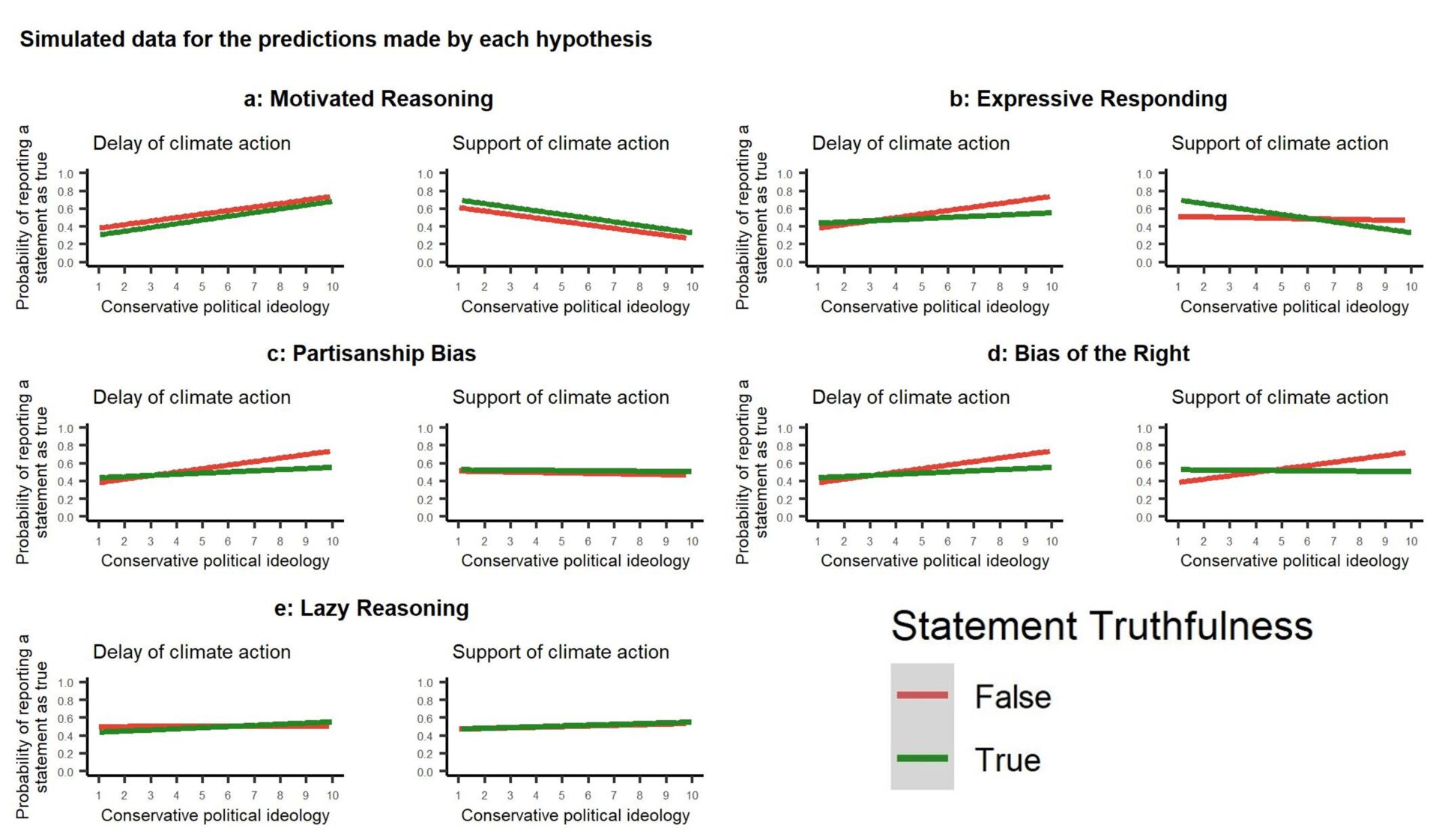

Different hypotheses have been proposed to describe the mechanisms behind conservatives’ misinformation susceptibility (Borukhson et al., 2022). Overall, these hypotheses refer to two dimensions of information that can affect the ability to discriminate between true and false information (i.e., truth discernment): 1) being true or false, and 2) being congruent or incongruent with a person’s political ideology. Crucially, each hypothesis leads to different predictions of how political ideology may influence truth discernment (see Figure 1 in Appendix B):

- Motivated reasoning hypothesis: People preferentially process information congruent with their own political ideology (Druckman & McGrath, 2019; Kunda, 1990). This suggests that conservatives (liberals) are more likely to rate statements congruent with their conservative (liberal) ideology as true and statements incongruent with their ideology as false. From this perspective, climate misinformation “fits” conservatives’ pre-existing belief network better (Hornsey et al., 2018; Jylhä & Akrami, 2015) and therefore receives less epistemic scrutiny during information processing.

- Expressive responding hypothesis: People agree with information that reflects the positions expressed in their political environment (Jerit & Zhao, 2020; Ross & Levy, 2023). This suggests that conservatives (liberals) are more likely to rate false (true) statements congruent with their ideology as true because such statements are more present in their information ecosystem (Falkenberg et al., 2022).

- Partisanship bias hypothesis: Whereas expressive responding predicts that people will agree with any political position prevalent in their political environment, the partisanship bias hypothesis proposes that people interpret information in a biased manner if it is aligned with a cherished ideological worldview or has been internalized into the identity of the political ingroup. As climate skepticism has become internalized in conservative political ideology and identity (Doell et al., 2021), conservatives may be more likely to agree with climate misinformation because it now reflects their ideology and political identity (van Bavel et al., 2021). The partisanship bias hypothesis thus suggests that conservatives are more likely than liberals to rate false statements congruent with their ideology as true.

- Bias of the right hypothesis: People who endorse a conservative ideology might be more susceptible to misinformation (Baron & Jost, 2019). This could be because conservatives’ political views more strongly affect their truth discernment (Jost et al., 2018) while they are less aware of this influence (Geers et al., 2024). This suggests that conservatives are more likely than liberals to rate false statements, both ideologically congruent and ideologically incongruent, as true.

- Lazy reasoning hypothesis: Misinformation susceptibility is primarily driven by lack of careful reasoning when encountering information (Pennycook & Rand, 2019). This suggests that conservatives and liberals are equally likely to rate both ideologically congruent and ideologically incongruent statements as true.

To understand how political ideology influences truth discernment of climate information and misinformation, we conducted a secondary analysis of a cross-cultural study on susceptibility to climate misinformation (Spampatti, Hahnel et al., 2023). In the original study, 1,721 participants from the United States, Canada, the United Kingdom, Ireland, Australia, New Zealand, Singapore, the Philippines, India, Pakistan, Nigeria, and South Africa had to discern whether twenty statements about climate change were true or false (climate truth discernment task; see Methods). The statements varied along two dimensions: 1) true versus false statements about climate change and climate mitigation action (see Table 1), and 2) statements congruent with conservative ideology, i.e., delaying climate action (Lamb et al., 2020), versus statements incongruent with conservative ideology, i.e., supporting climate action (Berkebile-Weinberg, Goldwert, et al., 2024; Hornsey et al., 2018). We tested how political ideology interacts with each dimension (true/false, ideologically congruent/incongruent) and with their combination when people judge the veracity of climate statements, and we compared the results with the predictions of the different hypotheses.

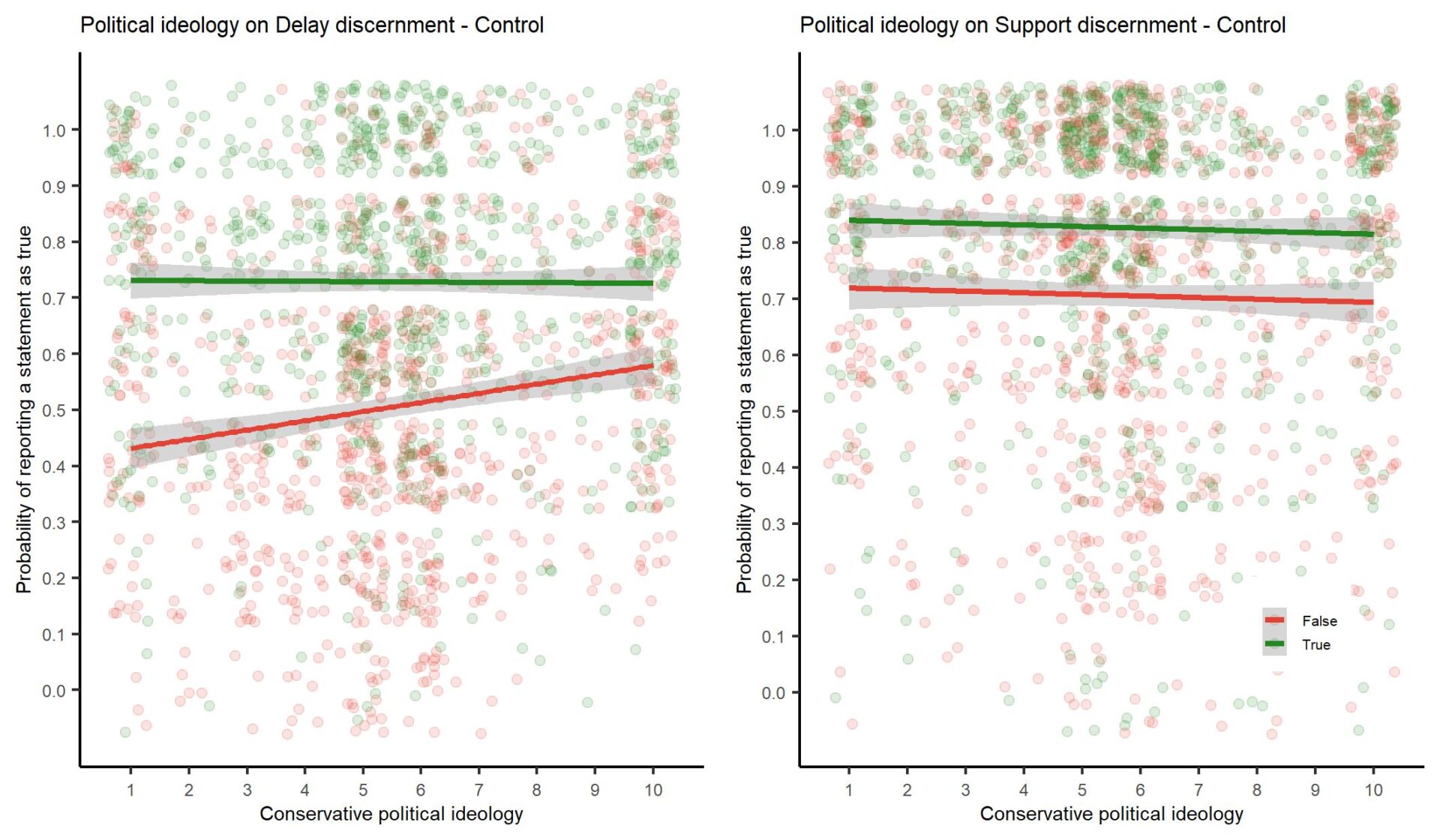

Conservatives were worse at recognizing false statements aiming to delay climate action as false (see Figure 2): the more conservative the participants, the more likely they were to evaluate these false, but ideologically congruent, statements as true. The effect of political ideology did not extend to evaluating true statements supporting climate action as false. Among the simulations, these findings best matched the visualization of the partisanship bias hypothesis (see Figure 1c and Figure 2): more conservative participants were more likely to endorse false, ideologically congruent statements about climate change, whereas their categorization of true, ideologically incongruent statements was not impaired and remained high, in line with previous findings (Pennycook et al., 2023). Alternatively, the data depicted in Figure 2 also matched the expressive responding simulation (see Figure 1b). Consistent with this hypothesis, conservative participants who received no disinformation (i.e., in the passive control condition) may have recognized both the false information aiming to delay climate action and the true information supporting climate action as true because both are present in their information environment (Effrosynidis et al., 2022; Falkenberg et al., 2022; Flamino et al., 2023; Lamb et al., 2020). The findings suggest neither that conservatives are more susceptible to disinformation (Baron & Jost, 2019) nor that people engage in motivated reasoning (Kunda, 1990), as evidenced by the largely non-overlapping slopes of the simulations (see Figure 1a–d) and the actual data (see Figure 2).

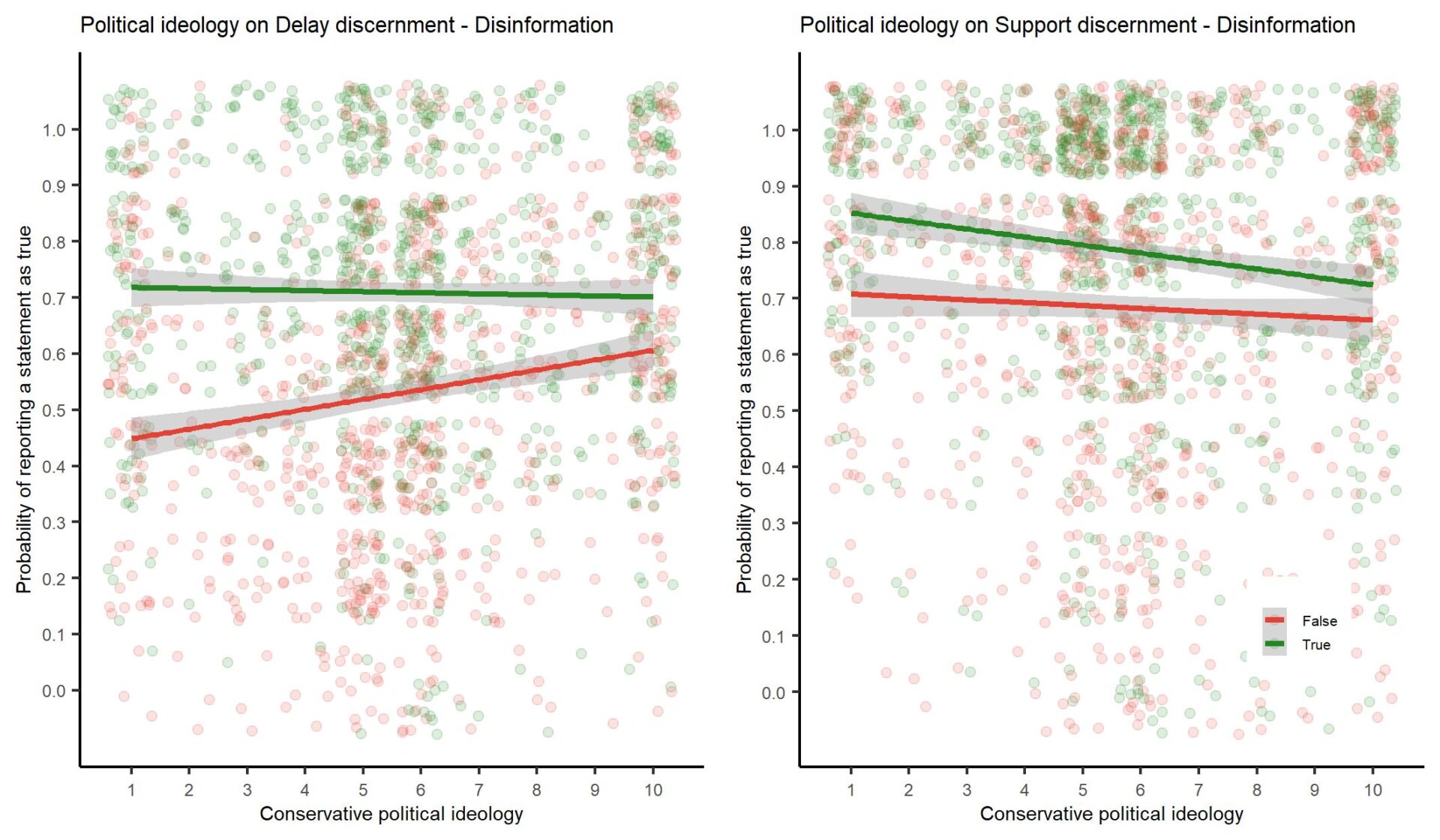

After reading twenty climate disinformation statements before completing the truth discernment task, conservative participants also became more likely to rate true statements supporting climate action as false (see Figure 3). This evidence was only suggestive, as the p-value fell between 0.05 and 0.005 (Benjamin et al., 2018). In other words, processing disinformation prompted conservative participants to engage in reasoning that led them to accept false statements delaying climate action while rejecting true statements supporting climate action. This reasoning was not fully motivated: if it were, truth ratings of false statements arguing for delay (i.e., ideologically congruent statements) should have been further impaired after exposure to disinformation (Kunda, 1990).
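
The evidence labels used in this note (strong versus suggestive) can be expressed as a simple rule built on the 0.005 threshold proposed by Benjamin et al. (2018). A minimal sketch; the function name is hypothetical, and calling p < .005 "strong" follows this note's own wording rather than a label prescribed by Benjamin et al.:

```python
def evidence_label(p: float) -> str:
    """Label a p-value using the 0.005 threshold of Benjamin et al. (2018)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    if p < 0.005:
        return "strong"           # meets the stricter 0.005 threshold
    if p < 0.05:
        return "suggestive"       # between 0.005 and 0.05
    return "not significant"      # p >= 0.05
```

For example, `evidence_label(0.01)` returns `"suggestive"`, which is how the disinformation-condition result above is classified.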

Overall, the findings suggest that conservatives can accurately recognize and categorize true information supporting climate action but inaccurately categorize ideologically congruent false statements. Their truth ratings of true information supporting climate action are impaired only when they are exposed to climate disinformation. Comparing these findings with the literature-derived hypotheses (i.e., visually comparing the slopes in Figure 1 with those in Figures 2 and 3) shows that truth discernment of climate statements is best explained by a mix of expressive responding (see Figure 1b), motivated reasoning (see Figure 1a), and partisanship bias (see Figure 1c).

Both findings also have practical implications. Although recent work calls for boosting true information (e.g., Acerbi et al., 2022), our results suggest that this strategy may suffer from a ceiling effect in the climate domain, because people across the ideological spectrum already recognize true information supporting climate action. Instead, conservatives more often misidentify misinformation delaying climate action as true, and their recognition of true information supporting climate action is more affected by disinformation. Redirecting conservatives away from information environments where false information delaying climate action is more prevalent (Falkenberg et al., 2022) and towards accurate climate communicators may reduce exposure to and belief in this type of misinformation (van Bavel et al., 2021). Fighting disinformation also remains important but requires the development of better interventions, tested against validated stimuli (Spampatti, Brosch et al., 2023) and tailored to conservative audiences (Pretus et al., 2023).

Findings

Finding 1: Conservatives are selectively susceptible to false statements arguing for the delay of climate action.

We analyzed data from the passive control condition with a multilevel model in which the number of statements categorized as true (following Maertens et al., 2023; see Figure B1 in Appendix B) was predicted by political ideology, statement veracity (true vs. false; factor), statement position (supporting vs. delaying climate action; factor), and their interactions. The model also included a random intercept per participant and a random intercept per country, with age and gender as covariates. The results were replicated using signal detection theory (see Appendix D).
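
To make the dependent variable concrete, the sketch below aggregates one participant's binary responses into the four veracity × position cells and, from the same counts, computes a signal detection sensitivity (d′) and response criterion (c) separately for supporting and delaying statements. It rests on assumptions not stated in the text: five statements per cell, "hits" defined as true statements rated true and "false alarms" as false statements rated true, and a log-linear correction for rates of 0 or 1. The function names are hypothetical, and this is not the authors' R code or the exact specification used in Appendix D.

```python
from collections import Counter
from statistics import NormalDist

CELLS = [(v, p) for v in ("true", "false") for p in ("support", "delay")]

def cell_counts(responses):
    """Count statements rated true in each veracity x position cell.

    responses: iterable of (veracity, position, rated_true) tuples, where
    veracity is "true"/"false", position is "support"/"delay" (climate
    action), and rated_true is the participant's binary judgment.
    """
    counts = Counter()
    for veracity, position, rated_true in responses:
        counts[(veracity, position)] += int(rated_true)
    return {cell: counts[cell] for cell in CELLS}  # zero for empty cells

def sdt(hits, n_signal, false_alarms, n_noise):
    """Sensitivity d' and criterion c with a log-linear correction (assumed)."""
    z = NormalDist().inv_cdf
    hit_rate = (hits + 0.5) / (n_signal + 1)        # true statements rated true
    fa_rate = (false_alarms + 0.5) / (n_noise + 1)  # false statements rated true
    d_prime = z(hit_rate) - z(fa_rate)
    criterion = -(z(hit_rate) + z(fa_rate)) / 2     # negative = leaning "true"
    return d_prime, criterion

# Hypothetical participant (not real data); five statements per cell assumed
example = (
    [("true", "support", True)] * 5
    + [("false", "support", False)] * 5
    + [("true", "delay", True)] * 5
    + [("false", "delay", True)] * 3
    + [("false", "delay", False)] * 2
)
counts = cell_counts(example)
d_support, c_support = sdt(counts[("true", "support")], 5, counts[("false", "support")], 5)
d_delay, c_delay = sdt(counts[("true", "delay")], 5, counts[("false", "delay")], 5)
```

In this made-up example, endorsing three of five false delay statements lowers d′ for delaying statements and shifts their criterion towards answering "true", while the supporting statements keep a neutral criterion.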

Crucially, the three-way interaction between political ideology and the two dimensions of climate statements was significant, F(1, 2604) = 9.1016, p < .001. Simple slopes within the four types of climate statements showed that the more conservative participants were, the more frequently they evaluated false statements delaying climate action as true, F-ratio = 20.176, p < .001 (see Figure 2). Political ideology did not influence evaluations of true statements delaying climate action (F-ratio = 0.400, p = .53), false statements supporting climate action (F-ratio = 1.739, p = .19), or true statements supporting climate action (F-ratio = 1.664, p = .20). Equivalence tests (Lakens, 2017) confirmed that the associations between political ideology and truth ratings of true statements supporting climate action, z(868) = 2.0684, p = .02, r = -0.03, 90% CI[-0.09, 0.03], true statements delaying climate action, z(868) = 2.7686, p = .003, r = -0.006, 90% CI[-0.06, 0.05], and false statements supporting climate action, z(868) = 2.1773, p = .015, r = -0.03, 90% CI[-0.08, 0.03], were small enough to be practically meaningless (significantly smaller than r = 0.1).
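
The equivalence tests can be illustrated with the two one-sided tests (TOST) procedure for correlations described by Lakens (2017), computed here via Fisher's z transformation with equivalence bounds of r = ±0.1. This is a sketch under assumptions: we read the number in parentheses (e.g., z(868)) as the sample size, the function names are hypothetical, and because the reported r values are rounded, outputs will only approximately match the values above. With r = -0.03 and n = 868, it gives z ≈ 2.07, p ≈ .02, and a 90% CI of about [-0.09, 0.03].

```python
import math
from statistics import NormalDist

_NORMAL = NormalDist()  # standard normal distribution

def tost_correlation(r, n, bound=0.1):
    """TOST equivalence test of H0: |rho| >= bound, using Fisher's z.

    Returns the z statistic and p-value of the less significant of the two
    one-sided tests; equivalence is declared if that p is below alpha.
    """
    se = 1 / math.sqrt(n - 3)
    z_r = math.atanh(r)
    # Test 1, H0: rho <= -bound (rejected when r lies clearly above -bound)
    z_low = (z_r - math.atanh(-bound)) / se
    p_low = 1 - _NORMAL.cdf(z_low)
    # Test 2, H0: rho >= +bound (rejected when r lies clearly below +bound)
    z_up = (z_r - math.atanh(bound)) / se
    p_up = _NORMAL.cdf(z_up)
    return (z_low, p_low) if p_low >= p_up else (z_up, p_up)

def fisher_ci(r, n, level=0.90):
    """Confidence interval for r via Fisher's z (90% pairs with TOST at alpha = .05)."""
    se = 1 / math.sqrt(n - 3)
    crit = _NORMAL.inv_cdf(1 - (1 - level) / 2)
    z_r = math.atanh(r)
    return math.tanh(z_r - crit * se), math.tanh(z_r + crit * se)

z, p = tost_correlation(-0.03, 868)
low, high = fisher_ci(-0.03, 868)
```

Reporting the weaker of the two one-sided tests is the usual TOST convention: equivalence holds only if both bounds are rejected.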

Finding 2: Climate disinformation only hampers conservatives’ ability to accurately evaluate true statements supporting climate action.

We analyzed data from the disinformation condition with the same multilevel model as for the passive control condition (see Figure B2 in Appendix B); the two conditions were analyzed separately to avoid testing the significance of, and interpreting, a four-way interaction. The three-way interaction between political ideology and the dimensions of climate information was not significant, F(1, 2559) = 9.1016, p = .08. Upon visual inspection of the data, and because three-way interactions are frequently underpowered (Baranger et al., 2023), we directly tested the association between political ideology and ratings of true statements supporting climate action. This revealed a significant negative correlation, z(853) = -4.1087, p < .001, r = -0.14, 95% CI[-0.21, -0.07]: more conservative participants were more likely to evaluate true statements supporting climate action as false. The association between political ideology and ratings of false statements delaying climate action was also significant, z(853) = 4.9128, p < .001, r = 0.17, 95% CI[0.10, 0.23], and an equivalence test suggested that this association was practically the same in the two experimental conditions (z = 0.0908, Δr = -0.01, p = .03). In the disinformation condition, an equivalence test confirmed that the association between political ideology and the number of true statements delaying climate action rated as true was small enough to be practically meaningless, z(853) = 2.3461, p = .009, r = -0.02, 90% CI[-0.08, 0.04]; for false statements supporting climate action rated as true, the equivalence test was borderline, z(868) = 1.6271, p = .052, r = -0.04, 90% CI[-0.10, 0.01].
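
The direct tests of association in the disinformation condition are consistent with a Fisher z test of a correlation against zero. The sketch below assumes that method (it is not the authors' R code), uses a hypothetical function name, and reads z(853) as a sample size of n = 853. With the rounded r = -0.14 it returns z ≈ -4.11, p < .001, and a 95% CI of roughly [-0.21, -0.07].

```python
import math
from statistics import NormalDist

def correlation_z_test(r, n, level=0.95):
    """Fisher z test of H0: rho = 0, with a confidence interval for r."""
    se = 1 / math.sqrt(n - 3)
    z_r = math.atanh(r)                      # Fisher's z transformation
    z = z_r / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))   # two-sided p-value
    crit = NormalDist().inv_cdf(1 - (1 - level) / 2)
    ci = (math.tanh(z_r - crit * se), math.tanh(z_r + crit * se))
    return z, p, ci

z, p, ci = correlation_z_test(-0.14, 853)
```

The interval is built on the z scale and transformed back with `tanh`, which keeps it inside [-1, 1] and makes it asymmetric around r.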

Methods

The experimental methods are described in full in Spampatti, Hahnel et al. (2023; open materials are available at https://osf.io/m58zx). After consenting, participants reported their demographics (gender, age, education), completed an individual differences measure (Cognitive Reflection Test, Version 2; Thomson & Oppenheimer, 2016), and indicated their political ideology on a single 10-point item, with these measures presented in random order. The single item stated: “Conservative/Right and Liberal/Left are terms that are frequently used to describe somebody’s political ideology. Please indicate in the following scale how you would place yourself in terms of your political ideology. 10-point scale: 1 = Extreme liberalism/left to 10 = Extreme conservativism/right.” A “two-strikes-you’re-out” attention check (“Please select ‘3’ to make sure you are paying attention”) triggered a warning and a time penalty on the first failure and was then presented a second time; participants who failed again were screened out as inattentive (n = 10). The remaining participants were randomly allocated to one of eight conditions, two of which are of interest here: the passive control condition and the disinformation condition. In the disinformation condition, participants read twenty real climate disinformation statements (in randomized order, each shown as a screenshot of an anonymous post with a 2-s time lock), taken from a validated set of climate disinformation statements (Spampatti, Brosch, et al., 2023; see Table A2 in Appendix A).
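
The screening and allocation steps described above can be sketched as follows. This is an illustration under stated assumptions: the labels for the six conditions other than the passive control and disinformation conditions are hypothetical placeholders, allocation is modelled as simple uniform randomization, the time penalty is not modelled, and the function names are hypothetical.

```python
import random

# Hypothetical labels: only the first two conditions are named in the text.
CONDITIONS = ["passive_control", "disinformation"] + [
    f"other_condition_{i}" for i in range(1, 7)
]

def attention_check(answers, correct=3):
    """'Two-strikes-you're-out' screening on successive answers to one item.

    A first miss triggers a warning (and, in the study, a time penalty that
    is not modelled here); a second miss screens the participant out. A first
    miss with no second answer is treated as excluded (assumption).
    """
    if answers[0] == correct:
        return "pass"
    if len(answers) > 1 and answers[1] == correct:
        return "pass_after_warning"
    return "excluded"

def allocate(rng):
    """Assign a retained participant to one of the eight conditions at random."""
    return rng.choice(CONDITIONS)

condition = allocate(random.Random(2023))  # fixed seed for a reproducible example
```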

Participants then responded to the climate change perceptions scale (van Valkengoed et al., 2021) and completed the Work for Environmental Protection Task (Lange & Dewitte, 2021), two dependent variables not of interest for this article, followed by the climate-related truth discernment task, inspired by a domain-general truth discernment task (Maertens et al., 2023). Participants categorized 20 climate-related statements as false or real (“Please categorize the following statements as either ‘False Statement’ or ‘Real Statement’”; binary choice: [Real]; [False]; item and response order randomized). All statements of the truth discernment task were generated with an AI tool (ChatGPT, Version 4), fact-checked, and unanimously selected by the authors. The statements were equally divided between true and false headlines and between statements supporting and statements delaying climate science and action (see Table 1). The survey duration was 28 minutes.
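
The randomized presentation of the truth discernment task can be sketched as follows, assuming that item order and the order of the two response options were shuffled independently for each participant and each item. The statement IDs and the function name are hypothetical placeholders (the actual statements are listed in Table 1), and this is not the survey platform's implementation.

```python
import random

def build_trials(statements, rng):
    """Randomize item order and the order of the two response options per item."""
    items = list(statements)
    rng.shuffle(items)
    trials = []
    for statement in items:
        options = ["Real", "False"]
        rng.shuffle(options)  # response order randomized per item (assumption)
        trials.append({"statement": statement, "options": options})
    return trials

# Placeholder IDs for the 20 statements
statement_ids = [f"S{i:02d}" for i in range(1, 21)]
trials = build_trials(statement_ids, random.Random(42))
```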


Bibliography

Acerbi, A., Altay, S., & Mercier, H. (2022). Research note: Fighting misinformation or fighting for information? Harvard Kennedy School (HKS) Misinformation Review, 3(1). https://doi.org/10.37016/mr-2020-87

Baranger, D. A. A., Finsaas, M. C., Goldstein, B. L., Vize, C. E., Lynam, D. R., & Olino, T. M. (2023). Tutorial: Power analyses for interaction effects in cross-sectional regressions. Advances in Methods and Practices in Psychological Science, 6(3). https://doi.org/10.1177/25152459231187531

Baron, J., & Jost, J. T. (2019). False equivalence: Are liberals and conservatives in the United States equally biased? Perspectives on Psychological Science, 14(2), 292–303. https://doi.org/10.1177/1745691618788876

Batailler, C., Brannon, S. M., Teas, P. E., & Gawronski, B. (2022). A signal detection approach to understanding the identification of fake news. Perspectives on Psychological Science, 17(1), 78–98. https://doi.org/10.1177/1745691620986135

Benjamin, D. J., Berger, J. O., Johannesson, M., Nosek, B. A., Wagenmakers, E.-J., Berk, R., Bollen, K. A., Brembs, B., Brown, L., Camerer, C., Cesarini, D., Chambers, C. D., Clyde, M., Cook, T. D., De Boeck, P., Dienes, Z., Dreber, A., Easwaran, K., Efferson, C., … Johnson, V. E. (2018). Redefine statistical significance. Nature Human Behaviour, 2, 6–10. https://doi.org/10.1038/s41562-017-0189-z

Berkebile-Weinberg, M., Goldwert, D., Doell, K., van Bavel, J. J., & Vlasceanu, M. (2024). The differential impact of climate interventions along the political divide. Nature Communications, 15(1), 3885. https://doi.org/10.1038/s41467-024-48112-8

Borukhson, D., Lorenz-Spreen, P., & Ragni, M. (2022). When does an individual accept misinformation? An extended investigation through cognitive modeling. Computational Brain & Behavior, 5(2), 244–260. https://doi.org/10.1007/s42113-022-00136-3

Doell, K. C., Pärnamets, P., Harris, E. A., Hackel, L. M., & van Bavel, J. J. (2021). Understanding the effects of partisan identity on climate change. Current Opinion in Behavioral Sciences, 42, 54–59. https://doi.org/10.1016/j.cobeha.2021.03.013

Druckman, J. N., & McGrath, M. C. (2019). The evidence for motivated reasoning in climate change preference formation. Nature Climate Change, 9(2), 111–119. https://doi.org/10.1038/s41558-018-0360-1

Ecker, U. K. H., Lewandowsky, S., Cook, J., Schmid, P., Fazio, L. K., Brashier, N., Kendeou, P., Vraga, E. K., & Amazeen, M. A. (2022). The psychological drivers of misinformation belief and its resistance to correction. Nature Reviews Psychology, 1(1), 13–29. https://doi.org/10.1038/s44159-021-00006-y

Effrosynidis, D., Karasakalidis, A. I., Sylaios, G., & Arampatzis, A. (2022). The climate change Twitter dataset. Expert Systems with Applications, 204, 117541. https://doi.org/10.1016/j.eswa.2022.117541

Falkenberg, M., Galeazzi, A., Torricelli, M., Di Marco, N., Larosa, F., Sas, M., Mekacher, A., Pearce, W., Zollo, F., Quattrociocchi, W., & Baronchelli, A. (2022). Growing polarization around climate change on social media. Nature Climate Change, 12(12), 1114–1121. https://doi.org/10.1038/s41558-022-01527-x

Flamino, J., Galeazzi, A., Feldman, S., Macy, M. W., Cross, B., Zhou, Z., Serafino, M., Bovet, A., Makse, H. A., & Szymanski, B. K. (2023). Political polarization of news media and influencers on Twitter in the 2016 and 2020 US presidential elections. Nature Human Behaviour, 7(6), 904–916. https://doi.org/10.1038/s41562-023-01550-8

Geers, M., Fischer, H., Lewandowsky, S., & Herzog, S. M. (2024). The political (a)symmetry of metacognitive insight into detecting misinformation. Journal of Experimental Psychology: General, 153(8), 1961–1972. https://doi.org/10.1037/xge0001600

Guess, A., Nagler, J., & Tucker, J. (2019). Less than you think: Prevalence and predictors of fake news dissemination on Facebook. Science Advances, 5(1). https://doi.org/10.1126/sciadv.aau4586

Guest, O., & Martin, A. E. (2021). How computational modeling can force theory building in psychological science. Perspectives on Psychological Science, 16(4), 789–802. https://doi.org/10.1177/1745691620970585

Hornsey, M. J., Harris, E. A., & Fielding, K. S. (2018). Relationships among conspiratorial beliefs, conservatism and climate scepticism across nations. Nature Climate Change, 8(7), 614–620. https://doi.org/10.1038/s41558-018-0157-2

Hornsey, M. J., & Lewandowsky, S. (2022). A toolkit for understanding and addressing climate scepticism. Nature Human Behaviour, 6(11), 1454–1464. https://doi.org/10.1038/s41562-022-01463-y

Jerit, J., & Zhao, Y. (2020). Political misinformation. Annual Review of Political Science, 23(1), 77–94. https://doi.org/10.1146/annurev-polisci-050718-032814

Jost, J. T., van der Linden, S., Panagopoulos, C., & Hardin, C. D. (2018). Ideological asymmetries in conformity, desire for shared reality, and the spread of misinformation. Current Opinion in Psychology, 23, 77–83. https://doi.org/10.1016/j.copsyc.2018.01.003

Jylhä, K. M., & Akrami, N. (2015). Social dominance orientation and climate change denial: The role of dominance and system justification. Personality and Individual Differences, 86, 108–111. https://doi.org/10.1016/j.paid.2015.05.041

Kunda, Z. (1990). The case for motivated reasoning. Psychological Bulletin, 108(3), 480–498. https://doi.org/10.1037/0033-2909.108.3.480

Lakens, D. (2017). Equivalence tests: A practical primer for t tests, correlations, and meta-analyses. Social Psychological and Personality Science, 8(4), 355–362. https://doi.org/10.1177/1948550617697177

Lamb, W. F., Mattioli, G., Levi, S., Roberts, J. T., Capstick, S., Creutzig, F., Minx, J. C., Müller-Hansen, F., Culhane, T., & Steinberger, J. K. (2020). Discourses of climate delay. Global Sustainability, 3, e17. https://doi.org/10.1017/sus.2020.13

Lange, F., & Dewitte, S. (2021). The Work for Environmental Protection Task: A consequential web-based procedure for studying pro-environmental behavior. Behavior Research Methods, 54(1), 133–145. https://doi.org/10.3758/s13428-021-01617-2

Maertens, R., Götz, F. M., Golino, H. F., Roozenbeek, J., Schneider, C. R., Kyrychenko, Y., Kerr, J. R., Stieger, S., McClanahan, W. P., Drabot, K., He, J., & van der Linden, S. (2023). The misinformation susceptibility test (MIST): A psychometrically validated measure of news veracity discernment. Behavior Research Methods, 56(3), 1863–1899. https://doi.org/10.3758/s13428-023-02124-2

Nikolov, D., Lalmas, M., Flammini, A., & Menczer, F. (2019). Quantifying biases in online information exposure. Journal of the Association for Information Science and Technology, 70(3), 218–229. https://doi.org/10.1002/asi.24121

Oreskes, N., & Conway, E. M. (2010). Merchants of doubt: How a handful of scientists obscured the truth on issues from tobacco smoke to global warming. Bloomsbury Publishing.

Pennycook, G., Bago, B., & McPhetres, J. (2023). Science beliefs, political ideology, and cognitive sophistication. Journal of Experimental Psychology: General, 152(1), 80–97. https://doi.org/10.1037/xge0001267

Pennycook, G., & Rand, D. G. (2019). Lazy, not biased: Susceptibility to partisan fake news is better explained by lack of reasoning than by motivated reasoning. Cognition, 188, 39–50. https://doi.org/10.1016/j.cognition.2018.06.011

Pörtner, H.-O., Roberts, D. C., Tignor, M., Poloczanska, E. S., Mintenbeck, K., Alegría, A., Craig, M., Langsdorf, S., Löschke, S., Möller, V., Okem, A., & Rama, B. (eds.) (2022). Climate Change 2022: Impacts, adaptation and vulnerability. Working Group II Contribution to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change. Intergovernmental Panel on Climate Change (IPCC). Cambridge University Press. https://doi.org/10.1017/9781009325844

Pretus, C., Servin-Barthet, C., Harris, E. A., Brady, W. J., Vilarroya, O., & van Bavel, J. J. (2023). The role of political devotion in sharing partisan misinformation and resistance to fact-checking. Journal of Experimental Psychology: General, 152(11), 3116–3134. https://doi.org/10.1037/xge0001436

Rathje, S., Roozenbeek, J., Traberg, C. S., van Bavel, J. J., & van der Linden, S. (2022). Letter to the editors of Psychological Science: Meta-analysis reveals that accuracy nudges have little to no effect for U.S. conservatives: Regarding Pennycook et al. (2020). PsyArXiv. https://doi.org/10.31234/osf.io/945na

Richardson, K., Steffen, W., Lucht, W., Bendtsen, J., Cornell, S. E., Donges, J. F., Drüke, M., Fetzer, I., Bala, G., von Bloh, W., Feulner, G., Fiedler, S., Gerten, D., Gleeson, T., Hofmann, M., Huiskamp, W., Kummu, M., Mohan, C., Nogués-Bravo, D., … Rockström, J. (2023). Earth beyond six of nine planetary boundaries. Science Advances, 9(37), eadh2458. https://doi.org/10.1126/sciadv.adh2458

Romanello, M., di Napoli, C., Green, C., Kennard, H., Lampard, P., Scamman, D., Walawender, M., Ali, Z., Ameli, N., Ayeb-Karlsson, S., Beggs, P. J., Belesova, K., Ford, L. B., Bowen, K., Cai, W., Callaghan, M., Campbell-Lendrum, D., Chambers, J., Cross, T. J., … Costello, A. (2023). The 2023 report of the Lancet Countdown on health and climate change: The imperative for a health-centred response in a world facing irreversible harms. The Lancet, 402(10419), 2346–2394. https://doi.org/10.1016/S0140-6736(23)01859-7

Ross, R. M., & Levy, N. (2023). Expressive responding in support of Donald Trump: An extended replication of Schaffner and Luks (2018). Collabra: Psychology, 9(1), 68054. https://doi.org/10.1525/collabra.68054

Spampatti, T., Brosch, T., Mumenthaler, C., & Hahnel, U. J. J. (2023). Blueprint of a smokescreen: Introducing the validated climate disinformation corpus for behavioral research on combating climate disinformation. PsyArXiv. https://doi.org/10.31234/osf.io/v7895

Spampatti, T., Hahnel, U. J. J., Trutnevyte, E., & Brosch, T. (2023). Psychological inoculation strategies to fight climate disinformation across 12 countries. Nature Human Behaviour, 8(2), 380–398. https://doi.org/10.1038/s41562-023-01736-0

Stoddard, I., Anderson, K., Capstick, S., Carton, W., Depledge, J., Facer, K., Gough, C., Hache, F., Hoolohan, C., Hultman, M., Hällström, N., Kartha, S., Klinsky, S., Kuchler, M., Lövbrand, E., Nasiritousi, N., Newell, P., Peters, G. P., Sokona, Y., … Williams, M. (2021). Three decades of climate mitigation: Why haven’t we bent the global emissions curve? Annual Review of Environment and Resources, 46(1), 653–689. https://doi.org/10.1146/annurev-environ-012220-011104

Thomson, K. S., & Oppenheimer, D. M. (2016). Investigating an alternate form of the cognitive reflection test. Judgment and Decision Making, 11, 99–113. https://doi.org/10.1017/S1930297500007622

van Bavel, J. J., Harris, E. A., Pärnamets, P., Rathje, S., Doell, K. C., & Tucker, J. A. (2021). Political psychology in the digital (mis)information age: A model of news belief and sharing. Social Issues and Policy Review, 15(1), 84–113. https://doi.org/10.1111/sipr.12077

van Valkengoed, A. M., Steg, L., & Perlaviciute, G. (2021). Development and validation of a climate change perceptions scale. Journal of Environmental Psychology, 76, 101652. https://doi.org/10.1016/j.jenvp.2021.101652

Funding

The study was funded by the Canton of Geneva and Services Industriels de Genève.

Competing Interests

The authors declare no competing interests.

Ethics

The study has been approved by the ethical commission of the University of Geneva. Participants explicitly provided informed consent. Participants were asked to self-identify in terms of their gender; the options given were “male,” “female,” “non-binary/other,” and “prefer not to disclose” (defined by the investigator). Gender self-identification was collected because it is a moderator of beliefs about climate change (Hornsey et al., 2016).

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

Data can be retrieved from the parent article (Spampatti, Hahnel et al., 2023). The R code necessary to reproduce our results is available via the Harvard Dataverse at https://doi.org/10.7910/DVN/NMFRU9.