Peer Reviewed

How trust in experts and media use affect acceptance of common anti-vaccination claims

Surveys of nearly 2,500 Americans, conducted during a measles outbreak, suggest that users of traditional media are less likely to be misinformed about vaccines than social media users. Results also suggest that an individual’s level of trust in medical experts affects the likelihood that a person’s beliefs about vaccination will change.

Research Questions

- How does the use of traditional media vs social media affect the belief in false information regarding vaccines in the US population?

- What is the relationship between trust in medical experts and acceptance or rejection of common anti-vaccination claims?

Essay Summary

- Drawing on evidence gathered in two periods in 2019 (i.e., February 28-March 25, 2019 and September 13-October 2, 2019) from a nationally representative survey panel of Americans, we studied how widely anti-vaccination claims are held, how they persist, and how they relate to an individual’s media consumption and levels of trust in medical experts.

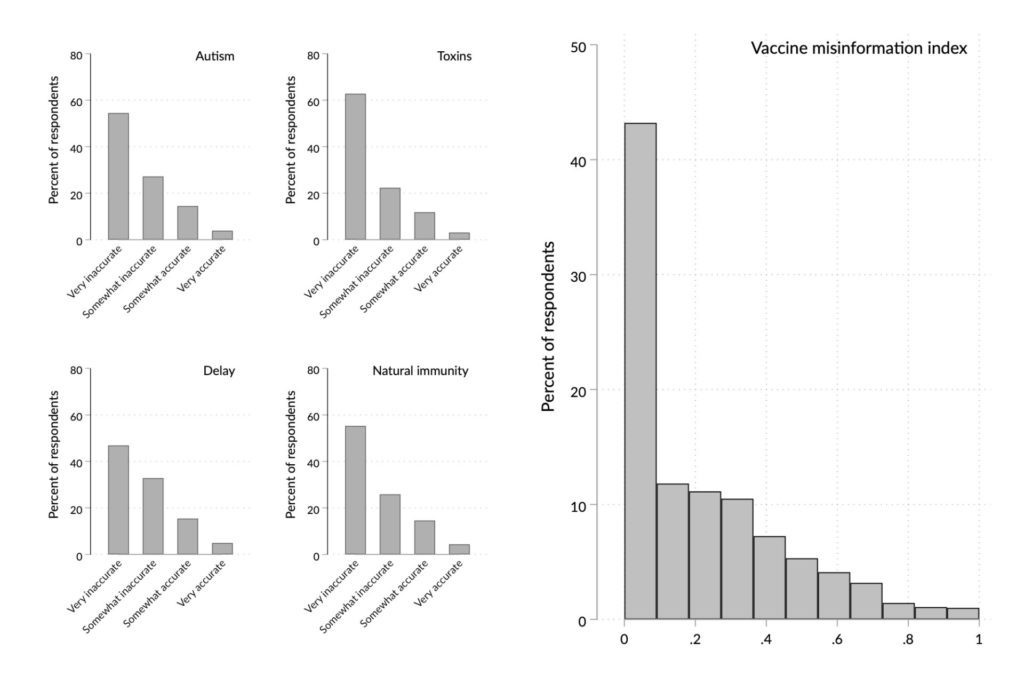

- We found that a relatively high number of individuals are at least somewhat misinformed about vaccines: 18% of our respondents mistakenly state that it is very or somewhat accurate to say that vaccines cause autism, 15% mistakenly agree that it is very or somewhat accurate to say that vaccines are full of toxins, 20% wrongly report that it is very or somewhat accurate to say it makes no difference whether parents choose to delay or spread out vaccines instead of relying on the official CDC vaccine schedule, and 19% incorrectly hold that it is very or somewhat accurate to say that it is better to develop immunity by getting the disease than by vaccination.

- In many cases, those who reported low trust in medical authorities were the same ones who believed vaccine misinformation (i.e., distrust of medical authorities is positively related to vaccine misinformation). Of note, this was true across different demographic groups and political beliefs.

- Not only was the percentage of individuals holding misinformed beliefs high, but mistaken beliefs also were remarkably persistent over a five-month period.

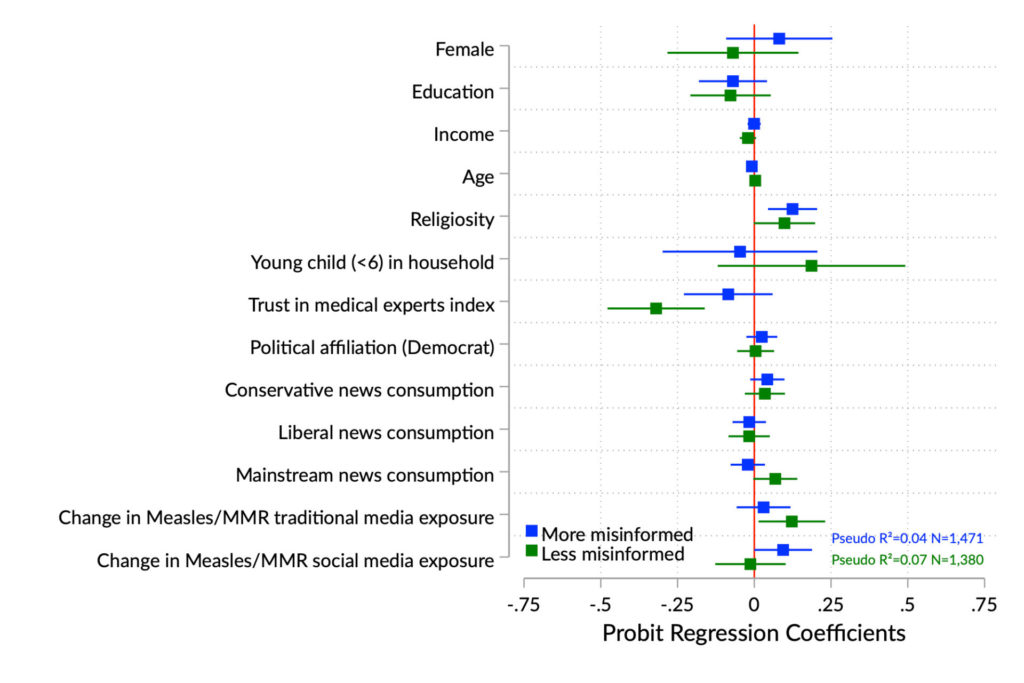

- Among those whose level of misinformation changed over time, the individuals who said that they were exposed to an increased amount of content about measles or the Measles, Mumps, and Rubella (MMR) vaccine on social media were more likely to have grown more misinformed.

- By contrast, those who reported having seen, read, or heard information on these topics in traditional media were more likely to respond accurately.

- This result underscores the value of efforts to educate the public through traditional sources and minimize exposure to vaccine misinformation on social media.

- Future work should move beyond establishing correlations to examine whether the relationships among trust in medical experts, media exposure, and vaccine misinformation are causal.

Implications

Even though our findings can only show correlations between exposure to media coverage and individual attitudes, rather than establishing that the former causes the latter, they nonetheless suggest implications relevant to several aspects of the vaccine misinformation phenomenon, and specifically about 1) the role of health professionals in preventing and addressing vaccine misinformation, 2) the effectiveness of coordinated national pro-vaccination campaigns, and 3) the impact of social media misinformation on vaccination intent and behavior.

We found that a potentially consequential number of US adults is misinformed about vaccines. Importantly, among them, low trust in medical experts coincides with believing vaccine misinformation. Since health experts are a source of trustworthy information about vaccination, the finding that those who are misinformed about vaccination are distrustful of these experts complicates their ability to serve as ambassadors to this group. However, research suggests that those who distrust expert sources may nonetheless accept the recommendations of their own doctor if that professional takes the time to listen and explain (Brenner et al., 2001) and presumes the value of vaccines (Dempsey & O’Leary, 2018). For example, a doctor’s use of interpersonal motivational interviewing (MI) has been shown to increase the likelihood that a patient will agree to vaccinate their child. Four principles ground MI: “1) expressing empathy toward clients, 2) developing discrepancy between their current and desired behaviors, 3) dealing with resistance without antagonizing, preserving effective communication, and allowing clients to explore their views, and 4) supporting self-efficacy (confidence in their ability to change)” (Gagneur et al., 2018). One problem with using an interpersonal strategy could be that those who are critical of vaccination may seek out physicians disposed to rationalize exemptions from it, a phenomenon that increases the importance of vaccination mandates (Abrevaya & Mulligan, 2011; Richwine, Dor, & Moghtaderi, 2019).

This study also shows the tenacity of vaccine misinformation. Most of those in our study were roughly as informed or misinformed in February and March as they were in September and October. During the intervening time, health experts at the National Institutes of Health (NIH) and Centers for Disease Control and Prevention (CDC) responded to the measles outbreak by appearing in both mainstream and social media to make the case for vaccination, while those hostile to immunization found a more hospitable venue online than in traditional venues (Bode & Vraga, 2015; McDonald, 2019; Zadrozny, 2019). However, since familiarity with a message increases perceptions that it is accurate (DiFonzo, Beckstead, Stupak, & Walders, 2016; Wang, Brashier, Wing, Marsh, & Cabeza, 2016), increasing the sheer amount of pro-vaccination content in media of all types may be of value over the longer term. So too may be efforts by the platforms to draw attention to material that contextualizes or corrects misinformation (cf., Facebook Media and Publisher Help Center, 2019).

Reducing exposure to deceptive information is of course a better way to protect the public from it than trying to counteract it after it is in place. Overall, our results suggest that the media on which one relies matter, with mainstream media exposure more beneficial and reliance on social media more problematic. These conclusions are consistent with ones showing that even brief exposure to websites that are critical of vaccination can affect attitudes (Betsch, Renkewitz, Betsch, & Ulshöfer, 2010). They also reinforce a March 2019 plea from the American Medical Association to the social media platforms that argued that “it is extremely important that people who are searching for information about vaccination have access to accurate, evidence-based information grounded in science” (Madara, 2019).

Finally, our findings underscore the importance of decisions by Facebook (Zadrozny, 2019), Twitter (Harvey, 2019), YouTube (Offit & Rath, 2019), and Pinterest (Caron, 2019; Chou, Oh, & Klein, 2018; Stampler, 2019) to reduce or block access to anti-vaccination misinformation. The complexity of these media systems means that changes, including those in algorithms, should be closely observed for unintended consequences of the sort that occurred when Facebook’s attempts to increase transparency led to the removal of ads promoting vaccination and communicating scientific findings (Jamison et al., 2019). Platform initiatives worthy of ongoing scrutiny include YouTube’s “surfacing [of] more authoritative content across [the] site for people searching for vaccination-related topics,” downgrading “recommendations of certain anti-vaccination videos,” as well as “showing information panels with more sources” to permit viewers to “fact check information for themselves” (Sarlin, 2019). These changes by social media companies in managing the information environment, at a time of abundant policy discussion on vaccination, also highlight the ongoing debates on the merits and limits of self-regulation by technology companies (Kreiss & McGregor, 2019).

Findings

We start with a fundamental set of questions investigating how common specific misconceptions about vaccination are (Smith, 2017), and specifically the false beliefs that:

- Vaccines contain toxins;

- Vaccines can be delayed without risk;

- “Natural immunity” through contraction of the disease is safer than vaccination;

- Vaccination causes autism.

Despite the Centers for Disease Control and Prevention’s (CDC) assurances about the safety of vaccines (Centers for Disease Control, 2019b), we find that a worrisome percentage of US adults subscribe to anti-vaccination tropes. Specifically, 18% of our respondents mistakenly state that it is very or somewhat accurate to say that vaccines cause autism, 15% mistakenly agree that it is very or somewhat accurate to say that vaccines are full of toxins, 20% inaccurately report that it is very or somewhat accurate to say it makes no difference whether parents choose to delay or spread out vaccines instead of relying on the official CDC vaccine schedule, and 19% inaccurately hold that it is very or somewhat accurate to say that it is better to develop immunity by getting the disease than by vaccination. (These percentages are from the April, 2019 survey. September percentages are, in order, 19%, 13%, 18%, and 22%.) Although most respondents do not embrace these erroneous beliefs, the percentage that is misinformed is sufficiently high to undercut community immunity, which for measles, for example, requires vaccination rates in excess of 90% (Fine, Eames, & Heymann, 2011; Oxford Vaccine Group, 2016).

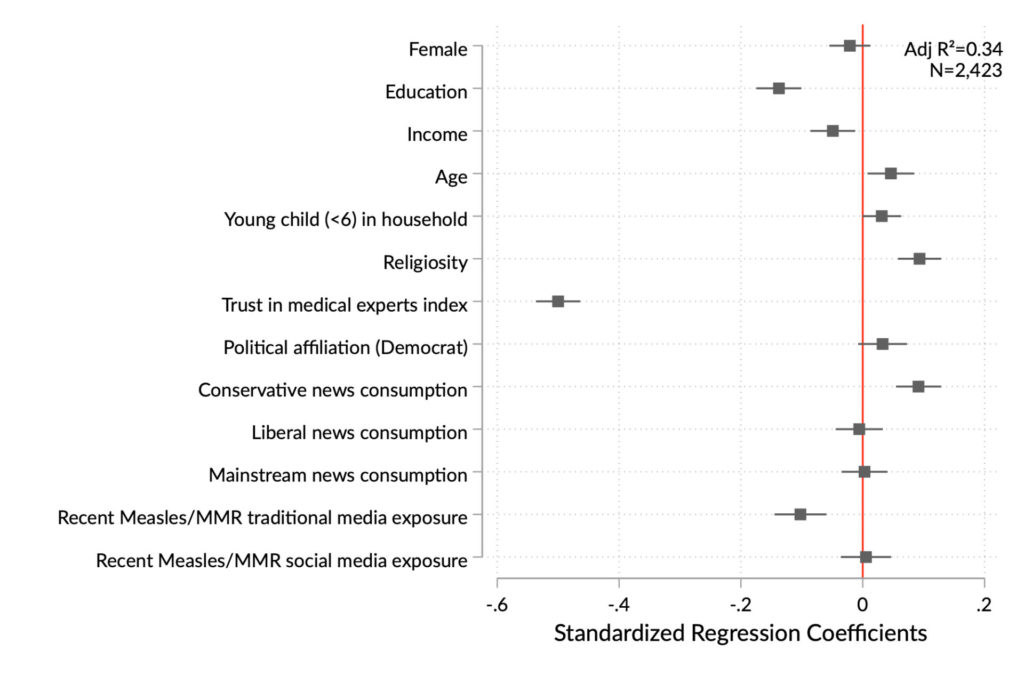

Not only is holding inaccurate beliefs about vaccines associated with distrust of medical experts, but low trust is a stronger predictor of being misinformed than education, income, age, religiosity, and conservative news media consumption. Of note, recent exposure to traditional media content about measles and MMR was associated with more accurate beliefs (see Figure 2).

To the experimental studies of misinformation persistence, our work adds information about whether and how a national sample’s level of vaccine misinformation changed over a five-month period in which the media were focusing on a measles outbreak. As meta-analyses confirm, correcting mistaken beliefs is difficult (Chan, Jones, Jamieson, & Albarracín, 2017; Walter & Murphy, 2018). Efforts to do so can potentially elicit backfire effects, strengthening inaccurate views (Pluviano, Watt, & Della Sala, 2017). Nonetheless, successful communication interventions do exist (Dixon, McKeever, Holton, Clarke, & Eosco, 2015).

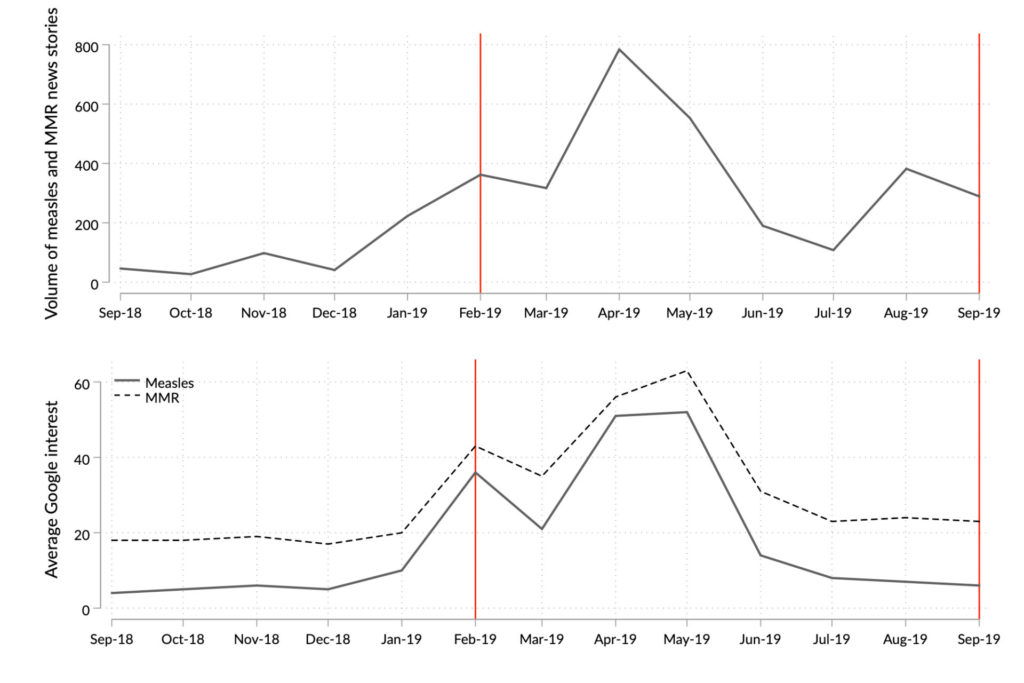

Unsurprisingly, then, vaccine misinformation proved resilient over time; most of those in the sample (81%) were as informed or misinformed in February/March as they were five months later (in September/October). Between these two periods, the director of the CDC called on “all healthcare providers to assure patients about the efficacy and safety of the measles vaccine” (Centers for Disease Control, 2019a) and news media extensively covered the largest measles outbreak in the US in over a quarter of a century. Figure 3 shows reporting on measles and the measles, mumps, and rubella vaccine (MMR) in the major news media outlets, as well as Google searches for the terms measles and MMR. One troubling finding in our study is that, during this period, among the 19% of our respondents whose level of vaccination misinformation changed in a substantive way (defined here as a change in misinformation level of more than one standard deviation), 64% were more misinformed in Fall than in Spring (N=238), while 36% were less so (N=132).
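The shares reported above follow directly from the two counts. As a quick arithmetic check (a sketch only; the variable names are illustrative and not taken from the study's analysis files):

```python
# Counts reported in the text for respondents whose misinformation level
# changed by more than one standard deviation between Spring and Fall.
more_misinformed = 238
less_misinformed = 132

changed = more_misinformed + less_misinformed  # respondents with a substantive change

# Share of the "changed" group moving in each direction, as whole percentages.
share_more = round(100 * more_misinformed / changed)
share_less = round(100 * less_misinformed / changed)

print(changed, share_more, share_less)
```

The two shares are computed within the group that changed, not within the full sample.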

Our examination of factors related to these changes in levels of misinformation revealed that, although those with greater trust in medical experts were less likely to hold mistaken beliefs to start with, their misconceptions largely persisted five months later. Although we cannot fully account for how much exposure a given individual had to the CDC publicity campaign and the related media coverage, this result suggests that five months of additional exposure to medical experts in the media failed to undercut their misperceptions.

However, when changes in a respondent’s level of misinformation did occur, media consumption patterns help explain whether that person would be more likely to move toward improved or reduced accuracy of information. As a person’s reported exposure to measles and MMR-related content on social media increased, so too did that individual’s level of vaccine misinformation. By contrast, as exposure to measles and MMR vaccine content in traditional media increased, one’s level of misinformation tended to decrease. This conclusion is consistent with research suggesting that social media contain a fair amount of misinformation about vaccination (Evrony & Caplan, 2017; Kata, 2010; Keelan, Pavri-Garcia, Tomlinson, & Wilson, 2007; Larson et al., 2016), while traditional media are more likely to reflect the scientific consensus on its benefits and safety (Merkley, 2019). Future research should focus on determining whether these associations are causal.

Methods

A random sample of nearly 2,500 US adults drawn from a national panel responded to questions at two different times: February 28-March 25, 2019, and September 13-October 2, 2019. The larger project in which this study was embedded included six interview periods. The results we report here come from the fourth and sixth waves, which are the times when vaccine misinformation was measured. For clarity, in this report, we refer to them as time 1 and 2. Information about the subjects’ age, race, gender, educational level, income, and other demographic factors not available from the panel vendor was gathered during an initial wave (September 21–October 6, 2018). The respondents’ media preferences, which gauged their consumption of conservative, liberal, and mainstream news, were measured during wave three (January 15–February 4, 2019). We have no reason to believe that the answers to these questions would have differed meaningfully had the questions been repeated in the waves on which we rely here.

Our research plans were approved by the local Institutional Review Board (see the “Ethics” section for more information). Our interviewees were part of the AmeriSpeak panel of the National Opinion Research Center (NORC) at the University of Chicago. That panel is a probability-based, nationally representative sample of American adults created using a two-stage stratified sampling frame that covers 97% of U.S. households. More details about the NORC sampling procedures are available at https://tessexperiments.org/NORC%20AmeriSpeak%20Information%20for%20IRBs%202016%2010%2018.pdf

The key outcome, vaccine misinformation, was measured using four items that make up common claims of the anti-vaccination movement:

- Vaccines given to children for diseases like measles, mumps, and rubella can cause neurological disorders like autism.

- Vaccines in general are full of toxins and harmful ingredients like “antifreeze.”

- It makes no difference if parents choose to delay or spread out vaccines instead of relying on the official CDC vaccine schedule.

- It is better to develop natural immunity by getting the disease than by receiving the vaccine.

Respondents rated each item on a four-point scale: very inaccurate, somewhat inaccurate, somewhat accurate, very accurate. The responses were then averaged into a continuous index, ranging from 0 to 1. The Cronbach’s alpha for the misinformation index was 0.79 at the time of the first interview and 0.75 at the second. The regression model in Figure 2 uses the data from the first interview (T1) because this constitutes respondents’ initial level of misinformation. Additionally, there are more respondents at the T1 stage. A replication of the model in Figure 2, in which we replace T1 misinformation levels with the T2 misinformation levels as the outcome variable, does not materially change the results. Since the distribution of misinformation at time 1 was skewed, we also ran the regression with the log-transformed version of the variable. It did not affect the results in a meaningful way.
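To make the index construction concrete, the following is a minimal sketch of how four item ratings could be averaged onto a 0 to 1 range and how Cronbach’s alpha is computed from item-level data. The numeric coding of the response options (0 through 3) and the function names are assumptions for illustration; the article does not state how the responses were scored or rescaled.

```python
from statistics import variance

# Assumed numeric coding of the four response options (not stated in the article).
RATING = {
    "very inaccurate": 0,
    "somewhat inaccurate": 1,
    "somewhat accurate": 2,
    "very accurate": 3,
}
MAX_RATING = 3


def misinformation_index(answers):
    """Average one respondent's item ratings and rescale the result to 0-1."""
    scores = [RATING[a] for a in answers]
    return sum(scores) / (len(scores) * MAX_RATING)


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(rows[0])
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(col) for col in zip(*rows)]
    # Sample variance of each respondent's summed score.
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Illustrative (made-up) responses, not study data.
example = ["very inaccurate", "very accurate", "very inaccurate", "very accurate"]
print(misinformation_index(example))
```

Higher index values correspond to rating more of the false claims as accurate, and alpha approaches 1 as the four items move together across respondents.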

The key predictors (i.e., independent variables) are trust in medical experts and media preferences. Trust in medical experts is an index of the respondent’s trust that the CDC and their primary care doctor will give them accurate information about the benefits and risks of vaccination. Cronbach’s alpha for the index is 0.77.

Media preferences were assessed using self-reported items asking about frequency of use of different media outlets on a six-point scale from none (0) to a lot (5). Mainstream sources were ABC News, CBS News, NBC News, or CNN. Liberal sources were MSNBC, Rachel Maddow, NPR, Bill Maher, or Huffington Post. Conservative sources were Fox News, Rush Limbaugh, The Drudge Report, or The Mark Levin Show.

MMR vaccine message exposure captured self-reported exposure to both traditional and social media. We asked respondents, at both T1 and T2, how often, in the past month, they had read, seen or heard information on the MMR vaccine and on measles in traditional media (such as newspapers, magazines, radio, or television), or content on social media (on platforms such as Facebook, Twitter, YouTube or Instagram). The four response options were never, rarely, sometimes, and often. We combined the questions about MMR and measles into a simple additive index. At time 1 the Cronbach’s alpha was 0.92 for traditional media and 0.95 for social media. At time 2, the Cronbach’s alpha was 0.94 for traditional media and 0.95 for social media. To measure the difference in exposure to the messages, we calculated a difference score, with a higher score indicating an increase in self-reported exposure to stories about measles and the MMR vaccine in traditional or social media from Spring (time 1) to Fall (time 2).
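The additive exposure index and the difference score described above can be sketched as follows. The 0 to 3 coding of the response options and the function names are assumptions made for illustration; the article does not report the numeric coding.

```python
# Assumed numeric coding of the four frequency options (not stated in the article).
FREQUENCY = {"never": 0, "rarely": 1, "sometimes": 2, "often": 3}


def exposure_index(measles_answer, mmr_answer):
    """Simple additive index: sum of the measles and MMR exposure items."""
    return FREQUENCY[measles_answer] + FREQUENCY[mmr_answer]


def exposure_change(index_t1, index_t2):
    """Difference score: positive values mean more reported exposure at time 2."""
    return index_t2 - index_t1


# Illustrative (made-up) respondent: social media exposure in Spring vs. Fall.
social_t1 = exposure_index("rarely", "never")
social_t2 = exposure_index("often", "often")
print(exposure_change(social_t1, social_t2))
```

The same two functions would be applied separately to the traditional media items and to the social media items.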

To measure change in vaccine misinformation, we subtracted the measurement in February/March from that in September/October. We coded the results into a categorical variable based on the magnitude of change. Since change scores clustered around 0, small changes are unlikely to be meaningful and more likely represent random error or measurement noise. To account for this measurement error and identify meaningful shifts in vaccine misinformation, we coded changes that fell more than one standard deviation below or above the no-change (zero) value. This resulted in two dummy variables, one for an increase and one for a decrease in vaccine misinformation, in which a score of 1 indicates a substantive change in that direction and a score of 0 indicates no change or no substantive change (values between -1 and +1 SD). This approach allows us to measure and predict vaccine misinformation stability and change by identifying those respondents who exhibited meaningful shifts. Figure 4 depicts coefficients from two models: one predicting those respondents who became substantially (more than one standard deviation) more misinformed (blue), and one predicting those who became substantially less so (green).
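The change coding described above can be sketched in a few lines. This is an illustration under stated assumptions rather than the authors’ code: it uses the sample standard deviation of the change scores as the threshold, and the function name and example values are hypothetical.

```python
from statistics import stdev


def code_change(t1_scores, t2_scores):
    """Return change scores plus two dummy variables (increase, decrease).

    A respondent is coded 1 on `increased` if the change exceeds +1 SD of the
    change scores, and 1 on `decreased` if it falls below -1 SD. Everything
    in between is treated as no substantive change (0 on both dummies).
    """
    changes = [t2 - t1 for t1, t2 in zip(t1_scores, t2_scores)]
    sd = stdev(changes)  # assumption: sample SD of the change scores
    increased = [1 if c > sd else 0 for c in changes]
    decreased = [1 if c < -sd else 0 for c in changes]
    return changes, increased, decreased


# Illustrative (made-up) misinformation index values on the 0-1 scale.
t1 = [0.5, 0.5, 0.5, 0.5, 0.5, 0.5]
t2 = [0.5, 0.5, 0.5, 0.5, 1.0, 0.0]
changes, increased, decreased = code_change(t1, t2)
print(increased, decreased)
```

Each dummy variable would then serve as the outcome in its own model, which matches the two sets of coefficients the text describes for Figure 4.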

Acknowledgments

We thank the APPC 2018-2019 ASK Group, which includes the survey design and administration team: Ozan Kuru, Ph.D., Dominik Stecula, Ph.D., Hang Lu, Ph.D., Yotam Ophir, Ph.D., Man-pui Sally Chan, Ph.D., Ken Winneg, Ph.D., Kathleen Hall Jamieson, Ph.D., and Dolores Albarracin, Ph.D.

Bibliography

Abrevaya, J., & Mulligan, K. (2011). Effectiveness of state-level vaccination mandates: Evidence from the varicella vaccine. Journal of Health Economics, 30(5), 966–976. https://doi.org/10.1016/J.JHEALECO.2011.06.003

Betsch, C., Renkewitz, F., Betsch, T., & Ulshöfer, C. (2010). The Influence of Vaccine-critical Websites on Perceiving Vaccination Risks. Journal of Health Psychology, 15(3), 446–455. https://doi.org/10.1177/1359105309353647

Bode, L., & Vraga, E. K. (2015). In Related News, That Was Wrong: The Correction of Misinformation Through Related Stories Functionality in Social Media. Journal of Communication, 65(4), 619–638. https://doi.org/10.1111/jcom.12166

Brenner, R. A., Simons-Morton, B. G., Bhaskar, B., Das, A., Clemens, J. D., & NIH-D.C. Initiative Immunization Working Group. (2001). Prevalence and Predictors of Immunization Among Inner-City Infants: A Birth Cohort Study. PEDIATRICS, 108(3), 661–670. https://doi.org/10.1542/peds.108.3.661

Caron, C. (2019). Facebook Announces Plan to Curb Vaccine Misinformation – The New York Times. Retrieved October 29, 2019, from https://www.nytimes.com/2019/03/07/technology/facebook-anti-vaccine-misinformation.html

Centers for Disease Control. (2019a). CDC Media Statement: Measles cases in the U.S. are highest since measles was eliminated in 2000 | CDC Online Newsroom | CDC. Retrieved December 9, 2019, from https://www.cdc.gov/media/releases/2019/s0424-highest-measles-cases-since-elimination.html

Centers for Disease Control. (2019b). Vaccine Safety | Vaccine Safety | CDC. Retrieved December 12, 2019, from https://www.cdc.gov/vaccinesafety/index.html

Chan, M. S., Jones, C. R., Jamieson, K. H., & Albarracín, D. (2017). Debunking: A Meta-Analysis of the Psychological Efficacy of Messages Countering Misinformation. Psychological Science, 28(11), 1531–1546. https://doi.org/10.1177/0956797617714579

Chou, W.-Y. S., Oh, A., & Klein, W. M. P. (2018). Addressing Health-Related Misinformation on Social Media. JAMA, 320(23), 2417. https://doi.org/10.1001/jama.2018.16865

Dempsey, A. F., & O’Leary, S. T. (2018). Human Papillomavirus Vaccination: Narrative Review of Studies on How Providers’ Vaccine Communication Affects Attitudes and Uptake. Academic Pediatrics, 18(2), S23–S27. https://doi.org/10.1016/j.acap.2017.09.001

DiFonzo, N., Beckstead, J. W., Stupak, N., & Walders, K. (2016). Validity judgments of rumors heard multiple times: the shape of the truth effect. Social Influence, 11(1), 22–39. https://doi.org/10.1080/15534510.2015.1137224

Dixon, G. N., McKeever, B. W., Holton, A. E., Clarke, C., & Eosco, G. (2015). The Power of a Picture: Overcoming Scientific Misinformation by Communicating Weight-of-Evidence Information with Visual Exemplars. Journal of Communication, 65(4), 639–659. https://doi.org/10.1111/jcom.12159

Evrony, A., & Caplan, A. (2017, June 3). The overlooked dangers of anti-vaccination groups’ social media presence. Human Vaccines and Immunotherapeutics. Taylor and Francis Inc. https://doi.org/10.1080/21645515.2017.1283467

Facebook Media and Publisher Help Center. (2019). Fact-Checking on Facebook: What Publishers Should Know. Retrieved December 11, 2019, from https://www.facebook.com/help/publisher/182222309230722

Fine, P., Eames, K., & Heymann, D. L. (2011). Herd Immunity: A Rough Guide. Clinical Infectious Diseases, 52(7), 911–916. https://doi.org/10.1093/cid/cir007

Gagneur, A., Lemaitre, T., Gosselin, V., Farrands, A., Carrier, N., Petit, G., … Wals, P. De. (2018). Promoting Vaccination at Birth Using Motivational Interviewing Techniques Improves Vaccine Intention: The PromoVac Strategy. Journal of Infectious Diseases & Therapy, 06(05), 1–7. https://doi.org/10.4172/2332-0877.1000379

Harvey, D. (2019). Helping you find reliable public health information on Twitter. Retrieved November 5, 2019, from https://blog.twitter.com/en_us/topics/company/2019/helping-you-find-reliable-public-health-information-on-twitter.html

Jamison, A. M., Broniatowski, D. A., Dredze, M., Wood-Doughty, Z., Khan, D., & Quinn, S. C. (2019). Vaccine-related advertising in the Facebook Ad Archive. Vaccine. https://doi.org/10.1016/J.VACCINE.2019.10.066

Kata, A. (2010). A postmodern Pandora’s box: Anti-vaccination misinformation on the Internet. Vaccine, 28(7), 1709–1716. https://doi.org/10.1016/J.VACCINE.2009.12.022

Keelan, J., Pavri-Garcia, V., Tomlinson, G., & Wilson, K. (2007). YouTube as a Source of Information on Immunization: A Content Analysis. JAMA, 298(21), 2481. https://doi.org/10.1001/jama.298.21.2482

Kreiss, D., & McGregor, S. C. (2019). The “Arbiters of What Our Voters See”: Facebook and Google’s Struggle with Policy, Process, and Enforcement around Political Advertising. Political Communication, 36(4), 499–522. https://doi.org/10.1080/10584609.2019.1619639

Larson, H. J., de Figueiredo, A., Xiahong, Z., Schulz, W. S., Verger, P., Johnston, I. G., … Jones, N. S. (2016). The State of Vaccine Confidence 2016: Global Insights Through a 67-Country Survey. EBioMedicine, 12, 295–301. https://doi.org/10.1016/j.ebiom.2016.08.042

Madara, J. L. (2019). AMA urges tech giants to combat vaccine misinformation | American Medical Association. Retrieved December 9, 2019, from https://www.ama-assn.org/press-center/press-releases/ama-urges-tech-giants-combat-vaccine-misinformation

McDonald, J. (2019). Instagram Post Falsely Links Flu Vaccine to Polio – FactCheck.org. Retrieved October 24, 2019, from https://www.factcheck.org/2019/10/instagram-post-falsely-links-flu-vaccine-to-polio/?platform=hootsuite

Merkley, E. (2019). Are Experts (News)Worthy? Balance, Conflict, and Mass Media Coverage of Expert Consensus. https://doi.org/10.31219/OSF.IO/S2AP8

Offit, P., & Rath, B. A. (2019). YouTube nixes advertising on anti-vaccine content. Retrieved December 9, 2019, from https://www.healio.com/pediatrics/vaccine-preventable-diseases/news/online/%7Bccd8ec33-5f46-42a8-93fa-9aefab6516be%7D/youtube-nixes-advertising-on-anti-vaccine-content

Oxford Vaccine Group. (2016). Herd Immunity: How does it work? — Oxford Vaccine Group. Retrieved October 29, 2019, from https://www.ovg.ox.ac.uk/news/herd-immunity-how-does-it-work

Pluviano, S., Watt, C., & Della Sala, S. (2017). Misinformation lingers in memory: Failure of three pro-vaccination strategies. PLOS ONE, 12(7), e0181640. https://doi.org/10.1371/journal.pone.0181640

Richwine, C., Dor, A., & Moghtaderi, A. (2019). Do Stricter Immunization Laws Improve Coverage? Evidence from the Repeal of Non-medical Exemptions for School Mandated Vaccines. Cambridge, MA. https://doi.org/10.3386/w25847

Sarlin, J. (2019). Amazon is full of anti-vaccine misinformation. Retrieved December 11, 2019, from https://www.cnn.com/2019/02/27/tech/amazon-anti-vaccine-books-movies/index.html

Smith, T. C. (2017). Vaccine Rejection and Hesitancy: A Review and Call to Action. Open Forum Infectious Diseases, 4(3). https://doi.org/10.1093/ofid/ofx146

Stampler, L. (2019). How Pinterest Is Going Further Than Facebook and Google to Quash Anti-Vaccination Misinformation. Retrieved November 5, 2019, from https://fortune.com/2019/02/20/how-pinterest-is-going-further-than-facebook-and-google-to-quash-anti-vaccination-misinformation/

Walter, N., & Murphy, S. T. (2018). How to unring the bell: A meta-analytic approach to correction of misinformation. Communication Monographs, 85(3), 423–441. https://doi.org/10.1080/03637751.2018.1467564

Wang, W.-C., Brashier, N. M., Wing, E. A., Marsh, E. J., & Cabeza, R. (2016). On Known Unknowns: Fluency and the Neural Mechanisms of Illusory Truth. Journal of Cognitive Neuroscience, 28(5), 739–746. https://doi.org/10.1162/jocn_a_00923

Zadrozny, B. (2019). Anti-vaccination groups still crowdfunding on Facebook despite crackdown. Retrieved October 24, 2019, from https://www.nbcnews.com/tech/internet/anti-vaccination-groups-still-crowdfunding-facebook-despite-crackdown-n1064981

Funding

The study was funded by the Science of Science Communication Endowment of the Annenberg Public Policy Center.

Competing Interests

No conflicts of interest to report.

Ethics

The research protocol employed was approved as exempt research by the University of Pennsylvania Institutional Review Board. Respondents’ self-reported gender was not measured. Respondents’ self-reported biological sex and ethnicity were provided by the survey research company NORC.