Peer Reviewed

Can WhatsApp benefit from debunked fact-checked stories to reduce misinformation?

WhatsApp was alleged to have been widely used to spread misinformation and propaganda during the 2018 elections in Brazil and the 2019 elections in India. Due to the private encrypted nature of the messages on WhatsApp, it is hard to track the dissemination of misinformation at scale. In this work, using public WhatsApp data from Brazil and India, we observe that misinformation has been largely shared on WhatsApp public groups even after it was already fact-checked by popular fact-checking agencies. This represents a significant portion of misinformation spread in both Brazil and India in the groups analyzed. We posit that the spread of such misinformation could be prevented if WhatsApp had a means to flag already fact-checked content. To this end, we propose an architecture that could be implemented by WhatsApp to counter such misinformation. Our proposal respects the current end-to-end encryption architecture on WhatsApp, thus protecting users’ privacy while providing an approach to detect misinformation that benefits from fact-checking efforts.

Research Questions

- To what extent is misinformation shared on WhatsApp after it has been debunked?

- How can WhatsApp benefit from using fact-checked content in the fight against misinformation?

Essay Summary

- We collected two large public datasets consisting of: (i) images from public WhatsApp groups during the recent elections in Brazil and India; and (ii) images labeled as misinformation by professional fact-checking agencies in these two countries. This data was used to perform a series of experiments to evaluate whether debunked fact-checked stories are shared on WhatsApp;

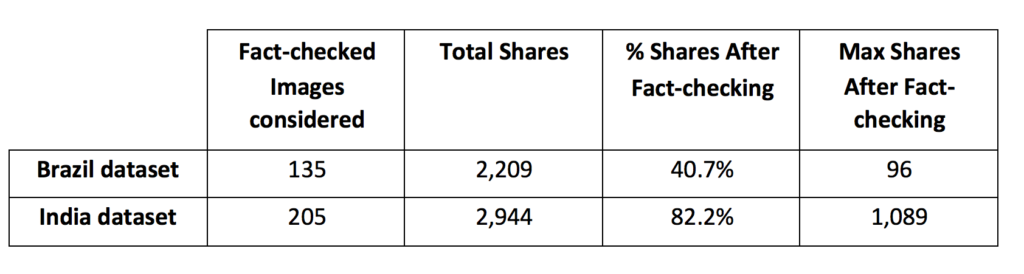

- By looking at the time when an image was shared on WhatsApp and when it was debunked by fact-checkers, we find that a non-negligible number of the fact-checked images were shared in our WhatsApp dataset even after they were marked as misinformation by a fact-checking organization. Looking at the number of shares of these fact-checked images in our dataset, we find that 40.7% and 82.2% of the shares containing misinformation in Brazil and India, respectively, were made after the content was debunked by the fact-checking agencies. This suggests that, if WhatsApp flagged the content as false at the time it was fact-checked, it could prevent a large fraction of shares of misinformation from occurring.

- Building on this idea of flagging content, we discuss a potential architecture change to WhatsApp that would allow it to benefit from fact-checked content to counter misinformation. Our proposal is based on on-device fact-checking, where WhatsApp can detect when a user shares content that has been previously labeled by fact-checkers as misinformation by using a digital fingerprint for that content. The potential architecture makes it possible for WhatsApp to protect users’ privacy, keep the end-to-end encryption, and detect misinformation in a timely fashion.

Implications

Social media platforms have dramatically changed how people consume and share news. An individual user can nowadays reach as many readers as traditional media outlets (Allcott & Gentzkow, 2017). Social communication around news is becoming more private as messaging apps continue to grow around the world. With over 2 billion users, WhatsApp is a primary network for discussing and sharing news in countries like Brazil and India, where smartphone use for news access is already much higher than that of other devices, including desktop computers and tablets (Newman et al., 2019). WhatsApp users send more than 55 billion messages a day, of which 4.5 billion are images (WhatsApp, 2017). Along with massive information flows, a large amount of misinformation is posted on WhatsApp without any moderation (Resende et al., 2019). Several works have already shown how misinformation has negatively affected the democratic discussion in some countries (Moreno et al., 2017; Resende et al., 2019) and even led to violent lynchings (Arun, 2019). Furthermore, during the COVID-19 pandemic, there has been an uptick in rumours and conspiracies spreading through online platforms (Ferrara, 2020). The International Fact-Checking Network found more than 3,500 false claims related to COVID-19 in less than two months (Poynter, 2020). WhatsApp has played a big role in the proliferation and spread of misinformation around the world (Delcker et al., 2020; Romm, 2020). Several high-profile examples, including state-backed misinformation campaigns on WhatsApp, have been observed recently (Brito, 2020). Unlike social platforms such as Twitter and Facebook, which can perform content moderation and hence take down offending content, the end-to-end encrypted (E2EE) structure of WhatsApp creates a very different scenario where the same approach is not possible. Only the users involved in the conversation have access to the content shared, shielding abusive content from being detected or removed. This obstacle has already led governments to propose breaking encryption as a way to enable moderation and law enforcement on platforms like WhatsApp (Sabbagh, 2019). The key challenge we tackle in this paper is to find a balance between fighting misinformation on WhatsApp and keeping it secure with end-to-end encryption.

We first assessed the role of data from professional fact-checking agencies in combating misinformation on WhatsApp. We measured this using data collected from both fact-checking agencies and public groups on WhatsApp during recent elections in Brazil and India. In our analysis, we found that a significant share of the misinformation in the groups we monitored was posted after the content had been labeled as misinformation by the fact-checking agencies: 40.7% of the shares in Brazil and 82.2% in India. This shows that, even though fact-checking efforts label content as misinformation, it is still being freely shared on the platform, indicating that public fact-checking alone does not block the spreading of misinformation on WhatsApp. This is a missed opportunity for WhatsApp, which could have prevented the further spread of already known misinformation.

Building upon this finding, we propose a moderation methodology that benefits from the observed potential of using fact-checking, in which WhatsApp can use hashes to automatically detect when a user shares images and videos that have previously been labeled as misinformation, without violating the privacy of the user or compromising the E2EE within the messaging service. This solution is similar to how Facebook flags content as misinformation, based on having hashes of previously fact-checked content. The proposal works entirely on the device of the user before the content is encrypted, thus making it tenable within the existing E2EE design on WhatsApp.

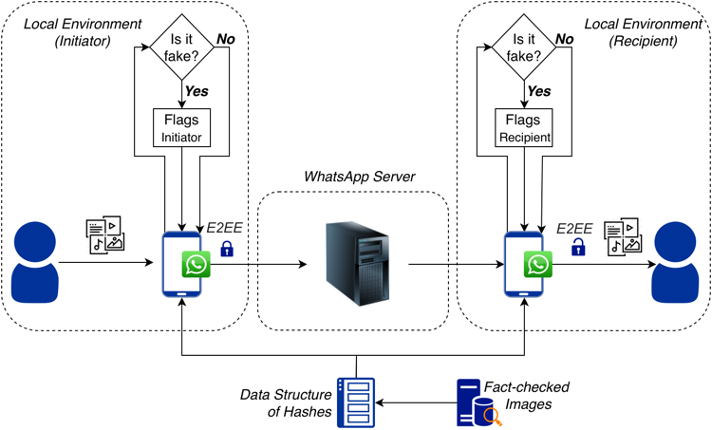

An overview of the potential architecture is shown in Figure 1 and can be explained in the following steps: (i) WhatsApp maintains a set of hashes of images which have been previously fact-checked, either from publicly available sources or through internal review processes. (ii) These hashes are shipped with the WhatsApp app and stored on the user’s phone. The set can be periodically updated based on images that Facebook’s moderators have been fact-checking on Facebook, which is much more openly accessible. It could be condensed and efficiently stored using existing probabilistic data structures like Bloom filters (Song et al., 2005). (iii) Once a user intends to send an image, WhatsApp checks whether its hash already exists in the hashed set on the user’s device. If so, a warning label could be applied to the content or further sharing could be denied. (iv) The message is encrypted and transferred through the usual E2EE methods. (v) When the recipient receives the message, WhatsApp decrypts the image on the phone, obtains a perceptual hash, and checks it against the hashed set on the receiver’s end. (vi) If the hash already exists there, the content is flagged on the receiver’s end. The flagging could be implemented as a warning shown to the user indicating that the image could be potential misinformation and providing information about where the image was fact-checked; in addition, it could prevent the image from being forwarded further.
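The on-device check in steps (ii) and (iii) can be illustrated with a minimal sketch. It is not WhatsApp's implementation: the `BloomFilter` class, its sizing parameters, and the `check_before_send` helper are hypothetical names introduced here, and SHA-256 is used only to derive bit positions inside the filter. Note also that a Bloom filter answers exact-membership queries only, so this sketch assumes the image hash is matched exactly; tolerating near-duplicate images would require an additional distance-based comparison.

```python
import hashlib
from typing import Iterator


class BloomFilter:
    """Small Bloom filter over byte strings (illustrative sizes, not tuned)."""

    def __init__(self, num_bits: int = 1 << 16, num_hashes: int = 4) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits // 8 + 1)

    def _positions(self, item: bytes) -> Iterator[int]:
        # Derive num_hashes bit positions by salting SHA-256 with the index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(bytes([i]) + item).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item: bytes) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, item: bytes) -> bool:
        # May return a false positive, never a false negative.
        return all(
            self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(item)
        )


def check_before_send(image_hash: bytes, known_misinfo: BloomFilter) -> str:
    """Step (iii): decide locally, before the message is encrypted and sent."""
    return "flag" if image_hash in known_misinfo else "send"


# Made-up 256-bit hashes standing in for hashes of fact-checked images.
known = BloomFilter()
known.add(bytes.fromhex("ab" * 32))
decision = check_before_send(bytes.fromhex("ab" * 32), known)  # "flag"
```

Because a Bloom filter can produce false positives, a deployment along these lines would presumably pair the filter with a confirmation step before showing a warning; that choice is outside what the text specifies.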

The strategy works to prevent coordinated disinformation campaigns that are particularly important during elections and other high profile national and international events (such as the COVID-19 crisis) but also stops basic misinformation, where a lack of awareness leads to spreading. For instance, we observed that roughly 15% of the images in our data were related to false health information. These are forwarded mostly with the assumption that they might help someone in case they are true. In some cases (e.g. the child kidnapping rumors (Arun, 2019)), such benign forwarding of misinformation leads to violence and killings.

The potential architecture requires changes in WhatsApp, as it introduces a new component that stores hashes on the phone and checks images against them. It provides high flexibility and the ability to detect near-similar images, hence increasing the coverage and effectiveness in countering misinformation. As previously mentioned, this architecture also fully abides by the current E2EE pipeline WhatsApp has, where WhatsApp does not have access to any content information. All the matching and intervention is done on the device without the need for any aggregate metadata in the message. Facebook, which owns WhatsApp, could optionally keep statistics of how many times a match occurred to establish the prevalence and virality of different types of misinformation and to collect statistics about users who repeatedly send such content. Note that similar designs have been proposed recently to inform policy decisions in light of governments requesting a backdoor in the encryption (Gupta & Taneja, 2018; Mayer, 2019).

It is important to mention that, while WhatsApp messages are encrypted in transit, the endpoint devices, such as smartphones and computers, do not offer encryption. In this sense, the potential architecture adds new components to the client and, with them, more potential for security breaches. Nevertheless, our idea is practical and deployable because detecting unlawful behavior is already an industry standard on social media platforms (Farid, 2018). For example, WhatsApp scans all unencrypted information on its network, such as user/group profile photos and group metadata, for unlawful content such as child pornography, drugs, etc. If flagged, these are manually verified (Constine, 2018) and the abusing accounts are banned. Our proposal extends the same methodology to the user’s device in order to enable private detection, and it could be easily integrated with the network of fact-checkers that Facebook already has up and running.

Guaranteeing the privacy of the users is as important as combating misinformation. In our understanding, both can coexist in the E2EE chat environment of WhatsApp. Hence, our objective is to provide a middle-ground solution that could satisfy those who demand action against the spread of misinformation on such platforms while also preserving the privacy of the content on the user’s phone before it is encrypted.

Most previous qualitative research on misinformation in the form of images on social media (Paris & Donovan, 2019; Brennen et al., 2020; Garimella & Eckles, 2020) has found that “cheap fakes” or old images reshared out of context are the most popular form of misinformation. Our work proposes the use of a database to maintain hashes (fingerprints) of such old images which were already fact-checked, and to use that database to prevent the spread of misinformation without breaking encryption on WhatsApp. Our work shows that a significant amount of shares of highly popular misinformation images could have been prevented had our proposal been applied during the Indian and Brazilian elections. The current debate on content moderation on WhatsApp has been stuck between two extremes: on the one hand, governments demanding that WhatsApp provide them with a backdoor, essentially breaking encryption, and on the other hand, WhatsApp claiming that any such moderation is impossible because of the end-to-end encryption design. This work provides a concrete suggestion to WhatsApp on what could be done to combat misinformation spreading on their platform. Since misinformation on WhatsApp has led to tragic real-life consequences like lynchings, social disorder, and threats to democratic processes, implementing such a system, even on a case-by-case basis (such as during elections or a pandemic), must be considered. This work also adds a counter-voice to calls for breaking encryption on WhatsApp by showing that a simple solution might go a long way in addressing the misinformation problem on WhatsApp. Since a majority of users never share viral misinformation, this design change, even when applied to all WhatsApp users, would be perceived by, and potentially affect, only a small set of users on WhatsApp, although it could still have a high impact in detecting and preventing the spread of misinformation.

It is important to mention that there might be multiple design choices for dealing with content that is detected as misinformation by WhatsApp (Nyhan & Reifler, 2010; Clayton et al., 2019), and user experience tests need to be conducted to investigate the best of them. For instance, WhatsApp could inform the user that the content was debunked but still allow it to spread, or it could prevent the content from being forwarded, block users, etc. This is a decision that WhatsApp should make, consulting previous research on the effects of flagging content as misinformation (Nyhan & Reifler, 2010; Clayton et al., 2019).

Findings

We identified images which were fact-checked to be false by popular fact-checking agencies in India and Brazil and analyzed the sharing patterns of these images on public WhatsApp groups. We found that 40.7% and 82.2% of the shares of these misinformation images from Brazil and India respectively, occurred after the images were debunked by fact-checking agencies. This indicates that the fact-checking efforts do not completely stop the spread of misinformation on WhatsApp. This provides an opportunity for WhatsApp to prevent misinformation from being shared on the app by using previously fact-checked data.

In order to assess the potential of previously fact-checked content to combat misinformation, our analysis requires WhatsApp messages containing misinformation along with information on their spread. Thus, we collected data from over 400 and 4,200 public groups from Brazil and India, respectively, dedicated to political discussions. We also performed an extensive collection of hundreds of images that were fact-checked by popular fact-checking agencies in India and Brazil. Details of this collection are provided in the Methods section.

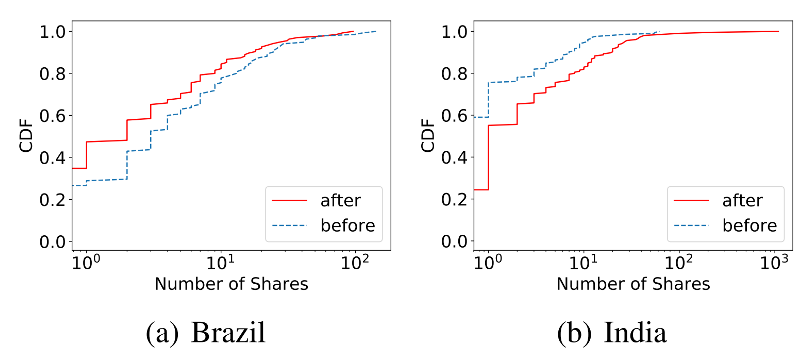

After matching the occurrences of misinformation labeled by professionals with those shared on WhatsApp, we evaluated the volume of misinformation shared in our sample after the time it was already debunked. To get estimates on these shares, we measured the occurrence of these fact-checked images in our WhatsApp data. This way, we are able to measure how many posts were made for each misinformation image before and after its first fact-check. Figure 2 shows the cumulative distribution function (CDF) of the number of shares made before and after the fact-check for each distinct misinformation image. We can observe in both countries that for the most broadly shared images there are as many posts before as after the checking date. Moreover, for India, there are more shares after checking than before, and there are even images with up to 1,000 shares after fact-checking, while the maximum number of shares before fact-checking does not exceed 100.

Figure 2. Cumulative distribution function (CDF) of the number of shares per image before/after fact-checking in both countries.

As shown in Table 1, summing all shares in our sampled data, we find that 40.7% of the misinformation image shares in Brazil and 82.2% of the shares in India happened after the content was already known to be false. (The figure for India drops to 71.7% if we remove the outlier image with the maximum number of shares, which was shared over 1,000 times.) We observe that a considerable number of images are still shared on WhatsApp, even though they were already publicly fact-checked. This demonstrates that fact-checking content on WhatsApp is, to a large extent, a one-sided effort and is not enough to combat the huge volume of misinformation shared on an encrypted, closed platform like WhatsApp.
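The aggregate measure reported here can be sketched as follows. This is an illustration under stated assumptions rather than the analysis code behind the paper: the function name, the record layout (image id plus share date), and the choice to count same-day shares as "before" are all hypothetical, and the example records are invented.

```python
from datetime import date
from typing import Dict, Iterable, Tuple


def fraction_shared_after_check(
    shares: Iterable[Tuple[str, date]],
    first_check: Dict[str, date],
) -> float:
    """Fraction of shares of fact-checked images posted after the first fact-check."""
    before = after = 0
    for image_id, shared_on in shares:
        checked_on = first_check.get(image_id)
        if checked_on is None:
            continue  # image was never fact-checked: outside this analysis
        if shared_on > checked_on:
            after += 1
        else:
            before += 1  # assumption: same-day shares count as "before"
    total = before + after
    return after / total if total else 0.0


# Invented toy records: image "a" is shared once before and twice after
# its fact-check date; image "b" has no fact-check and is ignored.
toy_shares = [
    ("a", date(2018, 10, 1)),
    ("a", date(2018, 10, 10)),
    ("a", date(2018, 10, 12)),
    ("b", date(2018, 10, 3)),
]
toy_checks = {"a": date(2018, 10, 5)}
toy_fraction = fraction_shared_after_check(toy_shares, toy_checks)  # 2 of 3 shares
```

Because the measure sums shares across images, a single very popular image can dominate it, which is why the sensitivity to the outlier image matters.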

Methods

Combating misinformation in messaging apps such as WhatsApp is a big challenge due to end-to-end encryption (E2EE). This is even more challenging in an infodemic, where we have been experiencing an avalanche of misinformation spreading on WhatsApp (Delcker et al., 2020). The main goal of this work is to investigate the impact of fact-checking in detecting misinformation in such systems and to propose an architecture capable of automatically finding and countering misinformation shared on WhatsApp and other systems protected with E2EE without violating users’ privacy.

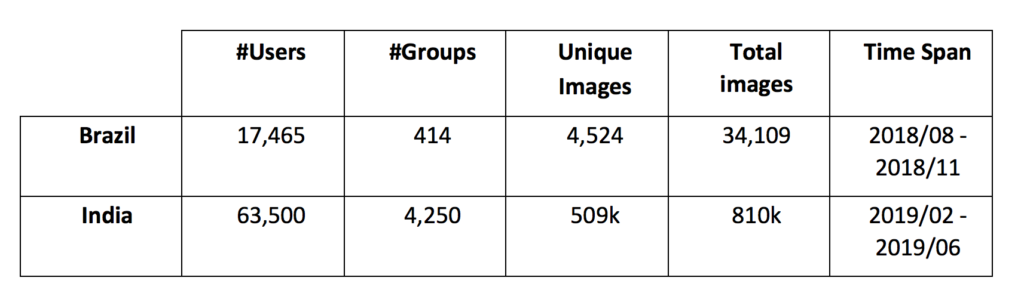

For this study, we need a large dataset from WhatsApp containing misinformation and a large dataset from fact-checkers identifying which content is fake. To obtain WhatsApp data, we used existing tools (Garimella & Tyson, 2018) to collect data from public WhatsApp groups (any WhatsApp group with a publicly available join link is considered a public group). We were specifically interested in monitoring WhatsApp conversations discussing politics in two of WhatsApp’s biggest markets: India and Brazil. We first obtained a list of publicly accessible WhatsApp groups by searching extensively for political parties and politicians in the two countries on Facebook and Google. We obtained over 400 and 4,200 groups from Brazil and India respectively, dedicated to political discussions. Note that even though we were thorough in our search, these groups at best represent a convenience sample of all WhatsApp groups. The period of data collection for both countries includes the respective national elections, each of which took place over a few weeks: from 7 to 28 October 2018 in Brazil and from 11 April to 19 May 2019 in India. We started our data collection 2 months before the start of the elections and stopped the collection 1 month after the election. Public groups discussing politics have been shown to be widely used in both these countries (Newman et al., 2019; Lokniti, 2018) and contain a large amount of misinformation (Resende et al., 2019; Melo et al., 2019). For this work, we chose to keep only messages containing images, which make up over 30% of the content in our dataset. The dataset overview and the total number of users, groups, and distinct images are described in Table 2. Note that the volume of content for India is ten times larger than that for Brazil.

Since most of WhatsApp is private and obtaining data is hard, public groups have become a common approach to evaluate information flows on WhatsApp (Garimella & Tyson, 2018; Resende et al., 2019; Melo et al., 2019). Although this represents a small portion of messages exchanged on all of WhatsApp, collecting WhatsApp data at scale is a challenge itself due to its closed nature. To the best of our knowledge, the datasets used in this study are the largest in understanding the spread of misinformation images on WhatsApp.

To collect a set of misinformation pieces that had already spread, we obtained images that were fact-checked in the past by fact-checking agencies in each country. First, we crawled all images which were fact-checked from popular fact-checking websites from Brazil and India. For each of these images, we also obtained the date when it was fact-checked. Second, we used Google reverse image search to check whether one of the main fact-checking domains was returned when searching for an image in our database. If so, we parsed the fact-checking page and automatically labeled the image as fake or true depending on how the image was tagged on the fact-checking page (Resende et al., 2019). Our final dataset of previously fact-checked images contains 135 images from Brazil and 205 images from India, which were shown to contain misinformation. It is important to highlight that many fact-checking agencies do not post the actual image that has been disseminated. Often only altered versions of the image are posted and other versions of the false story are omitted to avoid contributing to the spreading of misinformation. This leaves us with a small number of matches compared to the total number of fact-checked images we obtained, but one that is sufficient to properly investigate the feasibility of the potential architecture. Note that even though the set of fact-checked images was small, the fact that these images have been fact-checked means that they were popular and spread widely. Table 1 shows a summary of the fact-checked images and their activity in our dataset.

Next, we used a state-of-the-art perceptual hashing based image matching technique, PDQ hashing (PDQ Facebook, 2018), to look for occurrences of the fact-checked images in our WhatsApp data. The PDQ hashing algorithm is an improvement over the commonly used pHash (Zauner, 2010) and produces a 256-bit hash using a discrete cosine transform. PDQ is used by Facebook to detect similar content and is the best known state-of-the-art approach for clustering together similar images. The hashing algorithm can detect near-similar images, even if they were cropped differently or have small amounts of text overlaid on them. Finally, not all images which are fact-checked contain misinformation. To make sure our dataset was accurately built, we manually verified each image that appears in both the fact-checking websites and in the WhatsApp data.
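Matching with perceptual hashes is typically done by comparing hashes bit by bit and accepting pairs that differ in only a few positions. The sketch below illustrates that idea for 256-bit hashes encoded as 64 hexadecimal characters. It does not compute PDQ hashes itself, the function names are hypothetical, and the 31-bit threshold is an illustrative assumption rather than a value taken from this study.

```python
from typing import Dict, List


def hamming_distance(hash_a: str, hash_b: str) -> int:
    """Number of differing bits between two equal-length hex-encoded hashes."""
    if len(hash_a) != len(hash_b):
        raise ValueError("hashes must have the same length")
    return bin(int(hash_a, 16) ^ int(hash_b, 16)).count("1")


def find_matches(
    query_hash: str,
    fact_checked: Dict[str, str],
    max_distance: int = 31,  # assumed threshold, for illustration only
) -> List[str]:
    """Ids of fact-checked images whose hash is within max_distance bits."""
    return [
        image_id
        for image_id, known_hash in fact_checked.items()
        if hamming_distance(query_hash, known_hash) <= max_distance
    ]


# Made-up hashes: "near" differs from the query in one bit, "far" in all 256.
query = "f" * 64
catalog = {"near": "f" * 63 + "e", "far": "0" * 64}
matches = find_matches(query, catalog)  # ["near"]
```

A linear scan like this is adequate for a few hundred fact-checked images; a much larger catalog would call for an index built for Hamming-distance search.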

Our WhatsApp data originated only from public WhatsApp groups, and we emphasize that all sensitive information (i.e., group names and phone numbers) was anonymized in order to ensure the privacy of users. The dataset only consists of images that were publicly fact-checked and anonymized user/group information. Hence, to the best of our knowledge, it does not violate the WhatsApp terms of service.

Our methodology has the following limitations: (i) Labeling and implementing forwarding restrictions on already known fake images can only help to a certain degree. There is inconclusive evidence on whether labeling images as misinformation might backfire (Nyhan & Reifler, 2010; Levin, 2017; Wood & Porter, 2019); (ii) Our dataset of WhatsApp groups is not representative. However, since most WhatsApp data is private, any research or evaluation of a policy proposal must be done on the publicly available data from WhatsApp. So, even though our data is limited, it provides hints at sharing patterns of misinformation on WhatsApp. To date, this is the largest available sample of WhatsApp data; (iii) On a similar note, the images from fact-checking websites are also a biased sample. Notably, the fact that these images have been selected by a fact-checking agency to be debunked means that they were already popular on social media; (iv) Finally, our proposed solution is only one piece in solving the misinformation problem. It should not be considered a one-step solution to the problem of misinformation, as it also relies on fact-checking efforts by third-party experts.

Bibliography

Allcott, H., & Gentzkow, M. (2017). Social media and fake news in the 2016 election. Journal of Economic Perspectives, 31(2), 211-36. https://doi.org/10.1257/jep.31.2.211

Arun, C. (2019). On WhatsApp, Rumours, Lynchings, and the Indian Government. Economic & Political Weekly, 54(6), Forthcoming. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3336127

Brennen, J. S., Simon, F. M., Howard, P. N., & Nielsen, R. K. (2020). Types, sources, and claims of COVID-19 misinformation. Reuters Institute. https://reutersinstitute.politics.ox.ac.uk/node/4305

Brito, R. (2020). Death and denial in Brazil’s Amazon capital. The Washington Post. https://www.washingtonpost.com/world/the_americas/death-and-denial-in-brazils-amazon-capital/2020/05/26/2fb6bb36-9f06-11ea-be06-af5514ee0385_story.html

Clayton, K., Blair, S., Busam, J. A., Forstner, S., Glance, J., Green, G., Kawata, A., Kovvuri, A., Martin, J., Morgan, E., Sandhu, M., Sang, R., Scholz-Bright, R., Welch, A., Wolff, A., Zhou, A., & Nyhan, B. (2019). Real solutions for fake news? Measuring the effectiveness of general warnings and fact-check tags in reducing belief in false stories on social media. Political Behavior, 1-23. https://doi.org/10.1007/s11109-019-09533-0

Constine, J. (2018). Whatsapp has an encrypted child abuse problem – facebook fails to provide enough moderators. TechCrunch. https://techcrunch.com/2018/12/20/whatsapp-pornography/.

Delcker, J., Wanat, Z., & Scott, M. (2020). The coronavirus fake news pandemic sweeping WhatsApp. Politico Europe Edition. www.politico.eu/article/the-coronavirus-covid19-fake-news-pandemic-sweeping-whatsapp-misinformation/.

Farid, H. (2018). Reining in Online Abuses. Technology & Innovation, 19(3), 593-599. http://dx.doi.org/10.21300/19.3.2018.593

Ferrara, E. (2020). What types of COVID-19 conspiracies are populated by Twitter bots? First Monday, 25(6). https://doi.org/10.5210/fm.v25i6.10633

Garimella, K., & Tyson, G. (2018). Whatapp doc? A first look at whatsapp public group data. In Proceedings of the International AAAI Conference on Web and Social Media – ICWSM, 511-517. https://aaai.org/ocs/index.php/ICWSM/ICWSM18/paper/view/17865

Garimella, K., & Eckles, D. (2020). Images and misinformation in political groups: evidence from WhatsApp in India. The Harvard Kennedy School (HKS) Misinformation Review. https://doi.org/10.37016/mr-2020-030

Gupta, H. & Taneja, H. (2018). WhatsApp Has a Fake News Problem-That Can Be Fixed without Breaking Encryption. Columbia Journalism Review. www.cjr.org/tow_center/whatsapp-doesnt-have-to-break-encryption-to-beat-fake-news.php.

Levin, S. (2017). Facebook promised to tackle fake news. But the evidence shows it’s not working. The Guardian. https://www.theguardian.com/technology/2017/may/16/facebook-fake-news-tools-not-working

Lokniti, C. (2018). How widespread is Whatsapp’s usage in India? Livemint. https://www.livemint.com/Technology/O6DLmIibCCV5luEG9XuJWL/How-widespread-is-WhatsApps-usage-in-India.html.

Mayer, J. (2019). Content Moderation for End-to-End Encrypted Messaging. Princeton University. https://www.cs.princeton.edu/~jrmayer/papers/Content_Moderation_for_End-to-End_Encrypted_Messaging.pdf

Melo, P., Messias, J., Resende, G., Garimella, K., Almeida, J., & Benevenuto, F. (2019). WhatsApp monitor: a fact-checking system for WhatsApp. In Proceedings of the International AAAI Conference on Web and Social Media – ICWSM, 676-677. https://www.aaai.org/ojs/index.php/ICWSM/article/view/3271

Moreno, A., Garrison, P., & Bhat, K. (2017). Whatsapp for monitoring and response during critical events: Aggie in the ghana 2016 election. In Proceedings of the International Conference on Information Systems for Crisis Response and Management – ISCRAM. https://collections.unu.edu/view/UNU:6189

Newman, N., Fletcher, R., Kalogeropoulos, A., & Nielsen, R. (2019). Reuters institute digital news report 2019 (Vol. 2019). Reuters Institute for the Study of Journalism. https://reutersinstitute.politics.ox.ac.uk/sites/default/files/2019-06/DNR_2019_FINAL_0.pdf

Nyhan, B., & Reifler, J. (2010). When corrections fail: The persistence of political misperceptions. Political Behavior, 32(2), 303-330. https://doi.org/10.1007/s11109-010-9112-2

Paris, B., & Donovan, J. (2019). Deepfakes and Cheap Fakes. United States of America: Data & Society. https://datasociety.net/wp-content/uploads/2019/09/DS_Deepfakes_Cheap_FakesFinal-1.pdf

PDQ Facebook (2018). PDQ reference implementation: Java. GitHub repository, https://github.com/facebook/ThreatExchange/tree/master/hashing/pdq/java

Poynter (2020). Fighting the Infodemic: The #CoronaVirusFacts Alliance. Poynter Institute. https://www.poynter.org/coronavirusfactsalliance/

Reis, J. C. S., Melo, P., Garimella, K., Almeida, J. M., Eckles, D., & Benevenuto, F. (2020). A Dataset of Fact-Checked Images Shared on WhatsApp During the Brazilian and Indian Elections. In Proceedings of the International AAAI Conference on Web and Social Media – ICWSM, 903-908. https://aaai.org/ojs/index.php/ICWSM/article/view/7356

Resende, G., Melo, P., Sousa, H., Messias, J., Vasconcelos, M., Almeida, J., & Benevenuto, F. (2019). (Mis) Information Dissemination in WhatsApp: Gathering, Analyzing and Countermeasures. In Proceedings of The World Wide Web Conference – WWW, 818-828. https://doi.org/10.1145/3308558.3313688

Romm, T. (2020). Fake cures and other coronavirus conspiracy theories are flooding WhatsApp, leaving governments and users with a ‘sense of panic.’ The Washington Post. https://www.washingtonpost.com/technology/2020/03/02/whatsapp-coronavirus-misinformation/

Sabbagh, D. (2019). Calls for backdoor access to WhatsApp as Five Eyes nations meet. The Guardian. https://www.theguardian.com/uk-news/2019/jul/30/five-eyes-backdoor-access-whatsapp-encryption

Song, H., Dharmapurikar, S., Turner, J., & Lockwood, J. (2005). Fast hash table lookup using extended bloom filter: an aid to network processing. ACM SIGCOMM Computer Communication Review, 35(4), 181-192. https://doi.org/10.1145/1080091.1080114

WhatsApp (2017). Connecting a billion users every day. WhatsApp Blog. https://blog.whatsapp.com/connecting-one-billion-users-every-day.

Wood, T., & Porter, E. (2019). The elusive backfire effect: Mass attitudes’ steadfast factual adherence. Political Behavior, 41(1), 135-163. http://dx.doi.org/10.2139/ssrn.2819073

Zauner, C. (2010). Implementation and benchmarking of perceptual image hash functions. Master’s thesis, Upper Austria University of Applied Sciences. http://phash.org/docs/pubs/thesis_zauner.pdf

Funding

This research was partially supported by Ministério Público de Minas Gerais (MPMG), project Analytical Capabilities, as well as grants from FAPEMIG, CNPq, and CAPES. Kiran Garimella was funded by a Michael Hammer postdoctoral fellowship at MIT.

Competing Interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Ethics

This work does not involve any human subjects. The fact-checking data is publicly available and only includes highly shared images. WhatsApp data collection for India was approved by MIT’s Committee on the Use of Humans as Experimental Subjects, which included a waiver of informed consent.

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

All the data used in this paper is publicly available here: http://doi.org/10.5281/zenodo.3779157 (Reis et al., 2020).