Peer Reviewed

Community-based strategies for combating misinformation: Learning from a popular culture fandom


Through the lens of one of the fastest-growing international fandoms, this study explores everyday misinformation in the context of networked online environments. Findings show that fans experience a range of misinformation similar to what we see in political, health, or crisis contexts. However, the group's strong sense of community and shared purpose forms the basis for effective grassroots efforts and strategies to build collective resilience to misinformation. These efforts offer a model for combating misinformation that moves beyond the individual to incorporate shared community values and tactics.

Research Questions

- RQ1: What types of misinformation do fans encounter within the context of fandom?

- RQ2: What strategies do fans report employing to navigate or combat misinformation?

Essay Summary

- This study employs virtual ethnography and semi-structured interviews of 34 Twitter users from the ARMY fandom, a global fan community supporting the Korean music group BTS.

- A wide variety of mis-/disinformation is prevalent in the fandom context: similar to political, health, or disaster contexts, we observed forms of misinformation such as evidence collages, disinformation, playful misrepresentation, and perpetuating debunked content.

- The fandom's strong sense of community and shared goals shape how users use, share, and combat misinformation through a variety of strategies: intentional, playful use of misinformation as community-based humor; prominent disengagement strategies that actively discourage the discovery and amplification of misinformation; and strategies focused on community members’ mental health and wellness.

- The ARMY fandom exemplifies community-based, grassroots efforts to sustainably combat misinformation and build collective resilience to it at the community level, offering a model for others.

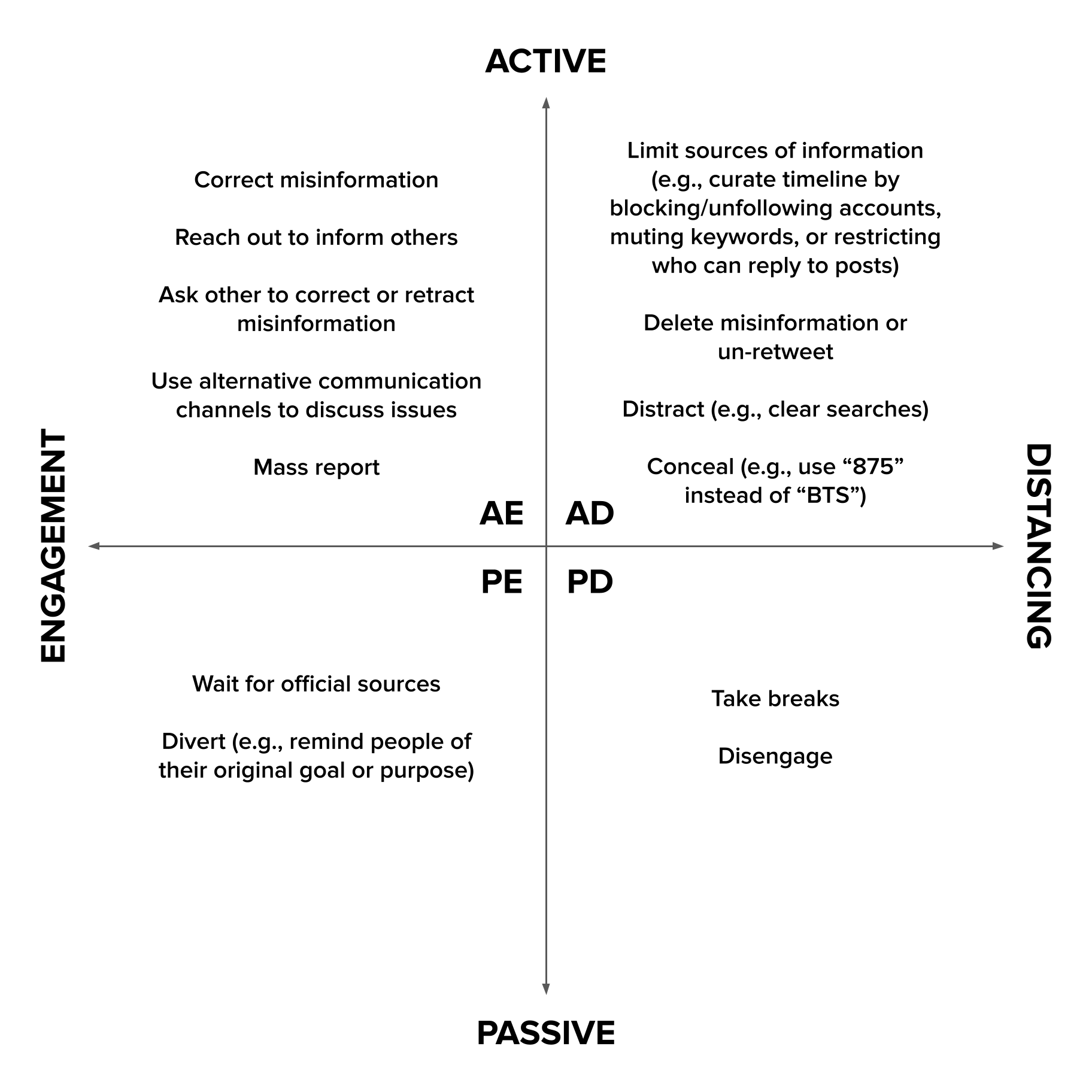

- The users’ strategies are organized in the Activation-Engagement framework, which future researchers and designers can use to 1) envision new media tools that scaffold a spectrum of engagement at the individual and community levels, and 2) study other social groups, as well as routine and non-routine contexts, to identify and compare which everyday misinformation strategies are employed and which are effective.

Implications

Misinformation is recognized as a critical challenge for society (Calo et al., 2021; O’Connor & Weatherall, 2019). Much of the growing body of empirical work addressing this challenge focuses exclusively on crisis or high-stress contexts such as natural disasters, terrorism, pandemics, or elections (Bail et al., 2020; Benkler et al., 2018; Starbird et al., 2020). These contexts, which are fertile ground for mis-/disinformation and leave us collectively vulnerable to significant societal harm, have led to notable advances in our understanding of the spread of information (Arif et al., 2018; Krafft & Donovan, 2020; Pennycook & Rand, 2021), individual factors related to information processing (Marwick, 2018; Yeo & McKasy, 2021), and the algorithmic tools used to identify and curb the spread of mis-/disinformation (Wu et al., 2019; Zhou et al., 2019). However, this scope limits our knowledge of how people encounter and deal with misinformation in their daily lives, when they are less likely to be making time-sensitive, emotionally charged, safety-critical, and/or rushed decisions.

Misinformation in everyday contexts is likely to be persistent and recurrent. Although the resulting harms might be less visible and acute, they are no less impactful. A better understanding of the role of misinformation in everyday online interactions is valuable because the strategies people develop there are also likely to be used when non-routine situations arise. Our study illustrates that fans’ experiences with misinformation offer one such example of community-based tactics for combating problematic information. Here, we follow Jenkins’ definition of fans as “individuals who maintain a passionate connection to popular media, assert their identity through their engagement with and mastery over its contents, and experience social affiliation around shared tastes and preferences” (2012). We study ARMY, the fandom of the music group BTS, which is one of the largest and most diverse music fandoms today (Lee, 2019; Lee & Nguyen, 2020) and is known for actively mobilizing to promote social good (Kanozia & Ganghariya, 2021; Park et al., 2021).

The fans in our study reported actively engaging with a variety of social media. Fans build community identity through shared interests and social support (Park et al., 2021), but they also find themselves constantly confronted with rumors, media reports, and unverified information that they are forced to question. Language translation across a global fanbase, algorithmic curation of strategically targeted content, and prominent false content designed to engage fans’ imagination and excitement further complicate their experience with misinformation.

Our work adds to the growing body of knowledge on misinformation by filling a gap in our understanding of misinformation in everyday, routine contexts: here, a popular culture fandom. Our results show not only that fans experience a wide variety of mis-/disinformation similar to that found in political, health, or disaster contexts (Lewandowsky & van der Linden, 2021; Phadke & Mitra, 2021; SEIP, 2021; Starbird et al., 2020), but also that fans report a variety of strategies for dealing with misinformation in the fandom. Notably, the tactics that individuals and groups employ in these settings developed over time from personal and social experiences and were learned from peers within the community. These tactics have proved effective for stemming the flow of problematic content while also increasing community resilience and well-being. Our work also complements existing work in fan studies on informal learning and transmedia literacy (e.g., Hills, 2003; Jenkins, 2006; Scolari et al., 2018) by providing insights into how people navigate a complex information space.

Misinformation strategies are shared among social groups through informal learning and mentoring. Groups formed around common identities and mutual interests have a notable interest in building and maintaining community values and group well-being through these activities. We propose the Activation-Engagement framework (Figure 1) to organize and compare, along two dimensions, the community-driven misinformation strategies that participants discussed. The Activation dimension captures the range of fan activities and behaviors based on timely and clear (in)action by the individual. The Engagement/Distancing dimension captures the extent to which these actions bring the individual into close engagement with the information versus attempts to distance oneself or others from it.

Participants used different combinations of approaches depending on the context. In some situations, participants stated that it was better to actively deal with misinformation because there would be immediate and/or harmful consequences for the fandom or artists (e.g., defamation). In other cases, they noted that it could be better to take a more passive stance, especially if the discussion consisted of baseless rumors or was intended to provoke fans into engaging.

Likewise, participants sometimes chose approaches that dealt directly with misinformation by engaging with and/or amplifying the issues (e.g., checking for official statements to verify the information) and at other times chose approaches that distanced them from the issues (e.g., no longer responding to stressful and triggering tweets). In active distancing, participants try to remove the information they do not want to see, whereas in passive distancing, they remove themselves from the information space. They also sometimes conceal information by using codes for certain keywords, or distract other users by flooding the space with other information (e.g., hashtag hijacking, clearing searches).

The strategies fans have developed, shared, and implemented paint a more holistic picture of how people deal with misinformation in their lives and highlight how people can exercise agency rather than simply being passive receivers of misinformation. Approaches developed and advocated by information professionals have tended to focus on evaluating the source and accuracy of information, but the strategies presented here highlight the role that users and social groups can play. Beyond evaluating a particular piece of information or its source, these approaches emphasize users’ active role in curating the information flow (Thorson & Wells, 2016): carefully selecting or blocking sources and agents, and adjusting the distance between themselves and problematic information to take care of their mental health. Furthermore, users try to control or positively influence the information flow by concealing information, using private channels to avoid unwanted attention from sensational news media, or sharing screenshots so that others need not access the source, which minimizes engagement with the content and reduces its visibility and spread. These are examples of users engaging with mis-/disinformation in ways that go beyond merely evaluating the information given to them. In a sense, fans are learning how media manipulation and disinformation occur in the current information ecosystem, a phenomenon well studied on 4chan and in other online subgroups (Marwick & Lewis, 2017), and are developing their own strategies for protecting themselves.

This framework could be used to expand our understanding of what “engagement” means as an individual navigates misinformation, beyond the forms of engagement commonly observed on social media. It can also be used to study and compare the strategies employed in different communities, as the characteristics and composition of user groups and their historical and cultural contexts could all affect which strategies work. This understanding can help media literacy programs build resilience to misinformation among different online communities in various contexts. Social media platforms could also be designed to value users’ agency in both active and passive engagement and to nudge users so that they do not always feel pressured toward active engagement, for instance, by reminding them to “take a break from a heated conversation.”

Fandoms offer a rich opportunity to further study the growing complexities of mis-/disinformation online and to provide new strategies to address them in an everyday context. Importantly, fandoms tend to be a supportive place of community, where individuals are invested in the success of others; the fandom operates more as a social group than a simple collection of individuals (Park et al., 2021). The fandom’s information work and currently employed tactics can be a source of inspiration for other groups, especially when an abundance of misinformation and sophisticated disinformation tactics cause feelings of cynicism and helplessness.

Evidence

The landscape of misinformation encountered in fandom

Most participants reported encountering some kind of misinformation—defined as “inaccurate, incorrect, or misleading” (Jack, 2017, p. 2) information—on Twitter and recalled a few cases where they retracted or corrected information they shared because it turned out to be false. Many considered the spread of misinformation to be a problem within the fandom (e.g., “information can sometimes come out so fast on Twitter…I think sometimes people will grab whatever they get and run with it before it even becomes proven or disproven” [P2]). Others discussed how being part of the fandom helped build stronger information literacy skills (e.g., “I learned how to be really informed about things, to research,” [P19]) and allowed them to become more aware of misinformation in mainstream media (e.g., “I would never have noticed before how controlling and xenophobic some media can be especially in the West,” [P2]). Fans’ reports of their experiences mirrored results in other disinformation domains (EIP, 2021).

Participants enumerated common types of misinformation in the fandom, including rumors about BTS members and misinterpretations arising from the translation of materials across multiple languages (P23). Cultural context and linguistic nuances were often lost in translation and in the sharing of reinterpretations (e.g., the difficulty of translating a member’s pun).

Evidence collages. In addition to factually inaccurate information, participants found cases of misleading information that was outdated, selectively drawn from different sources and contexts to support a particular narrative, or devoid of context (e.g., exposé threads that compile screen captures of past tweets in order to accuse someone of wrongdoing). Krafft and Donovan (2020) refer to such media artifacts, strategically constructed to aggregate positive evidence and support disinformation campaigns, as “evidence collages.”

For example, several participants mentioned that while fan-edited YouTube videos are helpful for learning about the group when entering the fandom, they can be misleading because they portray the members through contexts selected by the creator. P1 described how she was misled into believing that Big Hit (now HYBE, the entertainment company BTS belongs to) was exploiting BTS:

“For a while, you kind of believe it because they tailor evidence for their argument. They show you these videos where they’re exhausted, and they’re just falling out on the floor and Big Hit workers just moving past them and they only show you that small clip… And I kind of had to learn for myself and see the full clips later to say, ‘oh, the context was all off and BTS signed another seven-year contract.’” (P1)

Disinformation agents. The boundary between misinformation and disinformation was often blurry. Clear cases of disinformation (those with malicious intent) often originated from fan wars (i.e., conflicts with other fandoms). Several participants discussed people from other fandoms pretending to be members of ARMY and maliciously spreading false information. P3 reported hearing that some accounts were paid to spread malicious content. P13 shared:

“They pretend to be an ARMY and they try to join some voting group to get some detailed or secret message, like when will we start to vote, at which time, like a spy.”

Playful misrepresentation. Another type of misinformation arises from fans’ playful exaggeration or misrepresentation of members (e.g., “Yoongi is savage,” “Taehyung and Jungkook are in a relationship,” [P5]). Such content is often fabricated or manipulated, but with the intent of fostering positive engagement and community identity (Ringland et al., 2022). Some fans playfully engage with shipping content (in which fans imagine a romantic relationship between members), although not everyone believes it. P22 shared how people engage satirically with questions about BTS on Q&A sites like Quora, confusing new fans as well as people outside the fandom: “The people answering…would have weird answers and they were like, ‘I’m just kidding, I’m just kidding.’”

Perpetuating debunked content. Participants also discussed misinformation that had been debunked but was difficult to dislodge. One example is the belief that using emojis in a YouTube comment freezes the view count (P4). Such information was commonly shared in the comments sections of music videos, and we observed some ARMYs expressing frustration that it persisted despite multiple attempts to correct it. These “folk theories” of algorithmic curation are common across domains (DeVito et al., 2018; Karizat et al., 2021). The fandom context also illustrates recurrent misinformation narratives and the challenge of debunking content (Cacciatore, 2021; EIP, 2021; Mosleh et al., 2021).

Experiences and strategies for dealing with misinformation

Many participants actively engaged with misinformation to stop its spread, for example by countering it with links to evidence from credible sources or by clearing or flooding negative trends with other, positive keywords. When a post expressed a personal opinion, participants would try to engage in dialogue, either on the Twitter post or in private messages, to explain their perspective. At times, they reached out to journalists to correct misinformation in media coverage (e.g., P9, P20, P26, P27). They also disengaged from a conversation or an account if it could harm them emotionally. A few participants found that staying silent was better than drawing attention to false content by tweeting or retweeting about it, since asking people not to click on or engage with something may increase their curiosity (P7) and thus draw more engagement.

“It’s like, ‘Let’s clear the searches for Jin’ and when you see those posts with 100 likes, what’s the first thing you’re going to do? You’re definitely going to search what Jin thing is trending.” (P12)

Participants heavily tailored the content on their timeline using both precautionary and disengagement strategies. Precautionary strategies mentioned by participants included being cautious about whom to follow by going through people’s timelines and tweets. Over time, they developed specific criteria to decide whom to follow. Participants also mentioned muting certain keywords on Twitter as adding “another layer of safety” (P10). Being blocked by those whom they considered malicious actors was also treated by some as an accomplishment (P20).

Participants sometimes used more active disengagement strategies, such as blocking or unfollowing, after they encountered accounts that spread misinformation, views that participants disapproved of, and/or disturbing content. Muting was a soft-block strategy that shielded them in a less confrontational way (e.g., “…because I don’t want to hurt anybody’s feelings,” [P5]). P17 shared that they also muted phrases that often led to arguments, such as the names of other K-pop groups. Some participants talked about disengaging from information they perceived as an invasion of the artists’ privacy.

Overall, most participants mentioned being part of efforts to clarify or verify information with the common goal of helping each other become more “informed.” This form of care extended to members of the ARMY community. P11 characterized the fandom as “a very giving and sharing fandom, and it is very warm.” P6 reported believing that, as a collective group, ARMY can no longer be deceived and is not gullible.

Methods

This study employs virtual ethnography and semi-structured interviews, along with observation of social media data, to understand the types of misinformation in fan communities and the strategies fans adopt to combat it. Virtual ethnography is an approach to investigating people’s interactions and cultures in virtual worlds such as online communities (e.g., web-based discussion forums, group chats on messaging apps) (Boellstorff et al., 2012). It allows researchers to explore social interactions in virtual environments and gain a holistic understanding by immersing themselves in the research setting for extended periods of time (Beneito-Montagut, 2011; Given, 2008). For two to three years (2018/2019–2020), three authors embedded themselves in the ARMY Twitter space to gain basic insights into the culture and practices of the fandom. The ethnography allowed the researchers to ask relevant interview questions and better understand the context the interviewees described. The researchers discussed tweets and other online materials related to the spread of misinformation, conversations around misinformation, and relevant user strategies, and took field notes. Based on this work, we designed an initial interview protocol asking about ARMY’s prior experiences with misinformation in the fandom, their perceptions of information accuracy, practices for identifying trusted sources, ways of evaluating and sharing information, and strategies for addressing and countering mis-/disinformation. The protocol was piloted with three fans and iterated on before full deployment. Descriptive data about the social media profiles of fans, both interview participants and others, were collected using the Twitter API.

We recruited interviewees on Twitter through a prominent BTS research account (over 50K followers), as Twitter is one of the fandom’s primary social networking platforms. Thus, the research insights here primarily concern fans’ strategies on Twitter, and further research is needed to understand user behavior on other social media platforms. The recruitment posts were retweeted over 400 times and viewed over 60,000 times. In 2020, we asked interested fans aged 18 or older to fill out a screener survey requesting basic demographic information such as age, gender, race, and nationality. The survey also asked how long they had been a BTS fan, how many accounts they followed and how many followers they had, whether they had participated in collaborative fan efforts (e.g., donations, campaigns), and how they would describe their involvement in the fandom. The screener survey was completed by 652 respondents, of whom 34 were selected as interviewees to represent a variety of fans. The demographics of the 34 interviewees are included in the appendix. The interviews were conducted between July and September 2020, and most lasted between 90 and 120 minutes. Participants also provided their social media usernames, allowing researchers to collect account information using the Twitter API. Participants varied in their Twitter social network characteristics, with incoming ties (followers) ranging from just 5 to over 260K and outgoing ties (accounts followed) ranging from 32 to 5K. They also varied in activity level: some had posted over 350K tweets during their account tenure, while others had fewer than 20 total posts. The interview protocol and process were approved by the Institutional Review Board at the University of Washington.

We inductively analyzed the interview data through open coding (Corbin & Strauss, 2015) on a qualitative data analysis platform, Dedoose. To create the initial codebook, three researchers generated annotations capturing the concepts from the transcripts and notes. This was followed by affinity diagramming to organize annotations of similar themes, distilling them to a reduced set of codes, and discussing how each code should be applied. After two researchers independently coded four interview transcripts using the initial codebook, they met to compare the coded results and discuss any discrepancies observed to reach a consensus (Hill et al., 1997). The codes were iterated upon, and the final codebook consisted of 15 thematic categories covering the participants’ networking behavior on social media, participants’ information sharing behavior, characteristics of misinformation in fandom, and the strategies for addressing misinformation.

Using the final codebook, the first coder coded the entire data set. The coders wrote analytical memos on the higher-level themes. Researchers reviewed field notes taken during ethnographic observations to triangulate additional data related to the thematic areas. For instance, all the strategies presented in the framework were mentioned by the interviewees, and researchers confirmed that they commonly observed the use and recommendation of those strategies in the ARMY Twitter space. The findings discussed in this article draw on the codes for characteristics of misinformation and for strategies for addressing misinformation.


Bibliography

Arif, A., Stewart, L. G., & Starbird, K. (2018). Acting the part: Examining information operations within #BlackLivesMatter discourse. Proceedings of the ACM on Human-Computer Interaction, 2(CSCW), 1–27. https://doi.org/10.1145/3274289

Bail, C. A. (2016). Emotional feedback and the viral spread of social media messages about autism spectrum disorders. American Journal of Public Health, 106(7), 1173–1180. https://doi.org/10.2105/AJPH.2016.303181

Beneito-Montagut, R. (2011). Ethnography goes online: Towards a user-centred methodology to research interpersonal communication on the internet. Qualitative Research, 11(6), 716–735. https://doi.org/10.1177/1468794111413368

Benkler, Y., Faris, R., & Roberts, H. (2018). Network propaganda: Manipulation, disinformation, and radicalization in American politics. Oxford University Press. https://doi.org/10.1093/oso/9780190923624.001.0001

Boellstorff, T., Nardi, B., Pearce, C., & Taylor, T. L. (2012). Ethnography and virtual worlds: A handbook of method. Princeton University Press.

Bradshaw, S., & Howard, P. (2018). Challenging truth and trust: A global inventory of organized social media manipulation. The Computational Propaganda Project. https://issuu.com/disinfoportal/docs/challenging_truth_and_trust_a_globa

Cacciatore, M. A. (2021). Misinformation and public opinion of science and health: Approaches, findings, and future directions. Proceedings of the National Academy of Sciences, 118(15), e1912437117. https://doi.org/10.1073/pnas.1912437117

Calo, R., Coward, C., Spiro, E. S., Starbird, K., & West, J. D. (2021). How do you solve a problem like misinformation? Science Advances, 7(50), eabn0481. https://doi.org/10.1126/sciadv.abn0481

Corbin, J., & Strauss, A. (2015). Basics of qualitative research (4th ed.). SAGE.

DeVito, M. A., Birnholtz, J., Hancock, J. T., French, M., & Liu, S. (2018, April). How people form folk theories of social media feeds and what it means for how we study self-presentation. In Proceedings of the 2018 CHI conference on human factors in computing systems (pp. 1–12). Association for Computing Machinery. https://doi.org/10.1145/3173574.3173694

Election Integrity Partnership. (2021). The long fuse: Misinformation and the 2020 election. Stanford Digital Repository: Election Integrity Partnership. v1.3.0. https://purl.stanford.edu/tr171zs0069

Given, L. M. (2008). Virtual ethnography. In The SAGE Encyclopedia of Qualitative Research Methods (pp. 922–924). SAGE. https://dx.doi.org/10.4135/9781412963909.n484

Hill, C., Thompson, B., & Williams, E. (1997). A guide to conducting consensual qualitative research. The Counseling Psychologist, 25(4), 517–572. https://doi.org/10.1177/0011000097254001

Hills, M. (2003). Fan cultures. Taylor and Francis. https://doi.org/10.4324/9780203361337

Jack, C. (2017). Lexicon of lies: Terms for problematic information. Data & Society Research Institute. https://datasociety.net/wp-content/uploads/2017/08/DataAndSociety_LexiconofLies.pdf

Jenkins, H. (2006). Convergence culture: Where old and new media collide. New York University Press.

Jenkins, H. (2012). Fan studies. Oxford Bibliographies. https://doi.org/10.1093/obo/9780199791286-0027

Kanozia, & Ganghariya, G. (2021). More than K-pop fans: BTS fandom and activism amid COVID-19 outbreak. Media Asia, 48(4), 338–345. https://doi.org/10.1080/01296612.2021.1944542

Karizat, N., Delmonaco, D., Eslami, M., & Andalibi, N. (2021). Algorithmic folk theories and identity: How TikTok users co-produce knowledge of identity and engage in algorithmic resistance. Proceedings of the ACM on Human-Computer Interaction, 5(CSCW2), 1–44. https://doi.org/10.1145/3476046

Krafft, P. M., & Donovan, J. (2020). Disinformation by design: The use of evidence collages and platform filtering in a media manipulation campaign. Political Communication, 37(2), 194–214. https://doi.org/10.1080/10584609.2019.1686094

Lee, J. (2019). BTS and ARMY Culture. CommunicationBooks.

Lee, J. H., & Nguyen, A. T. (2020). How music fans shape commercial music services: A case study of BTS & ARMY. In Proceedings of the 21st international society for music information retrieval conference (pp. 837–845). ISMIR. https://archives.ismir.net/ismir2020/paper/000147.pdf

Lewandowsky, S., & van der Linden, S. (2021). Countering misinformation and fake news through inoculation and prebunking. European Review of Social Psychology, 32(2), 348–384. https://doi.org/10.1080/10463283.2021.1876983

Marwick, A. E. (2018). Why do people share fake news? A sociotechnical model of media effects. Georgetown Law Technology Review, 2(2), 474–512. https://georgetownlawtechreview.org/wp-content/uploads/2018/07/2.2-Marwick-pp-474-512.pdf

Marwick, A., & Lewis, R. (2017). Media manipulation and disinformation online. Data & Society. https://datasociety.net/library/media-manipulation-and-disinfo-online/

Mosleh, M., Martel, C., Eckles, D., & Rand, D. (2021). Perverse downstream consequences of debunking: Being corrected by another user for posting false political news increases subsequent sharing of low quality, partisan, and toxic content in a Twitter field experiment. In Proceedings of the 2021 CHI conference on human factors in computing systems (pp. 1–13). Association for Computing Machinery. https://doi.org/10.1145/3411764.3445642

O’Connor, C., & Weatherall, J. O. (2019). The misinformation age: How false beliefs spread. Yale University Press.

Park, S. Y., Santero, N. K., Kaneshiro, B., & Lee, J. H. (2021, May). Armed in ARMY: A case study of how BTS fans successfully collaborated to #MatchAMillion for Black Lives Matter. In Proceedings of the 2021 CHI conference on human factors in computing systems (pp. 1–14). Association for Computing Machinery. https://doi.org/10.1145/3411764.3445353

Pennycook, G., & Rand, D. G. (2021). The psychology of fake news. Trends in Cognitive Sciences, 25(5), 388–402. https://doi.org/10.1016/j.tics.2021.02.007

Phadke, S., & Mitra, T. (2021). Educators, solicitors, flamers, motivators, sympathizers: Characterizing roles in online extremist movements. Proceedings of the ACM on Human-Computer Interaction, 5(CSCW2), 1–35. https://doi.org/10.1145/3476051

Posetti, J., & Matthews, A. (2018). A short guide to the history of “fake news” and disinformation. International Center for Journalists, 7, 1–19.

Ringland, K. E., Bhattacharya, A., Weatherwax, K., Eagle, T., & Wolf, C. T. (2022). ARMY’s magic shop: Understanding the collaborative construction of playful places in online communities. In Proceedings of the 2022 CHI conference on human factors in computing systems (pp. 1–19). Association for Computing Machinery. https://doi.org/10.1145/3491102.3517442

Scolari, Masanet, M.-J., Guerrero-Pico, M., & Establés, M.-J. (2018). Transmedia literacy in the new media ecology: Teens’ transmedia skills and informal learning strategies. El Profesional de La Información, 27(4), 801–812. https://doi.org/10.3145/epi.2018.jul.09

Starbird, K. (2019). Disinformation’s spread: Bots, trolls and all of us. Nature, 571(7766), 449–450. https://doi.org/10.1038/d41586-019-02235-x

Starbird, K., Spiro, E. S., & Koltai, K. (2020). Misinformation, crisis, and public health—Reviewing the literature. Social Science Research Council. https://mediawell.ssrc.org/literature-reviews/misinformation-crisis-and-public-health/versions/v1-0/

Thorson, K., & Wells, C. (2016). Curated flows: A framework for mapping media exposure in the digital age. Communication Theory, 26(3), 309–328. https://doi.org/10.1111/comt.12087

Wu, L., Morstatter, F., Carley, K. M., & Liu, H. (2019). Misinformation in social media: Definition, manipulation, and detection. ACM SIGKDD Explorations Newsletter, 21(2), 80–90. https://doi.org/10.1145/3373464.3373475

Yeo, S. K., & McKasy, M. (2021). Emotion and humor as misinformation antidotes. Proceedings of the National Academy of Sciences, 118(15). https://doi.org/10.1073/pnas.2002484118

Zhou, X., Zafarani, R., Shu, K., & Liu, H. (2019). Fake news: Fundamental theories, detection strategies and challenges. In Proceedings of the twelfth ACM international conference on web search and data mining (pp. 836–837). Association for Computing Machinery. https://doi.org/10.1145/3289600.3291382

Funding

This project was supported by the University of Washington Center for an Informed Public and the John S. and James L. Knight Foundation.

Competing Interests

The authors declare no competing interests.

Ethics

The interview protocol and process were approved by the University of Washington Human Subjects Division (HSD) (ID: 00010674). Informed consent to participate in the study was obtained from all participants. Participants’ ethnicity, gender, and age were self-reported and were used to recruit diverse interviewees.

Copyright

This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided that the original author and source are properly credited.

Data Availability

This study primarily uses interview data that are anonymized but could still potentially be used to identify individual participants; thus, the IRB restricts us from sharing them openly outside the research team.

Acknowledgements

The authors sincerely thank the interview participants who made this research possible.